Jingyun Liang, Jiezhang Cao, Guolei Sun, Kai Zhang, Luc Van Gool, Radu Timofte

Computer Vision Lab, ETH Zurich

This repository is the official PyTorch implementation of SwinIR: Image Restoration Using Shifted Window Transformer (arxiv, supp, pretrained models, visual results). SwinIR achieves state-of-the-art performance in

- bicubic/lighweight/real-world image SR

- grayscale/color image denoising

- grayscale/color JPEG compression artifact reduction

🚀 🚀 🚀 News:

- Aug. 16, 2022: Add PlayTorch Demo on running the real-world image SR model on mobile devices

.

- Aug. 01, 2022: Add pretrained models and results on JPEG compression artifact reduction for color images.

- Jun. 10, 2022: See our work on video restoration 🔥🔥🔥 VRT: A Video Restoration Transformer

and RVRT: Recurrent Video Restoration Transformer

for video SR, video deblurring, video denoising, video frame interpolation and space-time video SR.

- Sep. 07, 2021: We provide an interactive online Colab demo for real-world image SR

🔥 for comparison with the first practical degradation model BSRGAN (ICCV2021)

and a recent model RealESRGAN. Try to super-resolve your own images on Colab!

| Real-World Image (x4) | BSRGAN, ICCV2021 | Real-ESRGAN | SwinIR (ours) | SwinIR-Large (ours) |

|---|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

- Aug. 26, 2021: See our recent work on real-world image SR: a pratical degrdation model BSRGAN, ICCV2021

- Aug. 26, 2021: See our recent work on generative modelling of image SR and image rescaling: normalizing-flow-based HCFlow, ICCV2021

- Aug. 26, 2021: See our recent work on blind SR: spatially variant kernel estimation (MANet, ICCV2021)

and unsupervised kernel estimation (FKP, CVPR2021)

Image restoration is a long-standing low-level vision problem that aims to restore high-quality images from low-quality images (e.g., downscaled, noisy and compressed images). While state-of-the-art image restoration methods are based on convolutional neural networks, few attempts have been made with Transformers which show impressive performance on high-level vision tasks. In this paper, we propose a strong baseline model SwinIR for image restoration based on the Swin Transformer. SwinIR consists of three parts: shallow feature extraction, deep feature extraction and high-quality image reconstruction. In particular, the deep feature extraction module is composed of several residual Swin Transformer blocks (RSTB), each of which has several Swin Transformer layers together with a residual connection. We conduct experiments on three representative tasks: image super-resolution (including classical, lightweight and real-world image super-resolution), image denoising (including grayscale and color image denoising) and JPEG compression artifact reduction. Experimental results demonstrate that SwinIR outperforms state-of-the-art methods on different tasks by up to 0.14~0.45dB, while the total number of parameters can be reduced by up to 67%.

Used training and testing sets can be downloaded as follows:

| Task | Training Set | Testing Set | Visual Results |

|---|---|---|---|

| classical/lightweight image SR | DIV2K (800 training images) or DIV2K +Flickr2K (2650 images) | Set5 + Set14 + BSD100 + Urban100 + Manga109 download all | here |

| real-world image SR | SwinIR-M (middle size): DIV2K (800 training images) +Flickr2K (2650 images) + OST (alternative link, 10324 images for sky,water,grass,mountain,building,plant,animal) SwinIR-L (large size): DIV2K + Flickr2K + OST + WED(4744 images) + FFHQ (first 2000 images, face) + Manga109 (manga) + SCUT-CTW1500 (first 100 training images, texts) *We use the pionnerring practical degradation model from BSRGAN, ICCV2021  |

RealSRSet+5images | here |

| color/grayscale image denoising | DIV2K (800 training images) + Flickr2K (2650 images) + BSD500 (400 training&testing images) + WED(4744 images) *BSD68/BSD100 images are not used in training. |

grayscale: Set12 + BSD68 + Urban100 color: CBSD68 + Kodak24 + McMaster + Urban100 download all |

here |

| grayscale/color JPEG compression artifact reduction | DIV2K (800 training images) + Flickr2K (2650 images) + BSD500 (400 training&testing images) + WED(4744 images) | grayscale: Classic5 +LIVE1 download all | here |

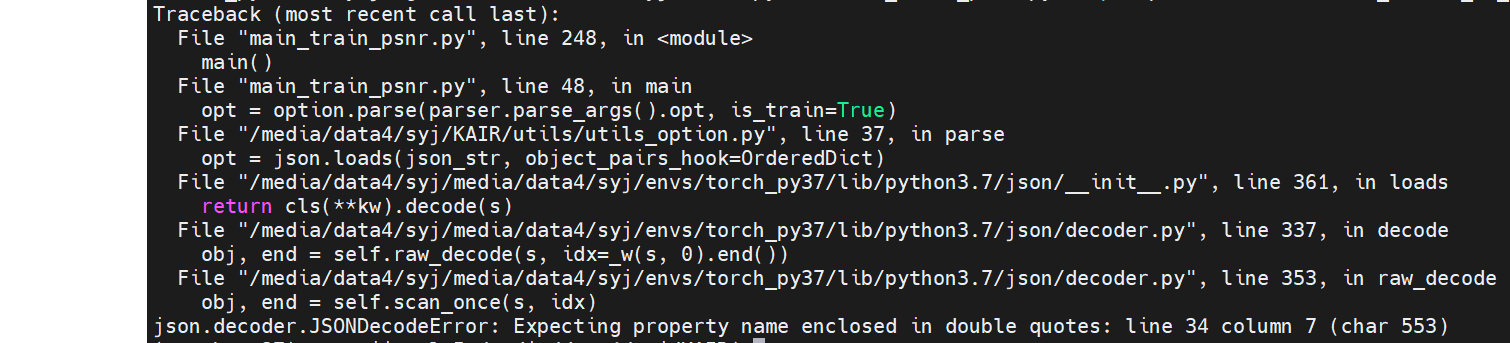

The training code is at KAIR.

For your convience, we provide some example datasets (~20Mb) in /testsets.

If you just want codes, downloading models/network_swinir.py, utils/util_calculate_psnr_ssim.py and main_test_swinir.py is enough.

Following commands will download pretrained models automatically and put them in model_zoo/swinir.

All visual results of SwinIR can be downloaded here.

We also provide an online Colab demo for real-world image SR for comparison with the first practical degradation model BSRGAN (ICCV2021)

We provide a PlayTorch demo for real-world image SR to showcase how to run the SwinIR model in mobile application built with React Native.

# 001 Classical Image Super-Resolution (middle size)

# Note that --training_patch_size is just used to differentiate two different settings in Table 2 of the paper. Images are NOT tested patch by patch.

# (setting1: when model is trained on DIV2K and with training_patch_size=48)

python main_test_swinir.py --task classical_sr --scale 2 --training_patch_size 48 --model_path model_zoo/swinir/001_classicalSR_DIV2K_s48w8_SwinIR-M_x2.pth --folder_lq testsets/Set5/LR_bicubic/X2 --folder_gt testsets/Set5/HR

python main_test_swinir.py --task classical_sr --scale 3 --training_patch_size 48 --model_path model_zoo/swinir/001_classicalSR_DIV2K_s48w8_SwinIR-M_x3.pth --folder_lq testsets/Set5/LR_bicubic/X3 --folder_gt testsets/Set5/HR

python main_test_swinir.py --task classical_sr --scale 4 --training_patch_size 48 --model_path model_zoo/swinir/001_classicalSR_DIV2K_s48w8_SwinIR-M_x4.pth --folder_lq testsets/Set5/LR_bicubic/X4 --folder_gt testsets/Set5/HR

python main_test_swinir.py --task classical_sr --scale 8 --training_patch_size 48 --model_path model_zoo/swinir/001_classicalSR_DIV2K_s48w8_SwinIR-M_x8.pth --folder_lq testsets/Set5/LR_bicubic/X8 --folder_gt testsets/Set5/HR

# (setting2: when model is trained on DIV2K+Flickr2K and with training_patch_size=64)

python main_test_swinir.py --task classical_sr --scale 2 --training_patch_size 64 --model_path model_zoo/swinir/001_classicalSR_DF2K_s64w8_SwinIR-M_x2.pth --folder_lq testsets/Set5/LR_bicubic/X2 --folder_gt testsets/Set5/HR

python main_test_swinir.py --task classical_sr --scale 3 --training_patch_size 64 --model_path model_zoo/swinir/001_classicalSR_DF2K_s64w8_SwinIR-M_x3.pth --folder_lq testsets/Set5/LR_bicubic/X3 --folder_gt testsets/Set5/HR

python main_test_swinir.py --task classical_sr --scale 4 --training_patch_size 64 --model_path model_zoo/swinir/001_classicalSR_DF2K_s64w8_SwinIR-M_x4.pth --folder_lq testsets/Set5/LR_bicubic/X4 --folder_gt testsets/Set5/HR

python main_test_swinir.py --task classical_sr --scale 8 --training_patch_size 64 --model_path model_zoo/swinir/001_classicalSR_DF2K_s64w8_SwinIR-M_x8.pth --folder_lq testsets/Set5/LR_bicubic/X8 --folder_gt testsets/Set5/HR

# 002 Lightweight Image Super-Resolution (small size)

python main_test_swinir.py --task lightweight_sr --scale 2 --model_path model_zoo/swinir/002_lightweightSR_DIV2K_s64w8_SwinIR-S_x2.pth --folder_lq testsets/Set5/LR_bicubic/X2 --folder_gt testsets/Set5/HR

python main_test_swinir.py --task lightweight_sr --scale 3 --model_path model_zoo/swinir/002_lightweightSR_DIV2K_s64w8_SwinIR-S_x3.pth --folder_lq testsets/Set5/LR_bicubic/X3 --folder_gt testsets/Set5/HR

python main_test_swinir.py --task lightweight_sr --scale 4 --model_path model_zoo/swinir/002_lightweightSR_DIV2K_s64w8_SwinIR-S_x4.pth --folder_lq testsets/Set5/LR_bicubic/X4 --folder_gt testsets/Set5/HR

# 003 Real-World Image Super-Resolution (use --tile 400 if you run out-of-memory)

# (middle size)

python main_test_swinir.py --task real_sr --scale 4 --model_path model_zoo/swinir/003_realSR_BSRGAN_DFO_s64w8_SwinIR-M_x4_GAN.pth --folder_lq testsets/RealSRSet+5images --tile

# (larger size + trained on more datasets)

python main_test_swinir.py --task real_sr --scale 4 --large_model --model_path model_zoo/swinir/003_realSR_BSRGAN_DFOWMFC_s64w8_SwinIR-L_x4_GAN.pth --folder_lq testsets/RealSRSet+5images

# 004 Grayscale Image Deoising (middle size)

python main_test_swinir.py --task gray_dn --noise 15 --model_path model_zoo/swinir/004_grayDN_DFWB_s128w8_SwinIR-M_noise15.pth --folder_gt testsets/Set12

python main_test_swinir.py --task gray_dn --noise 25 --model_path model_zoo/swinir/004_grayDN_DFWB_s128w8_SwinIR-M_noise25.pth --folder_gt testsets/Set12

python main_test_swinir.py --task gray_dn --noise 50 --model_path model_zoo/swinir/004_grayDN_DFWB_s128w8_SwinIR-M_noise50.pth --folder_gt testsets/Set12

# 005 Color Image Deoising (middle size)

python main_test_swinir.py --task color_dn --noise 15 --model_path model_zoo/swinir/005_colorDN_DFWB_s128w8_SwinIR-M_noise15.pth --folder_gt testsets/McMaster

python main_test_swinir.py --task color_dn --noise 25 --model_path model_zoo/swinir/005_colorDN_DFWB_s128w8_SwinIR-M_noise25.pth --folder_gt testsets/McMaster

python main_test_swinir.py --task color_dn --noise 50 --model_path model_zoo/swinir/005_colorDN_DFWB_s128w8_SwinIR-M_noise50.pth --folder_gt testsets/McMaster

# 006 JPEG Compression Artifact Reduction (middle size, using window_size=7 because JPEG encoding uses 8x8 blocks)

# grayscale

python main_test_swinir.py --task jpeg_car --jpeg 10 --model_path model_zoo/swinir/006_CAR_DFWB_s126w7_SwinIR-M_jpeg10.pth --folder_gt testsets/classic5

python main_test_swinir.py --task jpeg_car --jpeg 20 --model_path model_zoo/swinir/006_CAR_DFWB_s126w7_SwinIR-M_jpeg20.pth --folder_gt testsets/classic5

python main_test_swinir.py --task jpeg_car --jpeg 30 --model_path model_zoo/swinir/006_CAR_DFWB_s126w7_SwinIR-M_jpeg30.pth --folder_gt testsets/classic5

python main_test_swinir.py --task jpeg_car --jpeg 40 --model_path model_zoo/swinir/006_CAR_DFWB_s126w7_SwinIR-M_jpeg40.pth --folder_gt testsets/classic5

# color

python main_test_swinir.py --task color_jpeg_car --jpeg 10 --model_path model_zoo/swinir/006_colorCAR_DFWB_s126w7_SwinIR-M_jpeg10.pth --folder_gt testsets/LIVE1

python main_test_swinir.py --task color_jpeg_car --jpeg 20 --model_path model_zoo/swinir/006_colorCAR_DFWB_s126w7_SwinIR-M_jpeg20.pth --folder_gt testsets/LIVE1

python main_test_swinir.py --task color_jpeg_car --jpeg 30 --model_path model_zoo/swinir/006_colorCAR_DFWB_s126w7_SwinIR-M_jpeg30.pth --folder_gt testsets/LIVE1

python main_test_swinir.py --task color_jpeg_car --jpeg 40 --model_path model_zoo/swinir/006_colorCAR_DFWB_s126w7_SwinIR-M_jpeg40.pth --folder_gt testsets/LIVE1

We achieved state-of-the-art performance on classical/lightweight/real-world image SR, grayscale/color image denoising and JPEG compression artifact reduction. Detailed results can be found in the paper. All visual results of SwinIR can be downloaded here.

Classical Image Super-Resolution (click me)

- More detailed comparison between SwinIR and a representative CNN-based model RCAN (classical image SR, X4)

| Method | Training Set | Training time (8GeForceRTX2080Ti batch=32, iter=500k) |

Y-PSNR/Y-SSIM on Manga109 |

Run time (1GeForceRTX2080Ti, on 256x256 LR image)* |

#Params | #FLOPs | Testing memory |

|---|---|---|---|---|---|---|---|

| RCAN | DIV2K | 1.6 days | 31.22/0.9173 | 0.180s | 15.6M | 850.6G | 593.1M |

| SwinIR | DIV2K | 1.8 days | 31.67/0.9226 | 0.539s | 11.9M | 788.6G | 986.8M |

* We re-test the runtime when the GPU is idle. We refer to the evluation code here.

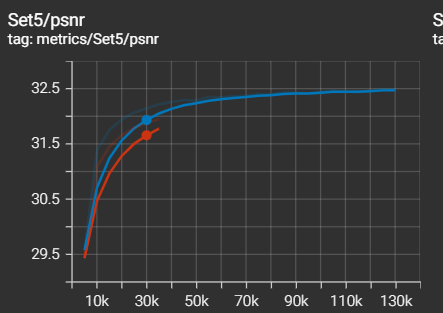

- Results on DIV2K-validation (100 images)

| Training Set | scale factor | PSNR (RGB) | PSNR (Y) | SSIM (RGB) | SSIM (Y) |

|---|---|---|---|---|---|

| DIV2K (800 images) | 2 | 35.25 | 36.77 | 0.9423 | 0.9500 |

| DIV2K+Flickr2K (2650 images) | 2 | 35.34 | 36.86 | 0.9430 | 0.9507 |

| DIV2K (800 images) | 3 | 31.50 | 32.97 | 0.8832 | 0.8965 |

| DIV2K+Flickr2K (2650 images) | 3 | 31.63 | 33.10 | 0.8854 | 0.8985 |

| DIV2K (800 images) | 4 | 29.48 | 30.94 | 0.8311 | 0.8492 |

| DIV2K+Flickr2K (2650 images) | 4 | 29.63 | 31.08 | 0.8347 | 0.8523 |

JPEG Compression Artifact Reduction

on grayscale images

on color images

| Training Set | quality factor | PSNR (RGB) | PSNR-B (RGB) | SSIM (RGB) |

|---|---|---|---|---|

| LIVE1 | 10 | 28.06 | 27.76 | 0.8089 |

| LIVE1 | 20 | 30.45 | 29.97 | 0.8741 |

| LIVE1 | 30 | 31.82 | 31.24 | 0.9018 |

| LIVE1 | 40 | 32.75 | 32.12 | 0.9174 |

@article{liang2021swinir,

title={SwinIR: Image Restoration Using Swin Transformer},

author={Liang, Jingyun and Cao, Jiezhang and Sun, Guolei and Zhang, Kai and Van Gool, Luc and Timofte, Radu},

journal={arXiv preprint arXiv:2108.10257},

year={2021}

}

This project is released under the Apache 2.0 license. The codes are based on Swin Transformer and KAIR. Please also follow their licenses. Thanks for their awesome works.