Comments (9)

Hey, thanks for trying out Robyn. Well we don't directly force the model to not produce 0 coef at all, because we did this before and got issues when there were really weak variables inside that "deserved 0". As you know, you get a set of Pareto output. If all results are not satisfying, I would recommend to run a higher set_trials, e.g. 80, to get more results. Maybe there will be some results that are not reducing your search to 0. Does it make sense?

from robyn.

It's good to know it @gufengzhou, and it make sense, but there are still cases like google brand where it is necessary to protect the brand and assign a budget, in the same way thanks for the feedback.

from robyn.

What you can also try is increase the range of theta for brand. You're using 0-.3 now, you can try 0.3-0.9 to see if you get better result there. If that still doesn't work, try further splitting brand into more granular variables. Hope it helps.

from robyn.

Hi @gibrano as Gufeng said, thanks so much for using our code :)

The steps I usually take are:

- Increase iterations/trials. If that does not work then:

- Increase thetas and/or gammas which will increase the delayed effect in time of the media and increase the spend saturation inflection point. If that does not work then:

- Try different train/test split ratios. You may analyze the correlation of the brand channel with the response (e.g sales) for both the train and test split periods. Ideally both periods should have a similar correlation and representation of the brand channel in question.

- If none of the above work, try to see if different channel aggregations may help for this case?

Hope it helps and thanks for trying out Robyn!

from robyn.

one more thing. I just saw that in the budget allocator, you have the upper contraints as 1.0 --> this means you don't allow any increase of spend. That's why you see unreasonable allocation results. upper constraint should be above 1

from robyn.

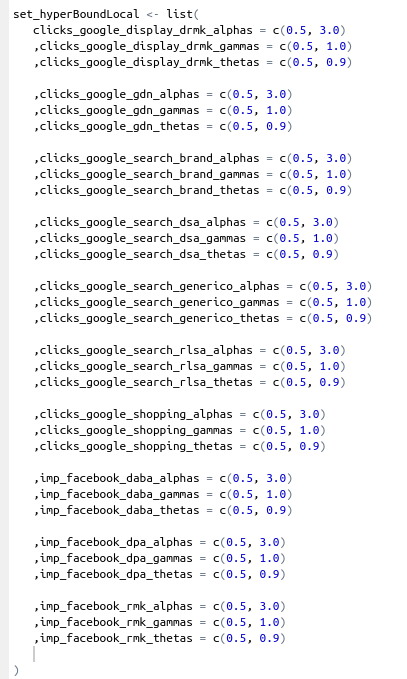

Hi @gufengzhou and @Leonelsentana, thank you so much for the follow up you have given to the case. Following your advises, I have increased iterations to 800 and trials to 80, also I have splited the campaigns into more granular variables with hyperparameters:

My results with the Budget Allocator keeps giving me zero in some coefficients:

With max_historical_response:

With max_response_expected_spend:

Something I would expect with lowers and uppers bounds is that the budgets don't go out of bounds. On the other hand, I notice that in the plot of Response curve and mean spend by channel, there are positive values or positive budgets for each of the channels, or I don't know if I am misinterpreting it.

I appreciate your help.

from robyn.

Generally speaking, Robyn does not guarantee all coefficients to be non-zero. We use ridge regression, a shrinkage method that will also reduce coefs to 0 when the correlation between that feature and dependent variable is "too weak". So there's no guarantee that all media will be non-zero.

Having said that, also with 800 * 80 total iterations, not one of the pareto optimal models is non-zero? If this is the case, one thing you could do is to increase the amount of pareto outputs and "hope" that you can get one non-zero. As you know, I'm outputting 3 pareto fronts by default, which can be increased (although quite hidden). In the .func script line 1439, I define the number of pareto fronts as paretoFronts <- c(1,2,3). You can try to increase to 1-5 or even higher, so that you can get more results. Of course the trade-off is clear: the higher the front number, the worse the model performance in terms of NRMSE and DECOMP.RSSE. But with 800*80 iterations, I can imagine higher fronts might still be quite good. Please try this and let me know if it works.

Regarding the positive budget in response curve plot, well for those 0 coef channels, you will still have a mean spend, but the responses are zero. You can also see that these channels response curves are just an horizontal line.

from robyn.

Another thing worth trying is what Leo mentioned as second point: increase the bounds of hyperparameters for the 0 coef channels, esp. theta to 0 - 0.9 and gamma to 0.1 - 1. I see that you changed the thetas to 0.5-1. By increasing the bounds, we don't mean make it higher, but rather give the model more freedom. For gamma, usually we don't recommend to set it too low (allowing inflexion point to happen early). But in your case it might be worth trying. Let us know how it works.

from robyn.

Feel free to reopen this if more issues occur

from robyn.

Related Issues (20)

- Plot Weibull PDF Adstock and get the peak value time point HOT 2

- wrong notebook links in robyn_python_notebook.ipynb HOT 2

- Using nevergrad for constraining few of the model coefficients to be positive only in ridge regression HOT 1

- Robyn API via Python plumber didn't work when no organic_vars or factor_vars specifty HOT 3

- AttributeError: '_DE' object has no attribute 'set_objective_weights' HOT 2

- hierarchical MMM HOT 1

- Budget Allocator incorporating JSON file HOT 4

- error writing to connection HOT 2

- Robyn future budget allocation feature status HOT 2

- Demo python notebook crashes when using calibration. HOT 3

- Error in robyn_allocator HOT 4

- Total Budget Optimizing Result has incorrect initial total response HOT 3

- Error in robyn_recreate() with a model pre-trained HOT 2

- Issue creating one pagers either through robyn_outputs or onepager() HOT 2

- Hill function never saturates HOT 1

- Robyn Python API - Internal Server Error 500 | Unable to track the logs HOT 5

- Robyn_API is not defined HOT 3

- Unable to achieve Target ROAS with Python Robyn API Allocator Function - target_efficiency HOT 8

- Calculate measurement errors, handling missing data along with outliers HOT 1

- Share of Spend and Share of Effect definitions HOT 4

Recommend Projects

-

React

React

A declarative, efficient, and flexible JavaScript library for building user interfaces.

-

Vue.js

🖖 Vue.js is a progressive, incrementally-adoptable JavaScript framework for building UI on the web.

-

Typescript

Typescript

TypeScript is a superset of JavaScript that compiles to clean JavaScript output.

-

TensorFlow

An Open Source Machine Learning Framework for Everyone

-

Django

The Web framework for perfectionists with deadlines.

-

Laravel

A PHP framework for web artisans

-

D3

Bring data to life with SVG, Canvas and HTML. 📊📈🎉

-

Recommend Topics

-

javascript

JavaScript (JS) is a lightweight interpreted programming language with first-class functions.

-

web

Some thing interesting about web. New door for the world.

-

server

A server is a program made to process requests and deliver data to clients.

-

Machine learning

Machine learning is a way of modeling and interpreting data that allows a piece of software to respond intelligently.

-

Visualization

Some thing interesting about visualization, use data art

-

Game

Some thing interesting about game, make everyone happy.

Recommend Org

-

Facebook

We are working to build community through open source technology. NB: members must have two-factor auth.

-

Microsoft

Open source projects and samples from Microsoft.

-

Google

Google ❤️ Open Source for everyone.

-

Alibaba

Alibaba Open Source for everyone

-

D3

Data-Driven Documents codes.

-

Tencent

China tencent open source team.

from robyn.