- What is Apache Pinot?

- Features

- When should I use Pinot?

- Building Pinot

- Deploying Pinot to Kubernetes

- Join the Community

- Documentation

- License

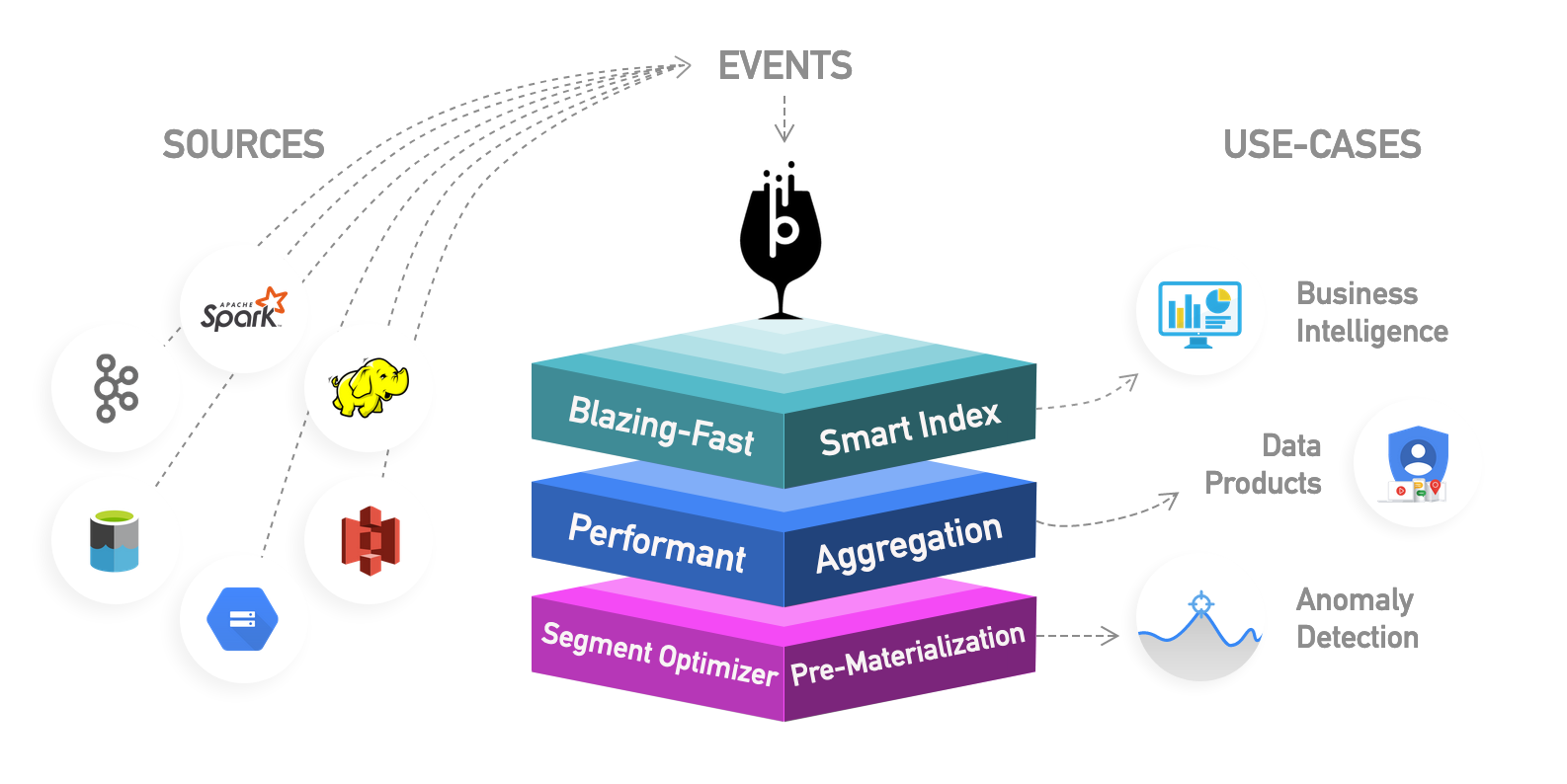

Apache Pinot is a real-time distributed OLAP datastore, built to deliver scalable real-time analytics with low latency. It can ingest from batch data sources (such as Hadoop HDFS, Amazon S3, Azure ADLS, Google Cloud Storage) as well as stream data sources (such as Apache Kafka).

Pinot was built by engineers at LinkedIn and Uber and is designed to scale up and out with no upper bound. For a given cluster size and expected queries-per-second (QPS) threshold, performance remains consistent.

For getting started guides, deployment recipes, tutorials, and more, please visit our project documentation at https://docs.pinot.apache.org.

Pinot was originally built at LinkedIn to power rich, interactive real-time analytics applications such as Who Viewed Profile, Company Analytics, Talent Insights, and many more. UberEats Restaurant Manager is another example of a customer-facing analytics application. At LinkedIn, Pinot powers 50+ user-facing products, ingesting millions of events per second and serving 100k+ queries per second at millisecond latency.

- Column-oriented: a column-oriented database with various compression schemes, such as run-length and fixed-bit-length encoding.
- Pluggable indexing: pluggable indexing technologies, including sorted, bitmap, and inverted indexes.
- Query optimization: the ability to optimize the query/execution plan based on query and segment metadata.
- Stream and batch ingestion: near-real-time ingestion from streams and batch ingestion from Hadoop.
- Query: a SQL-based query execution engine.
- Upsert during real-time ingestion: update data at scale with consistency.
- Multi-valued fields: support for multi-valued fields, allowing you to query fields as comma-separated values.
- Cloud-native on Kubernetes: a Helm chart provides a horizontally scalable, fault-tolerant clustered deployment that is easy to manage with Kubernetes.
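The compression schemes and indexes named in the list above are general-purpose techniques. As a rough illustration only (not Pinot's actual code or on-disk format), here is a plain-Python sketch of dictionary encoding with fixed-bit-length packing, run-length encoding, and a bitmap inverted index over a toy column. All function names are hypothetical, and Python integers stand in for real bitmaps.

```python
from itertools import groupby
from typing import Dict, List, Tuple


def dictionary_encode(values: List[str]) -> Tuple[List[str], List[int]]:
    """Map each distinct value to a small integer id via a sorted dictionary."""
    dictionary = sorted(set(values))
    ids = {value: i for i, value in enumerate(dictionary)}
    return dictionary, [ids[v] for v in values]


def bits_needed(cardinality: int) -> int:
    """Fixed bit width needed to store any id in [0, cardinality)."""
    return max(1, (cardinality - 1).bit_length())


def pack_fixed_bits(ids: List[int], width: int) -> int:
    """Pack ids into one integer, using exactly `width` bits per value."""
    packed = 0
    for position, value in enumerate(ids):
        packed |= value << (position * width)
    return packed


def unpack_fixed_bits(packed: int, width: int, count: int) -> List[int]:
    """Recover `count` ids from a fixed-bit-packed integer."""
    mask = (1 << width) - 1
    return [(packed >> (position * width)) & mask for position in range(count)]


def run_length_encode(values: List[str]) -> List[Tuple[str, int]]:
    """Collapse runs of equal adjacent values into (value, run_length) pairs."""
    return [(value, len(list(run))) for value, run in groupby(values)]


def build_inverted_index(ids: List[int]) -> Dict[int, int]:
    """For each dictionary id, a bitmap whose set bits are the rows holding it."""
    index: Dict[int, int] = {}
    for row, value in enumerate(ids):
        index[value] = index.get(value, 0) | (1 << row)
    return index


def rows_of(bitmap: int) -> List[int]:
    """List the row numbers whose bits are set in a bitmap."""
    return [row for row in range(bitmap.bit_length()) if (bitmap >> row) & 1]


# Toy column (made-up data).
country = ["US", "US", "IN", "US", "DE", "IN"]
dictionary, ids = dictionary_encode(country)  # ['DE', 'IN', 'US'], [2, 2, 1, 2, 0, 1]
width = bits_needed(len(dictionary))          # 2 bits per value
packed = pack_fixed_bits(ids, width)
index = build_inverted_index(ids)
print(rows_of(index[dictionary.index("IN")]))  # [2, 5]
print(run_length_encode(sorted(country)))      # [('DE', 1), ('IN', 2), ('US', 3)]
```

Run-length encoding pays off most on sorted columns, where each value occupies one contiguous range of rows; that is roughly the intuition behind a sorted index. A bitmap inverted index instead answers "which rows have value X?" directly, which suits filters on unsorted columns.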

Pinot is designed to execute real-time OLAP queries with low latency on massive amounts of data and events. In addition to real-time stream ingestion, Pinot also supports batch use cases with the same low-latency guarantees. It is well suited to contexts where fast analytics, such as aggregations, are needed on immutable data, possibly with real-time data ingestion. Pinot works very well for querying time-series data with many dimensions and metrics.
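The filter-then-aggregate pattern described above can be shown with a minimal plain-Python sketch. It only illustrates the semantics (a WHERE predicate, a GROUP BY key, and a SUM over each metric) and says nothing about how Pinot executes queries internally. The function `group_by_sum` and the sample rows are hypothetical; the column names mirror those in the example query below.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, Iterable, List, Tuple

Row = Dict[str, Any]


def group_by_sum(
    rows: Iterable[Row],
    predicate: Callable[[Row], bool],
    group_key: str,
    metrics: List[str],
) -> Dict[Any, Tuple[int, ...]]:
    """Filter rows, group them by one dimension, and sum each metric per group."""
    totals: Dict[Any, List[int]] = defaultdict(lambda: [0] * len(metrics))
    for row in rows:
        if not predicate(row):  # WHERE clause
            continue
        acc = totals[row[group_key]]  # GROUP BY key
        for i, metric in enumerate(metrics):
            acc[i] += row[metric]  # SUM(metric)
    # Sort by group key so the output order is deterministic.
    return {key: tuple(acc) for key, acc in sorted(totals.items())}


# Hypothetical sample rows (made-up values).
events = [
    {"daysSinceEpoch": 17849, "accountId": 123456789, "clicks": 3, "impressions": 40},
    {"daysSinceEpoch": 17849, "accountId": 123456789, "clicks": 1, "impressions": 10},
    {"daysSinceEpoch": 17850, "accountId": 123456789, "clicks": 5, "impressions": 60},
    {"daysSinceEpoch": 17850, "accountId": 42, "clicks": 8, "impressions": 90},
    {"daysSinceEpoch": 17900, "accountId": 123456789, "clicks": 2, "impressions": 20},
]

result = group_by_sum(
    events,
    lambda r: 17849 <= r["daysSinceEpoch"] <= 17856 and r["accountId"] == 123456789,
    "daysSinceEpoch",
    ["clicks", "impressions"],
)
print(result)  # {17849: (4, 50), 17850: (5, 60)}
```

This linear scan touches every row. The point of a datastore like Pinot is to answer the same question at low latency over far larger data, using columnar storage and indexes rather than a full scan in application code.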

Example query:

```sql
SELECT daysSinceEpoch, sum(clicks), sum(impressions)
FROM AdAnalyticsTable
WHERE daysSinceEpoch >= 17849 AND daysSinceEpoch <= 17856
  AND accountId IN (123456789)
GROUP BY daysSinceEpoch
LIMIT 100
```

To build Pinot and run the quick demo, use the commands below. More detailed instructions can be found in the Quick Demo section of the documentation.

```bash
# Clone a repo
$ git clone https://github.com/apache/pinot.git
$ cd pinot

# Build Pinot
$ mvn clean install -DskipTests -Pbin-dist

# Run the Quick Demo
$ cd build/
$ bin/quick-start-batch.sh
```
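Once a cluster is running, it can also be queried over HTTP. The following is a minimal Python sketch using only the standard library. It assumes the broker exposes a `/query/sql` endpoint that accepts a JSON body of the form `{"sql": "..."}` and returns a `resultTable` containing `dataSchema.columnNames` and `rows`, plus an `exceptions` list. The helper names and the broker address are assumptions for illustration; this is not an official Pinot client.

```python
import json
import urllib.request
from typing import Any, Dict, List


def build_sql_request(broker_url: str, sql: str) -> urllib.request.Request:
    """Build a POST to the (assumed) broker /query/sql endpoint with a JSON body."""
    body = json.dumps({"sql": sql}).encode("utf-8")
    return urllib.request.Request(
        broker_url.rstrip("/") + "/query/sql",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def rows_as_dicts(response: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Turn an (assumed) resultTable response into a list of {column: value} dicts."""
    if response.get("exceptions"):
        raise RuntimeError(f"query failed: {response['exceptions']}")
    table = response["resultTable"]
    columns = table["dataSchema"]["columnNames"]
    return [dict(zip(columns, row)) for row in table["rows"]]


def run_query(broker_url: str, sql: str, timeout: float = 10.0) -> List[Dict[str, Any]]:
    """Send the query and parse the JSON response (requires a reachable broker)."""
    with urllib.request.urlopen(build_sql_request(broker_url, sql), timeout=timeout) as resp:
        return rows_as_dicts(json.load(resp))
```

For example, `run_query("http://localhost:8099", "SELECT playerName, SUM(runs) FROM baseballStats GROUP BY playerName LIMIT 5")`. Both the broker address and the `baseballStats` table (assumed to be loaded by the batch quick start) are assumptions; check the quick start's log output for the actual broker port and table names. Keeping request construction and response parsing in separate functions lets them be tested without a running cluster.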

Please refer to Running Pinot on Kubernetes in our project documentation. Pinot also provides Kubernetes integrations with the interactive query engines Trino and Presto, and with the data visualization tool Apache Superset.

- Ask questions on Apache Pinot Slack
- Join the Apache Pinot mailing lists:
  - [email protected] (subscribe to the pinot-dev mailing list)
  - [email protected] (post to the pinot-dev mailing list)
  - [email protected] (subscribe to the pinot-user mailing list)
  - [email protected] (post to the pinot-user mailing list)
- Apache Pinot Meetup Group: https://www.meetup.com/apache-pinot/

Check out the Pinot documentation for a complete description of Pinot's features.

Apache Pinot is licensed under the Apache License, Version 2.0.