Take me to the code and Jupyter Notebook for Image Recognition!

This article explores a Machine Learning algorithm called the Convolutional Neural Network (CNN), a common Deep Learning technique used for image recognition and classification.

You are provided with a dataset consisting of 5,000 Cat images and 5,000 Dog images. We are going to train a Machine Learning model to learn the differences between the two categories. The model will then predict whether a new, unseen image is a Cat or a Dog. The code architecture is robust and can be used to recognize any number of image categories if provided with enough data.

Convolutional Neural Networks are good at pattern recognition and feature detection, which is especially useful in image classification. You can improve the performance of a Convolutional Neural Network through hyper-parameter tuning, adding more convolution layers, adding more fully connected layers, or providing more correctly labeled data to the algorithm.

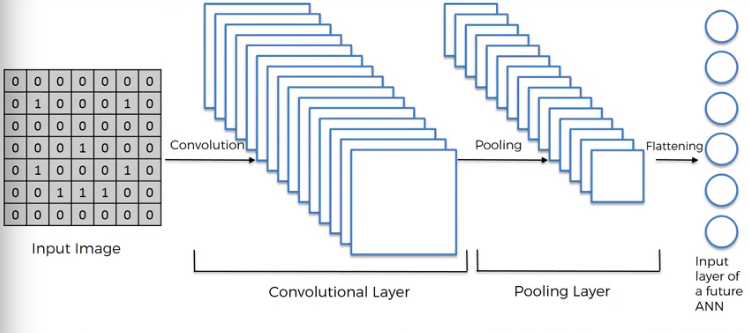

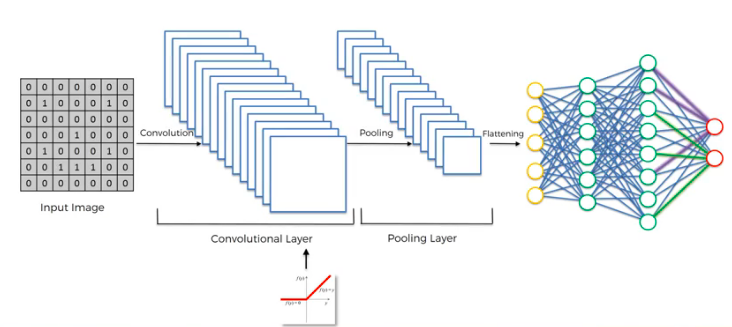

Create a Convolutional Neural Network (CNN) with the following steps:

- Convolution

- Max Pooling

- Flattening

- Full Connection

Check out How to implement a neural network, and also take a look at A Friendly Introduction to Cross-Entropy Loss.

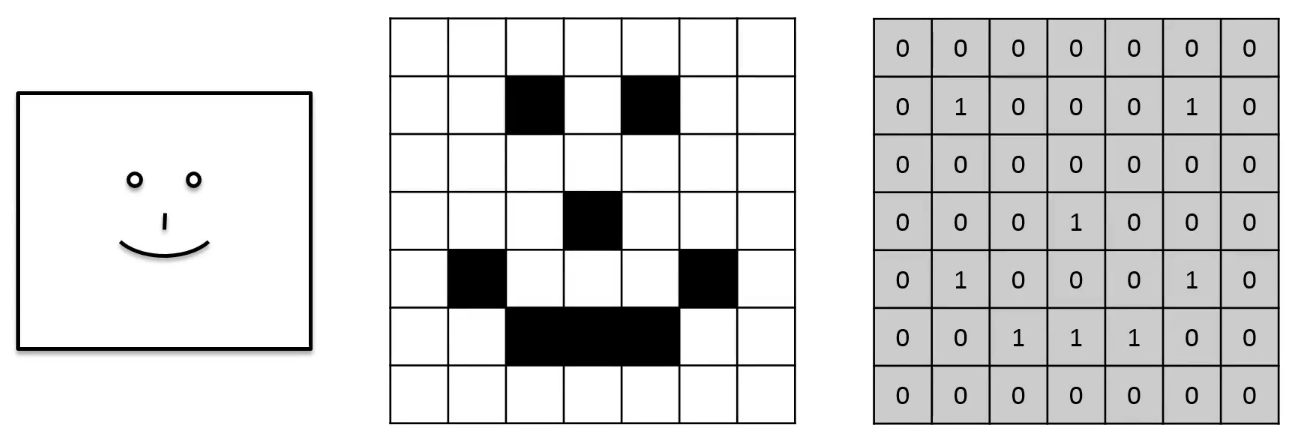

Convolution is a function derived from two other functions through an integration that expresses how the shape of one is modified by the other.
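As a reference for the definition above, the convolution of two functions is usually written as the integral below. The symbols $f$, $g$, $I$ and $K$ are names chosen here for illustration and do not come from the original article. The second line is the discrete two-dimensional form that applies to an image $I$ and a kernel $K$.

$$
(f * g)(t) = \int_{-\infty}^{\infty} f(\tau)\, g(t - \tau)\, d\tau
$$

$$
(I * K)(r, c) = \sum_{i} \sum_{j} I(r - i,\, c - j)\, K(i, j)
$$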

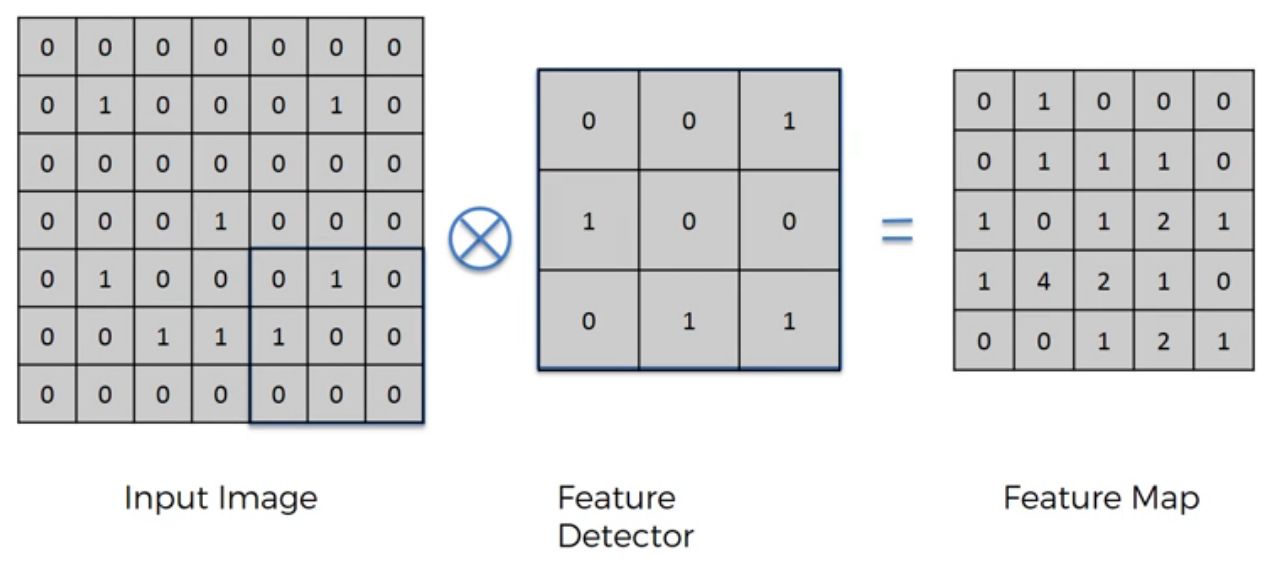

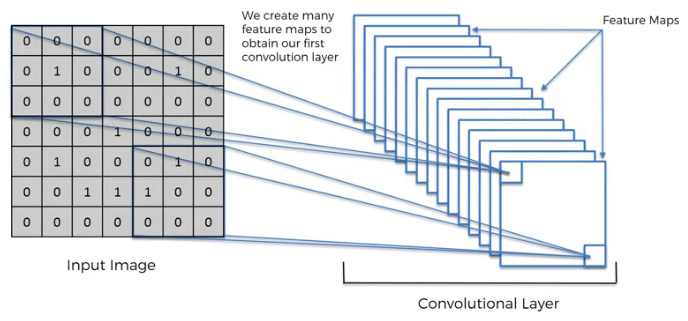

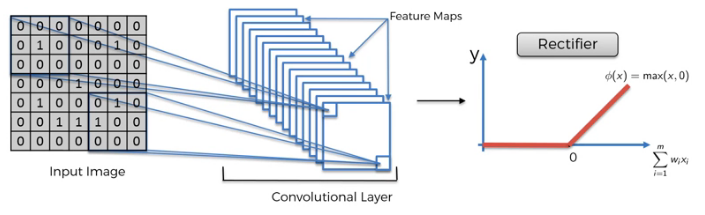

For image recognition, we convolve the input image with Feature Detectors (also known as Kernels or Filters) to generate a Feature Map (also known as a Convolved Map or Activation Map). This reveals and preserves patterns in the image, and also compresses the image for easier processing. Each value in a Feature Map is computed by multiplying a patch of the image element-wise with a Feature Detector and adding up the results. Applying several Feature Detectors to the same image creates several Feature Maps.
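The multiply-and-add step can be sketched in plain Python. This is a minimal illustration, not the code from the repository: the function name `convolve2d`, the 4x4 image and the 2x2 edge detector are all made up for this example. Like most Deep Learning libraries, it slides the kernel without flipping it (strictly speaking a cross-correlation), with stride 1 and no padding.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return
    the Feature Map of multiply-and-add results."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    feature_map = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0
            # Element-wise multiply the image patch with the kernel, then sum.
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 4x4 image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hypothetical 2x2 Feature Detector that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The 4x4 image shrinks to a 3x3 Feature Map, and the only non-zero column marks where the edge sits, which is the "reveal and compress" behavior described above.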

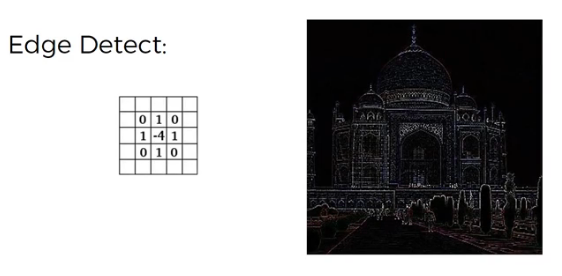

This Image Convolution Guide allows you to play with various filters applied to an image. Edge Detect is a useful filter in Machine Learning. The algorithm creates filters that are not recognizable to humans; perhaps we learn with similar techniques in our subconscious. Feature Maps preserve spatial relationships between pixels throughout processing.

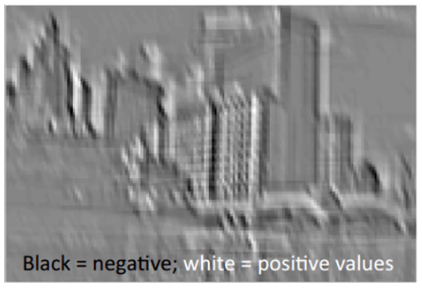

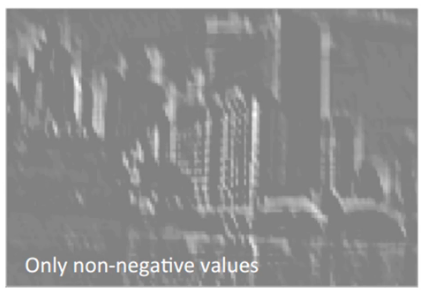

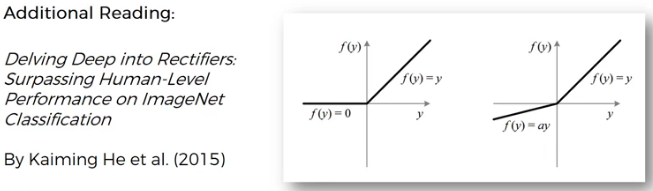

Rectifier Functions are applied in Convolutional Neural Networks to increase non-linearity (break up linearity). This is an important step for image recognition with CNNs. Images are usually non-linear due to sharp transitions between pixels, different colors, etc. ReLU functions help amplify the non-linearity of images so the ML model has an easier time finding patterns.

In the above example, the ReLU operation removed the Black Pixels, so there are fewer White to Gray to Black transitions. Borders now have more abrupt Pixel changes. Microsoft argues that using their Modified Rectifier Function works better for CNNs.
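A minimal sketch of the standard Rectifier (ReLU), assuming the Feature Map is stored as a list of rows. The function name `relu` and the sample values are illustrative only. Every negative value is replaced with zero and every other value passes through unchanged.

```python
def relu(feature_map):
    """Apply the Rectifier element-wise: negative values become 0."""
    return [[max(0, value) for value in row] for row in feature_map]


# Hypothetical Feature Map containing negative (dark) responses.
feature_map = [
    [-3, 0, 2],
    [1, -1, 4],
]

rectified = relu(feature_map)
print(rectified)  # [[0, 0, 2], [1, 0, 4]]
```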

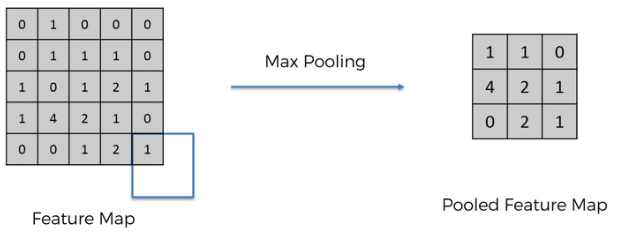

Max Pooling finds the largest value in each small grid of the Feature Map, which creates a Pooled Feature Map. Average Pooling (sub-sampling) takes the average value of each small grid instead. Pooling gives your Neural Network Spatial Invariance (the ability to find learned features in new images that are slightly varied or distorted). Max Pooling provides resilience against shifted or rotated features. It also further distills Feature Maps (reduces their size) while preserving the spatial relationships of pixels. Removing unnecessary information also helps prevent overfitting. Read 'Evaluation of Pooling Operations in Convolutional Architectures for Object Recognition'. Here is an online CNN Visualization Tool.
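Max Pooling can be sketched as follows, assuming non-overlapping 2x2 grids (the stride equals the grid size). The function name `max_pool` and the sample Feature Map are made up for this illustration.

```python
def max_pool(feature_map, size=2):
    """Keep the largest value of each non-overlapping size x size grid."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - size + 1, size):
        pooled_row = []
        for c in range(0, cols - size + 1, size):
            window = [
                feature_map[r + i][c + j]
                for i in range(size)
                for j in range(size)
            ]
            pooled_row.append(max(window))
        pooled.append(pooled_row)
    return pooled


# Hypothetical 4x4 Feature Map.
feature_map = [
    [1, 0, 2, 3],
    [4, 6, 6, 8],
    [3, 1, 1, 0],
    [1, 2, 2, 4],
]

pooled = max_pool(feature_map)
print(pooled)  # [[6, 8], [3, 4]]
```

The 4x4 map is reduced to 2x2, and the strongest response in each region survives even if it moves by a pixel inside its grid. Swapping `max(window)` for `sum(window) / len(window)` would give Average Pooling.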

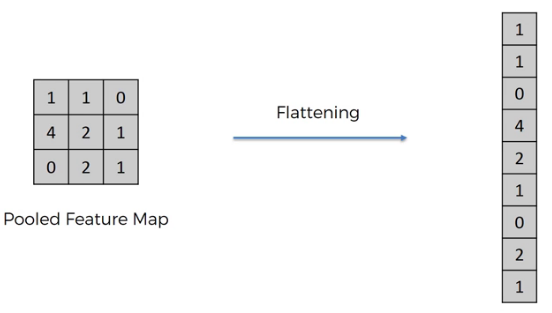

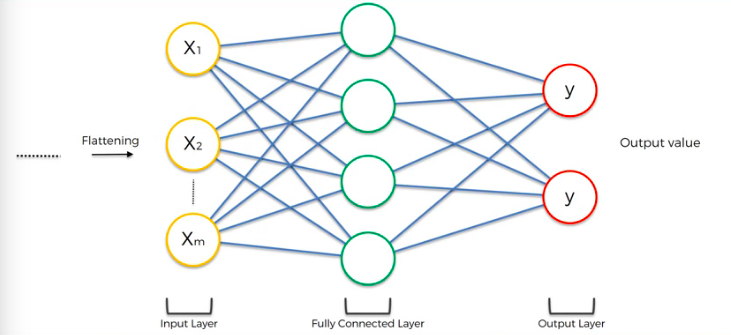

Flattening puts values of the pooled Feature Map matrix into a 1-D vector. This makes it easy for the image data to pass through an Artificial Neural Network algorithm.
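As a small sketch of Flattening, assume two hypothetical 2x2 Pooled Feature Maps. The function name `flatten` and the values are illustrative. All values are laid out row by row, one map after another, into a single 1-D list.

```python
def flatten(pooled_maps):
    """Concatenate every row of every Pooled Feature Map into one 1-D list."""
    return [value for fmap in pooled_maps for row in fmap for value in row]


# Two hypothetical Pooled Feature Maps, e.g. from two different Feature Detectors.
pooled_maps = [
    [[6, 8], [3, 4]],
    [[1, 0], [2, 5]],
]

flat = flatten(pooled_maps)
print(flat)  # [6, 8, 3, 4, 1, 0, 2, 5]
```

The length of this vector (here 8) is the number of inputs the first fully connected layer receives.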

Full Connection is when the output of a Convolutional Neural Network is flattened and fed through a classic Artificial Neural Network. It's important to note that CNNs require fully connected hidden layers, whereas regular ANNs don't necessarily need full connections.

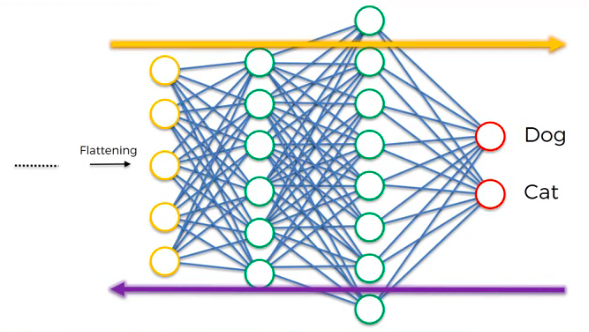

Back-propagation in a CNN adjusts the weights of the neurons and also adjusts the Feature Detectors, which in turn changes the Feature Maps.

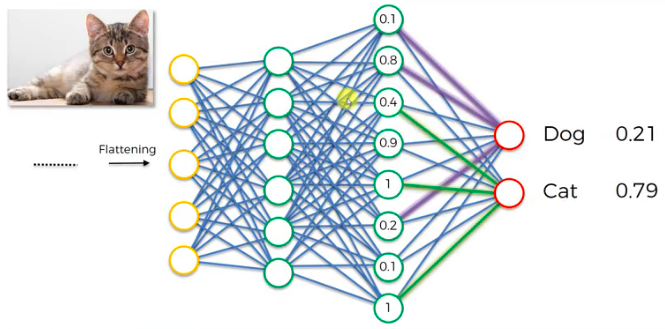

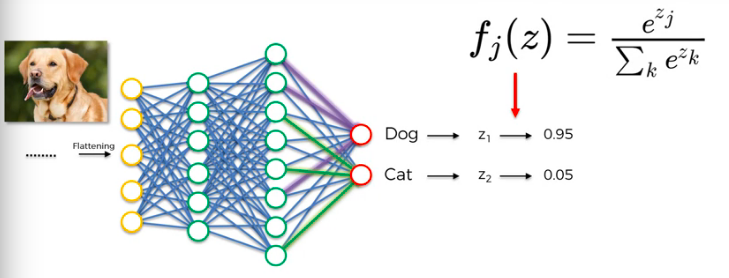

When it's time for the CNN to make a decision between Cat or Dog, the final layer neurons 'vote' on the probability of an image being a Cat or a Dog (or any other category you show it). The Neural Network adjusts the votes according to the best weights it has determined through back-propagation.

Here is a summary of every step of a CNN. Don't forget about the Rectifier Function that removes linearity in Feature Maps, and remember that the hidden layers are fully connected.

Image augmentation modifies images to prevent over-fitting. This data augmentation trick can generate much more data by applying random modifications to existing data, such as shearing, stretching, and zooming. This makes your dataset and algorithm more robust and generalized.
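The idea can be sketched without any image library. Note that this example swaps in two simpler modifications, a horizontal flip and a one-pixel shift, in place of the shearing, stretching and zooming mentioned above, and it is not the augmentation code used in the repository. All function names and the tiny 2x3 "image" are hypothetical.

```python
import random


def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def shift_right(image, pixels, fill=0):
    """Shift the image right by `pixels`, padding the left edge with `fill`."""
    if pixels == 0:
        return [row[:] for row in image]
    return [[fill] * pixels + row[:-pixels] for row in image]


def augment(image, rng):
    """Return a randomly modified copy of `image`."""
    out = [row[:] for row in image]
    if rng.random() < 0.5:
        out = horizontal_flip(out)
    return shift_right(out, rng.randint(0, 1))


# Hypothetical 2x3 image.
image = [
    [1, 2, 3],
    [4, 5, 6],
]

rng = random.Random(0)  # Seeded so the run is repeatable.
variants = [augment(image, rng) for _ in range(4)]
for variant in variants:
    print(variant)
```

Each variant keeps the original label (Cat or Dog) and the original shape, so one labeled image yields several slightly different training examples.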

The Softmax function shown below is used to make sure that the probabilities of the output layer add up to one, which gives us a percentage guess. Watch this Geoffrey Hinton video about the SoftMax Function.
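A minimal sketch of Softmax for a two-class output, assuming raw output-layer scores of 2.0 for Cat and 1.0 for Dog. These scores and the function name are made up for illustration. Subtracting the largest score before exponentiating is a common numerical-stability step and does not change the result.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that add up to one."""
    largest = max(scores)
    exps = [math.exp(score - largest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


# Hypothetical raw scores from the final layer: [Cat, Dog].
probabilities = softmax([2.0, 1.0])
print(probabilities)       # roughly [0.731, 0.269]
print(sum(probabilities))  # adds up to one, up to floating-point rounding
```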

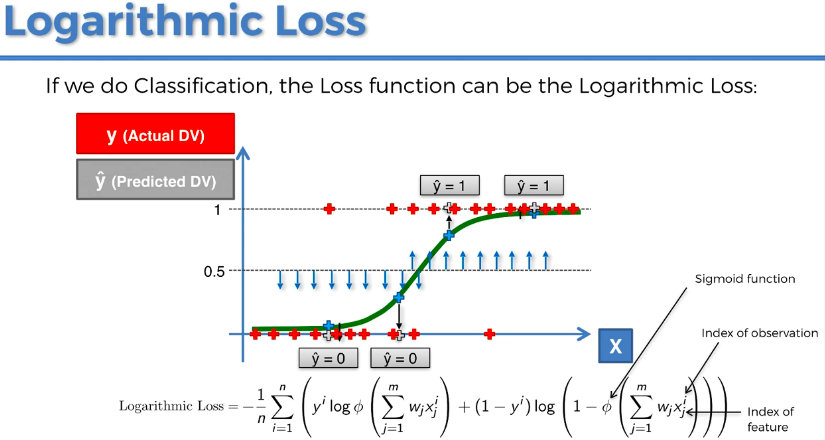

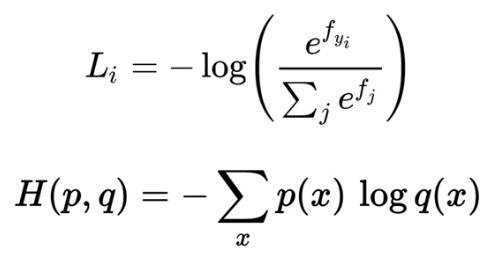

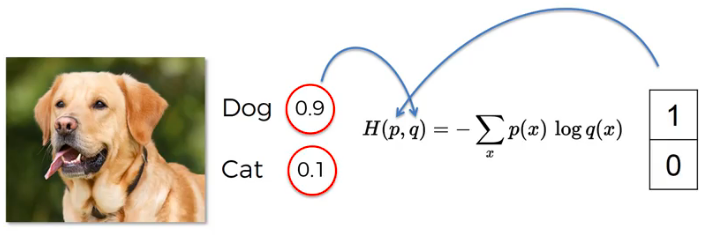

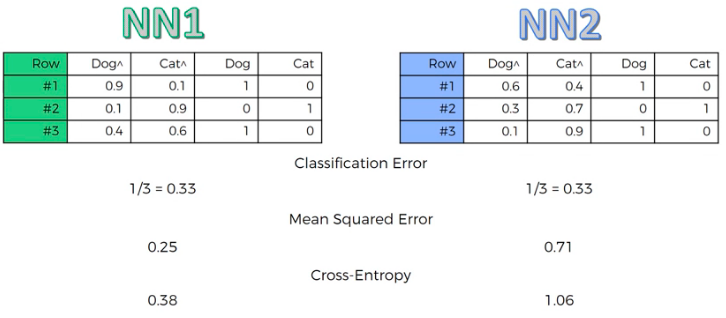

We had previously used the Mean Squared Error (MSE) Cost Function. For CNNs, it's better to use the Cross-Entropy Function as your Cost Function. We use Cross-Entropy as a Loss Function because it has a 'Log' term which helps amplify small Errors and better guide gradient descent.
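To illustrate the effect of the 'Log' term, the sketch below compares both Cost Functions on two bad predictions for an image whose true label is Cat. The function names and the probabilities are hypothetical, and a tiny `eps` is added inside the logarithm only to avoid `log(0)`.

```python
import math


def cross_entropy(predicted, target, eps=1e-12):
    """Cross-Entropy between predicted probabilities and a one-hot target."""
    return -sum(t * math.log(p + eps) for p, t in zip(predicted, target))


def mean_squared_error(predicted, target):
    """Mean Squared Error between predicted probabilities and a one-hot target."""
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(target)


target = [1, 0]  # One-hot label: the image is a Cat.

# Two hypothetical predictions; the second is 1000x better on Cat but still poor.
very_bad = [0.000001, 0.999999]
less_bad = [0.001, 0.999]

for name, predicted in (("very bad", very_bad), ("less bad", less_bad)):
    print(
        name,
        "MSE:", round(mean_squared_error(predicted, target), 4),
        "Cross-Entropy:", round(cross_entropy(predicted, target), 4),
    )
```

The MSE barely moves between the two predictions, while the Cross-Entropy drops by roughly half, so the improvement is much more visible to gradient descent.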

Download the code and run it with 'Jupyter Notebook', or copy the code into the 'Spyder' IDE found in the Anaconda Distribution. 'Spyder' is similar to MATLAB: it allows you to step through the code and examine the 'Variable Explorer' to see exactly how the data is parsed and analyzed. Jupyter Notebook also offers a Jupyter Variable Explorer Extension, which is quite useful for keeping track of variables.

```shell
$ git clone https://github.com/AMoazeni/Machine-Learning-Image-Recognition.git
$ cd Machine-Learning-Image-Recognition
```