Important: The code for quantum tensor networks has been moved to the new Qrochet library.

A Julia library for Tensor Networks. Tenet can run both in local environments and on large supercomputers. Its goals are:

- Expressiveness: simple to use. 👶
- Flexibility: extend it to your needs. 🔧
- Performance: goes brr... fast. 🏎️

- Optimized Tensor Network contraction order, powered by

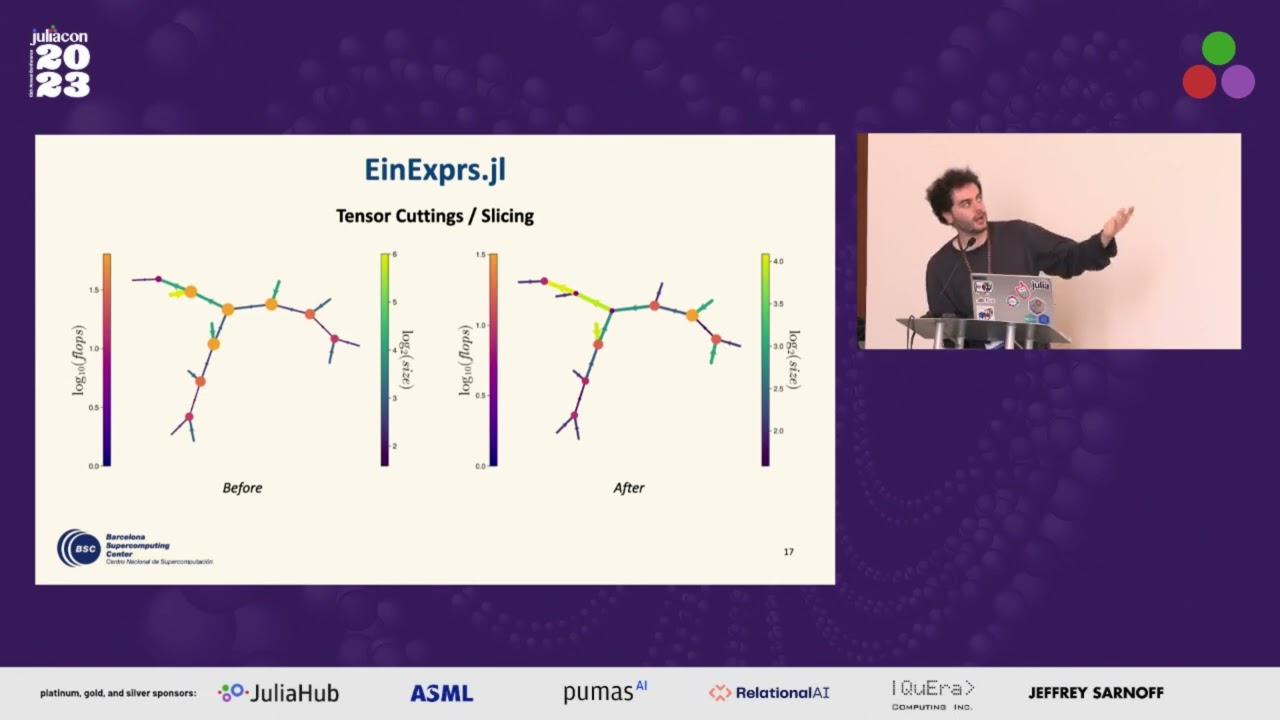

EinExprs - Tensor Network slicing/cuttings

- Automatic Differentiation of TN contraction

- Distributed contraction

- Local Tensor Network transformations

- Hyperindex converter

- Rank simplification

- Diagonal reduction

- Anti-diagonal gauging

- Column reduction

- Split simplification

- 2D & 3D visualization of large networks, powered by

Makie
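
Contraction order matters because the cost of contracting a network depends on which pair of tensors is contracted first. The following is a rough, language-neutral illustration in Python. It is not Tenet or EinExprs code, and the helpers `pairwise_cost` and `order_cost` and the index sizes are hypothetical. It counts the scalar multiply-adds of two fixed orders for the chain A[i,j] B[j,k] C[k,l], assuming every index is shared by at most two tensors.

```python
# Hypothetical sketch (not Tenet or EinExprs code): count the scalar
# multiply-adds of two contraction orders for the chain A[i,j] B[j,k] C[k,l].
# Assumes every index is shared by at most two tensors and that shared
# indices are summed over.

def pairwise_cost(a, b, sizes):
    """Cost and surviving indices of contracting index sets `a` and `b`."""
    cost = 1
    for idx in a | b:  # every index touched by the pairwise contraction
        cost *= sizes[idx]
    return cost, a ^ b  # shared indices are summed away


def order_cost(order, tensors, sizes):
    """Total cost of contracting `tensors` pair by pair following `order`."""
    tensors = dict(tensors)  # shallow copy so the caller's dict is untouched
    total = 0
    for left, right in order:
        cost, result = pairwise_cost(tensors.pop(left), tensors.pop(right), sizes)
        total += cost
        tensors[left + right] = result  # name the intermediate, e.g. "AB"
    return total


sizes = {"i": 10, "j": 100, "k": 5, "l": 50}
tensors = {"A": {"i", "j"}, "B": {"j", "k"}, "C": {"k", "l"}}

print(order_cost([("A", "B"), ("AB", "C")], tensors, sizes))  # 7500
print(order_cost([("B", "C"), ("A", "BC")], tensors, sizes))  # 75000
```

The same three tensors cost ten times more under the second order. For large networks, a contraction-order optimizer searches this space automatically instead of comparing two hand-picked orders.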
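
Rank simplification is commonly described (for example in quimb's `rank_simplify`) as performing contractions that do not increase the rank of any tensor. The README does not state Tenet's exact rule, so the following is only a sketch under that common definition. It works on index structure alone, in Python, with a hypothetical `rank_simplify` helper, and assumes each index appears at most once per tensor and in at most two tensors.

```python
# Hypothetical sketch of rank simplification on index structure only (no data).
# Assumptions: tensors are given as name -> list of index labels, each index
# appears at most once per tensor and in at most two tensors, and the rule is
# "contract a connected pair if the result's rank does not exceed the larger
# input rank". Tenet's exact criterion may differ.

def rank_simplify(tensors):
    """Greedily contract connected pairs that do not increase tensor rank."""
    tensors = {name: frozenset(inds) for name, inds in tensors.items()}
    changed = True
    while changed:
        changed = False
        names = sorted(tensors)
        for pos, a in enumerate(names):
            for b in names[pos + 1:]:
                ia, ib = tensors[a], tensors[b]
                if not ia & ib:
                    continue  # no shared index: the pair is not connected
                result = ia ^ ib  # shared indices are summed away
                if len(result) <= max(len(ia), len(ib)):
                    del tensors[a], tensors[b]
                    tensors[a + b] = result  # merged tensor, e.g. "AB"
                    changed = True
                    break
            if changed:
                break  # restart the scan on the updated network
    return tensors


network = {"A": ["i", "j"], "B": ["j", "k"], "C": ["k", "l", "m"]}
print(rank_simplify(network))  # one tensor "ABC" with indices i, l, m
```

By contrast, contracting A[i,j,k] with B[k,l,m] would produce a rank-4 tensor from two rank-3 tensors, so the sketch leaves that pair untouched.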

A video of its presentation at JuliaCon 2023 can be seen here: