Pytest-monitor is a pytest plugin designed for analyzing resource usage.

- Analyze your resource consumption through test functions:
  - memory consumption
  - time duration
  - CPU usage

- Keep a history of your resource consumption measurements.

- Compare how your code behaves between different environments.

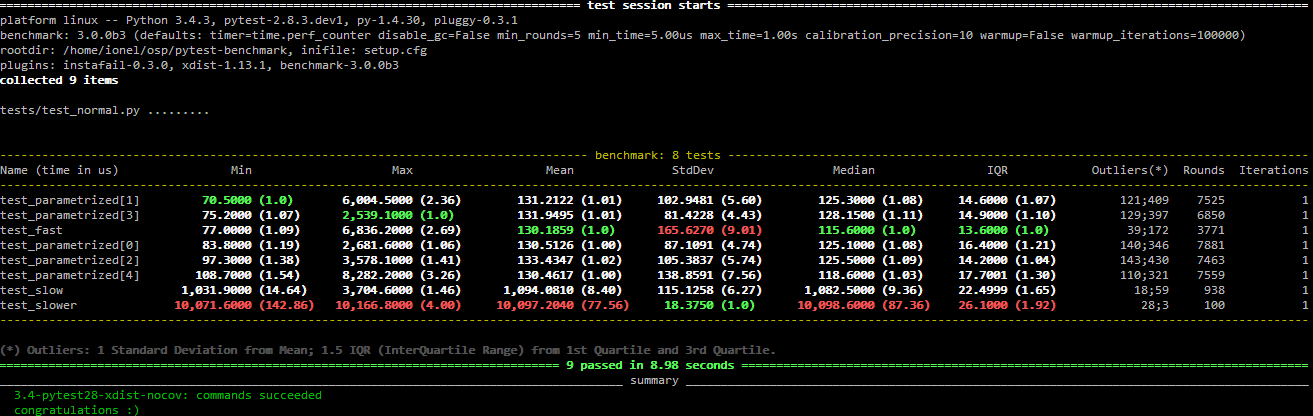

Simply run pytest as usual: pytest-monitor is active by default as soon as it is installed. After your first session, a .pymon SQLite database will be available in the directory where pytest was run.
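To illustrate that no plugin-specific code is required, here is a minimal sketch of an ordinary test module. The file name `test_squares.py` and the helper `build_squares` are hypothetical and only serve as something to measure; nothing in it comes from pytest-monitor itself.

```python
# test_squares.py (hypothetical file name)
# A plain test module: nothing here refers to pytest-monitor.


def build_squares(n):
    """Allocate a list of n squares, so the test uses some memory and CPU."""
    return [i * i for i in range(n)]


def test_build_squares():
    # A regular assertion-based test, collected by pytest as usual.
    squares = build_squares(10_000)
    assert len(squares) == 10_000
    assert squares[-1] == 9_999 * 9_999
```

Running `pytest` in the directory containing this file should be enough for the plugin to record measurements for `test_build_squares`.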

Example of information collected for the execution context:

| ENV_H | CPU_COUNT | CPU_FREQUENCY_MHZ | CPU_TYPE | CPU_VENDOR | RAM_TOTAL_MB | MACHINE_NODE | MACHINE_TYPE | MACHINE_ARCH | SYSTEM_INFO | PYTHON_INFO |
|---|---|---|---|---|---|---|---|---|---|---|
|  |  |  |  | Intel(R) Xeon(R) Gold 6154 CPU @ 3.00GHz |  | some.host.vm.fr |  |  | Linux - 3.10.0-693.el7.x86_64 | 3.6.8 (default, Jun 28 2019, 11:09:04) \n[GCC ... |

Here is an example of collected data stored in the result database:

| RUN_DATE | ENV_H | SCM_ID | ITEM_START_TIME | ITEM | KIND | COMPONENT | TOTAL_TIME | USER_TIME | KERNEL_TIME | CPU_USAGE | MEM_USAGE |
|---|---|---|---|---|---|---|---|---|---|---|---|
|  | 8294b1326007d9f4c8a1680f9590c23d | de23e6bdb987ae21e84e6c7c0357488ee66f2639 | 2020-02-17T09:11:36.890477 |  | function |  |  |  |  |  | 1.781250 |
|  | 8294b1326007d9f4c8a1680f9590c23d | de23e6bdb987ae21e84e6c7c0357488ee66f2639 | 2020-02-17T09:11:39.912029 |  | function |  |  |  |  |  |  |
|  | 8294b1326007d9f4c8a1680f9590c23d | de23e6bdb987ae21e84e6c7c0357488ee66f2639 | 2020-02-17T09:11:39.948922 |  | function |  |  |  |  |  |  |
|  | 8294b1326007d9f4c8a1680f9590c23d | de23e6bdb987ae21e84e6c7c0357488ee66f2639 | 2020-02-17T09:11:39.983869 |  | function |  |  |  |  |  |  |
|  | 8294b1326007d9f4c8a1680f9590c23d | de23e6bdb987ae21e84e6c7c0357488ee66f2639 | 2020-02-17T09:11:40.020823 | pkg1.test_mod1/test_heavy[10000-10000] | function |  |  |  |  |  | 41.292969 |
|  | 8294b1326007d9f4c8a1680f9590c23d | de23e6bdb987ae21e84e6c7c0357488ee66f2639 | 2020-02-17T09:11:40.093490 |  | function |  |  |  |  |  |  |
|  | 8294b1326007d9f4c8a1680f9590c23d | de23e6bdb987ae21e84e6c7c0357488ee66f2639 | 2020-02-17T09:11:40.510525 |  | function |  |  |  |  |  |  |
|  | 8294b1326007d9f4c8a1680f9590c23d | de23e6bdb987ae21e84e6c7c0357488ee66f2639 | 2020-02-17T09:11:45.530780 |  | function |  |  |  |  | 188.164762 |  |
|  | 8294b1326007d9f4c8a1680f9590c23d | de23e6bdb987ae21e84e6c7c0357488ee66f2639 | 2020-02-17T09:11:50.582954 |  | function |  |  |  |  | 11.681416 | 2.320312 |
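Because the results live in a plain SQLite file, they can be explored with Python's standard `sqlite3` module. The following is a minimal sketch, not part of the plugin: the table name `TEST_METRICS` is an assumption (list the tables of your own .pymon file to confirm it), the helper names are hypothetical, and the demo database is an in-memory stand-in with only three of the columns shown above. The item name `test_light` is made up for the illustration.

```python
import sqlite3

# Assumption: the metrics table is called TEST_METRICS. Check your own
# .pymon file before relying on this name.
METRICS_TABLE = "TEST_METRICS"


def top_memory_items(conn, limit=5):
    """Return (ITEM, peak MEM_USAGE) pairs, highest memory usage first."""
    query = (
        f"SELECT ITEM, MAX(MEM_USAGE) AS peak_mem "
        f"FROM {METRICS_TABLE} "
        f"WHERE MEM_USAGE IS NOT NULL "
        f"GROUP BY ITEM ORDER BY peak_mem DESC LIMIT ?"
    )
    return conn.execute(query, (limit,)).fetchall()


def make_demo_db():
    """Build an in-memory stand-in database, for illustration only."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        f"CREATE TABLE {METRICS_TABLE} (ITEM TEXT, KIND TEXT, MEM_USAGE REAL)"
    )
    rows = [
        ("pkg1.test_mod1/test_heavy[10000-10000]", "function", 41.292969),
        # Hypothetical item name, used twice to show the grouping.
        ("pkg1.test_mod1/test_light", "function", 1.781250),
        ("pkg1.test_mod1/test_light", "function", 2.320312),
    ]
    conn.executemany(f"INSERT INTO {METRICS_TABLE} VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn


if __name__ == "__main__":
    # With real data, replace the next line by: conn = sqlite3.connect(".pymon")
    conn = make_demo_db()
    for item, peak in top_memory_items(conn):
        print(f"{peak:10.3f}  {item}")
```

The same pattern extends to the other columns, for example ordering by `TOTAL_TIME` or filtering on `ENV_H` to compare runs from different environments.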

Full documentation is available.

You can install pytest-monitor via conda (through the conda-forge channel):

    $ conda install pytest-monitor -c https://conda.anaconda.org/conda-forge

Another possibility is to install pytest-monitor via pip from PyPI:

    $ pip install pytest-monitor

You will need a valid Python 3.5+ interpreter. To get measures, we rely on:

- psutil to extract CPU usage

- memory_profiler to collect memory usage

- and pytest (obviously!)

Note: this plugin does not work with unittest.

By default, pytest-monitor stores its results in a local SQLite3 database, making them easy to access. If you need a more powerful way to analyze your results, check out monitor-server-api, which provides both a REST API for storing and archiving your results and an API for querying your data. An alternative service (using MongoDB) is available thanks to a contribution from @dremdem: pytest-monitor-backend.

Contributions are very welcome. Tests can be run with tox. Before submitting a pull request, please ensure that:

- both internal tests and examples are passing.

- internal tests have been written if necessary.

- if your contribution provides a new feature, make sure to provide an example and update the documentation accordingly.

This code is distributed under the MIT license. pytest-monitor is free, open-source software.

If you encounter any problem, please file an issue along with a detailed description.

The main author of pytest-monitor is Jean-Sébastien Dieu.

This pytest plugin was generated with Cookiecutter along with @hackebrot's cookiecutter-pytest-plugin template.