Cilium is a networking, observability, and security solution with an eBPF-based dataplane. It provides a simple flat Layer 3 network with the ability to span multiple clusters in either a native routing or overlay mode. It is L7-protocol aware and can enforce network policies on L3-L7 using an identity based security model that is decoupled from network addressing.

Cilium implements distributed load balancing for traffic between pods and to external services, and is able to fully replace kube-proxy, using efficient hash tables in eBPF allowing for almost unlimited scale. It also supports advanced functionality like integrated ingress and egress gateway, bandwidth management and service mesh, and provides deep network and security visibility and monitoring.

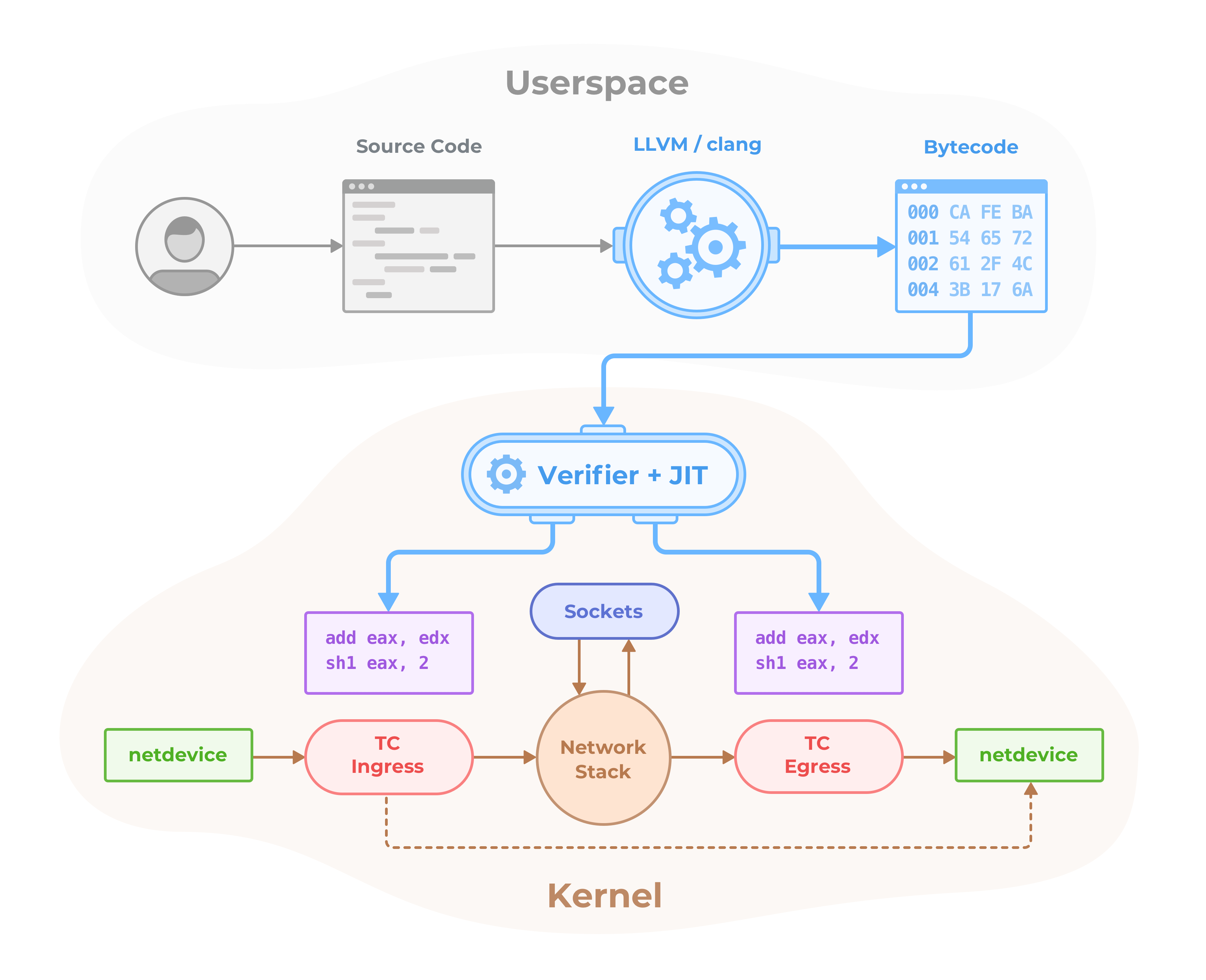

A new Linux kernel technology called eBPF is at the foundation of Cilium. It supports dynamic insertion of eBPF bytecode into the Linux kernel at various integration points, such as network IO, application sockets, and tracepoints, to implement security, networking and visibility logic. eBPF is highly efficient and flexible. To learn more about eBPF, visit eBPF.io.

The Cilium community maintains minor stable releases for the last three minor Cilium versions. Older Cilium stable versions from minor releases prior to that are considered EOL.

For upgrades to new minor releases please consult the Cilium Upgrade Guide.

Listed below are the actively maintained release branches along with their latest patch release, corresponding image pull tags and their release notes:

| Release branch | Release date | Image pull tag | Release notes |
| --- | --- | --- | --- |
| v1.15 | 2024-06-10 | `quay.io/cilium/cilium:v1.15.6` | Release Notes |
| v1.14 | 2024-06-10 | `quay.io/cilium/cilium:v1.14.12` | Release Notes |
| v1.13 | 2024-06-10 | `quay.io/cilium/cilium:v1.13.17` | Release Notes |

Cilium images are distributed for AMD64 and AArch64 architectures.

Starting with Cilium version 1.13.0, all images include a Software Bill of Materials (SBOM). The SBOM is generated in SPDX format. More information on this is available on Cilium SBOM.

For development and testing purposes, the Cilium community publishes snapshots, early release candidates (RC) and CI container images built from the main branch. These images are not for use in production.

For testing upgrades to new development releases please consult the latest development build of the Cilium Upgrade Guide.

Listed below are branches for testing along with their snapshots or RC releases, corresponding image pull tags and their release notes where applicable:

| Branch | Release date | Image pull tag | Release notes |
| --- | --- | --- | --- |
| main | daily | `quay.io/cilium/cilium-ci:latest` | N/A |
| v1.16.0-rc.0 | 2024-06-17 | `quay.io/cilium/cilium:v1.16.0-rc.0` | Release Candidate Notes |

Cilium can secure modern application protocols such as REST/HTTP, gRPC and Kafka. Traditional firewalls operate at Layer 3 and 4: a protocol running on a particular port is either completely trusted or blocked entirely. Cilium provides the ability to filter on individual application protocol requests such as:

- Allow all HTTP requests with method `GET` and path `/public/.*`. Deny all other requests.

- Allow `service1` to produce on Kafka topic `topic1` and `service2` to consume on `topic1`. Reject all other Kafka messages.

- Require the HTTP header `X-Token: [0-9]+` to be present in all REST calls.
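
The HTTP examples above can be illustrated with a small Python sketch. It is not Cilium's policy format or implementation; the function name `allow_request` and the decision to combine the first and third example into one rule set are assumptions made purely for illustration.

```python
import re

# Hypothetical rule set mirroring the HTTP examples above (not Cilium's schema).
ALLOWED_METHOD = "GET"
ALLOWED_PATH = re.compile(r"/public/.*")
REQUIRED_HEADER = ("X-Token", re.compile(r"[0-9]+"))


def allow_request(method: str, path: str, headers: dict) -> bool:
    """Return True only if the request satisfies every rule; deny otherwise."""
    if method != ALLOWED_METHOD:
        return False
    if ALLOWED_PATH.fullmatch(path) is None:
        return False
    # Simplification: header names are compared case-sensitively here.
    name, pattern = REQUIRED_HEADER
    value = headers.get(name)
    return value is not None and pattern.fullmatch(value) is not None
```

The point of the sketch is that the decision is made per request, so two requests on the same port can receive different verdicts.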

See the section Layer 7 Policy in our documentation for the latest list of supported protocols and examples on how to use it.

Modern distributed applications rely on technologies such as application containers to facilitate agility in deployment and scale out on demand. This results in a large number of application containers being started in a short period of time. Typical container firewalls secure workloads by filtering on source IP addresses and destination ports. This concept requires the firewalls on all servers to be manipulated whenever a container is started anywhere in the cluster.

To avoid this situation, which limits scale, Cilium assigns a security identity to groups of application containers which share identical security policies. The identity is then associated with all network packets emitted by the application containers, allowing the identity to be validated at the receiving node. Security identity management is performed using a key-value store.
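
As a rough sketch of the idea, the Python below maps each distinct label set to one numeric identity and keys policy on identity pairs instead of IP addresses. The in-memory dictionary standing in for the key-value store, the starting number 1000, and all function names are assumptions for illustration, not Cilium's actual data model.

```python
from itertools import count

# Hypothetical in-memory stand-in for the key-value store mentioned above.
_identity_by_labels = {}
_next_id = count(start=1000)  # arbitrary starting number, for illustration only


def identity_for(labels: set) -> int:
    """Return one identity per distinct label set, allocating on first use."""
    key = frozenset(labels)
    if key not in _identity_by_labels:
        _identity_by_labels[key] = next(_next_id)
    return _identity_by_labels[key]


# Policy is keyed by (source identity, destination identity), never by IP.
allowed_pairs = set()


def allow(src_labels: set, dst_labels: set) -> None:
    """Record that workloads with src_labels may reach those with dst_labels."""
    allowed_pairs.add((identity_for(src_labels), identity_for(dst_labels)))


def is_allowed(src_identity: int, dst_identity: int) -> bool:
    """Check done at the receiving node using the identity carried with the packet."""
    return (src_identity, dst_identity) in allowed_pairs
```

Because containers with the same labels share an identity, starting another replica adds no new policy entries in this model.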

Label-based security is the tool of choice for cluster-internal access control. To secure access to and from external services, traditional CIDR-based security policies for both ingress and egress are supported. This makes it possible to limit access to and from application containers to particular IP ranges.
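
A CIDR-based check can be sketched in a few lines of Python with the standard `ipaddress` module. The allow-list below uses documentation-only example prefixes, and `egress_allowed` is a hypothetical name; this shows the matching logic only, not how Cilium stores or evaluates CIDR rules.

```python
import ipaddress

# Hypothetical egress allow-list built from documentation-only example prefixes.
EGRESS_ALLOWED = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def egress_allowed(destination: str) -> bool:
    """Return True if the destination address falls inside any allowed range."""
    addr = ipaddress.ip_address(destination)
    # Membership tests across IP versions are simply False, so mixing is safe.
    return any(addr in network for network in EGRESS_ALLOWED)
```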

A simple flat Layer 3 network with the ability to span multiple clusters connects all application containers. IP allocation is kept simple by using host scope allocators. This means that each host can allocate IPs without any coordination between hosts.
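
The following Python sketch illustrates why host-scope allocation needs no coordination: each host owns a disjoint range and hands out addresses from it locally. The class name `HostAllocator` and the example ranges are assumptions for illustration, not Cilium's IPAM implementation.

```python
import ipaddress


class HostAllocator:
    """Allocates container IPs from a range owned exclusively by one host."""

    def __init__(self, pod_cidr: str) -> None:
        # Iterate lazily over the usable addresses of this host's own range.
        self._free = iter(ipaddress.ip_network(pod_cidr).hosts())

    def allocate(self) -> str:
        """Return the next unused address; raises StopIteration when exhausted."""
        return str(next(self._free))
```

Two hosts configured with non-overlapping ranges, for example `10.0.1.0/24` and `10.0.2.0/24`, can never hand out the same address, so neither has to consult the other.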

The following multi node networking models are supported:

Overlay: Encapsulation-based virtual network spanning all hosts. Currently, VXLAN and Geneve are baked in but all encapsulation formats supported by Linux can be enabled.

When to use this mode: This mode has minimal infrastructure and integration requirements. It works on almost any network infrastructure as the only requirement is IP connectivity between hosts which is typically already given.

Native Routing: Use of the regular routing table of the Linux host. The network must be capable of routing the IP addresses of the application containers.

When to use this mode: This mode is for advanced users and requires some awareness of the underlying networking infrastructure. This mode works well with:

- Native IPv6 networks

- Cloud network routers

- Routing daemons that you are already running

Cilium implements distributed load balancing for traffic between application containers and to external services and is able to fully replace components such as kube-proxy. The load balancing is implemented in eBPF using efficient hashtables allowing for almost unlimited scale.

For north-south type load balancing, Cilium's eBPF implementation is optimized for maximum performance, can be attached to XDP (eXpress Data Path), and supports direct server return (DSR) as well as Maglev consistent hashing if the load balancing operation is not performed on the source host.
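
To give a feel for Maglev consistent hashing, here is a compact Python sketch of the lookup-table population step as described in the published Maglev algorithm. It is not Cilium's eBPF code; the SHA-256-based hash, the default table size of 251, and the function names are assumptions chosen for readability.

```python
import hashlib


def _hash(value: str, seed: str) -> int:
    """Stable 64-bit hash; SHA-256 is an illustrative choice, not Cilium's."""
    digest = hashlib.sha256((seed + value).encode()).digest()
    return int.from_bytes(digest[:8], "big")


def build_table(backends: list, size: int = 251) -> list:
    """Fill a Maglev lookup table; size must be prime and exceed len(backends)."""
    if not backends:
        raise ValueError("at least one backend is required")
    offsets = [_hash(b, "offset") % size for b in backends]
    skips = [_hash(b, "skip") % (size - 1) + 1 for b in backends]
    next_index = [0] * len(backends)
    table = [None] * size
    filled = 0
    while True:
        # Backends take turns claiming the next free slot of their permutation.
        for i, backend in enumerate(backends):
            slot = (offsets[i] + next_index[i] * skips[i]) % size
            while table[slot] is not None:
                next_index[i] += 1
                slot = (offsets[i] + next_index[i] * skips[i]) % size
            table[slot] = backend
            next_index[i] += 1
            filled += 1
            if filled == size:
                return table


def pick_backend(table: list, flow_key: str) -> str:
    """Map a flow (for example its 5-tuple rendered as a string) to a backend."""
    return table[_hash(flow_key, "flow") % len(table)]
```

Because every node builds the same table from the same backend list, any node that receives a given flow selects the same backend, and the round-robin fill keeps the slot counts per backend within one of each other.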

For east-west type load balancing, Cilium performs efficient service-to-backend translation right in the Linux kernel's socket layer (e.g. at TCP connect time) such that per-packet NAT operations overhead can be avoided in lower layers.
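
Conceptually, connect-time translation looks like the Python sketch below: the service address is swapped for a backend once, when the connection is set up, so later packets already carry the backend address. The service map, the addresses, and the function name are hypothetical; the real logic runs as eBPF in the kernel and is not shown here.

```python
import random

# Hypothetical service map: (service IP, port) -> list of (backend IP, port).
SERVICES = {
    ("10.96.0.10", 80): [("10.0.1.5", 8080), ("10.0.2.7", 8080)],
}


def translate_on_connect(destination: tuple, rng=random) -> tuple:
    """Return the address the socket should actually connect to.

    Called once per connection; non-service destinations pass through unchanged.
    """
    backends = SERVICES.get(destination)
    if backends is None:
        return destination
    return rng.choice(backends)
```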

Cilium implements bandwidth management through efficient EDT-based (Earliest Departure Time) rate-limiting with eBPF for container traffic that is egressing a node. Compared to traditional approaches such as HTB (Hierarchical Token Bucket) or TBF (Token Bucket Filter), as used in the bandwidth CNI plugin, for example, this significantly reduces transmission tail latencies for applications and avoids locking under multi-queue NICs.
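
The core of EDT-based pacing is simple arithmetic: each packet is stamped with the earliest time it may leave, and that time advances by the packet's transmission duration at the configured rate. The Python class below is a minimal sketch of that calculation only; `EdtPacer` and its units (bytes per second, seconds) are assumptions, and the eBPF implementation and the queueing discipline that honors the timestamps are not modeled.

```python
class EdtPacer:
    """Computes earliest departure times for packets of one rate-limited sender."""

    def __init__(self, rate_bytes_per_sec: float) -> None:
        self.rate = rate_bytes_per_sec
        self.next_departure = 0.0  # earliest time the next packet may leave

    def departure_time(self, now: float, packet_len: int) -> float:
        """Return this packet's departure time and reserve its slot on the wire."""
        departure = max(now, self.next_departure)
        self.next_departure = departure + packet_len / self.rate
        return departure
```

No queue or shared token bucket has to be locked in this scheme: the only state is one timestamp per sender, which is what makes the approach attractive on multi-queue NICs.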

The ability to gain visibility and troubleshoot issues is fundamental to the operation of any distributed system. While we learned to love tools like `tcpdump` and `ping` and while they will always find a special place in our hearts, we strive to provide better tooling for troubleshooting. This includes tooling to provide:

- Event monitoring with metadata: When a packet is dropped, the tool doesn't just report the source and destination IP of the packet; it also provides the full label information of both the sender and receiver, among a lot of other information.

- Metrics export via Prometheus: Key metrics are exported via Prometheus for integration with your existing dashboards.

- Hubble: An observability platform specifically written for Cilium. It provides service dependency maps, operational monitoring and alerting, and application and security visibility based on flow logs.

- Why Cilium?

- Getting Started

- Architecture and Concepts

- Installing Cilium

- Frequently Asked Questions

- Contributing

Berkeley Packet Filter (BPF) is a Linux kernel bytecode interpreter originally introduced to filter network packets, e.g. for tcpdump and socket filters. The BPF instruction set and surrounding architecture have recently been significantly reworked with additional data structures such as hash tables and arrays for keeping state as well as additional actions to support packet mangling, forwarding, encapsulation, etc. Furthermore, a compiler back end for LLVM allows for programs to be written in C and compiled into BPF instructions. An in-kernel verifier ensures that BPF programs are safe to run and a JIT compiler converts the BPF bytecode to CPU architecture-specific instructions for native execution efficiency. BPF programs can be run at various hooking points in the kernel such as for incoming packets, outgoing packets, system calls, kprobes, uprobes, tracepoints, etc.

BPF continues to evolve and gain additional capabilities with each new Linux release. Cilium leverages BPF to perform core data path filtering, mangling, monitoring and redirection, and requires BPF capabilities that are in any Linux kernel version 4.8.0 or newer (the latest current stable Linux kernel is 4.14.x).

Many Linux distributions including CoreOS, Debian, Docker's LinuxKit, Fedora, openSUSE and Ubuntu already ship kernel versions >= 4.8.x. You can check your Linux kernel version by running `uname -a`. If you are not yet running a recent enough kernel, check the documentation of your Linux distribution on how to run Linux kernel 4.9.x or later.
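
If you prefer a scripted check, the Python sketch below parses a kernel release string and compares it against the 4.8 minimum stated above. The function names are hypothetical, and the threshold is taken from this document; the Prerequisites section remains the authoritative source for required versions.

```python
import platform
import re

MINIMUM = (4, 8)  # minimum kernel version as stated in the text above


def parse_release(release: str) -> tuple:
    """Extract (major, minor) from a release string such as '5.15.0-91-generic'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def kernel_is_recent_enough(release: str = "") -> bool:
    """Check the given release, or the running kernel if none is given."""
    return parse_release(release or platform.release()) >= MINIMUM
```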

To read up on the necessary kernel versions to run the BPF runtime, see the section Prerequisites.

XDP is a further step in evolution and enables running a specific flavor of BPF programs from the network driver with direct access to the packet's DMA buffer. This is, by definition, the earliest possible point in the software stack at which programs can be attached, allowing for a programmable, high performance packet processor in the Linux kernel networking data path.

Further information about BPF and XDP targeted for developers can be found in the BPF and XDP Reference Guide.

To learn more about Cilium, its extensions, and use cases around Cilium and BPF, take a look at the Further Readings section.

Join the Cilium Slack channel to chat with Cilium developers and other Cilium users. This is a good place to learn about Cilium, ask questions, and share your experiences.

See Special Interest groups for a list of all SIGs and their meeting times.

The Cilium developer community hangs out on Zoom to chat. Everybody is welcome.

- Weekly, Wednesday, 5:00 pm Europe/Zurich time (CET/CEST), usually equivalent to 8:00 am PT, or 11:00 am ET. Join Zoom

- Third Wednesday of each month, 9:00 am Japan time (JST). Join Zoom

We host a weekly community YouTube livestream called eCHO which (very loosely!) stands for eBPF & Cilium Office Hours. Join us live, catch up with past episodes, or head over to the eCHO repo and let us know your ideas for topics we should cover.

The Cilium project is governed by a group of Maintainers and Committers. How they are selected and govern is outlined in our governance document.

A list of adopters of the Cilium project who are deploying it in production, and of their use cases, can be found in the file USERS.md.

Cilium maintains a public roadmap. It gives a high-level view of the main priorities for the project, the maturity of different features and projects, and how to influence the project direction.

The Cilium user space components are licensed under the Apache License, Version 2.0. The BPF code templates are dual-licensed under the General Public License, Version 2.0 (only) and the 2-Clause BSD License (you can use the terms of either license, at your option).