This is Junliang Yu. I am currently a postdoctoral research fellow working on data science. [Homepage] [Google Scholar]

- I’m working with A/Prof. Hongzhi Yin and Prof. Shazia Sadiq at the University of Queensland.

- My research interests include recommender systems, tiny machine learning, self-supervised learning and graph learning.

- Feel free to drop me an email if you have any questions. 📧

You can find all the implementations of my papers in QRec 😜.

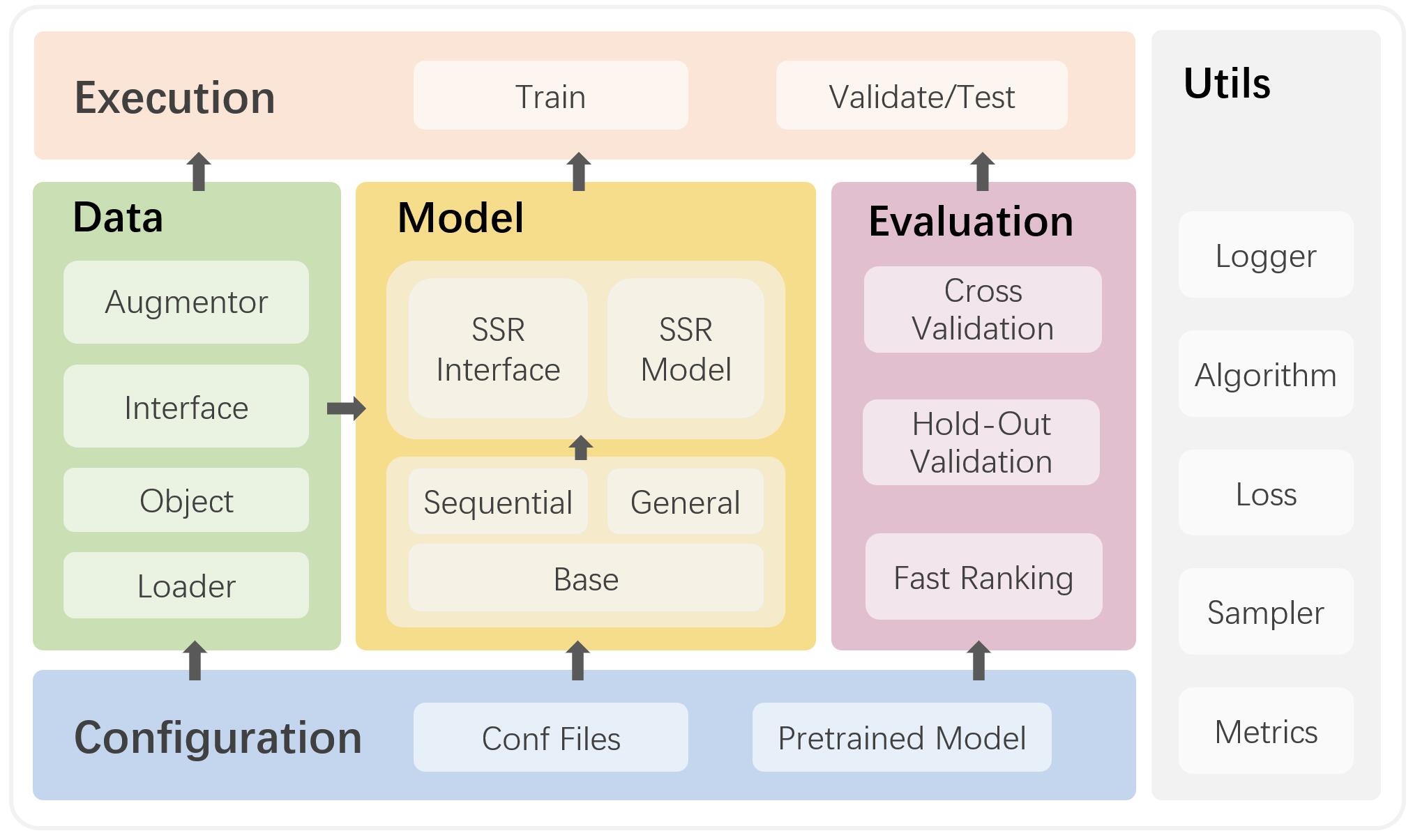

SELFRec is a Python framework for self-supervised recommendation (SSR) that integrates commonly used datasets and metrics and implements many state-of-the-art SSR models. With its lightweight architecture and user-friendly interfaces, SELFRec makes it easier to implement and evaluate models.
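As an illustration of the kind of evaluation such a framework automates, here is a minimal sketch of two top-K ranking metrics common in recommendation research: Recall@K and NDCG@K with binary relevance. This is not SELFRec's own code. The function names `recall_at_k`, `ndcg_at_k` and `evaluate`, and the dictionary-based input format (user id mapped to a ranked item list or a set of held-out items), are hypothetical choices made for this example.

```python
import math
from typing import Dict, List, Set

# Hypothetical helpers for illustration only; not part of SELFRec's API.


def recall_at_k(ranked: List[int], relevant: Set[int], k: int) -> float:
    """Fraction of a user's held-out items that appear in the top-k list."""
    if not relevant:
        return 0.0
    hits = sum(1 for item in ranked[:k] if item in relevant)
    return hits / len(relevant)


def ndcg_at_k(ranked: List[int], relevant: Set[int], k: int) -> float:
    """Binary-relevance NDCG: discounted gain of hits, normalized by the ideal ranking."""
    # A hit at 0-based position p contributes 1 / log2(p + 2).
    dcg = sum(
        1.0 / math.log2(pos + 2)
        for pos, item in enumerate(ranked[:k])
        if item in relevant
    )
    # The ideal ranking places all relevant items (at most k) at the top.
    ideal_hits = min(len(relevant), k)
    idcg = sum(1.0 / math.log2(pos + 2) for pos in range(ideal_hits))
    return dcg / idcg if idcg > 0 else 0.0


def evaluate(
    rankings: Dict[int, List[int]], test_set: Dict[int, Set[int]], k: int
) -> Dict[str, float]:
    """Average both metrics over users that have at least one held-out item."""
    users = [u for u, items in test_set.items() if items]
    if not users:
        return {f"Recall@{k}": 0.0, f"NDCG@{k}": 0.0}
    recall = sum(recall_at_k(rankings.get(u, []), test_set[u], k) for u in users)
    ndcg = sum(ndcg_at_k(rankings.get(u, []), test_set[u], k) for u in users)
    return {f"Recall@{k}": recall / len(users), f"NDCG@{k}": ndcg / len(users)}


if __name__ == "__main__":
    # Toy data: user 0 has one hit in its top-2, user 1 has none.
    rankings = {0: [3, 1, 7, 2], 1: [5, 4, 9, 8]}
    test_set = {0: {1, 2}, 1: {6}}
    print(evaluate(rankings, test_set, k=2))
```

Averaging per user before reporting is one common convention; a real framework may differ in details such as how users without test items are handled or whether items seen in training are filtered out of the ranking.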