A Python package implementing various colour checker detection algorithms and related utilities.

It is open source and freely available under the terms of the BSD-3-Clause licence.

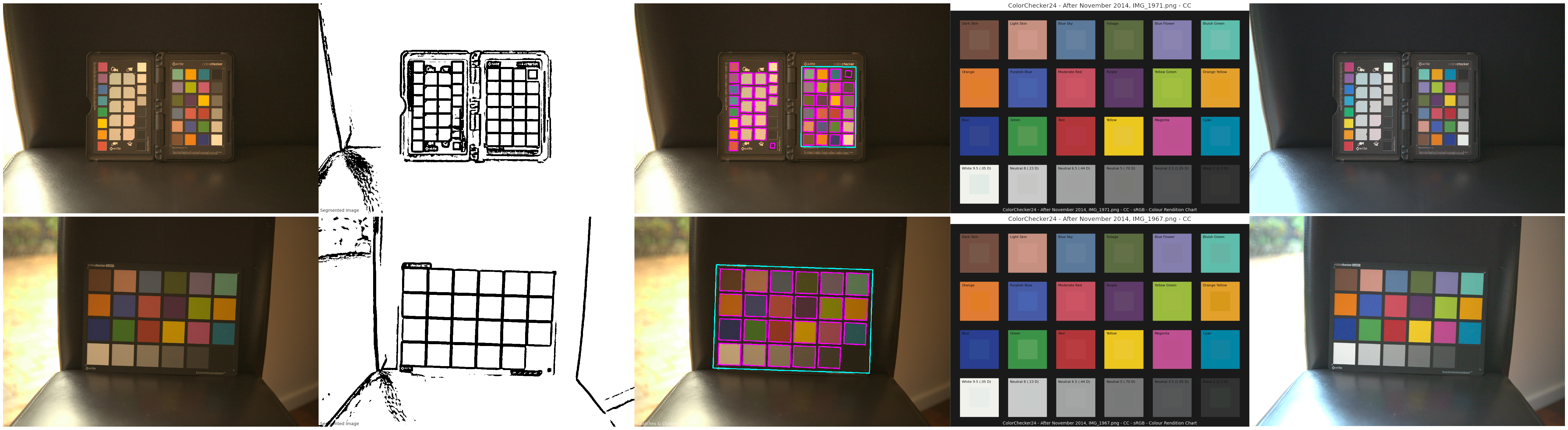

The following colour checker detection algorithms are implemented:

- Segmentation

- Machine learning inference via Ultralytics YOLOv8

  - The model is published on HuggingFace and was trained on a purpose-built dataset.

  - The model has only been trained on ColorChecker Classic 24 images and will not work with ColorChecker Nano or ColorChecker SG images.

  - Inference is performed by a script licensed under the terms of the GNU Affero General Public License v3.0 because it uses the Ultralytics YOLOv8 API, whose licence is incompatible with the BSD-3-Clause.

Various usage examples are available from the examples directory.
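As a rough illustration of how the two detection methods might be called, here is a minimal sketch. It assumes that the package exposes `detect_colour_checkers_segmentation` and `detect_colour_checkers_inference` at its top level and that each returns one array of swatch colours per detected colour checker; please confirm both against the API Reference. The `detect_swatches` helper and the `DETECTORS` mapping are hypothetical names introduced here for illustration.

```python
import importlib

# Assumed names of the public detection functions, keyed by method.
DETECTORS = {
    "segmentation": "detect_colour_checkers_segmentation",
    "inference": "detect_colour_checkers_inference",
}


def detect_swatches(image_path, method="segmentation"):
    """Return the swatch colours of each colour checker found in an image.

    Hypothetical helper: "method" selects one of the entries in DETECTORS.
    """
    if method not in DETECTORS:
        raise ValueError(f"Unknown method {method!r}, expected one of {sorted(DETECTORS)}")

    # Imported lazily so that this module loads even without the packages.
    import colour

    package = importlib.import_module("colour_checker_detection")
    detector = getattr(package, DETECTORS[method])

    # Read the image as floating point values and decode it to linear light,
    # assuming the file is sRGB encoded.
    image = colour.cctf_decoding(colour.io.read_image(image_path))

    # Assumption: the detector yields one array of swatch colours per checker.
    return list(detector(image))
```

Calling `detect_swatches("photo.png")` would use segmentation, while `method="inference"` would go through the YOLOv8 script and is therefore subject to the licensing and ColorChecker Classic 24 restrictions described above.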

Because of their size, the resource dependencies needed to run the examples and unit tests are not provided within the PyPI package. They are available separately as Git submodules when cloning the repository.

Colour - Checker Detection requires various dependencies in order to run:

- python >= 3.9, < 4

- colour-science >= 4.3

- imageio >= 2, < 3

- numpy >= 1.22, < 2

- opencv-python >= 4, < 5

- scipy >= 1.8, < 2
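To check an existing environment against the ranges listed above, something like the following sketch could be used. It relies only on the standard library; `REQUIREMENTS`, `parse_version`, `satisfies`, and `check_environment` are hypothetical names introduced here, and the version parsing is deliberately simplistic (leading numeric components only), so it is not a substitute for a full version specifier parser.

```python
import sys
from importlib import metadata

# Ranges copied from the list above: (minimum, exclusive maximum or None).
REQUIREMENTS = {
    "colour-science": ((4, 3), None),
    "imageio": ((2,), (3,)),
    "numpy": ((1, 22), (2,)),
    "opencv-python": ((4,), (5,)),
    "scipy": ((1, 8), (2,)),
}


def parse_version(text):
    """Return the leading numeric components of a version string as a tuple."""
    parts = []
    for token in text.split("."):
        digits = ""
        for character in token:
            if not character.isdigit():
                break
            digits += character
        if not digits:
            break
        parts.append(int(digits))
        if len(digits) != len(token):
            # Stop at suffixes such as "rc1" or "post1".
            break
    return tuple(parts)


def satisfies(version, minimum, maximum=None):
    """Return whether minimum <= version < maximum (no upper bound if None)."""
    parsed = parse_version(version)
    if parsed < minimum:
        return False
    return maximum is None or parsed < maximum


def check_environment():
    """Map each requirement name to True if the installed version is in range."""
    python_version = ".".join(str(part) for part in sys.version_info[:3])
    report = {"python": satisfies(python_version, (3, 9), (4,))}
    for name, (minimum, maximum) in REQUIREMENTS.items():
        try:
            report[name] = satisfies(metadata.version(name), minimum, maximum)
        except metadata.PackageNotFoundError:
            # Not installed, or installed under another distribution name.
            report[name] = False
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'ok' if ok else 'missing or out of range'}")
```

Note that a package installed under a different distribution name (for instance a headless OpenCV build) would be reported as missing by this sketch.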

Once the dependencies are satisfied, Colour - Checker Detection can be installed from the Python Package Index by issuing this command in a shell:

pip install --user colour-checker-detection

The overall development dependencies are installed as follows:

pip install --user 'colour-checker-detection[development]'

If you would like to contribute to Colour - Checker Detection, please refer to the Contributing guide for Colour.

The bibliography is available in the repository in BibTeX format.

The main technical reference for Colour - Checker Detection is the API Reference.

The Code of Conduct, adapted from the Contributor Covenant 1.4, is available on the Code of Conduct page.

The Colour Developers can be reached via different means: