This is the source code for the paper "Crypto Pump and Dump Detection via Deep Learning Techniques" by Viswanath Chadalapaka, Kyle Chang, Gireesh Mahajan, and Anuj Vasil.

Our work shows that deep learning can be applied to cryptocurrency pump and dump (P&D) data to create higher-scoring models than those previously reported by La Morgia et al. (2020).

To run our code, use:

python train.py [--option value]
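
The same invocation can also be assembled from Python, which may be convenient when scripting several experiments. The sketch below is only an illustration: the helper `build_train_command` is hypothetical (it is not part of this repository), and the option values are placeholders rather than recommended settings.

```python
import sys


def build_train_command(options, script="train.py"):
    """Turn a dict of option names and values into an argument list.

    Hypothetical helper, not part of the repository. It assumes every
    option is passed as `--name value`, as in the usage line above.
    """
    command = [sys.executable, script]
    for name, value in options.items():
        command += [f"--{name}", str(value)]
    return command


# Placeholder values chosen only for illustration.
example_options = {"model": "CLSTM", "n_epochs": 10, "lr": 0.001}
command = build_train_command(example_options)

# Show the arguments that would follow the Python interpreter.
print(" ".join(command[1:]))

# To actually launch training from the repository root, one could run:
#   import subprocess
#   subprocess.run(command, check=True)
```

Building the command as a list, rather than a single string, avoids shell-quoting problems if a value such as a dataset path contains spaces.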

Possible command line options are as follows, by category:

- model: Choose between CLSTM, AnomalyTransformer, TransformerTimeSeries, AnomalyTransfomerIntermediate, and AnomalyTransfomerBasic

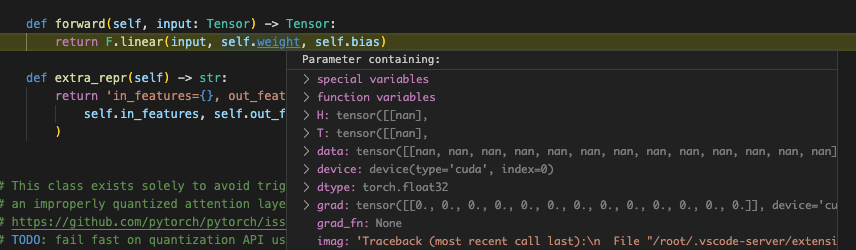

TransformerTimeSeries uses a standard transformer encoder to establish a baseline without anomaly attention.

AnomalyTransfomerBasic is the simplest use of anomaly attention. It just creates a list of anomaly attention blocks.

AnomalyTransfomerIntermediate uses association scores from anomaly attention in the loss, but does not use the minimax optimization strategy. Instead, it uses only the maximize phase. It is an intermediate step toward the final AnomalyTransformer model that produces near-identical results but trains much more quickly.

AnomalyTransformer uses the minimax optimization strategy, unlike AnomalyTransfomerIntermediate. It is much slower to train than the other models and has some initial trouble learning due to gradient flow problems, but it produces the best results.
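
To make the difference between the maximize-only and minimax variants more concrete, here is a minimal sketch of how an association-based term could enter the loss. It follows the general formulation of the original Anomaly Transformer (a symmetrized KL divergence between prior and series associations, weighted by lambda_), and is an assumption about the idea rather than a copy of this repository's code. All function names are hypothetical, and `base_loss` stands in for whatever task loss the model uses.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as lists of probabilities."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def association_discrepancy(prior, series):
    """Symmetrized KL divergence between prior and series associations."""
    return kl_divergence(prior, series) + kl_divergence(series, prior)


def maximize_phase_loss(base_loss, prior, series, lambda_):
    # The discrepancy enters with a negative sign, so minimizing this
    # total loss pushes the discrepancy up (the "maximize" phase).
    return base_loss - lambda_ * association_discrepancy(prior, series)


def minimize_phase_loss(base_loss, prior, series, lambda_):
    # The discrepancy enters with a positive sign, so minimizing this
    # total loss pulls the two associations together (the "minimize" phase).
    return base_loss + lambda_ * association_discrepancy(prior, series)


# Toy association rows; the numbers are illustrative only.
prior_row = [0.5, 0.5]
series_row = [0.9, 0.1]
discrepancy = association_discrepancy(prior_row, series_row)
```

In an autograd framework, each phase would additionally stop gradients through one of the two associations. A maximize-only variant would skip `minimize_phase_loss`, which is one plausible reason it trains faster.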

- n_epochs: Number of epochs to train the given model

- embedding_size: The embedding size of the CLSTM

- n_layers: Number of LSTM layers

- kernel_size: Size of the convolutional kernel

- dropout: Dropout added to the LSTM layers

- cell_norm: True/False -- whether or not to normalize the gate values at the LSTM cell level

- out_norm: True/False -- whether or not to normalize the output of each LSTM layer

- feature_size: number of features to use from the original data

- n_layers: number of Transformer layers

- n_head: number of heads in multi-head self-attention. Only required for the base TransformerTimeSeries model

- lambda_: weight of the KL divergences between associations in the anomaly attention module. Only required for AnomalyTransfomerIntermediate and AnomalyTransformer

- lr: Learning rate

- lr_decay_step: Number of epochs to wait before decaying the learning rate, 0 for no decay

- lr_decay_factor: Multiplicative learning rate decay factor

- weight_decay: Weight decay regularization

- batch_size: Batch size

- train_ratio: Ratio of data to use for train

- undersample_ratio: Undersample proportion of majority class

- segment_length: Length of each segment

- prthreshold: Set the precision-recall threshold of the model

- kfolds: Enable a k-fold validation scheme. If set to anything other than 1, train_ratio will be ignored

- save: Cache processed data for faster experiment startup times

- validate_every_n: Validates only every n epochs instead of every epoch, which saves time

- train_output_every_n: Outputs train loss details only every n epochs, for cleaner logs

- time_epochs: Adds epoch timing to logs

- final_run: Automatically sets validate_every_n=1 and train_output_every_n=1, used for reproducing paper results

- verbose: Additional debug output (average output at 0/1 ground truth labels, etc)

- dataset: Point to the time-series dataset to train the model on

- seed: Set the seed of the model

- run_count: Set the number of times to run the model, in order to compute confidence intervals from logs
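
As an illustration of what run_count enables, the sketch below computes a confidence interval from per-run scores once they have been collected from the logs. It is a minimal example under stated assumptions: the function `confidence_interval` is hypothetical, the scores are made-up placeholders rather than results from the paper, and the interval uses a normal approximation (with only a handful of runs, a t-distribution would give a somewhat wider interval).

```python
import math
import statistics


def confidence_interval(scores, confidence=0.95):
    """Normal-approximation confidence interval for the mean of `scores`."""
    n = len(scores)
    if n < 2:
        raise ValueError("at least two runs are needed to estimate variance")
    mean = statistics.mean(scores)
    # Standard error of the mean, using the sample standard deviation.
    sem = statistics.stdev(scores) / math.sqrt(n)
    # Two-sided critical value of the standard normal distribution.
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return mean - z * sem, mean + z * sem


# Placeholder scores standing in for values parsed from several runs.
placeholder_scores = [0.90, 0.92, 0.94]
low, high = confidence_interval(placeholder_scores)
print(f"mean={statistics.mean(placeholder_scores):.3f}, CI=({low:.3f}, {high:.3f})")
```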