(2022.11.19) We release the interactive object bounding boxes & classes in the interactions within AVA dataset (2.1 & 2.2)! HAKE-AVA, [Paper]. BTW, we also release a CLIP-based human body part state recognizer in CLIP-Activity2Vec!

(2022.07.29) Our new work PartMap (ECCV'22) is released! Paper, Code

(2022.04.23) Two new works on HOI learning are released! Interactiveness Field (CVPR'22) and a new HOI metric mPD (AAAI'22).

(2022.02.14) We release the human body part state labels based on AVA: HAKE-AVA.

(2021.2.7) Upgraded HAKE-Activity2Vec is released! Images/Videos --> human box + ID + skeleton + part states + action + representation. [Description]

(2021.1.15) Our extended version of TIN is accepted by TPAMI!

(2020.10.27) The code of IDN (Paper) in NeurIPS'20 is released!

(2020.6.16) Our larger version HAKE-Large (>120K images, activity and part state labels) is released!

We have opened a tiny repo: HOI learning list (https://github.com/DirtyHarryLYL/HOI-Learning-List). It includes most of the recent HOI-related papers, code, datasets, and leaderboards on widely used benchmarks. We hope it helps everyone interested in HOI.

Code of "Transferable Interactiveness Knowledge for Human-Object Interaction Detection".

Created by Yong-Lu Li, Siyuan Zhou, Xijie Huang, Liang Xu, Ze Ma, Hao-Shu Fang, Yan-Feng Wang, Cewu Lu.

Link: [CVPR arXiv], [TPAMI arXiv]

If you find our work useful in your research, please consider citing:

@inproceedings{li2019transferable,
title={Transferable Interactiveness Knowledge for Human-Object Interaction Detection},
author={Li, Yong-Lu and Zhou, Siyuan and Huang, Xijie and Xu, Liang and Ma, Ze and Fang, Hao-Shu and Wang, Yanfeng and Lu, Cewu},
booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
pages={3585--3594},
year={2019}
}

@article{li2022transferable,
title={Transferable Interactiveness Knowledge for Human-Object Interaction Detection},
author={Li, Yong-Lu and Liu, Xinpeng and Wu, Xiaoqian and Huang, Xijie and Xu, Liang and Lu, Cewu},
journal={TPAMI},
year={2022}
}

Interactiveness Knowledge indicates whether a human and an object interact with each other. It can be learned across HOI datasets, regardless of their HOI category settings. We use an Interactiveness Network to learn general interactiveness knowledge from multiple HOI datasets and perform Non-Interaction Suppression before HOI classification during inference. Because interactiveness generalizes across datasets, our TIN (Transferable Interactiveness Network) is a transferable knowledge learner and can be combined with any HOI detection model to achieve better results. TIN outperforms the state-of-the-art HOI detection results by a large margin, verifying its efficacy and flexibility.
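The Non-Interaction Suppression (NIS) step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not TIN's implementation: the `PairCandidate` structure, the default threshold of 0.1, and the multiplicative fusion of detector, interactiveness, and HOI scores are all hypothetical choices made for clarity.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PairCandidate:
    """One human-object pair from an upstream detector (hypothetical structure)."""
    human_score: float         # detector confidence for the human box
    object_score: float        # detector confidence for the object box
    interactiveness: float     # probability that the pair interacts at all
    hoi_scores: List[float]    # per-category scores from the HOI classifier


def non_interaction_suppression(pairs: List[PairCandidate],
                                threshold: float = 0.1) -> List[List[float]]:
    """Drop pairs judged non-interactive, then rescore the surviving pairs.

    The threshold and the multiplicative score fusion are illustrative
    assumptions, not TIN's exact settings.
    """
    kept = []
    for pair in pairs:
        if pair.interactiveness < threshold:
            # Suppressed: this pair contributes no HOI detections at all.
            continue
        scale = pair.human_score * pair.object_score * pair.interactiveness
        kept.append([scale * s for s in pair.hoi_scores])
    return kept


if __name__ == "__main__":
    pairs = [
        PairCandidate(0.9, 0.8, 0.05, [0.7, 0.2]),  # low interactiveness: suppressed
        PairCandidate(0.9, 0.5, 0.60, [0.5, 0.1]),  # kept and rescored
    ]
    print(non_interaction_suppression(pairs))
```

Filtering before ranking means a pair judged non-interactive cannot produce a false positive in any HOI category, however high its classifier scores are.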

Our results on the HICO-DET dataset

| Method | Full (Default) | Rare (Default) | Non-Rare (Default) | Full (Known Object) | Rare (Known Object) | Non-Rare (Known Object) |
|---|---|---|---|---|---|---|
| RCD (paper) | 13.75 | 10.23 | 15.45 | 15.34 | 10.98 | 17.02 |
| RPDCD (paper) | 17.03 | 13.42 | 18.11 | 19.17 | 15.51 | 20.26 |
| RCT (paper) | 10.61 | 7.78 | 11.45 | 12.47 | 8.87 | 13.54 |
| RPT1CD (paper) | 16.91 | 13.32 | 17.99 | 19.05 | 15.22 | 20.19 |
| RPT2CD (paper) | 17.22 | 13.51 | 18.32 | 19.38 | 15.38 | 20.57 |
| Interactiveness-optimized | 17.54 | 13.80 | 18.65 | 19.75 | 15.70 | 20.96 |
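For readers unfamiliar with the column headers: HICO-DET has 600 HOI categories, of which 138 are Rare (fewer than 10 training instances) and 462 are Non-Rare. Full, Rare, and Non-Rare are mean APs (reported in percent in the tables) over these category groups. The Default setting evaluates every category on all test images, while the Known Object setting evaluates each category only on images that contain its object class. The sketch below shows how the three splits follow from per-category APs; the function name and input dictionaries are hypothetical, and computing the per-category APs themselves is left to the official evaluation code.

```python
from statistics import mean
from typing import Dict, Tuple


def hico_map_splits(ap_per_class: Dict[int, float],
                    train_counts: Dict[int, int],
                    rare_cutoff: int = 10) -> Tuple[float, float, float]:
    """Return (Full, Rare, Non-Rare) mAP from per-category APs.

    A category is Rare if it has fewer than `rare_cutoff` training
    instances. The same split is used in both the Default and the
    Known Object settings; only the per-category APs differ.
    """
    rare = [ap for c, ap in ap_per_class.items() if train_counts[c] < rare_cutoff]
    non_rare = [ap for c, ap in ap_per_class.items() if train_counts[c] >= rare_cutoff]
    return mean(list(ap_per_class.values())), mean(rare), mean(non_rare)


if __name__ == "__main__":
    # Toy example with 3 categories instead of HICO-DET's 600.
    aps = {0: 0.2, 1: 0.4, 2: 0.6}
    counts = {0: 3, 1: 50, 2: 100}
    full, rare, non_rare = hico_map_splits(aps, counts)
    print(f"Full {full:.3f}  Rare {rare:.3f}  Non-Rare {non_rare:.3f}")
```

Because Full averages over all categories, it sits between Rare and Non-Rare and is dominated by the much larger Non-Rare group.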

Our results on the V-COCO dataset

| Method | AP (role) |
|---|---|
| RCD (paper) | 43.2 |
| RPDCD (paper) | 47.8 |
| RCT (paper) | 38.5 |
| RPT1CD (paper) | 48.3 |
| RPT2CD (paper) | 48.7 |
| Interactiveness-optimized | 49.0 |

Please note that we have reimplemented TIN (e.g., replacing the vanilla HOI classifier with iCAN and using a cosine-decay learning rate), so the results here differ from, and are slightly better than, those in [Arxiv].
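"cosine_decay lr" most likely refers to the cosine learning-rate schedule that TensorFlow 1.x provides as `tf.train.cosine_decay`; this is our assumption, and the schedule's hyperparameters are not given here. The pure-Python sketch below reproduces that formula, with `base_lr`, `decay_steps`, and `alpha` as placeholders rather than the values used for TIN.

```python
import math


def cosine_decay_lr(step: int, base_lr: float, decay_steps: int, alpha: float = 0.0) -> float:
    """Cosine-decayed learning rate, mirroring the tf.train.cosine_decay formula.

    `alpha` is the fraction of `base_lr` kept as a floor at the end of decay.
    """
    step = min(step, decay_steps)  # hold at the final value after decay_steps
    cosine = 0.5 * (1.0 + math.cos(math.pi * step / decay_steps))
    return base_lr * ((1.0 - alpha) * cosine + alpha)


if __name__ == "__main__":
    # Placeholder hyperparameters, not TIN's actual settings.
    for step in (0, 250_000, 500_000, 1_000_000):
        print(step, cosine_decay_lr(step, base_lr=1e-3, decay_steps=1_000_000))
```

Compared with step decay, the rate shrinks smoothly and flattens out near the end of training, which tends to make late-stage fine-tuning more stable.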

Besides the instance-level interactiveness between humans and objects, we further propose part-level interactiveness between body parts and objects (whether a body part interacts with an object). We also construct PaStaNet-HOI, a new large-scale HOI benchmark based on data from HAKE (CVPR 2020). It contains 110K+ images with 520 HOIs (excluding the 80 "no_interaction" HOIs of HICO-DET to avoid incomplete labeling) and is more difficult than HICO-DET. We hope it helps benchmark HOI detection methods better.

In the new version, RCD denotes R (representation extractor), C (interaction classifier), and D (interactiveness discriminator); this is slightly different from the CVPR 2019 version.

HICO-DET

| Method | Full (Default) | Rare (Default) | Non-Rare (Default) | Full (Known Object) | Rare (Known Object) | Non-Rare (Known Object) |
|---|---|---|---|---|---|---|
| R+iCAN+D3 | 17.58 | 13.75 | 18.33 | 19.13 | 15.06 | 19.94 |
| RCD | 17.84 | 13.08 | 18.78 | 20.58 | 16.19 | 21.45 |
| RCD1 | 17.49 | 12.23 | 18.53 | 20.28 | 15.25 | 21.27 |
| RCD2 | 18.43 | 13.93 | 19.32 | 21.10 | 16.56 | 22.00 |
| RCD3 | 20.93 | 18.95 | 21.32 | 23.02 | 20.96 | 23.42 |

V-COCO

| Method | Scenario-1 |
|---|---|
| R+iCAN+D3 | 45.8 (46.1) |
| RCD | 48.4 |
| RCD1 | 48.5 |
| RCD2 | 48.7 |
| RCD3 | 49.1 |

PaStaNet-HOI

| Method | mAP |
|---|---|
| iCAN | 11.0 |
| R+iCAN+D3 | 13.13 |
| RCD | 15.38 |

1. Clone this repository.

git clone https://github.com/DirtyHarryLYL/Transferable-Interactiveness-Network.git

2. Download the datasets and set up the evaluation tools and API. The detection results (person and object bounding boxes) are taken from iCAN: Instance-Centric Attention Network for Human-Object Interaction Detection [website].

chmod +x ./script/Dataset_download.sh

./script/Dataset_download.sh

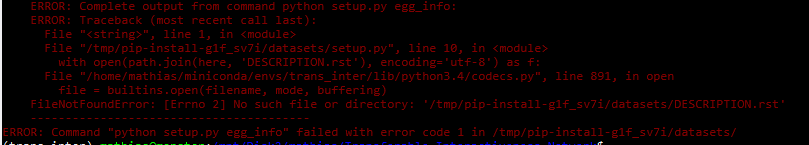

3. Install Python dependencies.

pip install -r requirements.txt

If you have trouble installing the requirements, try updating pip or using conda/virtualenv.

4. Download our pre-trained weights (optional).


python script/Download_data.py 1AJIyLETjcHF4oxjZKk1KtXteAqHdK-De Weights/TIN_HICO.zip

python script/Download_data.py 13559njUIizkqd9Yu8CtWAqX2ZPRSIp7R Weights/TIN_VCOCO.zip
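If the pre-trained weights end up as .zip archives under `Weights/`, they can be unpacked with Python's standard `zipfile` module. This is a minimal sketch: whether `Download_data.py` already extracts the archives, and the directory layout inside them, are not specified here, so the helper returns the archive's member names for you to check. The `extract_weights` name is hypothetical.

```python
import zipfile
from pathlib import Path
from typing import List, Union


def extract_weights(archive: Union[str, Path], dest: Union[str, Path] = "Weights") -> List[str]:
    """Unpack a downloaded weight archive into `dest` and list its members."""
    dest = Path(dest)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(dest)
        return sorted(zf.namelist())


if __name__ == "__main__":
    # Only unpack archives that were actually downloaded.
    for name in ("Weights/TIN_HICO.zip", "Weights/TIN_VCOCO.zip"):
        if Path(name).exists():
            print(name, "->", extract_weights(name)[:5])
```

Printing the first few member names is a quick way to confirm where the checkpoint files landed before running the test commands.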

1. Train on the HICO-DET dataset.

python tools/Train_TIN_HICO.py --num_iteration 2000000 --model TIN_HICO_test

2. Train on the V-COCO dataset.

python tools/Train_TIN_VCOCO.py --num_iteration 20000 --model TIN_VCOCO_test

1. Test on the HICO-DET dataset.

python tools/Test_TIN_HICO.py --num_iteration 1700000 --model TIN_HICO

2. Test on the V-COCO dataset.

python tools/Test_TIN_VCOCO.py --num_iteration 6000 --model TIN_VCOCO

Since the interactiveness branch converges more easily, we recommend first pre-training the whole model with the HOI classification loss only, and then fine-tuning it with both the HOI and interactiveness losses, to get the best performance.
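The recommended two-stage schedule can be expressed as a step-dependent objective. In this sketch, `finetune_start` (the iteration at which the interactiveness loss is switched on) and `interactiveness_weight` are hypothetical parameters; the values TIN actually uses are not given here.

```python
def two_stage_loss(hoi_loss: float,
                   interactiveness_loss: float,
                   step: int,
                   finetune_start: int,
                   interactiveness_weight: float = 1.0) -> float:
    """Stage 1 (step < finetune_start): HOI classification loss only.
    Stage 2: HOI loss plus the weighted interactiveness loss.
    """
    if step < finetune_start:
        return hoi_loss
    return hoi_loss + interactiveness_weight * interactiveness_loss


if __name__ == "__main__":
    # Placeholder loss values and switch point, for illustration only.
    for step in (0, 999, 1000, 5000):
        print(step, two_stage_loss(1.2, 0.7, step, finetune_start=1000, interactiveness_weight=2.0))
```

In a real training loop the losses would be framework tensors, and stage 2 could equally be a separate run restored from the stage-1 checkpoint; the switch point is what matters.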

Q: How are the loss weights generated?

A: Please refer to this issue for a detailed explanation.

You may also be interested in our new work **HAKE** [[website]](http://hake-mvig.cn/home/). HAKE is a new large-scale knowledge base and engine for human activity understanding. It provides elaborate and abundant **body part state** labels for active human instances in a large number of images and videos. With HAKE, we boost HOI recognition performance on HICO and several other widely used human activity benchmarks. We are still enlarging and enriching it, and we look forward to working with outstanding researchers around the world on its applications and further improvements. If you have any suggestions or are interested, please feel free to contact [Yong-Lu Li](https://dirtyharrylyl.github.io/) ([email protected]).

Some of the code is built upon iCAN: Instance-Centric Attention Network for Human-Object Interaction Detection [website]. We thank the authors for their great work!

The pose estimation results are obtained from AlphaPose. AlphaPose is an accurate multi-person pose estimator and the first real-time open-source system to achieve 70+ mAP (72.3 mAP) on the COCO dataset and 80+ mAP (82.1 mAP) on the MPII dataset. You may also train the interactiveness predictor with your own pose estimation results: download the train and test pkl files directly from iCAN [website] and insert your pose results.

If you run into any problems or find any bugs, don't hesitate to comment on GitHub or open a pull request!

TIN (Transferable Interactiveness Network) is freely available for non-commercial use and may be redistributed under these conditions. For commercial inquiries, please send us an e-mail, and we will send you the detailed agreement.