A plugin for the prometheus-net package, exposing .NET Core runtime metrics including:

- Garbage collection frequencies and timings by generation/type, pause timings and GC CPU consumption ratio

- Heap size by generation

- Bytes allocated by small/large object heap

- JIT compilations and JIT CPU consumption ratio

- Thread pool size, scheduling delays and reasons for growing/shrinking

- Lock contention

- Exceptions thrown, broken down by type

These metrics are essential for understanding the performance of any non-trivial application. Even if your application is well instrumented, you're only getting half the story; what the runtime is doing completes the picture.

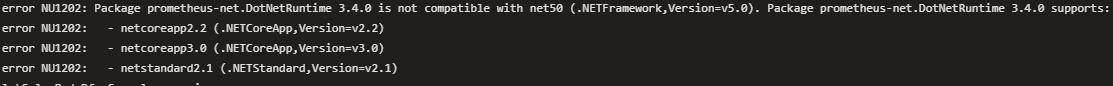

Requirements:

- .NET 5.0+ recommended, .NET Core 3.1+ is supported

- The prometheus-net package

The package can be installed from NuGet:

    dotnet add package prometheus-net.DotNetRuntime

You can start metric collection with:

    IDisposable collector = DotNetRuntimeStatsBuilder.Default().StartCollecting();

You can customize the types of .NET metrics collected via the Customize method:

    IDisposable collector = DotNetRuntimeStatsBuilder
        .Customize()
        .WithContentionStats()
        .WithJitStats()
        .WithThreadPoolStats()
        .WithGcStats()
        .WithExceptionStats()
        .StartCollecting();

Once the collector is registered, you should see metrics prefixed with dotnet_ in your metric output (make sure you are exporting your metrics).
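If nothing in your application exports metrics yet, the following minimal sketch shows one way to do it from a console application, using the standalone MetricServer that ships with the prometheus-net package. The hostname, the port (9184) and the Program class are arbitrary choices made for illustration, not something this package requires; ASP.NET Core applications would normally use the prometheus-net middleware instead.

```csharp
using System;
using Prometheus;
using Prometheus.DotNetRuntime;

public static class Program
{
    public static void Main()
    {
        // Assumption: localhost:9184 is an arbitrary endpoint chosen for this sketch.
        // MetricServer serves the default registry over HTTP at /metrics.
        using var server = new MetricServer(hostname: "localhost", port: 9184);
        server.Start();

        // Start collecting runtime metrics into the default registry.
        // Disposing the collector stops collection.
        using IDisposable collector = DotNetRuntimeStatsBuilder.Default().StartCollecting();

        Console.WriteLine("Serving metrics at http://localhost:9184/metrics. Press Enter to exit.");
        Console.ReadLine();
    }
}
```

Requesting http://localhost:9184/metrics while the program is running should list the dotnet_ metrics alongside any others registered in the default registry.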

By default, the library generates metrics based on event counters. This allows for basic instrumentation of applications with very little performance overhead.

You can enable higher-fidelity metrics by providing a custom CaptureLevel, e.g.:

    DotNetRuntimeStatsBuilder
        .Customize()
        .WithGcStats(CaptureLevel.Informational)
        .WithExceptionStats(CaptureLevel.Errors)
        ...

Most builder methods accept a custom CaptureLevel; see the documentation on exposed metrics for more information.

The harder you work the .NET Core runtime, the more events it generates. Event generation and processing costs can stack up, especially around these types of events:

- JIT stats: each method compiled by the JIT compiler emits two events. Most JIT compilation is performed at startup and, depending on the size of your application, this could impact your startup performance.

- GC stats with CaptureLevel.Verbose: every 100KB of allocations, an event is emitted. If you are consistently allocating memory at a rate > 1GB/sec, consider disabling GC stats.

- Exception stats with CaptureLevel.Errors: for every exception thrown, an event is generated.
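As a rough illustration of the trade-offs listed above, a lower-overhead configuration might leave out JIT stats and avoid the most expensive capture levels. This is a sketch rather than a recommended setup: the RuntimeMetrics class and StartLowOverhead method are hypothetical names, and it assumes that builder methods called without a CaptureLevel fall back to the event-counter based default described earlier.

```csharp
using System;
using Prometheus.DotNetRuntime;

// Hypothetical helper class, introduced only for this example.
public static class RuntimeMetrics
{
    public static IDisposable StartLowOverhead()
    {
        return DotNetRuntimeStatsBuilder
            .Customize()
            .WithContentionStats()
            .WithThreadPoolStats()
            // Informational rather than Verbose, so no event is emitted per 100KB allocated.
            .WithGcStats(CaptureLevel.Informational)
            // No CaptureLevel.Errors here, to avoid an event for every exception thrown
            // (assumes the default level is the cheaper counter-based one).
            .WithExceptionStats()
            // JIT stats are deliberately omitted to keep startup cost down.
            .StartCollecting();
    }
}
```

Whether these levels suit your workload depends on allocation and exception rates, so it is worth measuring before and after any change.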

There have been long-running performance issues since .NET Core 3.1 that could see CPU consumption grow over time when long-running trace sessions are used. While many of these issues have been addressed in .NET 6.0, a workaround was identified: periodically stopping and starting (AKA recycling) collectors helps reduce CPU consumption:

    IDisposable collector = DotNetRuntimeStatsBuilder.Default()
        // Recycles all collectors once every day
        .RecycleCollectorsEvery(TimeSpan.FromDays(1))
        .StartCollecting();

While this has been observed to reduce CPU consumption, the technique has also been identified as a possible cause of application instability.

Behaviour on different runtime versions is:

- .NET Core 3.1: recycling verified to cause massive instability; recycling cannot be enabled.

- .NET 5.0: recycling verified to be beneficial, recycling every day enabled by default.

- .NET 6.0+: recycling verified to be less necessary because long-standing issues have been addressed, although some users report it to be beneficial. Disabled by default, but recycling can be enabled.

TL;DR: If you observe increasing CPU over time, try enabling recycling. If you see unexpected crashes after adopting this package, try disabling recycling.

An example docker-compose stack is available in the examples/ folder. Start it with:

    docker-compose up -d

You can then visit http://localhost:3000 to view metrics being generated by a sample application.

The metrics exposed can drive a rich dashboard, giving you graphical insight into the performance of your application (exported dashboard available here).

Further reading:

- The mechanism for listening to runtime events is outlined in the .NET Core 2.2 release notes.

- A partial list of core CLR events is available in the ETW events documentation.