DuckDB is a high-performance analytical database system. It is designed to be fast, reliable, portable, and easy to use. DuckDB provides a rich SQL dialect, with support far beyond basic SQL. DuckDB supports arbitrary and nested correlated subqueries, window functions, collations, complex types (arrays, structs), and more. For more information on using DuckDB, please refer to the DuckDB documentation.

If you want to install and use DuckDB, please see our website for installation and usage instructions.

For CSV files and Parquet files, data import is as simple as referencing the file in the FROM clause:

SELECT * FROM 'myfile.csv';

SELECT * FROM 'myfile.parquet';Refer to our Data Import section for more information.

The website contains a reference of functions and SQL constructs available in DuckDB.

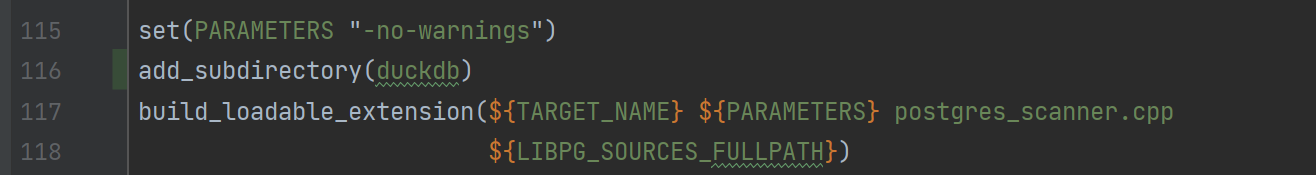

For development, DuckDB requires CMake, Python3 and a C++11 compliant compiler. Run make in the root directory to compile the sources. For development, use make debug to build a non-optimized debug version. You should run make unit and make allunit to verify that your version works properly after making changes. To test performance, you can run BUILD_BENCHMARK=1 BUILD_TPCH=1 make and then perform several standard benchmarks from the root directory by executing ./build/release/benchmark/benchmark_runner. The details of benchmarks are in our Benchmark Guide.

Please also refer to our Build Guide and Contribution Guide.

See the Support Options page.