I work at a company where several custom middleware Docker images (httpd, Tomcat, PHP-FPM, MySQL, etc.) are built for our developers. Those images are used to build and run application images in OpenShift. We have configured our middleware images to write their logs to stdout as JSON.

Recently, I decided to move to ECS to make logs easier to read in Kibana, and your library seems like a perfect fit for Java applications.

First, I replaced log4j/Graylog with log4j2 and followed the steps below to get JSON logs before trying my luck with ecs-logging-java:

setenv.sh

```sh
CLASSPATH=$CATALINA_HOME/lib/log4j-jul-2.13.2.jar:$CATALINA_HOME/lib/log4j-api-2.13.2.jar:$CATALINA_HOME/lib/log4j-core-2.13.2.jar:$CATALINA_HOME/lib/log4j-appserver-2.13.2.jar:$CATALINA_HOME/lib/jackson-annotations-2.11.0.jar:$CATALINA_HOME/lib/jackson-databind-2.11.0.jar:$CATALINA_HOME/lib/jackson-core-2.11.0.jar
LOGGING_CONFIG="-Dlog4j.configurationFile=$CATALINA_BASE/conf/log4j2.xml"
LOGGING_MANAGER="-Djava.util.logging.manager=org.apache.logging.log4j.jul.LogManager"
```

log4j2.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<Configuration status="INFO">
  <Appenders>
    <Console name="Console" target="SYSTEM_OUT">
      <JSONLayout compact="true" eventEol="true"/>
    </Console>
  </Appenders>
  <Loggers>
    <Root level="INFO">
      <AppenderRef ref="Console"/>
    </Root>
  </Loggers>
</Configuration>
```

Access logs are managed through a valve in server.xml and are not controlled by log4j2.
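Setting `LOGGING_MANAGER` in setenv.sh replaces Tomcat's default JUL manager (`org.apache.juli.ClassLoaderLogManager`, which catalina.sh uses when `LOGGING_MANAGER` is unset). A minimal sketch for double-checking which `java.util.logging.LogManager` implementation the JVM actually installed; the class name `ActiveLogManager` is hypothetical, and the check is only meaningful when run with the same `-D` options Tomcat receives.

```java
import java.util.logging.LogManager;

public class ActiveLogManager {
    // Returns the class of the LogManager the JVM actually installed.
    // JUL reads the java.util.logging.manager system property once, when it initializes.
    static String activeLogManagerClass() {
        return LogManager.getLogManager().getClass().getName();
    }

    public static void main(String[] args) {
        // With the LOGGING_MANAGER value from setenv.sh, "active" is expected to be
        // org.apache.logging.log4j.jul.LogManager; with no property set, the JDK
        // falls back to plain java.util.logging.LogManager.
        System.out.println("requested: " + System.getProperty("java.util.logging.manager"));
        System.out.println("active:    " + activeLogManagerClass());
    }
}
```

If "active" still shows the JDK or JULI class, JUL records never reach log4j2, whatever layout is configured.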

When Tomcat starts, logs are properly written to stdout. I think org.apache.logging.log4j.jul.LogManager has something to do with it, since it routes Tomcat's legacy java.util.logging (JUL) output to log4j2:

```
{"instant":{"epochSecond":1588848888,"nanoOfSecond":655000000},"thread":"main","level":"INFO","loggerName":"org.apache.catalina.startup.VersionLoggerListener","message":"Server version name: Apache Tomcat/8.5.51","endOfBatch":false,"loggerFqcn":"org.apache.logging.log4j.jul.ApiLogger","threadId":1,"threadPriority":5}
(…)
{"instant":{"epochSecond":1588848901,"nanoOfSecond":455000000},"thread":"main","level":"INFO","loggerName":"org.apache.catalina.startup.Catalina","message":"Server startup in 10306 ms","endOfBatch":false,"loggerFqcn":"org.apache.logging.log4j.jul.ApiLogger","threadId":1,"threadPriority":5}
```

Nice, but we need ECS fields. Custom fields can be added to JsonLayout...

```xml
<JsonLayout>
  <KeyValuePair key="additionalField1" value="constant value"/>
  <KeyValuePair key="additionalField2" value="$${ctx:key}"/>
</JsonLayout>
```

...but I don't know how to replace the existing ones. Let's give ecs-logging-java a shot!
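To make the difference concrete, a purely illustrative sketch that maps the values from the first JsonLayout line above onto ECS field names (`@timestamp`, `log.level`, `message`, `process.thread.name`, `log.logger`). The class and method names are hypothetical, and the field list is my assumption of what EcsLayout emits, not code from log4j2 or ecs-logging-java.

```java
import java.time.Instant;
import java.util.LinkedHashMap;
import java.util.Map;

public class EcsFieldMapping {
    // Illustrative only: renames a few JsonLayout values to ECS-style keys.
    static Map<String, Object> toEcs(long epochSecond, int nanoOfSecond, String level,
                                     String loggerName, String thread, String message) {
        Map<String, Object> ecs = new LinkedHashMap<>();
        // JsonLayout's "instant" {epochSecond, nanoOfSecond} becomes one ISO-8601 timestamp
        ecs.put("@timestamp", Instant.ofEpochSecond(epochSecond, nanoOfSecond).toString());
        ecs.put("log.level", level);
        ecs.put("message", message);
        ecs.put("process.thread.name", thread);
        ecs.put("log.logger", loggerName);
        return ecs;
    }

    public static void main(String[] args) {
        // Values copied from the first JsonLayout line above
        System.out.println(toEcs(1588848888L, 655000000, "INFO",
                "org.apache.catalina.startup.VersionLoggerListener", "main",
                "Server version name: Apache Tomcat/8.5.51"));
    }
}
```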

setenv.sh

```sh
CLASSPATH=$CATALINA_HOME/lib/log4j-jul-2.13.2.jar:$CATALINA_HOME/lib/log4j-api-2.13.2.jar:$CATALINA_HOME/lib/log4j-core-2.13.2.jar:$CATALINA_HOME/lib/log4j-appserver-2.13.2.jar:$CATALINA_HOME/lib/jackson-annotations-2.11.0.jar:$CATALINA_HOME/lib/jackson-databind-2.11.0.jar:$CATALINA_HOME/lib/jackson-core-2.11.0.jar:$CATALINA_HOME/lib/log4j2-ecs-layout-0.3.0.jar
```

log4j2.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<Configuration status="INFO">
  <Appenders>
    <Console name="Console" target="SYSTEM_OUT">
      <EcsLayout serviceName="Tomcat" eventDataset="catalina.out" stackTraceAsArray="true"/>
    </Console>
  </Appenders>
  <Loggers>
    <Root level="INFO">
      <AppenderRef ref="Console"/>
    </Root>
  </Loggers>
</Configuration>
```

But now, none of Tomcat's startup messages show up in the logs anymore. Has the bridge with java.util.logging been lost?

I did manage to get some output by raising the verbosity level to DEBUG...

```xml
<Configuration status="DEBUG">
(...)
<Root level="DEBUG">
```

...but the only messages I get are about log4j2's inner workings, and they are not even formatted as JSON:

```
2020-05-07 17:33:15,151 main DEBUG Apache Log4j Core 2.13.2 initializing configuration XmlConfiguration[location=/opt/tomcat/conf/log4j2.xml]
(…)
2020-05-07 17:33:18,554 main DEBUG EcsLayout$Builder(serviceName="Tomcat", eventDataset="catalina.out", includeMarkers="null", stackTraceAsArray="true", ={}, includeOrigin="null", charset="null", footerSerializer=null, headerSerializer=null, Configuration(/opt/tomcat/conf/log4j2.xml), footer="null", header="null")
```
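One way to separate "the JUL bridge is gone" from "the layout is failing" might be a standalone reproduction outside Tomcat. A minimal sketch, assuming the jars from setenv.sh are on the classpath: it reports whether a few expected classes can be found, then logs one JUL record under a Tomcat logger name. The class name `JulBridgeCheck` is hypothetical, and the EcsLayout package name is my assumption.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.logging.Logger;

public class JulBridgeCheck {
    // Classes expected from the jars listed in setenv.sh.
    static final String[] EXPECTED = {
        "org.apache.logging.log4j.jul.LogManager",      // log4j-jul
        "org.apache.logging.log4j.core.LoggerContext",  // log4j-core
        "co.elastic.logging.log4j2.EcsLayout"           // log4j2-ecs-layout (assumed package)
    };

    // Reports, for each class name, whether it can be located on the classpath.
    static Map<String, Boolean> findClasses(String... names) {
        Map<String, Boolean> found = new LinkedHashMap<>();
        for (String name : names) {
            try {
                // initialize = false: locate and load the class without running static initializers
                Class.forName(name, false, JulBridgeCheck.class.getClassLoader());
                found.put(name, true);
            } catch (ClassNotFoundException | LinkageError e) {
                found.put(name, false);
            }
        }
        return found;
    }

    public static void main(String[] args) {
        findClasses(EXPECTED).forEach((name, ok) ->
                System.out.println((ok ? "found   " : "MISSING ") + name));
        // Log through JUL under a Tomcat logger name; if the bridge works, this record
        // should come out of log4j2's Console appender formatted by the configured layout.
        Logger.getLogger("org.apache.catalina.startup.Catalina").info("JUL bridge smoke test");
    }
}
```

With JDK 11+ it can be launched directly from source, for example `java -cp "$CLASSPATH" -Djava.util.logging.manager=org.apache.logging.log4j.jul.LogManager -Dlog4j.configurationFile=/opt/tomcat/conf/log4j2.xml JulBridgeCheck.java`; on older JDKs, compile it with `javac` first.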

I noticed that a module was developed specifically for java.util.logging in ecs-logging-java, but I do not want to go back to java.util.logging, as I believe log4j2 is the way to go now!

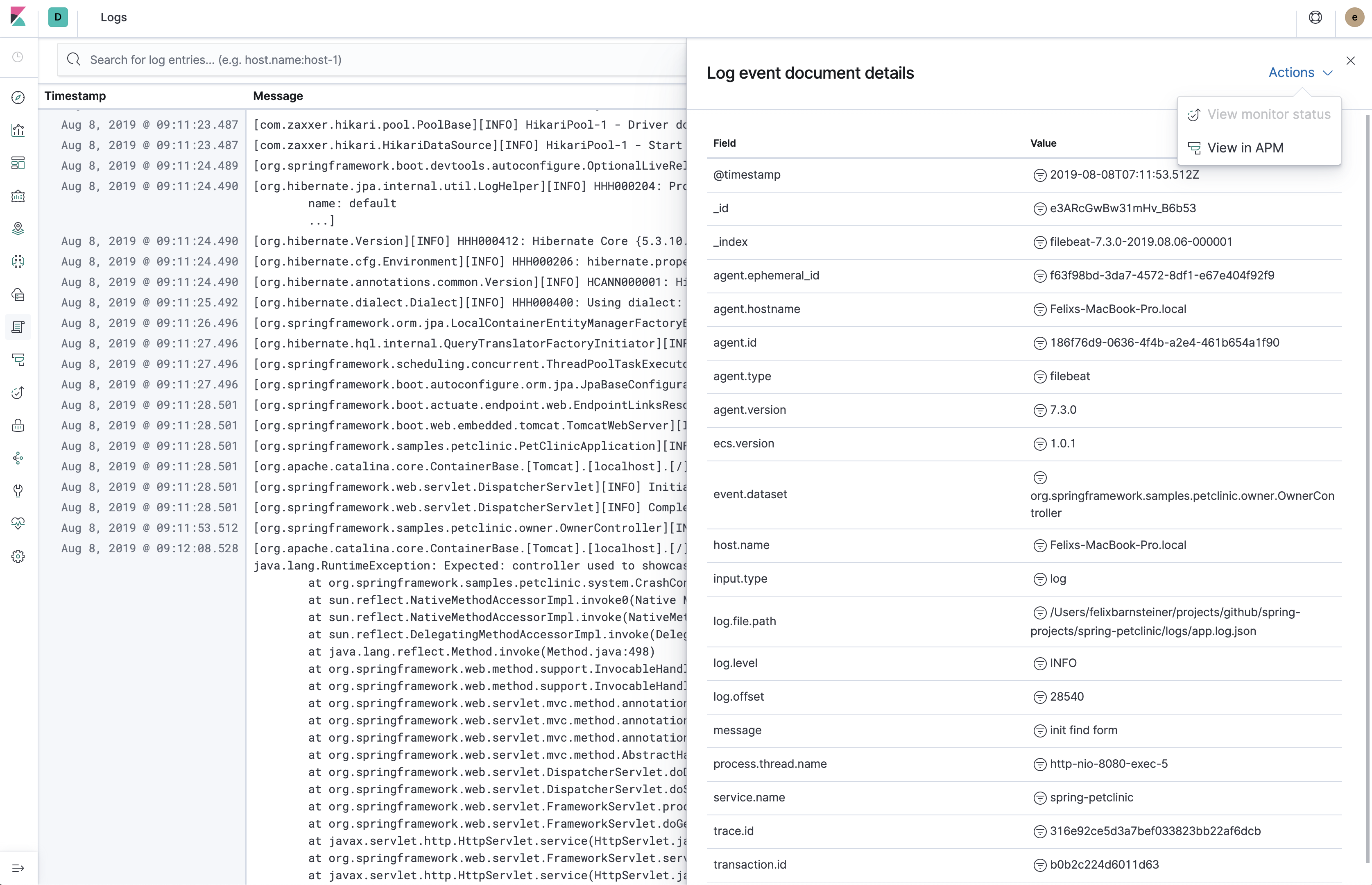

Furthermore, your home page shows a screenshot with traces of a Tomcat startup, so maybe I have missed something somewhere, or has a piece of ecs-logging-java been broken?

Thanks :)