extremelyd1 / dungeons-and-drawing

Game written in Java with LWJGL and OpenGL

License: MIT License

At the moment the player does not know that the map exists. It can move freely in any direction.

The player should of course not be able to walk through solid tiles.

I am experimenting a bit with the GitHub system. While this problem could be useful to solve, I realize it is not our highest priority right now, and it is a fix I could easily do myself!

Problem

There seems to be a difference between the vertex positions in Blender and the vertex coordinates we have. When creating a model in Blender, everything is as expected. However, when importing the same model into our engine, the model is rotated -90 degrees around the x-axis (for now, this is fixed simply by rotating the model by 90 degrees).

Expected cause:

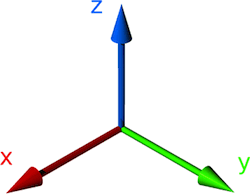

Blender probably interprets the coordinates differently than we do. I think the axes are different. To be more precise, in the default camera position and orientation, I think Blender uses the Z-axis as the "vertical" axis and the Y-axis as the "depth" axis, while for us it is the other way around (see picture below).
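If this guess is right, the fix is a fixed change of basis applied once at load time, rather than a per-model rotation. A sketch, assuming both Blender and our engine use right-handed coordinates and that Blender's +Z (up) should map to our +Y: the standard Z-up to Y-up conversion is a -90° rotation about the x-axis.

```latex
R_x(-90^\circ) =
\begin{pmatrix} 1 & 0 & 0 \\ 0 & \cos(-90^\circ) & -\sin(-90^\circ) \\ 0 & \sin(-90^\circ) & \cos(-90^\circ) \end{pmatrix}
= \begin{pmatrix} 1 & 0 & 0 \\ 0 & 0 & 1 \\ 0 & -1 & 0 \end{pmatrix},
\qquad
R_x(-90^\circ) \begin{pmatrix} x \\ y \\ z \end{pmatrix} = \begin{pmatrix} x \\ z \\ -y \end{pmatrix}
```

Blender's up vector (0, 0, 1) becomes (0, 1, 0), and Blender's depth axis (0, 1, 0) becomes (0, 0, -1), which points into the screen for a default OpenGL camera. Whether +90° or -90° is right depends on which side of the model should face the camera, so it is worth checking against a test model.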

We need a validation tool for the A*-search algorithm, to show that it works correctly outside of the game. The validation tool will be a Swing GUI in which the start and target of the A*-search can be selected in a grid and obstacles can be placed. The GUI will then show how the algorithm found its target.

Validator should display:

I was trying to come up with a way to design animations in a simple way. Repeating animations can of course be done by using (for example) the sine function, but it would also be cool to have a keyframe-like system where you define pairs of a timestamp and a value, and the engine interpolates the value between keyframes. For example:

Keyframe 1: At second 0, scale = 100;

Keyframe 2: At second 10, scale = 0;

The engine then makes sure that at second 1, the scale is 90 and at second 2, the scale is 80, etc.

This could for example be used to fade in and fade out GUI graphics, but could also be used to define a walkcycle for the character.
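A minimal sketch of such a keyframe track, assuming linear interpolation and float values. The `KeyframeTrack` name and its methods are hypothetical. Before the first keyframe the first value is held, and after the last keyframe the last value is held.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical keyframe track: linearly interpolates a float value between (time, value) pairs. */
final class KeyframeTrack {
    // Each entry is {time, value}; the list is kept sorted by time.
    private final List<float[]> keyframes = new ArrayList<>();

    /** Adds a keyframe at the given time (seconds); returns this so calls can be chained. */
    KeyframeTrack add(float time, float value) {
        int i = 0;
        while (i < keyframes.size() && keyframes.get(i)[0] < time) {
            i++;
        }
        keyframes.add(i, new float[] {time, value});
        return this;
    }

    /** Returns the interpolated value at the given time. */
    float valueAt(float time) {
        if (keyframes.isEmpty()) {
            throw new IllegalStateException("no keyframes");
        }
        float[] first = keyframes.get(0);
        if (time <= first[0]) {
            return first[1]; // hold the first value before the track starts
        }
        for (int i = 1; i < keyframes.size(); i++) {
            float[] a = keyframes.get(i - 1);
            float[] b = keyframes.get(i);
            if (time <= b[0]) {
                float t = (time - a[0]) / (b[0] - a[0]); // 0 at keyframe a, 1 at keyframe b
                return a[1] + t * (b[1] - a[1]);
            }
        }
        return keyframes.get(keyframes.size() - 1)[1]; // hold the last value afterwards
    }
}
```

With the example above, `new KeyframeTrack().add(0f, 100f).add(10f, 0f)` drops the scale by 10 per second. Other easing curves (for example a smoothstep for GUI fades) could replace the linear `t` without changing the rest of the class.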

When I define all properties inside the material, the isColored boolean is set to true. In any other case, the boolean is left at false. My question would be whether this behaviour is intended and how I can easily create Materials for our PLY models.

Current situation

Right now we have one shader that is used by Renderer to render all our graphics. This shader is partially customized by supplying the shader with two integers that are being used as booleans (i.e. either have a value of 1 or 0). These integers are used to differentiate between texture or no texture and one solid color or vertex colors.

Problem

With the extension of our game, we would like to add GUI elements (buttons, debug information, text, pop-ups, etc). Most of these have a 2D appearance. That is, they might be 3D objects (for example the axis for the debug window), but we probably do not want them to be directly part of the scene. So the camera or the lights should have less influence or even no influence at all on these assets.

Another thing we want to add is the morphing shader. This as well would require some refactoring.

Options

I think it is important to decide early on how we are going to solve this problem. I foresee some options:

Proposal

I think we should combine 1 and 2. I would keep everything that is part of the 3D scene together, so I would rather not render objects that use the morphing shader separately, and instead implement morphing as an extra customizable option inside the current shader. Otherwise I feel it will be a challenge to make these objects part of the scene (i.e. to ensure that they are not drawn on top of the scene and are still influenced by shadows and by the surrounding lighting). Rendering the 2D GUI after the 3D scene, however, seems like a good idea, as this also simplifies positioning the GUI (we simply draw over the 3D scene we just rendered).

Right now we have to pass each light array separately to the render method. This makes the method very large and ugly. Perhaps a better solution would be to make an object which stores all the lights, which we pass to the render method. This reduces the number of parameters and makes it cleaner.
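A sketch of such a container, assuming the scene has a list of point lights, at most one directional light and an ambient strength. `SceneLights`, `PointLight` and `DirectionalLight` here are placeholders for illustration; the engine's own light classes would be used instead.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Placeholder point light for this sketch. */
final class PointLight {
    final float x, y, z, intensity;
    PointLight(float x, float y, float z, float intensity) {
        this.x = x; this.y = y; this.z = z; this.intensity = intensity;
    }
}

/** Placeholder directional light for this sketch. */
final class DirectionalLight {
    final float dirX, dirY, dirZ;
    DirectionalLight(float dirX, float dirY, float dirZ) {
        this.dirX = dirX; this.dirY = dirY; this.dirZ = dirZ;
    }
}

/** Bundles every light of a scene so render(...) takes one parameter instead of one array per light type. */
final class SceneLights {
    private final List<PointLight> pointLights = new ArrayList<>();
    private DirectionalLight directionalLight; // null when the scene has no directional light
    private float ambientStrength = 0.3f;      // placeholder default

    SceneLights addPointLight(PointLight light) {
        pointLights.add(light);
        return this;
    }

    /** Read-only view, so the renderer cannot modify the scene's lights by accident. */
    List<PointLight> getPointLights() { return Collections.unmodifiableList(pointLights); }

    void setDirectionalLight(DirectionalLight light) { directionalLight = light; }
    DirectionalLight getDirectionalLight() { return directionalLight; }

    void setAmbientStrength(float strength) { ambientStrength = strength; }
    float getAmbientStrength() { return ambientStrength; }
}
```

The render method could then take, for example, `(camera, scene, lights)`; adding a new light type later only changes `SceneLights` and the shader upload code, not every call site.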

The game engine loop still does not handle updates with a notion of delta time. It sometimes issues updates in quick succession, each with a relatively high delta time.

This is especially useful when debugging and watching the console.

I would suggest a key like F11 for this, and windowed mode (not full screen) as the default for development purposes?

To support both a debugging camera and a gameplay camera, I think it would be best to create other types of cameras, which all extend the basic Camera class. One camera could be for a free-flying mode, just like we have now (in the early part of the game), where we can control the camera ourselves. Another type of camera could be one that follows the player.
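A sketch of that hierarchy, assuming each camera is updated once per update with the delta time. `Vec3` is a minimal stand-in (the engine may use JOML's `Vector3f`), and the method names and the default follow offset are hypothetical. The single-argument `FollowCamera` constructor delegates to the full one with `this(...)`, so the offset can never be left uninitialised.

```java
/** Minimal mutable 3D vector for this sketch. */
final class Vec3 {
    float x, y, z;
    Vec3(float x, float y, float z) { this.x = x; this.y = y; this.z = z; }
}

/** Base class: every camera has a position and updates itself once per update. */
abstract class Camera {
    protected final Vec3 position = new Vec3(0f, 0f, 0f);
    Vec3 getPosition() { return position; }
    /** deltaTime: seconds since the previous update. */
    abstract void update(float deltaTime);
}

/** Free-flying debug camera: input handling sets a velocity, update() integrates it. */
final class FreeCamera extends Camera {
    private final Vec3 velocity = new Vec3(0f, 0f, 0f);

    void setVelocity(float x, float y, float z) {
        velocity.x = x; velocity.y = y; velocity.z = z;
    }

    @Override
    void update(float deltaTime) {
        position.x += velocity.x * deltaTime;
        position.y += velocity.y * deltaTime;
        position.z += velocity.z * deltaTime;
    }
}

/** Camera that keeps a fixed offset from a target, e.g. the player's position. */
final class FollowCamera extends Camera {
    private final Vec3 target;
    private final Vec3 offset;

    /** Delegates to the full constructor; the default offset is a placeholder value. */
    FollowCamera(Vec3 target) {
        this(target, new Vec3(0f, 10f, 10f));
    }

    FollowCamera(Vec3 target, Vec3 offset) {
        this.target = target;
        this.offset = offset;
    }

    @Override
    void update(float deltaTime) {
        position.x = target.x + offset.x;
        position.y = target.y + offset.y;
        position.z = target.z + offset.z;
    }
}
```

The game can then hold a `Camera` reference and switch between a `FreeCamera` and a `FollowCamera` (for example with a debug key) without the renderer knowing which one is active.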

At this point we can start implementing an actual level. We need to get a few things done before we can fix this issue:

- Level class.
- FollowCamera to follow the player.

The GameEngine loop method calculates when to call the update method based on TARGET_UPS, which denotes the target number of updates per second. Since the update method is driven by this fixed rate instead of receiving a delta time, a player object, for example, will walk at a speed that depends on how fast the game runs.

When passing the delta time, that is the time since the last update, game objects can update independently from the speed of the game.
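A minimal sketch of what that looks like, assuming speeds are stored in world units per second. `Player` and `DeltaTimer` are hypothetical names for illustration; the loop would call `timer.nextDelta()` once per iteration and pass the result to every update method.

```java
/** Hypothetical player that moves along x at a fixed speed, independent of the update rate. */
final class Player {
    private final float speed; // world units per second
    private float x;

    Player(float speed) { this.speed = speed; }

    /** deltaTime: seconds since the previous update. */
    void update(float deltaTime) { x += speed * deltaTime; }

    float getX() { return x; }
}

/** Measures the time between consecutive calls, in seconds. */
final class DeltaTimer {
    private long lastNanos = System.nanoTime();

    float nextDelta() {
        long now = System.nanoTime();
        float delta = (now - lastNanos) / 1_000_000_000f;
        lastNanos = now;
        return delta;
    }
}
```

A player updated 60 times with a delta of 1/60 s ends up (up to float rounding) at the same place as one updated 30 times with 1/30 s, which is exactly the independence from game speed described above.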

The GameEngine loop renders continuously without letting the Thread sleep, which causes the program to hoard all of the system's resources to render. We want to have the engine render at a certain FPS that can be capped in order to prevent this.

See LWJGL 3 Tutorial
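One way to cap the frame rate, sketched independently of the tutorial: after each frame, sleep until the next frame slot. `FrameLimiter` and `sync()` are hypothetical names. Enabling vsync with GLFW's `glfwSwapInterval(1)` is another common way to limit rendering to the monitor's refresh rate.

```java
/**
 * Caps the loop at a target frame rate by sleeping until the next frame slot.
 * Hypothetical helper; call sync() once at the end of every loop iteration.
 */
final class FrameLimiter {
    private final long frameNanos;
    private long nextFrame = System.nanoTime();

    FrameLimiter(int targetFps) {
        this.frameNanos = 1_000_000_000L / targetFps;
    }

    void sync() throws InterruptedException {
        nextFrame += frameNanos;
        long remaining = nextFrame - System.nanoTime();
        if (remaining > 0) {
            // Hand the CPU back instead of rendering again immediately
            Thread.sleep(remaining / 1_000_000L, (int) (remaining % 1_000_000L));
        } else {
            // Already late: reset instead of rendering a burst of frames to catch up
            nextFrame = System.nanoTime();
        }
    }
}
```

Scheduling against `nextFrame` rather than sleeping a fixed amount after each frame keeps the average rate close to the target even when individual frames take different amounts of time.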

Implementing loading a map from file and defining a file format to use in the level editor

The level initialisation should not happen in the constructor, since the level class is already created at startup. Instead, all initialisation code should happen in the init() method.

At the moment, when switching to another level, we do not call the init method of that level. This should be done.
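A sketch of a small level manager that makes calling `init()` part of every switch, assuming levels expose `init()`, `update()` and `cleanup()`. The `Level` interface shape and the `LevelManager` name are hypothetical; a class like this could also act as the layer between GameEngine and IGameLogic that handles levels.

```java
/** Hypothetical level contract: the constructor stays cheap, all setup happens in init(). */
interface Level {
    /** Loads the map, places lights, creates the player, etc. */
    void init();

    void update(float deltaTime);

    /** Releases resources (meshes, textures) when the level is left. */
    void cleanup();
}

/** Owns the active level and guarantees init() runs whenever a level becomes active. */
final class LevelManager {
    private Level current;

    void switchTo(Level next) {
        if (current != null) {
            current.cleanup();
        }
        current = next;
        current.init(); // the step that is currently missing when switching levels
    }

    void update(float deltaTime) {
        if (current != null) {
            current.update(deltaTime);
        }
    }

    Level getCurrent() { return current; }
}
```

Because levels are created at startup but only initialised on `switchTo`, switching back to a level that was left earlier re-runs its setup after `cleanup()`.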

We need to agree on a map file format in which we can define what a map looks like. A hard requirement is a definition of which tile goes where. This format needs to be loaded into a map loader (and possibly exported by a map builder).

- x y tile_type
- x y z light_type light_color
- x y orientation

Some more considerations: each level will contain logic for which puzzle has which solution and when enemies become 'active'. Also, all the post-puzzle actions need to be defined somewhere. I think it is best to do this in a separate Level class, such that each new level has its own class with all this logic.

The file format is simply a neat way of loading a level without hardcoding every single tile.
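A sketch of a reader for the `x y tile_type` lines proposed in this issue, assuming whitespace-separated fields and one tile per line. Skipping blank lines and `#` comments, and the `TileEntry`/`TileFileParser` names, are assumptions; the light and orientation lines would need their own parsing.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.util.ArrayList;
import java.util.List;

/** One parsed tile line: grid position plus tile type name. */
final class TileEntry {
    final int x, y;
    final String type;

    TileEntry(int x, int y, String type) {
        this.x = x; this.y = y; this.type = type;
    }
}

/** Hypothetical parser for "x y tile_type" lines. */
final class TileFileParser {
    static List<TileEntry> parse(Reader source) throws IOException {
        List<TileEntry> tiles = new ArrayList<>();
        BufferedReader reader = new BufferedReader(source);
        String line;
        int lineNumber = 0;
        while ((line = reader.readLine()) != null) {
            lineNumber++;
            line = line.trim();
            if (line.isEmpty() || line.startsWith("#")) {
                continue; // blank lines and comments are allowed (assumption)
            }
            String[] parts = line.split("\\s+");
            if (parts.length != 3) {
                throw new IOException("line " + lineNumber + ": expected 'x y tile_type'");
            }
            try {
                tiles.add(new TileEntry(Integer.parseInt(parts[0]), Integer.parseInt(parts[1]), parts[2]));
            } catch (NumberFormatException e) {
                throw new IOException("line " + lineNumber + ": invalid coordinate", e);
            }
        }
        return tiles;
    }
}
```

Reporting the line number on malformed input keeps hand-edited or level-editor-exported files easy to debug.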

As of right now, the window cannot be resized. It has a fixed size. It would be preferable to be able to resize the window to fit your own needs.

When the window is resized, the player might gain an 'advantage' since he/she can see more of the map. I don't think this is a big issue in our case, since the advantage would be small.

If we can work this out in a separate branch first, we can all see how this would change the code (and prevent merge conflicts).

Problem

When resizing the window such that it has another ratio than the original 16:9, the image is distorted.

Expected cause

One of the matrices important for rendering is probably not updated. I suspect the view matrix, projection matrix or both. The code in the GameWindow class has now been properly adjusted, but we still have to adjust some code in at least the Render class I think.
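If the projection matrix is the culprit, the aspect-ratio term is the part that has to change on resize. A sketch of a standard OpenGL-style perspective matrix (same form as `gluPerspective`, column-major); the `Projection` class name is hypothetical and the engine may build this with JOML instead.

```java
/** Builds a perspective projection matrix in OpenGL's column-major layout. */
final class Projection {
    static float[] perspective(float fovYRadians, float aspect, float near, float far) {
        float f = (float) (1.0 / Math.tan(fovYRadians / 2.0));
        float[] m = new float[16];                 // all other entries stay zero
        m[0] = f / aspect;                         // column 0, row 0: the only aspect-dependent entry
        m[5] = f;                                  // column 1, row 1
        m[10] = (far + near) / (near - far);       // column 2, row 2
        m[11] = -1f;                               // column 2, row 3
        m[14] = (2f * far * near) / (near - far);  // column 3, row 2
        return m;
    }
}
// On resize (for example from a GLFW framebuffer-size callback), the renderer would call
// glViewport(0, 0, width, height) and rebuild this matrix with aspect = (float) width / height.
```

If the matrix is built once with a 16:9 aspect and never rebuilt, any other window ratio stretches the image horizontally or vertically, which matches the distortion described.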

What is the problem

Lighting does not always work. Some places do not seem to be affected by the lights. I expect this to be a computational error.

Reproduce the problem

Open the Engine and make the point light orange. The tree is partially lit as expected. Now make the light a blue color and the green part of the tree model does not seem affected by the light.
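Before looking for a computational error, it may be worth ruling out that this is expected behaviour. In the usual Phong-style diffuse term, the light colour and the surface colour are multiplied per channel, so a pure blue light (0, 0, 1) on a pure green surface (0, 1, 0) contributes nothing, while an orange light still has a green component. A sketch, assuming our shader multiplies colours this way (`Lighting.diffuse` is a hypothetical helper):

```java
/** Per-channel diffuse term: lightColor * albedo * max(N.L, 0). */
final class Lighting {
    static float[] diffuse(float[] lightColor, float[] albedo, float nDotL) {
        float k = Math.max(nDotL, 0f); // surfaces facing away from the light get nothing
        return new float[] {
            lightColor[0] * albedo[0] * k,
            lightColor[1] * albedo[1] * k,
            lightColor[2] * albedo[2] * k,
        };
    }
}
```

If the green part of the tree stays unlit under a blue light but lights up under a white or cyan one, the maths is probably fine and it is the colour combination that yields black.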

Maybe it is nice to have a static class with all the colors we use in the GUI, so we can quickly experiment with different styles. Right now, the default colors are defined as static variables at the top of the GUI classes.
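A sketch of such a class, assuming colours are RGBA floats in [0, 1]. `Color`, `GuiColors` and every constant name and value here are placeholders, not the game's actual palette. Using an immutable `Color` instead of `static final float[]` arrays prevents a GUI class from accidentally modifying a shared colour.

```java
/** Immutable RGBA colour with components in [0, 1]. */
final class Color {
    final float r, g, b, a;

    Color(float r, float g, float b, float a) {
        this.r = r; this.g = g; this.b = b; this.a = a;
    }
}

/** Single place for the GUI palette; all values are placeholders. */
final class GuiColors {
    private GuiColors() { } // constants only, no instances

    static final Color BUTTON_BACKGROUND = new Color(0.20f, 0.20f, 0.25f, 0.90f);
    static final Color BUTTON_HOVER = new Color(0.30f, 0.30f, 0.38f, 0.90f);
    static final Color TEXT = new Color(1f, 1f, 1f, 1f);
    static final Color TEXT_BACKGROUND = new Color(0f, 0f, 0f, 0.5f);
}
```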

Right now, the camera rotation vector only contains the x and z rotation. But it would be nice to have the directional vector available. So we need some kind of computation to calculate the directional vector.

Example

If the vector is rotated 45 degrees around the z-axis, then the direction vector would be (1, 1, 0) (not normalised). The vector points in the viewing direction.

With this, we can easily add the (scaled) direction vector to the position to get a new location.
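A sketch of that computation. The starting vector (1, 0, 0) and the rotation order (first around z, then around x) are assumptions chosen so that the example above comes out as expected; the engine's actual camera convention may differ. `CameraDirection` and its methods are hypothetical names.

```java
/** Hypothetical helper turning the camera's x and z rotation into a unit direction vector. */
final class CameraDirection {
    /** Rotates (1, 0, 0) by rzDegrees around z, then by rxDegrees around x. */
    static float[] fromRotation(float rxDegrees, float rzDegrees) {
        double rx = Math.toRadians(rxDegrees);
        double rz = Math.toRadians(rzDegrees);
        // After the z rotation: (cos rz, sin rz, 0)
        double x = Math.cos(rz);
        double y = Math.sin(rz);
        // The x rotation keeps x and turns (y, 0) into (y cos rx, y sin rx)
        return new float[] {(float) x, (float) (y * Math.cos(rx)), (float) (y * Math.sin(rx))};
    }

    /** Returns position + direction * distance, i.e. a new location along the viewing direction. */
    static float[] move(float[] position, float[] direction, float distance) {
        return new float[] {
            position[0] + direction[0] * distance,
            position[1] + direction[1] * distance,
            position[2] + direction[2] * distance,
        };
    }
}
```

`fromRotation(0f, 45f)` gives the normalised form of (1, 1, 0). Because the result is always unit length, `distance` in `move` is an actual distance in world units.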

In the PLYLoader class, we switch around the y and z coordinates in order to change the orientation of the coordinates we use in comparison to the one blender uses.

We use the y axis for up, while Blender uses the z axis for up.

However, we do not account for this change in loading the normal coordinates. These are still in the Blender orientation.
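A sketch of applying one conversion to both positions and normals while loading, assuming the data arrives as a flat `x, y, z, x, y, z, ...` float array. Here the conversion is the rotation (x, y, z) to (x, z, -y) rather than a plain swap: a rotation keeps normals valid and unit length, whereas swapping y and z without negating one of them is a mirror, which also flips triangle winding (and therefore back-face culling). `BlenderAxes` is a hypothetical name.

```java
/** Hypothetical helper converting Blender's Z-up vectors to our Y-up convention. */
final class BlenderAxes {
    /** Converts the vector starting at offset in place: (x, y, z) -> (x, z, -y). */
    static void toYUp(float[] data, int offset) {
        float y = data[offset + 1];
        data[offset + 1] = data[offset + 2];
        data[offset + 2] = -y;
    }

    /** Converts every vector in a flat x, y, z, ... array; use it for positions and normals alike. */
    static void convertAll(float[] data) {
        for (int i = 0; i + 2 < data.length; i += 3) {
            toYUp(data, i);
        }
    }
}
```

In the PLYLoader this would be called once on the position array and once on the normal array, so both end up in the same orientation.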

When I try to load the game in full screen mode (fullScreen = true), the window still covers only a small portion of the screen.

It would be nice to be able to enable/disable the debug menu in-game.

Possibly with the key F3?

The implementation, done according to the tutorial (Shadows, not Cascaded Shadows), does not work.

Problem

When drawing on the DrawingCanvas, we draw lines in between consecutive positions of the mouse. However, with certain patterns (sharp turns) this results in very jagged edges.

Possible solution

We might be able to solve it by changing the stroke style. I think playing around with this setting (from the NanoVG API) might help:

// Sets how sharp path corners are drawn.
// Can be one of NVG_MITER (default), NVG_ROUND, NVG_BEVEL.
void nvgLineJoin(NVGcontext* ctx, int join);

As the tutor noted: the orientation of the player seems flipped when walking diagonally.

This can be used to show texts like

"Press E to interact"

(in the tutorial level)

"Use w,a,s,d to move"

It's meant as a non-intrusive way of providing information to the player, i.e. it does not pause the game.

This text can then also be used in the debug menu.

When the player walks over a tile, it can trigger the start of a puzzle. Thus we need some way of attaching event listeners to a tile. Of course this should not be done for every tile, so we need an efficient way to attach listeners only to the tiles that need them.
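A sketch of one way to keep this cheap: store listeners in a map keyed by tile position, so tiles without listeners cost nothing and a lookup is a single hash access. `TileListener` and `TileTriggers` are hypothetical names, and `playerEntered` is assumed to be called only when the player moves onto a new tile, not every frame while standing on it.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical callback fired when the player steps onto a tile. */
interface TileListener {
    void onEnter(int x, int y);
}

/** Stores listeners only for tiles that have them. */
final class TileTriggers {
    private final Map<Long, List<TileListener>> listeners = new HashMap<>();

    /** Packs (x, y) into one long key; works for negative coordinates too. */
    private static long key(int x, int y) {
        return ((long) x << 32) | (y & 0xffffffffL);
    }

    void add(int x, int y, TileListener listener) {
        listeners.computeIfAbsent(key(x, y), k -> new ArrayList<>()).add(listener);
    }

    /** Call when the player moves onto (x, y); does nothing for ordinary tiles. */
    void playerEntered(int x, int y) {
        List<TileListener> forTile = listeners.get(key(x, y));
        if (forTile != null) {
            for (TileListener listener : forTile) {
                listener.onEnter(x, y);
            }
        }
    }
}
```

A level could register its puzzle trigger with something like `triggers.add(4, 7, (x, y) -> startPuzzle())`, where `startPuzzle` stands for whatever the level does.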

Now that we can have levels in the game, we can start building a 'main menu'. This menu should simply be a nice title screen with the title of the game and a way of starting the first level.

The first thing to do is to figure out how we are going to design this menu. First we should come up with some concept art/ideas. Then, when we all agree, we can build the actual menu level.

The drawing interface is an important component of the game. It should support all the puzzles we want to create, therefore a good discussion is needed to write down all the requirements for this component.

The drawing interface should be 2D where the user has a clear space to draw an object. This space should not be too large since the user needs to use all the available space (for better network results).

Since the user can find "blueprints" in the game which act as hints, we need some kind of interface to show these hints. But because we haven't yet fully decided how to implement this, we cannot decide how to show this to the user at this point.

The network outputs a map of all classes and a percentage of how likely it thinks the drawn image is that class. This needs to be interpreted in some way and shown back to the user. Based on this outcome, the game should be notified to do "something", like an action (e.g. a wall falling down, blocks appearing, monsters spawning, etc.)
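One simple way to interpret that output, as a sketch: take the most likely class and only accept it if its probability reaches a confidence threshold; a puzzle then checks whether the accepted class is the one it expects. `PredictionInterpreter`, its methods and the threshold parameter are hypothetical, and a sensible threshold would have to be tuned against the trained network.

```java
import java.util.Map;
import java.util.Optional;

/** Hypothetical helper that turns class probabilities into a game decision. */
final class PredictionInterpreter {
    /** Most likely class, or empty if even the best class is below minConfidence. */
    static Optional<String> bestMatch(Map<String, Float> probabilities, float minConfidence) {
        String best = null;
        float bestProbability = -1f;
        for (Map.Entry<String, Float> entry : probabilities.entrySet()) {
            if (entry.getValue() > bestProbability) {
                best = entry.getKey();
                bestProbability = entry.getValue();
            }
        }
        if (best != null && bestProbability >= minConfidence) {
            return Optional.of(best);
        }
        return Optional.empty();
    }

    /** Puzzle check: did the player draw the expected object with enough confidence? */
    static boolean solves(Map<String, Float> probabilities, String expected, float minConfidence) {
        return bestMatch(probabilities, minConfidence).map(expected::equals).orElse(false);
    }
}
```

Returning `Optional.empty()` for uncertain drawings gives the game a clear "not recognised, try again" case instead of triggering an action on a weak guess.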

There needs to be a system of tiles and a map. This system needs to be versatile enough to support all the operations we need for this game. This issue will describe these requirements and (hopefully) try to document all specifications.

Map

The map object provides an easy interface for querying the map for tiles. The player might want to see whether the tile in front of him is blocked or not, in order to see if the player can walk there.

Player.update() {
    if (!map.getTile(position.translate(movement)).isBlocked()) {
        // do movement
    }
}

The map will supply the render class with all the tiles and a method to convert the x, y coordinates in the map array to actual world coordinates.

Actually, this is very simple, since our tiles will have a width of 1 unit. So their positions in the array are the actual world coordinates.

The map will have a load method to load the map. MapLoader will be a simple interface, so that we can easily create implementations for this.

Tile

A simple tile consists of:

- Mesh

This defines the most basic tile there can be. This tile can be used to create "fancier" tiles that contain more operations.
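A sketch of the map, tile and loader described above, assuming tiles are 1 unit wide so array indices equal world coordinates. The class is called `TileMap` here (the issue calls it Map) only to avoid clashing with `java.util.Map`. The mesh field is left out, and `canWalk` is a hypothetical helper that treats missing tiles and positions outside the map as blocked, so an incomplete map does not cause errors when the player moves.

```java
/** Hypothetical minimal tile; the real one would also hold a Mesh. */
class Tile {
    private final boolean blocked;

    Tile(boolean blocked) { this.blocked = blocked; }

    boolean isBlocked() { return blocked; }
}

/** Grid of tiles; tiles[x][y], and since tiles are 1 unit wide the indices are world coordinates. */
final class TileMap {
    private final Tile[][] tiles;

    TileMap(int width, int height) { tiles = new Tile[width][height]; }

    void setTile(int x, int y, Tile tile) { tiles[x][y] = tile; }

    /** Returns the tile at (x, y), or null outside the map or where no tile is placed. */
    Tile getTile(int x, int y) {
        if (x < 0 || y < 0 || x >= tiles.length || y >= tiles[0].length) {
            return null;
        }
        return tiles[x][y];
    }

    /** Missing tiles and out-of-bounds positions count as blocked. */
    boolean canWalk(int x, int y) {
        Tile tile = getTile(x, y);
        return tile != null && !tile.isBlocked();
    }
}

/** Loads a map from some source, e.g. a file in the agreed map format. */
interface MapLoader {
    TileMap load() throws java.io.IOException;
}
```

`Player.update()` could then check `map.canWalk(nextX, nextY)` instead of dereferencing `getTile(...)` directly, which avoids a null tile ending the game.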

The text component can render text to the screen, but the background of the text image is not transparent. There should be an option to set the background colour, I think.

We should change some of the mouse interaction to also make use of the KeyBinding system. Especially in the 2D GUI, this is not yet the case.

Right now, the GameWindow object is being passed around through almost every class. I think that this introduces a lot of dependencies between interfaces/methods which can (and I think should) be avoided.

The GameWindow object is already a singleton, but it is not used as such. I propose to implement it this way and remove (almost) all GameWindow parameters.
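A sketch of GameWindow as an explicit singleton. `create()` and `getInstance()` are hypothetical names, and the width/height fields stand in for the GLFW window handle and other state the actual class holds. Creation stays an explicit step at startup, so the initialisation order remains visible, while every other class can reach the window without it being passed around.

```java
/** Sketch of GameWindow as a real singleton; fields stand in for the actual window state. */
final class GameWindow {
    private static GameWindow instance;

    private int width;
    private int height;

    private GameWindow(int width, int height) {
        this.width = width;
        this.height = height;
    }

    /** Creates the one window at startup; calling this twice is a programming error. */
    static synchronized GameWindow create(int width, int height) {
        if (instance != null) {
            throw new IllegalStateException("GameWindow already created");
        }
        instance = new GameWindow(width, height);
        return instance;
    }

    /** Access point that replaces the GameWindow parameters. */
    static synchronized GameWindow getInstance() {
        if (instance == null) {
            throw new IllegalStateException("GameWindow not created yet");
        }
        return instance;
    }

    /** Would be called from the resize callback. */
    void setSize(int width, int height) {
        this.width = width;
        this.height = height;
    }

    int getWidth() { return width; }
    int getHeight() { return height; }
    float getAspectRatio() { return (float) width / height; }
}
```

The trade-off is global state: classes that call `getInstance()` are harder to test in isolation, so it may still be worth passing the window explicitly in the few places where that matters.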

Right now we simply hardcode all the keys which are used in the game in the update methods. This will become quite messy if we have more keys bound to actions.

Have one KeyBinding / Input class which provides an easy interface for key bindings. It would be the translation from keys to actions. For example, in an update method of a level:

KeyBinding binding = new DefaultKeyBinding();

if (binding.isForwardPressed()) {
    // update player position
}

if (binding.isToggleDebugMenuPressed()) {
    // toggle debug menu
}

This way, we only have to define our key bindings once. If we want special key bindings in certain cases, we can simply replace DefaultKeyBinding with SomeSpecialKeyBindings.

The KeyBinding class is then responsible for reading out the LWJGL events for input.
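A sketch of this idea with a slightly different interface: one `isPressed(Action)` method instead of one method per action, so adding an action does not require a new method. The `Action` enum, method names and key assignments are hypothetical. The numeric key codes are GLFW's values (`GLFW_KEY_W` is 87, `GLFW_KEY_F3` is 292), written as literals so the sketch has no LWJGL dependency; `onKey` would be fed from the window's key callback.

```java
import java.util.EnumMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

/** Game actions; the list is illustrative. */
enum Action { FORWARD, BACKWARD, LEFT, RIGHT, INTERACT, TOGGLE_DEBUG_MENU }

/** Translates key codes into actions. */
class KeyBinding {
    private final Map<Action, Integer> bindings = new EnumMap<>(Action.class);
    private final Set<Integer> pressedKeys = new HashSet<>();

    protected final void bind(Action action, int keyCode) {
        bindings.put(action, keyCode);
    }

    /** Records a key press or release, e.g. from a GLFW key callback. */
    void onKey(int keyCode, boolean pressed) {
        if (pressed) {
            pressedKeys.add(keyCode);
        } else {
            pressedKeys.remove(keyCode);
        }
    }

    /** True while the key bound to the action is held down. */
    boolean isPressed(Action action) {
        Integer key = bindings.get(action);
        return key != null && pressedKeys.contains(key);
    }
}

/** Default layout; a special binding would be another subclass with different bind(...) calls. */
class DefaultKeyBinding extends KeyBinding {
    DefaultKeyBinding() {
        bind(Action.FORWARD, 87);            // W
        bind(Action.LEFT, 65);               // A
        bind(Action.BACKWARD, 83);           // S
        bind(Action.RIGHT, 68);              // D
        bind(Action.INTERACT, 69);           // E
        bind(Action.TOGGLE_DEBUG_MENU, 292); // F3
    }
}
```

`isPressed` reports the held state, which suits movement. Toggles such as the debug menu would also need "just pressed" detection (comparing with the previous frame), otherwise holding F3 would toggle the menu every update.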

Thoughts?

One of the FollowCamera class constructors does not define the offset, but does use it for calculation, which results in a NullPointerException.

We need to implement animation in our game. We want this for the walking animation of the player (and possibly the monsters). Therefore, we should have a system that can do this.

Should a GUI Button activate when the mouse button is pressed or when it is released? This probably depends on what sort of buttons we want to use. If a Button always removes itself when it activates (and thus will not activate again), our current situation is fine. If this is not the case, we should adjust the code to only activate the button on releasing the mouse button.

I think it might be a good idea to have some kind of visual indication of where the positive and negative x- and z-axes are in the game. When I move the camera around, I can quickly become disoriented. I think some kind of helper tool for debugging purposes could help us.

The idea would be to create a symbol that is always aligned with the axis, just like this:

This can then be placed at the mouse position, at the center of the screen in front of all objects, or perhaps in the lower left corner? Or perhaps like the Minecraft F3 menu.

Mobs need pathfinding to find their path to the player. We should therefore implement pathfinding algorithms, such as A*.

Each pathfinding class can implement a Pathfinding interface.
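A sketch of such an interface with an A* implementation, assuming a 4-connected grid (no diagonal moves), unit step cost and the Manhattan-distance heuristic, which never overestimates on such a grid. The `Pathfinding` signature is hypothetical; a path is returned as a list of `{x, y}` cells from start to goal, or an empty list when the goal is unreachable. The same class could be driven by the Swing validation tool.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.PriorityQueue;

/** Hypothetical pathfinding contract; blocked[x][y] marks obstacles. */
interface Pathfinding {
    List<int[]> findPath(boolean[][] blocked, int sx, int sy, int gx, int gy);
}

/** A* on a 4-connected grid with unit step cost and a Manhattan-distance heuristic. */
final class AStar implements Pathfinding {
    private static final int[][] STEPS = {{1, 0}, {-1, 0}, {0, 1}, {0, -1}};

    @Override
    public List<int[]> findPath(boolean[][] blocked, int sx, int sy, int gx, int gy) {
        int w = blocked.length, h = blocked[0].length;
        int[] g = new int[w * h];          // best known cost from the start per cell
        Arrays.fill(g, Integer.MAX_VALUE);
        int[] parent = new int[w * h];     // previous cell on the best known path
        Arrays.fill(parent, -1);
        boolean[] closed = new boolean[w * h];
        // Queue entries are {f = g + heuristic, cell index}, smallest f first
        PriorityQueue<int[]> open = new PriorityQueue<>((a, b) -> Integer.compare(a[0], b[0]));
        int start = sx * h + sy, goal = gx * h + gy;
        g[start] = 0;
        open.add(new int[] {Math.abs(sx - gx) + Math.abs(sy - gy), start});
        while (!open.isEmpty()) {
            int cell = open.poll()[1];
            if (closed[cell]) continue;    // stale entry for an already expanded cell
            closed[cell] = true;
            if (cell == goal) return reconstruct(parent, goal, h);
            int cx = cell / h, cy = cell % h;
            for (int[] s : STEPS) {
                int nx = cx + s[0], ny = cy + s[1];
                if (nx < 0 || ny < 0 || nx >= w || ny >= h || blocked[nx][ny]) continue;
                int next = nx * h + ny;
                int tentative = g[cell] + 1;
                if (tentative < g[next]) {
                    g[next] = tentative;
                    parent[next] = cell;
                    open.add(new int[] {tentative + Math.abs(nx - gx) + Math.abs(ny - gy), next});
                }
            }
        }
        return Collections.emptyList();    // goal not reachable
    }

    private static List<int[]> reconstruct(int[] parent, int goal, int h) {
        List<int[]> path = new ArrayList<>();
        for (int c = goal; c != -1; c = parent[c]) {
            path.add(new int[] {c / h, c % h});
        }
        Collections.reverse(path);
        return path;
    }
}
```

Other strategies (for example a plain breadth-first search for comparison in the validator) could implement the same interface and be swapped in without changing the mob code.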

Basically that. Directional lights don't work.

Right now I keep getting errors when moving the player when I don't have a complete map. I would like to be able to disable the collision detection of the player at startup and at runtime.

Right now, when I enable no lights, the scene is still very brightly lit. I would like to have control over the darkness of the room to create very dark corridors. What would we need to change/set in order to achieve this?
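Assuming the fragment shader follows the usual Phong-style split into an ambient and a diffuse part (specular omitted here), the output colour has the form below, where m is the material colour, A the ambient light colour, n the surface normal, l_i the direction to light i, L_i the colour and intensity of light i, and ⊙ per-channel multiplication:

```latex
\mathbf{c} = \mathbf{A} \odot \mathbf{m} + \sum_i \max(\mathbf{n} \cdot \mathbf{l}_i,\, 0)\, \mathbf{L}_i \odot \mathbf{m}
```

With no lights enabled the sum vanishes and c = A ⊙ m, so a scene that stays bright without lights points at the ambient term (or a hard-coded ambient value in the shader). Exposing A as a per-level setting, close to zero for dark corridors, would give that control.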

Problem

The next step in solving #9 is to convert the drawing data in OpenGL to a format that can be used by the Neural Network. We store the data in OpenGL as a list of lists of points: List<List<Float>>. When the mouse is released and pressed again during drawing, a new sublist is created to store all the points of this next part of the drawing. For each point, two floats are added to the list: one for the x-coordinate of the mouse and one for the y-coordinate. We need to convert either this data or the rendered screen to a format that can be used by the NN.
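One option, sketched under assumptions: rasterise the strokes directly into a small square grid instead of reading back the rendered screen. This assumes the network takes a `size x size` grayscale image (for example 28 x 28) with ink as 1 and background as 0, and that the drawing should be scaled uniformly to fill the grid; the real input size, orientation and value range depend on how the network was trained. `StrokeRasterizer` is a hypothetical name.

```java
import java.util.List;

/** Hypothetical converter from the stroke lists to a grayscale grid for the network. */
final class StrokeRasterizer {
    /**
     * Returns a row-major size x size grid (1 = ink, 0 = empty). Each stroke is a flat list
     * x0, y0, x1, y1, ...; consecutive points in a stroke are joined by straight lines.
     * Row index follows the mouse y value, so the image is oriented as on screen.
     */
    static float[] rasterize(List<List<Float>> strokes, int size) {
        float minX = Float.POSITIVE_INFINITY, minY = Float.POSITIVE_INFINITY;
        float maxX = Float.NEGATIVE_INFINITY, maxY = Float.NEGATIVE_INFINITY;
        for (List<Float> s : strokes) {
            for (int i = 0; i + 1 < s.size(); i += 2) {
                minX = Math.min(minX, s.get(i));
                maxX = Math.max(maxX, s.get(i));
                minY = Math.min(minY, s.get(i + 1));
                maxY = Math.max(maxY, s.get(i + 1));
            }
        }
        float[] grid = new float[size * size];
        if (minX > maxX) return grid; // nothing drawn
        // Uniform scale keeps the aspect ratio; the larger side spans the whole grid
        float extent = Math.max(Math.max(maxX - minX, maxY - minY), 1e-6f);
        float scale = (size - 1) / extent;
        for (List<Float> s : strokes) {
            for (int i = 0; i + 1 < s.size(); i += 2) {
                float x1 = (s.get(i) - minX) * scale, y1 = (s.get(i + 1) - minY) * scale;
                // The first point of a stroke is drawn as a dot; later points connect to the previous one
                float x0 = i >= 2 ? (s.get(i - 2) - minX) * scale : x1;
                float y0 = i >= 2 ? (s.get(i - 1) - minY) * scale : y1;
                drawLine(grid, size, x0, y0, x1, y1);
            }
        }
        return grid;
    }

    /** Samples the segment at roughly one-pixel steps (a simple DDA) and marks each pixel. */
    private static void drawLine(float[] grid, int size, float x0, float y0, float x1, float y1) {
        int steps = (int) Math.ceil(Math.max(Math.abs(x1 - x0), Math.abs(y1 - y0)));
        for (int k = 0; k <= steps; k++) {
            float t = steps == 0 ? 0f : (float) k / steps;
            int px = Math.round(x0 + t * (x1 - x0));
            int py = Math.round(y0 + t * (y1 - y0));
            if (px >= 0 && py >= 0 && px < size && py < size) grid[py * size + px] = 1f;
        }
    }
}
```

Working from the stroke data avoids a GPU read-back and makes the result independent of window size. The flat array could then be wrapped in whatever array type the network library expects.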

Possible solutions

There should be some mechanism to define/load levels. The best approach would be a layer between the GameEngine and IGameLogic that handles the levels.

We need some kind of class that handles the trained neural network. Some tasks that need to be implemented:

- evaluate() method which gets an image and returns a list of strings with the probabilities.
- NDArray so it can be used in the network.
- evaluate() method to only hold the probabilities of the items requested. This is useful when checking a puzzle.

When the drawing menu is opened (or, for example, a main menu is opened) we should be able to pause the game in the meantime. Can we simply stop the game loop?
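Stopping the loop entirely would also stop rendering and input handling, so the drawing menu could neither be drawn nor closed. A sketch of the alternative, assuming the world and the overlay (drawing menu, main menu) are updated separately: keep the loop running and skip only the world update while paused. `Updatable` and `PausableLoop` are hypothetical names.

```java
/** Anything that advances with time. */
interface Updatable {
    void update(float deltaTime);
}

/** One loop iteration that freezes the world but keeps menus responsive while paused. */
final class PausableLoop {
    private final Updatable world;   // level, player, mobs: frozen while paused
    private final Updatable overlay; // drawing menu or main menu: keeps running
    private boolean paused;

    PausableLoop(Updatable world, Updatable overlay) {
        this.world = world;
        this.overlay = overlay;
    }

    void setPaused(boolean paused) { this.paused = paused; }

    /** Input polling and rendering (not shown) would still run on every tick. */
    void tick(float deltaTime) {
        if (!paused) {
            world.update(deltaTime);
        }
        overlay.update(deltaTime);
    }
}
```

Because the world simply receives no updates, no delta time accumulates for it while paused, so nothing jumps forward when the menu closes.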
