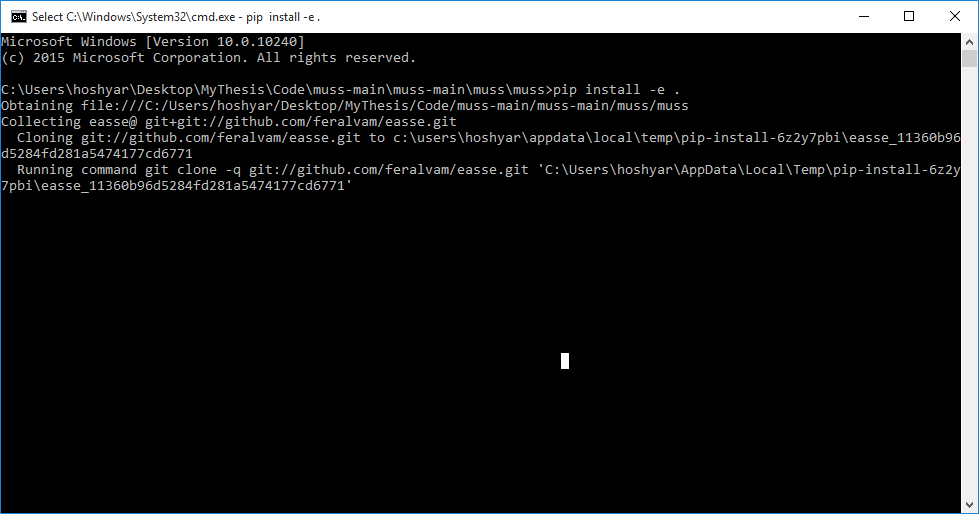

After installing all the dependencies (pip install -e .), I ran the Python script to simplify the French example text, and the command failed. The command was: python scripts/simplify.py scripts/examples.fr --model-name muss_fr_mined

Full output:

python scripts/simplify.py scripts/examples.fr --model-name muss_fr_mined

fairseq-generate /home/unapei-muss/admin/tmp/tmp3iwo639d --dataset-impl raw --gen-subset tmp --path /home/unapei-muss/www/muss/resources/models/muss_fr_mined/model.pt --beam 5 --nbest 1 --lenpen 1.0 --diverse-beam-groups -1 --diverse-beam-strength 0.5 --max-tokens 8000 --model-overrides "{'encoder_embed_path': None, 'decoder_embed_path': None}" --skip-invalid-size-inputs-valid-test --task 'translation_from_pretrained_bart' --langs 'ar_AR,cs_CZ,de_DE,en_XX,es_XX,et_EE,fi_FI,fr_XX,gu_IN,hi_IN,it_IT,ja_XX,kk_KZ,ko_KR,lt_LT,lv_LV,my_MM,ne_NP,nl_XX,ro_RO,ru_RU,si_LK,tr_TR,vi_VN,zh_CN'

INFO:fairseq_cli.generate:Namespace(all_gather_list_size=16384, batch_size=None, batch_size_valid=None, beam=5, bf16=False, bpe=None, broadcast_buffers=False, bucket_cap_mb=25, checkpoint_shard_count=1, checkpoint_suffix='', constraints=None, cpu=False, criterion='cross_entropy', curriculum=0, data='/home/unapei-muss/admin/tmp/tmp3iwo639d', data_buffer_size=10, dataset_impl='raw', ddp_backend='c10d', decoding_format=None, device_id=0, disable_validation=False, distributed_backend='nccl', distributed_init_method=None, distributed_no_spawn=False, distributed_port=-1, distributed_rank=0, distributed_world_size=1, distributed_wrapper='DDP', diverse_beam_groups=-1, diverse_beam_strength=0.5, diversity_rate=-1.0, empty_cache_freq=0, eval_bleu=False, eval_bleu_args=None, eval_bleu_detok='space', eval_bleu_detok_args=None, eval_bleu_print_samples=False, eval_bleu_remove_bpe=None, eval_tokenized_bleu=False, fast_stat_sync=False, find_unused_parameters=False, fix_batches_to_gpus=False, fixed_validation_seed=None, force_anneal=None, fp16=False, fp16_init_scale=128, fp16_no_flatten_grads=False, fp16_scale_tolerance=0.0, fp16_scale_window=None, gen_subset='tmp', iter_decode_eos_penalty=0.0, iter_decode_force_max_iter=False, iter_decode_max_iter=10, iter_decode_with_beam=1, iter_decode_with_external_reranker=False, langs='ar_AR,cs_CZ,de_DE,en_XX,es_XX,et_EE,fi_FI,fr_XX,gu_IN,hi_IN,it_IT,ja_XX,kk_KZ,ko_KR,lt_LT,lv_LV,my_MM,ne_NP,nl_XX,ro_RO,ru_RU,si_LK,tr_TR,vi_VN,zh_CN', left_pad_source='True', left_pad_target='False', lenpen=1.0, lm_path=None, lm_weight=0.0, load_alignments=False, local_rank=0, localsgd_frequency=3, log_format=None, log_interval=100, lr_scheduler='fixed', lr_shrink=0.1, match_source_len=False, max_len_a=0, max_len_b=200, max_source_positions=1024, max_target_positions=1024, max_tokens=8000, max_tokens_valid=8000, memory_efficient_bf16=False, memory_efficient_fp16=False, min_len=1, min_loss_scale=0.0001, model_overrides="{'encoder_embed_path': None, 'decoder_embed_path': None}", model_parallel_size=1, nbest=1, no_beamable_mm=False, no_early_stop=False, no_progress_bar=False, no_repeat_ngram_size=0, no_seed_provided=False, nprocs_per_node=1, num_batch_buckets=0, num_shards=1, num_workers=1, optimizer=None, path='/home/unapei-muss/www/muss/resources/models/muss_fr_mined/model.pt', pipeline_balance=None, pipeline_checkpoint='never', pipeline_chunks=0, pipeline_decoder_balance=None, pipeline_decoder_devices=None, pipeline_devices=None, pipeline_encoder_balance=None, pipeline_encoder_devices=None, pipeline_model_parallel=False, prefix_size=0, prepend_bos=False, print_alignment=False, print_step=False, profile=False, quantization_config_path=None, quiet=False, remove_bpe=None, replace_unk=None, required_batch_size_multiple=8, required_seq_len_multiple=1, results_path=None, retain_dropout=False, retain_dropout_modules=None, retain_iter_history=False, sacrebleu=False, sampling=False, sampling_topk=-1, sampling_topp=-1.0, score_reference=False, scoring='bleu', seed=1, shard_id=0, skip_invalid_size_inputs_valid_test=True, slowmo_algorithm='LocalSGD', slowmo_momentum=None, source_lang=None, target_lang=None, task='translation_from_pretrained_bart', temperature=1.0, tensorboard_logdir=None, threshold_loss_scale=None, tokenizer=None, tpu=False, train_subset='train', truncate_source=False, unkpen=0, unnormalized=False, upsample_primary=1, user_dir=None, valid_subset='valid', validate_after_updates=0, validate_interval=1, validate_interval_updates=0, warmup_updates=0, zero_sharding='none')

INFO:fairseq.tasks.translation:[complex] dictionary: 250001 types

INFO:fairseq.tasks.translation:[simple] dictionary: 250001 types

INFO:fairseq.data.data_utils:loaded 4 examples from: /home/unapei-muss/admin/tmp/tmp3iwo639d/tmp.complex-simple.complex

INFO:fairseq.data.data_utils:loaded 4 examples from: /home/unapei-muss/admin/tmp/tmp3iwo639d/tmp.complex-simple.simple

INFO:fairseq.tasks.translation:/home/unapei-muss/admin/tmp/tmp3iwo639d tmp complex-simple 4 examples

INFO:fairseq_cli.generate:loading model(s) from /home/unapei-muss/www/muss/resources/models/muss_fr_mined/model.pt

Killed
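
The run stops with a bare Killed right after the model starts loading, with no Python traceback. On Linux that usually means the process received SIGKILL, and one common cause is the kernel's out-of-memory killer. I have not confirmed that this is what happened here. A minimal sketch of a check that could help narrow it down, comparing the checkpoint size with the memory reported as available in /proc/meminfo, is below. The function names and the `headroom` factor of 2.0 are my own assumptions for illustration; they are not part of MUSS or fairseq.

```python
import os


def parse_mem_available_bytes(meminfo_text):
    """Return the MemAvailable value from /proc/meminfo content, in bytes.

    Returns None if the field is missing (for example on very old kernels).
    """
    for line in meminfo_text.splitlines():
        if line.startswith("MemAvailable:"):
            # Line format: "MemAvailable:    1234567 kB"
            return int(line.split()[1]) * 1024
    return None


def likely_to_fit(model_bytes, available_bytes, headroom=2.0):
    """Rough guess: is there `headroom` times the checkpoint size available?

    The headroom factor is an assumed safety margin, not a measured requirement.
    """
    return available_bytes >= model_bytes * headroom


if __name__ == "__main__":
    # Path copied from the log above.
    model_path = "/home/unapei-muss/www/muss/resources/models/muss_fr_mined/model.pt"
    if os.path.exists(model_path) and os.path.exists("/proc/meminfo"):
        with open("/proc/meminfo") as f:
            available = parse_mem_available_bytes(f.read())
        size = os.path.getsize(model_path)
        print(f"checkpoint size: {size / 2**30:.2f} GiB")
        if available is not None:
            print(f"available memory: {available / 2**30:.2f} GiB")
            print("likely to fit:", likely_to_fit(size, available))
```

If the check reports that the checkpoint is unlikely to fit, the kernel log (for example via dmesg) may show whether the OOM killer terminated the process.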