This is a from-scratch TensorFlow implementation of Faster-RCNN with support for batch processing. The methods are written as simply as possible so that they are easy to follow. Most operations follow the descriptions in the paper and the tf-rpn repository.

It is implemented and tested with TensorFlow 2.0.

MobileNetV2 and VGG16 backbones are supported.

The project models were created in a virtual environment using miniconda. You can create the required virtual environment with conda.

To create the virtual environment (TensorFlow 2 GPU environment):

conda env create -f environment.yml

Two backbones are available: the legacy vgg16 backbone and mobilenet_v2, which is the default. You can select the backbone with the --backbone parameter.
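
As an illustration of how a --backbone option with a mobilenet_v2 default could be parsed, here is a minimal sketch using Python's standard argparse module. The function name `parse_backbone` and the constant `SUPPORTED_BACKBONES` are hypothetical and are not taken from this repository; only the flag name, the two backbone names, and the default come from the text above.

```python
import argparse

# Backbone names come from the README; the rest of this sketch is illustrative.
SUPPORTED_BACKBONES = ("mobilenet_v2", "vgg16")


def parse_backbone(argv=None):
    """Return the backbone name selected on the command line.

    argv is a list of argument strings; when it is None, argparse
    reads the arguments from sys.argv instead.
    """
    parser = argparse.ArgumentParser(description="Hypothetical backbone selector")
    parser.add_argument(
        "--backbone",
        choices=SUPPORTED_BACKBONES,
        default="mobilenet_v2",
        help="feature extractor to use",
    )
    # parse_known_args ignores unrelated flags instead of exiting with an error.
    args, _unknown = parser.parse_known_args(argv)
    return args.backbone
```

Calling `parse_backbone([])` falls back to the default, while `parse_backbone(["--backbone", "vgg16"])` selects the legacy backbone. Restricting the option with `choices` means a misspelled backbone name is rejected before any model is built.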

To train and test the Faster-RCNN model:

python faster_rcnn_trainer.py --backbone mobilenet_v2

python faster_rcnn_predictor.py --backbone mobilenet_v2

You can also train and test RPN alone:

python rpn_trainer.py --backbone vgg16

python rpn_predictor.py --backbone vgg16

If you have GPU issues, you can use the -handle-gpu flag with these commands:

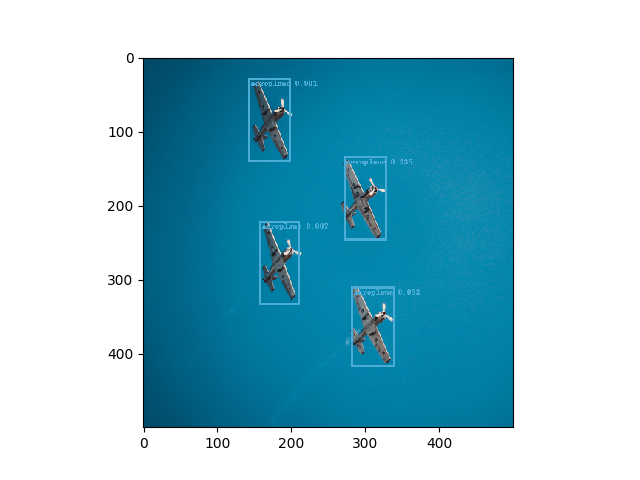

python faster_rcnn_trainer.py -handle-gpu

| Trained with VOC 0712 trainval data |
|---|
| Photo by William Peynichou on Unsplash |
| Photo by Vishu Gowda on Unsplash |
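
The README does not say what the -handle-gpu flag does internally. A common remedy for TensorFlow 2 GPU problems is to enable memory growth, so that TensorFlow allocates GPU memory on demand instead of reserving all of it at startup. The sketch below assumes that kind of fix; the function name `enable_gpu_memory_growth` is hypothetical and the code is not taken from this repository.

```python
def enable_gpu_memory_growth():
    """Enable on-demand GPU memory allocation; return the number of GPUs configured.

    Assumption: this mirrors a typical TensorFlow 2 compatibility fix,
    not necessarily what -handle-gpu does in this project.
    """
    try:
        import tensorflow as tf
    except ImportError:
        return 0  # TensorFlow is not installed, so there is nothing to configure

    configured = 0
    for gpu in tf.config.experimental.list_physical_devices("GPU"):
        try:
            tf.config.experimental.set_memory_growth(gpu, True)
            configured += 1
        except RuntimeError:
            # Memory growth has to be set before the GPU is initialized.
            pass
    return configured
```

A call such as this needs to run before any model or tensor is created, which is why a script would typically invoke it right after parsing its command-line flags.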