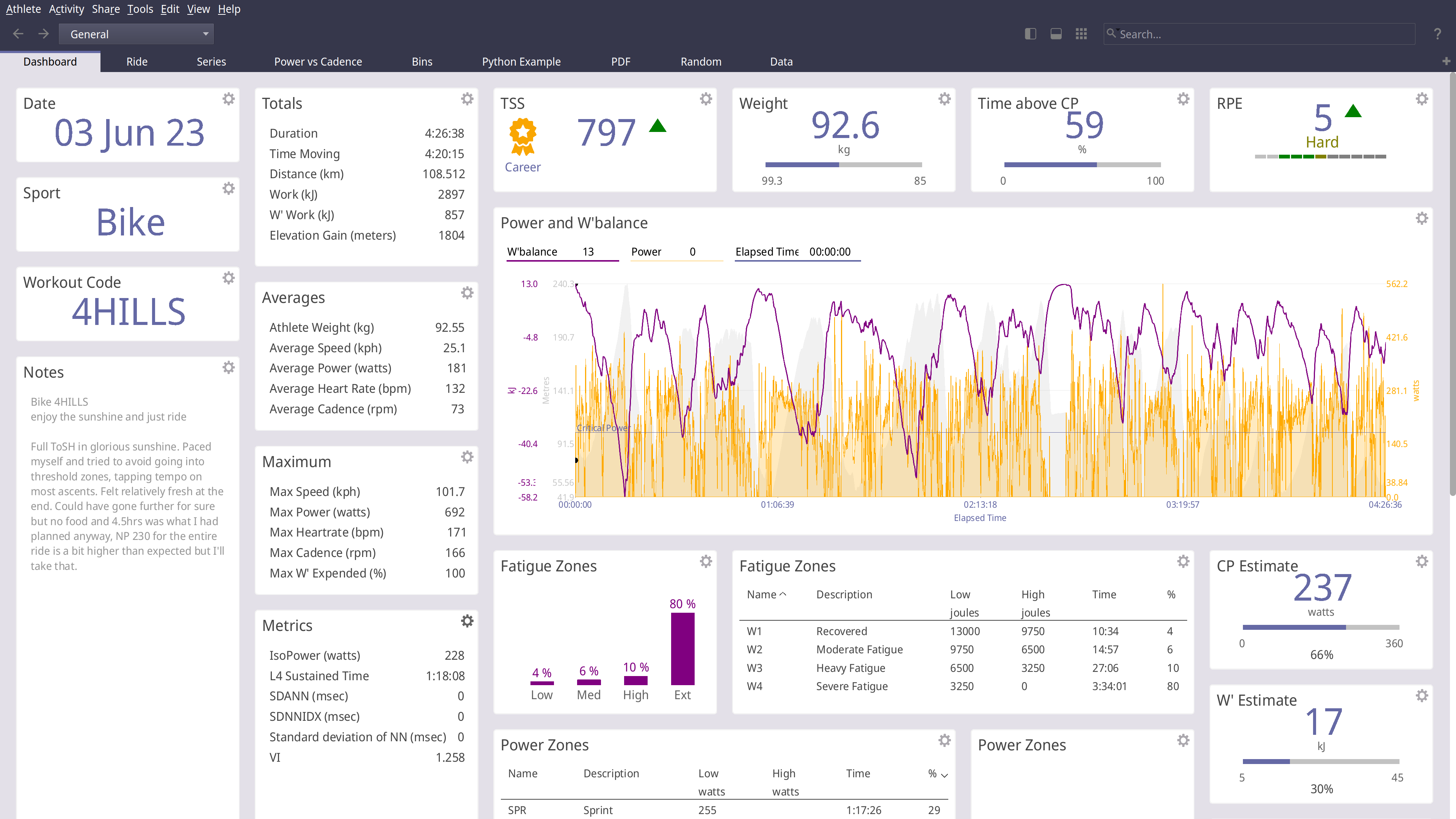

GoldenCheetah is a desktop application for cyclists, triathletes and coaches

- Analyse using summary metrics like BikeStress, TRIMP or RPE

- Extract insight via models like Critical Power and W'bal

- Track and predict performance using models like Banister and PMC

- Optimise aerodynamics using Virtual Elevation

- Train indoors with ANT and BTLE trainers

- Upload to and download from many cloud services, including Strava, Withings and Todays Plan

- Import and export data to and from a wide range of bike computers and file formats

- Track body measures and equipment use, and set up your own metadata to track
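As a rough illustration of the kind of model listed above, the sketch below computes a PMC-style chart from daily stress scores using exponentially weighted averages. It is not taken from the GoldenCheetah source: the function name `performance_management`, the 42-day and 7-day time constants, and the choice to derive the balance from the previous day's values are all assumptions based on a common formulation of the model.

```python
import math


def performance_management(daily_stress, ctl_days=42.0, atl_days=7.0):
    """Return one (ctl, atl, tsb) tuple per day of daily stress scores.

    Hypothetical helper: the time constants are assumed defaults,
    not values read from GoldenCheetah.
    """
    # Smoothing factors for the long-term (CTL) and short-term (ATL) averages.
    k_ctl = 1.0 - math.exp(-1.0 / ctl_days)
    k_atl = 1.0 - math.exp(-1.0 / atl_days)

    ctl = 0.0
    atl = 0.0
    rows = []
    for stress in daily_stress:
        # Assumption: stress balance is based on the previous day's load.
        tsb = ctl - atl
        ctl += (stress - ctl) * k_ctl
        atl += (stress - atl) * k_atl
        rows.append((ctl, atl, tsb))
    return rows


if __name__ == "__main__":
    # One week of made-up daily stress scores, with a rest day in the middle.
    for day, (ctl, atl, tsb) in enumerate(
        performance_management([80, 60, 0, 100, 50, 120, 0]), start=1
    ):
        print(f"day {day}: CTL={ctl:.1f} ATL={atl:.1f} TSB={tsb:.1f}")
```

The short-term average reacts faster than the long-term one, so a block of hard days drives the balance negative before the long-term load catches up.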

GoldenCheetah provides tools for users to develop their own metrics, models and charts

- A high-performance and powerful built-in scripting language

- A local Python runtime, or an embedded user-installed runtime

- An embedded user-installed R runtime

GoldenCheetah supports community sharing via the Cloud

- Upload and download user developed metrics

- Upload and download user, Python or R charts

- Import indoor workouts from the ErgDB

- Share anonymised data with researchers via the OpenData initiative

GoldenCheetah is free for everyone to use and modify, released under the GPL v2 open source license with pre-built binaries for Mac, Windows and Linux.

GoldenCheetah install and build instructions are documented for each platform:

- INSTALL-WIN32: for building on Microsoft Windows

- INSTALL-LINUX: for building on Linux

- INSTALL-MAC: for building on Apple macOS

Official release builds, snapshots and development builds are all available from http://www.goldencheetah.org

If you are looking for the NOTIO fork of GoldenCheetah, it can be found here: https://github.com/notio-technologies/GCNotio