holywu / vs-realesrgan

Real-ESRGAN function for VapourSynth

License: BSD 3-Clause "New" or "Revised" License

Similar to HolyWu/vs-scunet#4, I get "expected scalar type Half but found Float" when running:

from vsrealesrgan import RealESRGAN

# adjusting color space from YUV444P8 to RGBH for VsRealESRGAN

clip = core.resize.Bicubic(clip=clip, format=vs.RGBH, matrix_in_s="470bg", range_s="limited")

# resizing using RealESRGAN

clip = RealESRGAN(clip=clip, model=5, device_index=0, trt=True, trt_cache_path=r"J:\tmp") # 704x576

# resizing 704x576 to 704x526

# adjusting resizing

clip = core.resize.Bicubic(clip=clip, format=vs.RGBS, range_s="limited")

clip = core.fmtc.resample(clip=clip, w=704, h=526, kernel="lanczos", interlaced=False, interlacedd=False)

from vsscunet import scunet as SCUNet

# denoising using SCUNet

clip = SCUNet(clip=clip, model=4, cuda_graphs=True, nvfuser=True)

Using vsrealesrgan 4.0.1 and vsscunet 1.0.0 (per their .dist-info folders) on Windows 11 with 64 GB RAM and a GeForce RTX 4080 16 GB, in a portable VapourSynth setup.

It would be nice if this could be fixed in vs-realesrgan, as it was in vs-femasr. 👍

Is it possible to use x2 and x3 scales with vs-realesrgan and the realesr-animevideov3 model?

Hi,

I'm not a Python coder, but shouldn't onnxruntime be added to the install_requires field in setup.cfg?

Greetings

There is a feature in the official implementation of Real-ESRGAN that utilizes GFPGAN for face enhancement.

Could you please add this feature to the implementation of vs-realesrgan? It would be very helpful for processing some videos.

Thank you very much!

Error on frame 15 request:

'vapoursynth.VideoFrame' object is not subscriptable

Python 3.6.4

vs.core.version:

VapourSynth Video Processing Library
Copyright (c) 2012-2018 Fredrik Mellbin
Core R44
API R3.5
Options: -

torch.version:

1.10.0+cu111

vpy:

import vapoursynth as vs

import sys

sys.path.append(r"C:\C\Transcoding\VapourSynth\core64\plugins\Scripts")

import mvsfunc as mvf

sys.path.append(r"C:\Users\liujing\AppData\Local\Programs\Python\Python36\Lib\site-packages\vsrealesrgan")

from vsrealesrgan import RealESRGAN

core = vs.get_core(accept_lowercase=True)

source = core.ffms2.Source(sourcename)

source = mvf.ToRGB(source,depth=32)

source = RealESRGAN(source)

source= mvf.ToYUV(source,depth=16)

source.set_output()

Hi all,

Does anyone have a full example inference script for vs-realesrgan? I have no clue how to use VapourSynth and keep running into errors. The example usage in the README is also minimal, so I'm not even sure how to create the clip.

Thank you in advance for your time.
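Here is a minimal sketch of a complete script, pieced together from the calls used in the scripts on this page. It assumes the ffms2 source plugin is installed, the input file is called input.mkv, and the footage is BT.709. The name build is a hypothetical helper, not part of vsrealesrgan. RealESRGAN is fed RGB here as RGBS, while the TensorRT examples on this page use RGBH.

```python
# upscale.vpy
def build(source_path):
    """Decode, upscale with Real-ESRGAN, and convert back to YUV."""
    # Imported inside the function so the file can be loaded without VapourSynth.
    import vapoursynth as vs
    from vsrealesrgan import RealESRGAN

    core = vs.core
    # Decode the video (assumes the ffms2 plugin is available).
    clip = core.ffms2.Source(source=source_path)
    # Real-ESRGAN works on RGB input; convert from YUV assuming BT.709.
    clip = core.resize.Bicubic(clip, format=vs.RGBS, matrix_in_s="709")
    # Upscale on the first GPU with the default model.
    clip = RealESRGAN(clip, device_index=0)
    # Back to 10-bit YUV 4:2:0 for the encoder.
    clip = core.resize.Bicubic(clip, format=vs.YUV420P10, matrix_s="709",
                               dither_type="error_diffusion")
    return clip


# vspipe and VapourSynth editors evaluate scripts with this __name__.
if __name__ == "__vapoursynth__":
    build("input.mkv").set_output()
```

To preview it, open it in VapourSynth Editor. To encode, pipe it to an encoder, e.g. `vspipe upscale.vpy - -c y4m | x265 --y4m --input - --output out.265`.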

Could you rework it so it can also process a single image?

Thanks

from vsrealesrgan import RealESRGAN

ret = RealESRGAN(clip)

Would it be possible to port A-ESRGAN?

Thank you and hope you have a happy new year!🥳

When trying to install vs-realesrgan in VapourSynth R58, I get:

I:\Hybrid\64bit\Vapoursynth>python -m pip install --upgrade vsrealesrgan

Collecting vsrealesrgan

Using cached vsrealesrgan-2.0.0-py3-none-any.whl (12 kB)

Collecting VapourSynth>=55

Using cached VapourSynth-57.zip (567 kB)

Preparing metadata (setup.py) ... error

error: subprocess-exited-with-error

× python setup.py egg_info did not run successfully.

│ exit code: 1

╰─> [15 lines of output]

Traceback (most recent call last):

File "C:\Users\Selur\AppData\Local\Temp\pip-install-7_na63f8\vapoursynth_4864864388024a95a1e8b4adda80b293\setup.py", line 64, in <module>

dll_path = query(winreg.HKEY_LOCAL_MACHINE, REGISTRY_PATH, REGISTRY_KEY)

File "C:\Users\Selur\AppData\Local\Temp\pip-install-7_na63f8\vapoursynth_4864864388024a95a1e8b4adda80b293\setup.py", line 38, in query

reg_key = winreg.OpenKey(hkey, path, 0, winreg.KEY_READ)

FileNotFoundError: [WinError 2] Das System kann die angegebene Datei nicht finden (The system cannot find the file specified)

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "<string>", line 2, in <module>

File "<pip-setuptools-caller>", line 34, in <module>

File "C:\Users\Selur\AppData\Local\Temp\pip-install-7_na63f8\vapoursynth_4864864388024a95a1e8b4adda80b293\setup.py", line 67, in <module>

raise OSError("Couldn't detect vapoursynth installation path")

OSError: Couldn't detect vapoursynth installation path

[end of output]

note: This error originates from a subprocess, and is likely not a problem with pip.

error: metadata-generation-failed

× Encountered error while generating package metadata.

╰─> See above for output.

note: This is an issue with the package mentioned above, not pip.

hint: See above for details.

Any idea how to fix this?
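The traceback shows the cause. pip wants VapourSynth>=55 and downloads the VapourSynth source package. That package's setup.py then fails at winreg.OpenKey(...), because a portable install has no registry entry.

One workaround is to install vsrealesrgan with pip's --no-deps flag and add the other dependencies by hand. This assumes the portable folder already provides the vapoursynth module. Below is a small sketch; install_args is a hypothetical helper.

```python
import importlib.util
import sys
from typing import List


def install_args(package: str) -> List[str]:
    """Build a pip command line for installing `package`.

    If the running interpreter can already import vapoursynth (as in a
    portable VapourSynth folder), add --no-deps so pip does not try to
    build the VapourSynth source package, whose setup.py looks up the
    installation path in the Windows registry.
    """
    args = [sys.executable, "-m", "pip", "install", "--upgrade"]
    if importlib.util.find_spec("vapoursynth") is not None:
        args.append("--no-deps")
    args.append(package)
    return args
```

Run it with `subprocess.run(install_args("vsrealesrgan"), check=True)` from the portable Python. --no-deps skips every dependency, so install the rest yourself afterwards: the logs on this page show numpy and tqdm, plus a matching torch build.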

As https://github.com/xinntao/Real-ESRGAN provides an ncnn version, would it be possible to implement that as a VapourSynth filter?

I'm getting this error:

`

python -m pip install --upgrade vsrealesrgan

Collecting vsrealesrgan

Using cached vsrealesrgan-3.1.0-py3-none-any.whl (7.4 kB)

Collecting tqdm

Using cached tqdm-4.64.0-py2.py3-none-any.whl (78 kB)

Requirement already satisfied: numpy in d:\vapoursynth\lib\site-packages (from vsrealesrgan) (1.22.3)

Collecting VapourSynth>=55

Using cached VapourSynth-58.zip (558 kB)

Preparing metadata (setup.py) ... error

error: subprocess-exited-with-error

× python setup.py egg_info did not run successfully.

│ exit code: 1

╰─> [15 lines of output]

Traceback (most recent call last):

File "C:\Users*\AppData\Local\Temp\pip-install-2415kpn4\vapoursynth_712c69d39f4a4718a3f6b523a85b39eb\setup.py", line 64, in

dll_path = query(winreg.HKEY_LOCAL_MACHINE, REGISTRY_PATH, REGISTRY_KEY)

File "C:\Users*\AppData\Local\Temp\pip-install-2415kpn4\vapoursynth_712c69d39f4a4718a3f6b523a85b39eb\setup.py", line 38, in query

reg_key = winreg.OpenKey(hkey, path, 0, winreg.KEY_READ)

FileNotFoundError: [WinError 2] The system cannot find the file specified

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "<string>", line 2, in <module>

File "<pip-setuptools-caller>", line 34, in <module>

File "C:\Users\**\AppData\Local\Temp\pip-install-2415kpn4\vapoursynth_712c69d39f4a4718a3f6b523a85b39eb\setup.py", line 67, in <module>

raise OSError("Couldn't detect vapoursynth installation path")

OSError: Couldn't detect vapoursynth installation path

[end of output]

note: This error originates from a subprocess, and is likely not a problem with pip.

error: metadata-generation-failed

× Encountered error while generating package metadata.

╰─> See above for output.

note: This is an issue with the package mentioned above, not pip.

hint: See above for details.

`

Using:

# Imports

import vapoursynth as vs

import os

import sys

# getting Vapoursynth core

import ctypes

# Loading Support Files

Dllref = ctypes.windll.LoadLibrary("i:/Hybrid/64bit/vsfilters/Support/libfftw3f-3.dll")

core = vs.core

# Import scripts folder

scriptPath = 'i:/Hybrid/64bit/vsscripts'

sys.path.insert(0, os.path.abspath(scriptPath))

import site

# Adding torch dependencies to PATH

path = site.getsitepackages()[0]+'/torch_dependencies/bin/'

ctypes.windll.kernel32.SetDllDirectoryW(path)

path = path.replace('\\', '/')

os.environ["PATH"] = path + os.pathsep + os.environ["PATH"]

# Loading Plugins

core.std.LoadPlugin(path="i:/Hybrid/64bit/vsfilters/GrainFilter/AdaptiveGrain/adaptivegrain_rs.dll")

core.std.LoadPlugin(path="i:/Hybrid/64bit/vsfilters/GrainFilter/AddGrain/AddGrain.dll")

core.std.LoadPlugin(path="i:/Hybrid/64bit/vsfilters/Support/fmtconv.dll")

core.std.LoadPlugin(path="i:/Hybrid/64bit/vsfilters/GrainFilter/RemoveGrain/RemoveGrainVS.dll")

core.std.LoadPlugin(path="i:/Hybrid/64bit/vsfilters/SharpenFilter/CAS/CAS.dll")

core.std.LoadPlugin(path="i:/Hybrid/64bit/vsfilters/DenoiseFilter/DFTTest/DFTTest.dll")

core.std.LoadPlugin(path="i:/Hybrid/64bit/vsfilters/SourceFilter/DGDecNV/DGDecodeNV.dll")

# Import scripts

import havsfunc

# source: 'C:\Users\Selur\Desktop\VideoTest_9s.mkv'

# current color space: YUV420P8, bit depth: 8, resolution: 720x306, fps: 23.976, color matrix: 709, yuv luminance scale: limited, scanorder: progressive

# Loading C:\Users\Selur\Desktop\VideoTest_9s.mkv using DGSource

clip = core.dgdecodenv.DGSource("J:/tmp/2023-01-30@19_43_10_8210.dgi")# 23.976 fps, scanorder: progressive

# Setting detected color matrix (709).

clip = core.std.SetFrameProps(clip, _Matrix=1)

# Setting color transfer info, when it is not set

clip = clip if '_Transfer' in clip.get_frame(0).props else core.std.SetFrameProps(clip, _Transfer=1)

# Setting color primaries info, when it is not set

clip = clip if '_Primaries' in clip.get_frame(0).props else core.std.SetFrameProps(clip, _Primaries=1)

# Setting color range to TV (limited) range.

clip = core.std.SetFrameProp(clip=clip, prop="_ColorRange", intval=1)

# making sure frame rate is set to 23.976

clip = core.std.AssumeFPS(clip=clip, fpsnum=24000, fpsden=1001)

clip = core.std.SetFrameProp(clip=clip, prop="_FieldBased", intval=0)

clip = havsfunc.LSFmod(input=clip)

# Using FastLineDarkenMOD for line darkening

clip = havsfunc.FastLineDarkenMOD(c=clip)

from vsrealesrgan import RealESRGAN

clip = core.std.AddBorders(clip=clip, left=0, right=0, top=0, bottom=2) # add borders to achieve mod 4 (VsRealESRGAN) - 720x308

# adjusting color space from YUV420P8 to RGBH for VsRealESRGAN

clip = core.resize.Bicubic(clip=clip, format=vs.RGBH, matrix_in_s="709", range_s="limited")

# resizing using RealESRGAN

clip = RealESRGAN(clip=clip, model=5, device_index=0, trt=True, trt_cache_path=r"J:\tmp") # 2880x1232

# resizing 2880x1232 to 720x306

clip = core.std.CropRel(clip=clip, left=0, right=0, top=0, bottom=8) # removing borders (VsRealESRGAN) - 2880x1224

# adjusting resizing

clip = core.resize.Bicubic(clip=clip, format=vs.RGBS, range_s="limited")

clip = core.fmtc.resample(clip=clip, w=720, h=306, kernel="lanczos", interlaced=False, interlacedd=False)

# contrast sharpening using CAS

clip = core.cas.CAS(clip=clip)

# adjusting color space from RGBS to YUV444P16 for vsAddGrain

clip = core.resize.Bicubic(clip=clip, format=vs.YUV444P16, matrix_s="709", range_s="limited", dither_type="error_diffusion")

# adding Grain using AddGrain and adaptive luma mask

clip = core.std.PlaneStats(clipa=clip)

clipmask = core.adg.Mask(clip=clip, luma_scaling=12)

clipgrained = core.grain.Add(clip=clip, var=8.00)

clip = core.std.MaskedMerge(clip, clipgrained, clipmask)

# adjusting output color from: YUV444P16 to YUV420P10 for x265Model

clip = core.resize.Bicubic(clip=clip, dither_type="error_diffusion", format=vs.YUV420P10, range_s="limited")

# set output frame rate to 23.976fps

clip = core.std.AssumeFPS(clip=clip, fpsnum=24000, fpsden=1001)

# Output

clip.set_output()

And calling:

i:\Hybrid\64bit\Vapoursynth\VSPipe.exe "J:\tmp\encodingTempSynthSkript_2023-01-30@19_43_10_8210_0.vpy" - -c y4m | i:\Hybrid\64bit\x265.exe --input - --output-depth 10 --y4m --profile main10 --limit-modes --no-early-skip --no-open-gop --opt-ref-list-length-pps --crf 18.00 --opt-qp-pps --cbqpoffs -2 --crqpoffs -2 --limit-refs 0 --ssim-rd --psy-rd 2.50 --rdoq-level 2 --psy-rdoq 10.00 --aq-mode 0 --deblock=-1:-1 --limit-sao --no-repeat-headers --range limited --colormatrix bt709 --output "J:\tmp\2023-01-30@19_43_10_8210_03.265"

I get:

Warning: i:\Hybrid\64bit\Vapoursynth\Lib\site-packages\torch_tensorrt\fx\tracer\acc_tracer\acc_tracer.py:584: UserWarning: acc_tracer does not support currently support models for training. Calling eval on model before tracing.

warnings.warn(

Information: == Log pass <function fuse_permute_matmul at 0x0000029B26F88700> before/after graph to C:\Users\Selur\AppData\Local\Temp\tmpjsmylxpt, before/after are the same = True

Information: == Log pass <function fuse_permute_linear at 0x0000029B26F884C0> before/after graph to C:\Users\Selur\AppData\Local\Temp\tmp5zwqs8fw, before/after are the same = True

Information: Now lowering submodule _run_on_acc_0

Information: split_name=_run_on_acc_0, input_specs=[InputTensorSpec(shape=torch.Size([1, 3, 308, 720]), dtype=torch.float16, device=device(type='cuda', index=0), shape_ranges=[], has_batch_dim=True)]

Information: Timing cache is used!

x265 [error]: unable to open input file <->

Information: TRT INetwork construction elapsed time: 0:00:00.387301

Information: Build TRT engine elapsed time: 0:01:01.767990

Information: Lowering submodule _run_on_acc_0 elapsed time 0:01:05.018931

Information: Now lowering submodule _run_on_acc_2

Information: split_name=_run_on_acc_2, input_specs=[InputTensorSpec(shape=torch.Size([1, 3, 1232, 2880]), dtype=torch.float16, device=device(type='cuda', index=0), shape_ranges=[], has_batch_dim=True), InputTensorSpec(shape=torch.Size([1, 3, 1232, 2880]), dtype=torch.float16, device=device(type='cuda', index=0), shape_ranges=[], has_batch_dim=True)]

Information: Timing cache is used!

Information: TRT INetwork construction elapsed time: 0:00:00

Information: Build TRT engine elapsed time: 0:00:00.224972

Information: Lowering submodule _run_on_acc_2 elapsed time 0:00:00.229702

Error: fwrite() call failed when writing frame: 0, plane: 0, errno: 22

Output 33 frames in 0.80 seconds (41.09 fps)

With trt=False the script works fine.

Also, I set trt_cache_path=r"J:\tmp", yet the log refers to C:\Users\Selur\AppData\Local\Temp\tmpjsmylxpt. :/

Funnily enough, previewing in vsViewer/vsedit works fine.

Any idea what the issue could be?

The 'torch_dependencies/bin/' folder contains the files from CUDA-11.7_cuDNN-8.6.0_TensorRT-8.5.2.2_win64.7z. I'm currently using NVIDIA Studio driver 528.24 on Windows 11 Pro 64-bit.
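The script pads 720x306 to 720x308 with AddBorders, since its comment says VsRealESRGAN needs mod 4. After the 4x upscale it crops 8 lines. Below is a sketch of that arithmetic. The mod-4 requirement and 4x scale are taken from the script's comments, and mod_pad and crop_after_upscale are hypothetical helper names.

```python
def mod_pad(width, height, mod=4):
    """Right/bottom padding that makes width and height multiples of mod."""
    return (-width) % mod, (-height) % mod


def crop_after_upscale(pad_right, pad_bottom, scale=4):
    """Padding to crop after upscaling: it grows by the same factor."""
    return pad_right * scale, pad_bottom * scale


pad_r, pad_b = mod_pad(720, 306)                   # (0, 2) -> 720x308
crop_r, crop_b = crop_after_upscale(pad_r, pad_b)  # (0, 8) -> 2880x1224
```

In the script, the values would feed `core.std.AddBorders(clip, right=pad_r, bottom=pad_b)` before upscaling and `core.std.Crop(clip, right=crop_r, bottom=crop_b)` after it.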

Hi there, first of all, thanks for your great work porting all those goodies to VapourSynth!

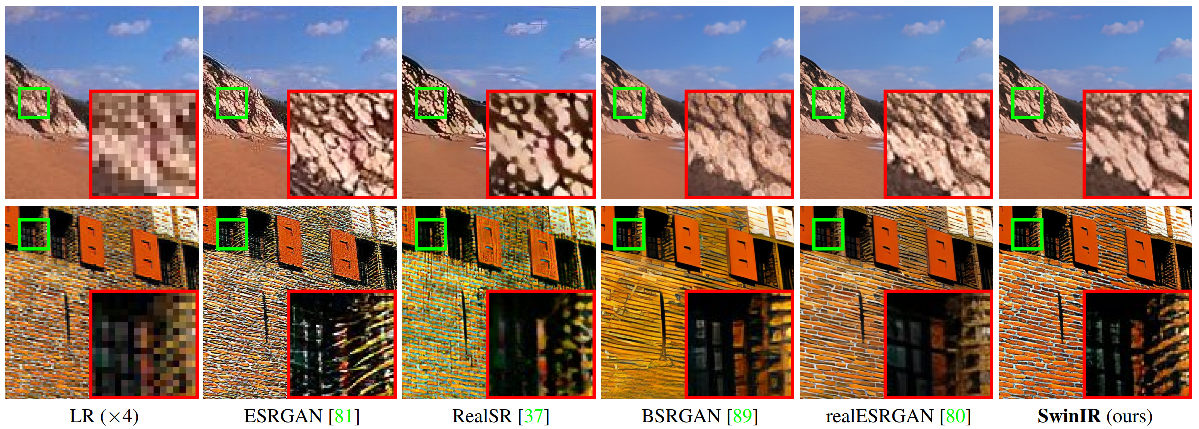

Dunno if it's the right place to ask, but it would be great to have SwinIR by @JingyunLiang in VS too:

SwinIR: Image Restoration Using Swin Transformer

Hope that inspires!

I have been trying to use this plugin, but I get the error below when previewing the video in VapourSynth Editor r19-mod-2-x86_64:

Error on frame 0 request:

'vapoursynth.VideoFrame' object has no attribute 'get_read_array'

This is the code that produces the error:

from vapoursynth import core

from vsrealesrgan import RealESRGAN

import havsfunc as haf

import vapoursynth as vs

video = core.ffms2.Source(source='EDIT.mkv')

video = haf.QTGMC(video, Preset="slow", MatchPreset="slow", MatchPreset2="slow", SourceMatch=3, TFF=True)

video = core.std.SelectEvery(clip=video, cycle=2, offsets=0)

video = core.std.Crop(clip=video, left=8, right=8, top=0, bottom=0)

video = core.resize.Spline36(clip=video, width=640, height=480)

video = core.resize.Bicubic(clip=video, format=vs.RGBS, matrix_in_s="470bg", range_s="limited")

video = RealESRGAN(clip=video, device_index=0)

video = core.resize.Bicubic(clip=video, format=vs.YUV420P10, matrix_s="470bg", range_s="limited")

video = core.resize.Spline36(clip=video, width=1440, height=1080)

video = core.std.AssumeFPS(clip=video, fpsnum=30000, fpsden=1001)

video.set_output()
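Two errors on this page point to a mismatch between the VapourSynth API the plugin was written for and the one installed:

- 'vapoursynth.VideoFrame' object is not subscriptable (on Core R44)
- 'vapoursynth.VideoFrame' object has no attribute 'get_read_array'

As far as I know, R55 (API4) replaced frame.get_read_array(plane) with frame[plane]. The pip logs on this page also show vsrealesrgan requiring VapourSynth>=55. The usual fix is to match versions: a current VapourSynth with a current vsrealesrgan.

The helper below is only a sketch of how plugin code could accept frames from either API. read_plane is a hypothetical name, not part of vsrealesrgan.

```python
def read_plane(frame, plane):
    """Return a read-only view of one plane of a VapourSynth frame.

    API3 (VapourSynth R54 and older) exposes frame.get_read_array(plane);
    API4 (R55 and newer) indexes frames directly as frame[plane].
    """
    getter = getattr(frame, "get_read_array", None)
    if getter is not None:
        # Old API, e.g. the R44 install reported earlier on this page.
        return getter(plane)
    # New API, which vsrealesrgan (VapourSynth>=55) expects.
    return frame[plane]
```

Wrap the result with `numpy.asarray(read_plane(frame, 0))` to get an array for the plane.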

I was wondering if you know of any configuration that can run in real time on reasonable consumer GPUs such as the RTX 3060?

Hi,

I just compared

clip = core.resize.Bicubic(clip, width=720, height=574, format=vs.RGBS, matrix_in_s='709')

clip = core.trt.Model(clip, engine_path="realesr-general-wdn-x4v3_opset16_574x720_fp16.engine", num_streams=4, device_id=0)

created with

trtexec --fp16 --onnx=./realesr-general-wdn-x4v3_opset16.onnx --minShapes=input:1x3x8x8 --optShapes=input:1x3x574x720 --maxShapes=input:1x3x574x720 --saveEngine=./realesr-general-wdn-x4v3_opset16_574x720_fp16.engine --tacticSources=+CUDNN,-CUBLAS,-CUBLAS_LT --skipInference --useCudaGraph --noDataTransfers --builderOptimizationLevel=5 --infStreams=4

gives me:

vspipe -e 300 test3.vpy -p .

Script evaluation done in 2.26 seconds

Output 301 frames in 8.15 seconds (36.92 fps)

using vs-realesrgan instead:

clip = core.resize.Bicubic(clip, width=720, height=574, format=vs.RGBH, matrix_in_s='709')

clip = realesrgan(clip, num_streams = 4, trt = True, model = 5, denoise_strength = 0)

gives me:

vspipe -e 300 test3.vpy -p .

Script evaluation done in 2.26 seconds

Output 601 frames in 23.73 seconds (25.33 fps)

How come core.trt.Model is almost 50% faster?
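Both commands pass -e 300, yet the runs report 301 and 601 frames. The totals therefore aren't directly comparable, and the reported fps is the number to compare: 36.92 / 25.33 ≈ 1.46, i.e. roughly 46% faster.

Below is a small sketch for extracting and comparing vspipe's summary lines. The regex assumes the exact "Output N frames in S seconds (F fps)" wording shown above, and both function names are hypothetical.

```python
import re

# Matches vspipe's final summary line as printed above.
_SUMMARY = re.compile(r"Output (\d+) frames in ([\d.]+) seconds \(([\d.]+) fps\)")


def parse_vspipe_summary(line):
    """Return (frames, seconds, fps) from a vspipe summary line."""
    m = _SUMMARY.search(line)
    if m is None:
        raise ValueError(f"not a vspipe summary line: {line!r}")
    return int(m.group(1)), float(m.group(2)), float(m.group(3))


def speedup(faster_line, slower_line):
    """Ratio of reported fps values; independent of how many frames each run rendered."""
    return parse_vspipe_summary(faster_line)[2] / parse_vspipe_summary(slower_line)[2]
```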

Using:

# Imports

import vapoursynth as vs

# getting Vapoursynth core

core = vs.core

import site

import os

# Adding torch dependencies to PATH

path = site.getsitepackages()[0]+'/torch_dependencies/'

path = path.replace('\\', '/')

os.environ["PATH"] = path + os.pathsep + os.environ["PATH"]

# Loading Plugins

core.std.LoadPlugin(path="i:/Hybrid/64bit/vsfilters/Support/fmtconv.dll")

core.std.LoadPlugin(path="i:/Hybrid/64bit/vsfilters/SourceFilter/LSmashSource/vslsmashsource.dll")

# source: 'G:\TestClips&Co\files\test.avi'

# current color space: YUV420P8, bit depth: 8, resolution: 640x352, fps: 25, color matrix: 470bg, yuv luminance scale: limited, scanorder: progressive

# Loading G:\TestClips&Co\files\test.avi using LWLibavSource

clip = core.lsmas.LWLibavSource(source="G:/TestClips&Co/files/test.avi", format="YUV420P8", stream_index=0, cache=0, prefer_hw=0)

# Setting color matrix to 470bg.

clip = core.std.SetFrameProps(clip, _Matrix=5)

clip = clip if '_Transfer' in clip.get_frame(0).props else core.std.SetFrameProps(clip, _Transfer=5)

clip = clip if '_Primaries' in clip.get_frame(0).props else core.std.SetFrameProps(clip, _Primaries=5)

# Setting color range to TV (limited) range.

clip = core.std.SetFrameProp(clip=clip, prop="_ColorRange", intval=1)

# making sure frame rate is set to 25

clip = core.std.AssumeFPS(clip=clip, fpsnum=25, fpsden=1)

clip = core.std.SetFrameProp(clip=clip, prop="_FieldBased", intval=0)

original = clip

from vsrealesrgan import RealESRGAN

# adjusting color space from YUV420P8 to RGBH for VsRealESRGAN

clip = core.resize.Bicubic(clip=clip, format=vs.RGBH, matrix_in_s="470bg", range_s="limited")

# resizing using RealESRGAN

clip = RealESRGAN(clip=clip, device_index=0, trt=True, trt_cache_path="G:/Temp", num_streams=4) # 2560x1408

# resizing 2560x1408 to 640x352

# adjusting resizing

clip = core.resize.Bicubic(clip=clip, format=vs.RGBS, range_s="limited")

clip = core.fmtc.resample(clip=clip, w=640, h=352, kernel="lanczos", interlaced=False, interlacedd=False)

original = core.resize.Bicubic(clip=original, width=640, height=352)

# adjusting output color from: RGBS to YUV420P8 for x264Model

clip = core.resize.Bicubic(clip=clip, format=vs.YUV420P8, matrix_s="470bg", range_s="limited", dither_type="error_diffusion")

original = core.text.Text(clip=original,text="Original",scale=1,alignment=7)

clip = core.text.Text(clip=clip,text="Filtered",scale=1,alignment=7)

stacked = core.std.StackHorizontal([original,clip])

# Output

stacked.set_output()

I get

Failed to evaluate the script:

Python exception: Ran out of input

Traceback (most recent call last):

File "src\cython\vapoursynth.pyx", line 2866, in vapoursynth._vpy_evaluate

File "src\cython\vapoursynth.pyx", line 2867, in vapoursynth._vpy_evaluate

File "C:\Users\Selur\Desktop\test_2.vpy", line 32, in

clip = RealESRGAN(clip=clip, device_index=0, trt=True, trt_cache_path="G:/Temp", num_streams=4) # 2560x1408

File "I:\Hybrid\64bit\Vapoursynth\Lib\site-packages\torch\autograd\grad_mode.py", line 27, in decorate_context

return func(*args, **kwargs)

File "I:\Hybrid\64bit\Vapoursynth\Lib\site-packages\vsrealesrgan\__init__.py", line 284, in RealESRGAN

module = [torch.load(trt_engine_path) for _ in range(num_streams)]

File "I:\Hybrid\64bit\Vapoursynth\Lib\site-packages\vsrealesrgan\__init__.py", line 284, in

module = [torch.load(trt_engine_path) for _ in range(num_streams)]

File "I:\Hybrid\64bit\Vapoursynth\Lib\site-packages\torch\serialization.py", line 795, in load

return _legacy_load(opened_file, map_location, pickle_module, **pickle_load_args)

File "I:\Hybrid\64bit\Vapoursynth\Lib\site-packages\torch\serialization.py", line 1002, in _legacy_load

magic_number = pickle_module.load(f, **pickle_load_args)

EOFError: Ran out of input

Works fine with trt=False.

Any idea what is going wrong there?
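"EOFError: Ran out of input" is what pickle raises when it reads an empty file. The traceback shows torch.load(trt_engine_path) failing inside pickle. A likely, though unconfirmed, cause is an empty engine file in trt_cache_path (G:/Temp), for example one left behind by an interrupted engine build. Deleting it should presumably force a rebuild.

Below is a sketch that lists zero-byte files and optionally deletes them. find_empty_files is a hypothetical helper. G:/Temp may hold unrelated files, so narrow pattern to the engine files once you know their names. A truncated but non-empty engine would not be caught.

```python
from pathlib import Path
from typing import List, Union


def find_empty_files(cache_dir: Union[str, Path], pattern: str = "*",
                     delete: bool = False) -> List[str]:
    """List (and optionally delete) zero-byte files matching pattern in cache_dir."""
    empty = []
    for path in sorted(Path(cache_dir).glob(pattern)):
        if path.is_file() and path.stat().st_size == 0:
            empty.append(path.name)
            if delete:
                path.unlink()
    return empty
```

For example, run `find_empty_files("G:/Temp")` first to review what would be removed, then pass `delete=True`.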

It would be sweet to be able to select our own models.

Maybe also support for pth format models?

Thanks.
