This repository is no longer maintained. The new detection model will be published here: TARTDetection

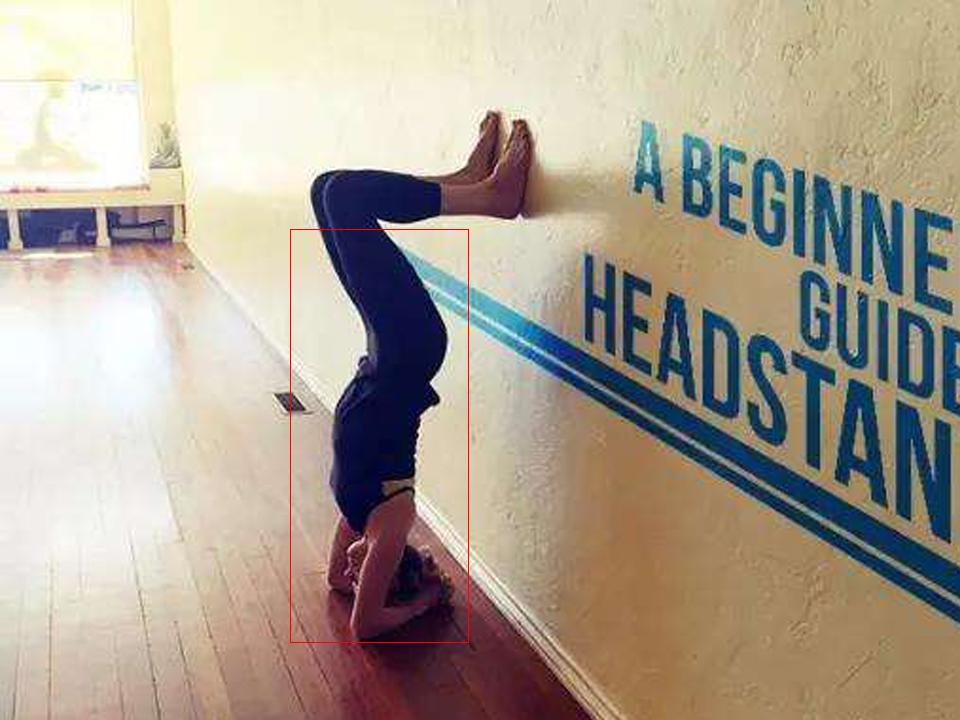

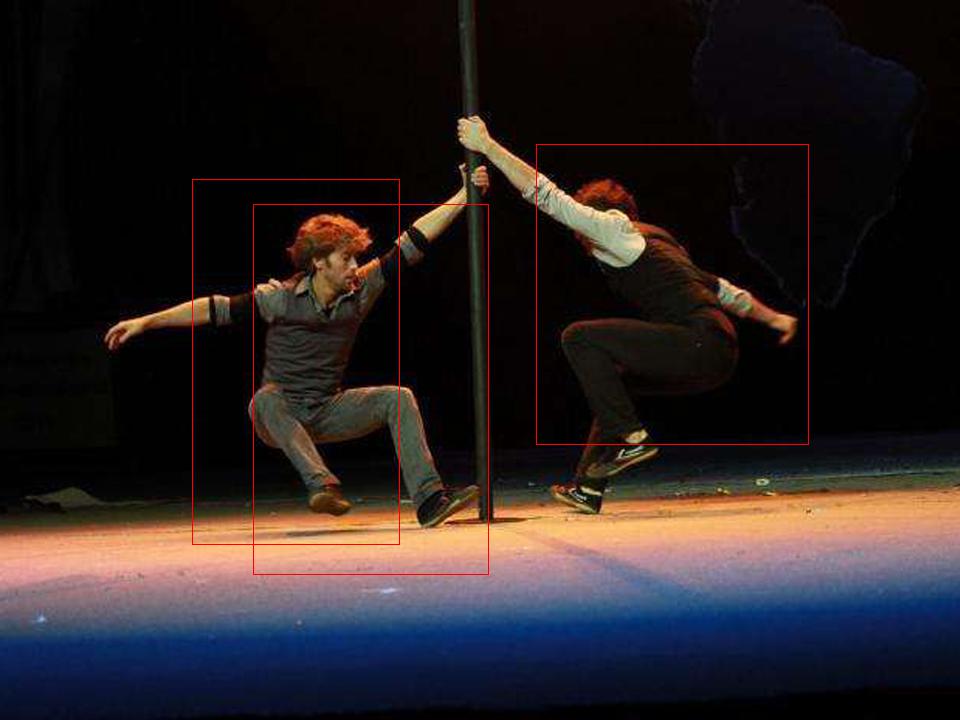

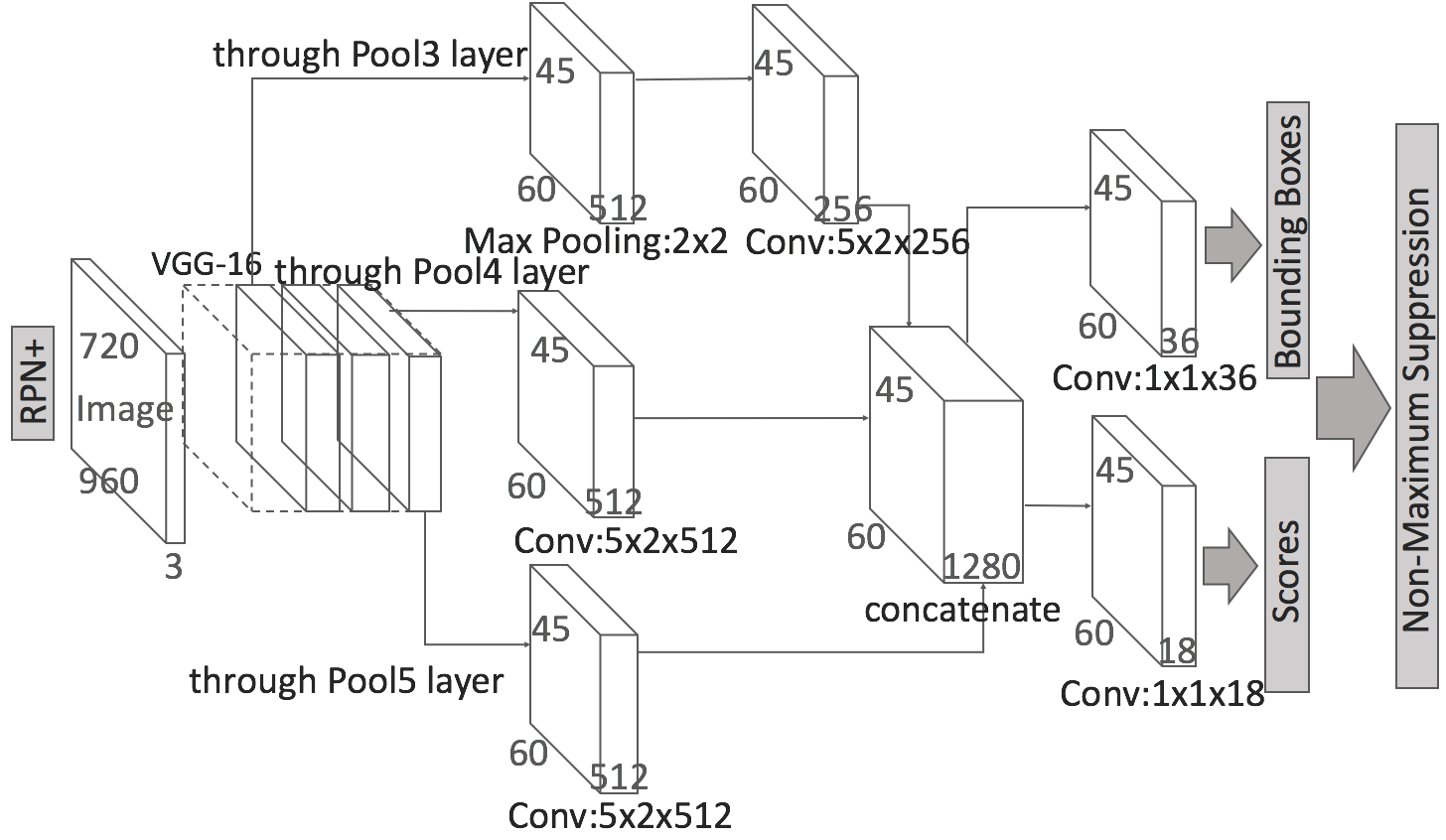

Code accompanying the paper "Expecting the Unexpected: Training Detectors for Unusual Pedestrians with Adversarial Imposters" (CVPR 2017). For the synthetic data generator, please refer to this repo.

- Ubuntu or macOS

- tensorflow>=1.1

- pip install image

- pip install sklearn

- pip install scipy

- image_pylib (this repository must be placed in the same folder as RPNplus)
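The requirements above can be checked before running anything. The following is a minimal sketch, not part of the repository: the helper names `missing_modules` and `has_sibling_repo` are hypothetical, and the import names (`PIL` for `pip install image`, `sklearn`, `scipy`, `tensorflow`) are assumptions about what the listed packages provide.

```python
import importlib.util
import os

# Import names assumed to correspond to the packages listed above.
REQUIRED_MODULES = ["tensorflow", "PIL", "sklearn", "scipy"]


def missing_modules(names):
    """Return the top-level module names the import system cannot locate."""
    return [n for n in names if importlib.util.find_spec(n) is None]


def has_sibling_repo(repo_dir, sibling="image_pylib"):
    """Check that `sibling` sits in the same parent folder as `repo_dir`."""
    parent = os.path.dirname(os.path.abspath(repo_dir))
    return os.path.isdir(os.path.join(parent, sibling))


if __name__ == "__main__":
    # Run from inside the RPNplus folder.
    print("missing modules:", missing_modules(REQUIRED_MODULES))
    print("image_pylib found:", has_sibling_repo("."))
```

An empty list of missing modules and `image_pylib found: True` suggest the environment matches the list above; the check does not verify package versions.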

Run Demo:

- Download the model files (RPN_model & VGG16_model) first, and put them in the ./models/ folder.

- The number 0 in the command is your GPU index; you can change it to any available GPU index.

- This demo tests the images in the ./images/ folder and writes the results to the ./results/ folder.
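The folder layout and GPU argument described above can be sketched as a small pre-flight check. This is an illustrative sketch only and is not taken from demo.py: `select_gpu` and `preflight` are hypothetical helpers, the image extensions are assumed, and using `CUDA_VISIBLE_DEVICES` to select the GPU is an assumption about one common way to honor a GPU index.

```python
import os

IMAGE_EXTS = (".jpg", ".jpeg", ".png")  # assumed image types


def select_gpu(argv):
    """Expose only the GPU whose index is the first CLI argument (default "0")."""
    gpu = argv[1] if len(argv) > 1 else "0"
    # Must be set before the deep learning framework initializes CUDA.
    os.environ["CUDA_VISIBLE_DEVICES"] = gpu
    return gpu


def preflight(models_dir="./models", images_dir="./images", results_dir="./results"):
    """Check the input folders, create the output folder, return the images to test."""
    if not os.path.isdir(models_dir) or not os.listdir(models_dir):
        raise FileNotFoundError("no model files found in " + models_dir)
    os.makedirs(results_dir, exist_ok=True)
    return sorted(
        f for f in os.listdir(images_dir) if f.lower().endswith(IMAGE_EXTS)
    )
```

Calling `select_gpu(sys.argv)` followed by `preflight()` from the repository root would fail early if the model files are missing, rather than partway through the demo.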

`python demo.py 0`

Train:

- The number 0 in the command is your GPU index; you can change it to any available GPU index.

- Open train.py and set `imageLoadDir` and `anoLoadDir` to the proper values (`imageLoadDir` is the directory where you store your images, and `anoLoadDir` is the directory where you store your annotation files).
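Before starting a training run, it can help to confirm that the two directories line up. The sketch below is not from train.py: the paths are placeholders, `unmatched_images` is a hypothetical helper, and it assumes each annotation file shares its base name with its image, which may not match the annotation layout this repository expects.

```python
import os

# Placeholder locations; replace with your own paths.
imageLoadDir = "./data/images/"
anoLoadDir = "./data/annotations/"


def unmatched_images(image_dir, ano_dir):
    """Return image files that have no annotation file with the same base name."""
    ano_stems = {os.path.splitext(f)[0] for f in os.listdir(ano_dir)}
    return sorted(
        f for f in os.listdir(image_dir)
        if os.path.splitext(f)[0] not in ano_stems
    )
```

An empty result from `unmatched_images(imageLoadDir, anoLoadDir)` means every image has a same-named annotation under that assumption.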

`python train.py 0`

Please cite our paper if you use this code or our datasets in your own work:

@InProceedings{Huang_2017_CVPR,
  author = {Huang, Shiyu and Ramanan, Deva},
  title = {Expecting the Unexpected: Training Detectors for Unusual Pedestrians With Adversarial Imposters},
  booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {July},
  year = {2017}
}

- Our code is based on Yinpeng Dong's code and this repo: https://github.com/machrisaa/tensorflow-vgg

Shiyu Huang([email protected])