AgentLLM is the first proof of concept to use an open-source large language model (LLM) to develop autonomous agents that operate entirely in the browser. Its main goal is to demonstrate that embedded LLMs can handle the complex goal-oriented tasks of autonomous agents with acceptable performance. Learn more on Medium and feel free to check out the demo.

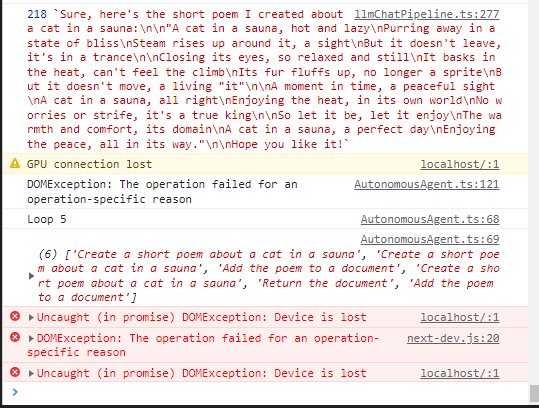

The implementation of the embedded LLM builds on the fantastic research of WebLLM, which takes advantage of Chromium's bleeding-edge WebGPU support to run inference on the GPU. This offers significant performance gains over the previously available CPU-based implementations.
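Because everything depends on WebGPU being available, a page may want to check for it before loading a model. The following is a minimal sketch of such a check, not code taken from AgentLLM or WebLLM. The names `NavigatorLike`, `hasWebGPUApi`, and `canUseWebGPU` are hypothetical; the only browser API assumed is the standard `navigator.gpu.requestAdapter()` call.

```typescript
// Minimal structural types so the sketch compiles without extra type packages.
// These interfaces are illustrative assumptions, not part of AgentLLM.
interface GpuLike {
  requestAdapter(): Promise<object | null>;
}
interface NavigatorLike {
  gpu?: GpuLike;
}

// Synchronous check: does the browser expose the WebGPU entry point at all?
function hasWebGPUApi(nav: NavigatorLike): boolean {
  return nav.gpu != null;
}

// Asynchronous check: can the browser actually provide a GPU adapter?
// requestAdapter() resolves to null when no suitable adapter is available.
async function canUseWebGPU(nav: NavigatorLike): Promise<boolean> {
  const gpu = nav.gpu;
  if (gpu == null) return false;
  try {
    const adapter = await gpu.requestAdapter();
    return adapter !== null;
  } catch {
    // Treat any failure while requesting an adapter as "not usable".
    return false;
  }
}
```

In a page, the global `navigator` object would be passed in. Accepting the object as a parameter keeps the check easy to exercise outside a browser.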

To create a clean and accessible sandbox, I chose to modify the popular AgentGPT project by replacing ChatGPT with WizardLM and changing the prompt mechanism. At its core, AgentGPT deploys autonomous agents that pursue an arbitrary goal (from basic tasks to complex problem solving) by running a loop of task generation and execution. It's a perfect match for our requirements: its agents do not use tools, which eliminates the complexity and unpredictability of external factors (present in full-blown implementations of other popular frameworks), and its GUI is friendly and feature-rich. The sandbox enables quick prototyping of the models' ability to break down tasks and plan ahead (feel free to try it!).
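The loop of task generation and execution can be sketched roughly as follows. This is an illustrative outline under stated assumptions, not AgentGPT's or AgentLLM's actual implementation: the `LanguageModel` interface, the prompt wording, and the `maxSteps` cap are all hypothetical.

```typescript
// Hypothetical interface: any text-completion backend (remote API or in-browser model).
interface LanguageModel {
  complete(prompt: string): Promise<string>;
}

interface TaskResult {
  task: string;
  result: string;
}

// Turn a free-form model reply into a clean task list:
// one task per line, with leading bullets or numbering stripped.
function parseTasks(reply: string): string[] {
  return reply
    .split("\n")
    .map((line) => line.replace(/^\s*(?:[-*]|\d+[.)])\s*/, "").trim())
    .filter((line) => line.length > 0);
}

// Generate tasks for the goal, execute them one at a time, and let the model
// append follow-up tasks, until the queue is empty or maxSteps tasks have run.
async function runAgent(
  model: LanguageModel,
  goal: string,
  maxSteps = 5
): Promise<TaskResult[]> {
  const queue = parseTasks(
    await model.complete(`Goal: ${goal}\nList the tasks needed, one per line.`)
  );
  const done: TaskResult[] = [];

  while (queue.length > 0 && done.length < maxSteps) {
    const task = queue.shift() as string;
    const result = await model.complete(
      `Goal: ${goal}\nTask: ${task}\nRespond with the result.`
    );
    done.push({ task, result });

    // Ask for new tasks in light of the latest result (assumed prompt wording).
    const followUps = await model.complete(
      `Goal: ${goal}\nCompleted: ${task}\nResult: ${result}\n` +
        `Remaining: ${queue.join("; ")}\nList any new tasks, one per line.`
    );
    queue.push(...parseTasks(followUps));
  }
  return done;
}
```

The step cap matters more with a small embedded model than with a hosted one, since a model that keeps proposing follow-up tasks would otherwise never let the loop finish.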

Consider supporting AgentGPT (the template for this project) by clicking here.

Recent advancements in large language models (LLMs) like OpenAI's ChatGPT and GPT-4 have led to the rise of autonomous agents that can act independently, without human intervention, to achieve specified goals. However, most notable LLMs require massive computational resources, restricting their operation to powerful remote servers. Their remote operation leads to a variety of issues concerning privacy, cost, and availability. This is where browser-native LLMs come in, changing the way we think about autonomous agents and their potential applications.

Disclaimer

This project is a proof-of-concept utilizing experimental technologies. It is by no means a production-ready implementation, and it should not be used for anything other than research. It's provided "as-is" without any warranty, expressed or implied. By using this software, you agree to assume all risks associated with its use, including but not limited to data loss, system failure, or any other issues that may arise.

- Install Chrome or Edge. Make sure you're up-to-date (at least M113 Chromium version).

- Launch the browser (preferably with `--enable-dawn-features=disable_robustness` for performance). For example, on macOS with Chrome, run the following command in the terminal:

/Applications/Google\ Chrome.app/Contents/MacOS/Google\ Chrome --enable-dawn-features=disable_robustness

- Navigate to AgentLLM

Since running the model locally is very taxing, lower-tier devices may not be able to run the demo. For the best experience, try AgentLLM on a powerful desktop device.
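The M113 requirement above could be checked up front with something like the sketch below. It is a heuristic, not part of AgentLLM: it parses the `Chrome/<version>` token that Chrome and Edge include in their user-agent strings, and the function names are hypothetical. User-agent strings can be spoofed or frozen, so checking for the WebGPU API itself is generally the more reliable signal.

```typescript
// Minimum Chromium milestone named in the steps above.
const MIN_CHROMIUM_MAJOR = 113;

// Extract the Chromium major version from a user-agent string.
// Returns null when the string carries no "Chrome/<version>" token.
function chromiumMajorVersion(userAgent: string): number | null {
  const match = /Chrome\/(\d+)\./.exec(userAgent);
  return match ? parseInt(match[1], 10) : null;
}

// True only for Chromium-based browsers at or above the minimum milestone.
function meetsMinimumVersion(userAgent: string): boolean {
  const major = chromiumMajorVersion(userAgent);
  return major !== null && major >= MIN_CHROMIUM_MAJOR;
}
```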

The easiest way to run AgentLLM locally is by using Docker. A convenient setup script is provided to help you get started.

./setup.sh --docker

To deploy using docker-compose:

./setup.sh --docker-compose

If you wish to develop AgentLLM locally, the easiest way is to use the provided setup script.

./setup.sh --local

🚧 You will need Node.js 18+ (LTS recommended) installed.

- Fork this project:

- Clone the repository:

git clone git@github.com:YOU_USER/AgentLLM.git

- Install dependencies:

cd AgentLLM

npm install

- Create a .env file with the following content:

🚧 The environment variables must match the following schema.

# Deployment Environment:

NODE_ENV=development

# Next Auth config:

# Generate a secret with `openssl rand -base64 32`

NEXTAUTH_SECRET=changeme

NEXTAUTH_URL=http://localhost:3000

DATABASE_URL=file:./db.sqlite

# Your OpenAI API key

OPENAI_API_KEY=changeme

- Modify prisma schema to use sqlite:

./prisma/useSqlite.sh

Note: This only needs to be done if you wish to use sqlite.

- Ready 🥳, now run:

# Sync the database with the Prisma schema

npx prisma db push

npm run dev

Set up AgentLLM in the cloud immediately by using GitHub Codespaces.

- From the GitHub repo, click the green "Code" button and select "Codespaces".

- Create a new Codespace or select a previous one you've already created.

- Codespaces opens in a separate tab in your browser.

- In the terminal, run

bash ./setup.sh --local

- When prompted in the terminal, add your OpenAI API key.

- Click "Open in browser" when the build process completes.

- To shut AgentLLM down, enter Ctrl+C in Terminal.

- To restart AgentLLM, run `npm run dev` in the terminal.

Run the project 🥳

npm run dev
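If the app fails to start, a malformed .env file is a common culprit. As a rough illustration of what matching the schema from the manual setup steps could mean, here is a small standalone validator. The variable names come from the .env listing; the specific rules (allowed `NODE_ENV` values, the URL pattern, non-empty checks) are assumptions for this sketch and may differ from the project's own validation.

```typescript
type Env = Record<string, string | undefined>;

// Assumed rules: these variables only need to be present and non-empty.
const REQUIRED_NON_EMPTY = ["NEXTAUTH_SECRET", "DATABASE_URL", "OPENAI_API_KEY"];
// Assumed set of accepted deployment environments.
const NODE_ENVS = ["development", "test", "production"];

// Returns a list of human-readable problems; an empty list means the
// environment satisfies the assumed rules.
function validateEnv(env: Env): string[] {
  const problems: string[] = [];

  const nodeEnv = env["NODE_ENV"] ?? "";
  if (NODE_ENVS.indexOf(nodeEnv) === -1) {
    problems.push(`NODE_ENV must be one of: ${NODE_ENVS.join(", ")}`);
  }
  if (!/^https?:\/\/\S+$/.test(env["NEXTAUTH_URL"] ?? "")) {
    problems.push("NEXTAUTH_URL must be an http(s) URL");
  }
  for (const name of REQUIRED_NON_EMPTY) {
    if ((env[name] ?? "").trim() === "") {
      problems.push(`${name} must be set`);
    }
  }
  return problems;
}
```

In a Node.js script this could be called as `validateEnv(process.env)` before starting the dev server, printing any problems it returns.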