This is the repo of our EMNLP'20 paper:

Scalable Multi-Hop Relational Reasoning for Knowledge-Aware Question Answering

Yanlin Feng*, Xinyue Chen*, Bill Yuchen Lin, Peifeng Wang, Jun Yan and Xiang Ren.

EMNLP 2020.

*=equal contribution

This repository also implements other graph encoding models for question answering (including vanilla LM finetuning).

- RelationNet

- R-GCN

- KagNet

- GConAttn

- KVMem

- MHGRN (a.k.a. MultiGRN)

Each model supports the following text encoders:

- LSTM

- GPT

- BERT

- XLNet

- RoBERTa

We provide preprocessed ConceptNet and pretrained entity embeddings for your own use. These resources are independent of the source code.

The following resources can be downloaded here.

| Description | Downloads | Notes |

|---|---|---|

| Entity Vocab | entity-vocab | one entity per line, space replaced by '_' |

| Relation Vocab | relation-vocab | one relation per line, merged |

| ConceptNet (CSV format) | conceptnet-5.6.0-csv | English tuples extracted from the full conceptnet with merged relations |

| ConceptNet (NetworkX format) | conceptnet-5.6.0-networkx | NetworkX pickled format, pruned by filtering out stop words |

Entity embeddings are packed into a matrix of shape (#ent, dim) and stored in numpy format. Use np.load to read the file. You may need to download the vocabulary files first.

| Embedding Model | Dimensionality | Description | Downloads |

|---|---|---|---|

| TransE | 100 | Obtained using OpenKE with optim=sgd, lr=1e-3, epoch=1000 | entities relations |

| NumberBatch | 300 | https://github.com/commonsense/conceptnet-numberbatch | entities |

| BERT-based | 1024 | Provided by Zhengwei | entities |
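The snippet below is a minimal sketch of pairing an embedding matrix with the entity vocabulary. It assumes that line i of the vocabulary file names the entity stored in row i of the matrix; this alignment is an assumption, so check it against the files you download. The function names and the file names in the usage note are placeholders, not part of this repository.

```python
import numpy as np


def load_entity_embeddings(vocab_path, emb_path):
    """Return (entities, matrix, index) for a vocab file and a .npy matrix.

    Assumes line i of the vocab file names the entity stored in row i.
    """
    with open(vocab_path, encoding="utf-8") as f:
        entities = [line.strip() for line in f if line.strip()]
    matrix = np.load(emb_path)  # expected shape: (#ent, dim)
    if matrix.shape[0] != len(entities):
        raise ValueError(
            f"{len(entities)} vocab entries but {matrix.shape[0]} embedding rows"
        )
    index = {entity: row for row, entity in enumerate(entities)}
    return entities, matrix, index


def lookup(phrase, matrix, index):
    """Fetch the vector for a phrase; the vocab replaces spaces with '_'."""
    return matrix[index[phrase.replace(" ", "_")]]
```

For example, `entities, matrix, index = load_entity_embeddings("entity-vocab.txt", "transe-entities.npy")` followed by `lookup("ice cream", matrix, index)` would return the row for `ice_cream`, provided that entity is in the vocabulary. Both file names are hypothetical.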

- Python >= 3.6

- PyTorch == 1.1.0

- transformers == 2.0.0

- tqdm

- dgl == 0.3.1 (GPU version)

- networkx == 2.3

Run the following commands to create a conda environment (assuming CUDA 10):

conda create -n krqa python=3.6 numpy matplotlib ipython

source activate krqa

conda install pytorch=1.1.0 torchvision cudatoolkit=10.0 -c pytorch

pip install dgl-cu100==0.3.1

pip install transformers==2.0.0 tqdm networkx==2.3 nltk spacy==2.1.6

python -m spacy download en

First, you need to download all the necessary data in order to train the model:

git clone https://github.com/INK-USC/MHGRN.git

cd MHGRN

bash scripts/download.sh

The script will:

- Download the CommonsenseQA dataset

- Download ConceptNet

- Download pretrained TransE embeddings

To preprocess the data, run:

python preprocess.py

By default, all available CPU cores will be used for multi-processing in order to speed up the process. Alternatively, you can use "-p" to specify the number of processes to use:

python preprocess.py -p 20

The script will:

- Convert the original datasets into .jsonl files (stored in data/csqa/statement/)

- Extract English relations from ConceptNet, merge the original 42 relation types into 17 types

- Identify all mentioned concepts in the questions and answers

- Extract subgraphs for each q-a pair
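The "-p" behaviour described above (use every core unless a process count is given) can be sketched as follows. This is an illustration of the general pattern with Python's standard library, not the code in preprocess.py; the long option name `--nprocs` and the `square` worker are hypothetical stand-ins.

```python
import argparse
import multiprocessing


def parse_args(argv=None):
    parser = argparse.ArgumentParser()
    # "-p" mirrors the flag described above; the long name is a placeholder.
    parser.add_argument("-p", "--nprocs", type=int, default=None,
                        help="number of worker processes (default: all cores)")
    return parser.parse_args(argv)


def resolve_num_processes(requested):
    """Fall back to every available core when no count is given."""
    return requested if requested else multiprocessing.cpu_count()


def square(x):
    # Stand-in for a real per-example preprocessing function.
    return x * x


def parallel_map(func, items, num_processes):
    """Apply func to every item using a pool of worker processes."""
    with multiprocessing.Pool(num_processes) as pool:
        return pool.map(func, items)
```

A script would typically call `parallel_map(square, range(8), resolve_num_processes(parse_args().nprocs))` under an `if __name__ == "__main__":` guard, which `multiprocessing` needs on platforms that spawn rather than fork worker processes.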

The preprocessing procedure takes approximately 3 hours on a 40-core CPU server. Most intermediate files are in .jsonl or .pk format and stored in various folders. The resulting file structure will look like:

.
├── README.md
└── data/
    ├── cpnet/                 (preprocessed ConceptNet)
    ├── glove/                 (pretrained GloVe embeddings)
    ├── transe/                (pretrained TransE embeddings)
    └── csqa/
        ├── train_rand_split.jsonl
        ├── dev_rand_split.jsonl
        ├── test_rand_split_no_answers.jsonl
        ├── statement/             (converted statements)
        ├── grounded/              (grounded entities)
        ├── paths/                 (unpruned/pruned paths)
        ├── graphs/                (extracted subgraphs)
        ├── ...

To search the parameters for RoBERTa-Large on CommonsenseQA:

bash scripts/param_search_lm.sh csqa roberta-large

To search the parameters for BERT+RelationNet on CommonsenseQA:

bash scripts/param_search_rn.sh csqa bert-large-uncased

Each graph encoding model is implemented in a single script:

| Graph Encoder | Script | Description |

|---|---|---|

| None | lm.py | w/o knowledge graph |

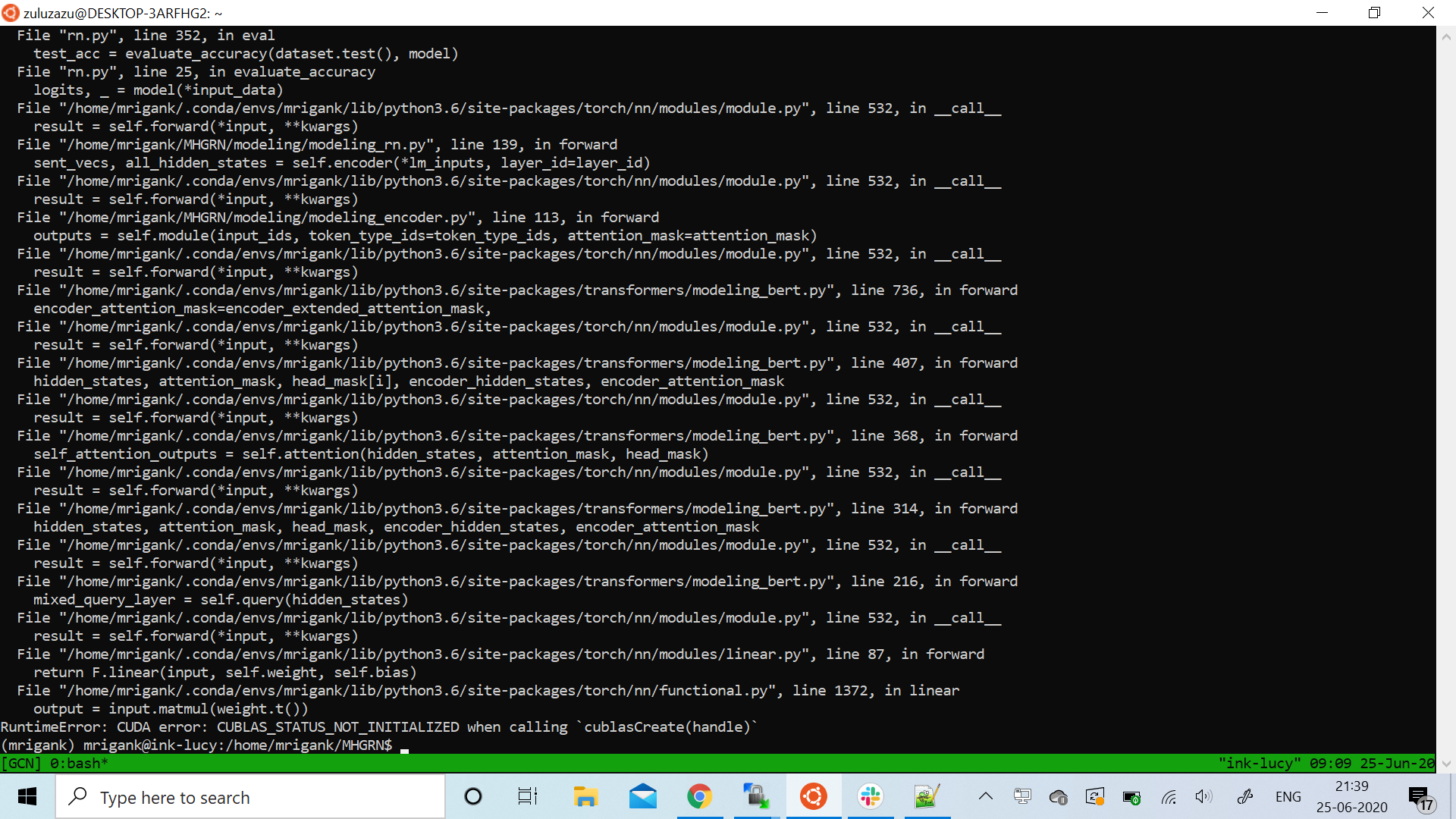

| Relation Network | rn.py | |

| R-GCN | rgcn.py | Use --gnn_layer_num and --num_basis to specify #layer and #basis |

| KagNet | kagnet.py | Adapted from https://github.com/INK-USC/KagNet, still tuning |

| Gcon-Attn | gconattn.py | |

| KV-Memory | kvmem.py | |

| MHGRN | grn.py | |

Some important command line arguments are listed as follows (run python {lm,rn,rgcn,...}.py -h for a complete list):

| Arg | Values | Description | Notes |
|---|---|---|---|
| --mode | {train, eval, ...} | Training or Evaluation | default=train |
| -enc, --encoder | {lstm, openai-gpt, bert-large-uncased, roberta-large, ...} | Text Encoder | Model names (except for lstm) are the ones used by huggingface-transformers, default=bert-large-uncased |
| --optim | {adam, adamw, radam} | Optimizer | default=radam |
| -ds, --dataset | {csqa, obqa} | Dataset | default=csqa |
| -ih, --inhouse | {0, 1} | Run In-house Split | default=1, only applicable to CSQA |
| --ent_emb | {transe, numberbatch, tzw} | Entity Embeddings | default=tzw (BERT-based node features) |
| -sl, --max_seq_len | {32, 64, 128, 256} | Maximum Sequence Length | Use 128 or 256 for datasets that contain long sentences! default=64 |
| -elr, --encoder_lr | {1e-5, 2e-5, 3e-5, 6e-5, 1e-4} | Text Encoder LR | dataset specific and text encoder specific, default values in utils/parser_utils.py |
| -dlr, --decoder_lr | {1e-4, 3e-4, 1e-3, 3e-3} | Graph Encoder LR | dataset specific and model specific, default values in {model}.py |
| --lr_schedule | {fixed, warmup_linear, warmup_constant} | Learning Rate Schedule | default=fixed |
| -me, --max_epochs_before_stop | {2, 4, 6} | Early Stopping Patience | default=2 |
| --unfreeze_epoch | {0, 3} | Freeze Text Encoder for N epochs | model specific |
| -bs, --batch_size | {16, 32, 64} | Batch Size | default=32 |
| --save_dir | str | Checkpoint Directory | model specific |
| --seed | {0, 1, 2, 3} | Random Seed | default=0 |
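To make the short/long flag pairs and defaults in the table concrete, here is a minimal `argparse` sketch covering a subset of them. It only mirrors the table and is not the parser defined in utils/parser_utils.py; the `build_parser` name, the `choices` restrictions, and the help strings are assumptions made for illustration.

```python
import argparse


def build_parser():
    """Illustrative subset of the options listed in the table."""
    parser = argparse.ArgumentParser(description="Illustrative subset of the CLI")
    parser.add_argument("--mode", default="train", help="train or eval")
    parser.add_argument("-enc", "--encoder", default="bert-large-uncased",
                        help="text encoder name")
    parser.add_argument("-ds", "--dataset", default="csqa",
                        choices=["csqa", "obqa"])
    parser.add_argument("-ih", "--inhouse", type=int, default=1, choices=[0, 1])
    parser.add_argument("-sl", "--max_seq_len", type=int, default=64)
    parser.add_argument("-bs", "--batch_size", type=int, default=32)
    parser.add_argument("--seed", type=int, default=0)
    return parser
```

With this sketch, `build_parser().parse_args(["-enc", "roberta-large", "-sl", "128"])` overrides the encoder and sequence length and leaves the remaining options at the defaults shown in the table.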

For example, run the following command to train a RoBERTa-Large model on CommonsenseQA:

python lm.py --encoder roberta-large --dataset csqa

To train a RelationNet with BERT-Large-Uncased as the encoder:

python rn.py --encoder bert-large-uncased

To reproduce the reported results of MultiGRN on CommonsenseQA official set:

bash scripts/run_grn_csqa.sh

To evaluate a trained model (you need to specify --save_dir if the checkpoint is not stored in the default directory):

python {lm,rn,rgcn,...}.py --mode eval [ --save_dir path/to/directory/ ]

To use your own dataset:

- Convert your dataset to {train,dev,test}.statement.jsonl in .jsonl format (see data/csqa/statement/train.statement.jsonl)

- Create a directory in data/{yourdataset}/ to store the .jsonl files

- Modify preprocess.py and perform subgraph extraction for your data

- Modify utils/parser_utils.py to support your own dataset

- Tune encoder_lr, decoder_lr and other important hyperparameters, modify utils/parser_utils.py and {model}.py to record the tuned hyperparameters
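As a starting point for the conversion step, the sketch below writes and reads the .jsonl layout (one JSON object per line). The field names in `example` are hypothetical placeholders chosen for illustration; copy the actual schema from data/csqa/statement/train.statement.jsonl rather than from this snippet.

```python
import json


def write_jsonl(records, path):
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


def read_jsonl(path):
    """Read a .jsonl file back into a list of dicts, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# Hypothetical record layout, for illustration only.
example = {
    "id": "q1",
    "question": "Where would you store milk?",
    "choices": ["fridge", "oven"],
    "answer": 0,
}
```

Calling `write_jsonl([example], "train.statement.jsonl")` produces a one-line file that `read_jsonl` loads back unchanged, which makes for a quick round-trip check before running the preprocessing on your own data.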