[Model] Model Compiled

Time taken: 0:00:00.809316

[Model] Training Started

[Model] 2 epochs, 32 batch size, 124 batches per epoch

Epoch 1/2

124/124 [==============================] - 45s 363ms/step - loss: 0.0022

Epoch 2/2

1/124 [..............................] - ETA: 36s - loss: 6.4717e-04

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

<ipython-input-27-e3c645699881> in <module>()

76

77 if __name__=='__main__':

---> 78 main()

<ipython-input-27-e3c645699881> in main()

58 batch_size = configs['training']['batch_size'],

59 steps_per_epoch = steps_per_epoch,

---> 60 save_dir = configs['model']['save_dir']

61 )

62

/notebooks/storage/core/model.py in train_generator(self, data_gen, epochs, batch_size, steps_per_epoch, save_dir)

81 epochs=epochs,

82 callbacks=callbacks,

---> 83 workers=1

84 )

85

/usr/local/lib/python3.5/dist-packages/keras/legacy/interfaces.py in wrapper(*args, **kwargs)

89 warnings.warn('Update your `' + object_name +

90 '` call to the Keras 2 API: ' + signature, stacklevel=2)

---> 91 return func(*args, **kwargs)

92 wrapper._original_function = func

93 return wrapper

/usr/local/lib/python3.5/dist-packages/keras/engine/training.py in fit_generator(self, generator, steps_per_epoch, epochs, verbose, callbacks, validation_data, validation_steps, class_weight, max_queue_size, workers, use_multiprocessing, shuffle, initial_epoch)

1413 use_multiprocessing=use_multiprocessing,

1414 shuffle=shuffle,

-> 1415 initial_epoch=initial_epoch)

1416

1417 @interfaces.legacy_generator_methods_support

/usr/local/lib/python3.5/dist-packages/keras/engine/training_generator.py in fit_generator(model, generator, steps_per_epoch, epochs, verbose, callbacks, validation_data, validation_steps, class_weight, max_queue_size, workers, use_multiprocessing, shuffle, initial_epoch)

211 outs = model.train_on_batch(x, y,

212 sample_weight=sample_weight,

--> 213 class_weight=class_weight)

214

215 outs = to_list(outs)

/usr/local/lib/python3.5/dist-packages/keras/engine/training.py in train_on_batch(self, x, y, sample_weight, class_weight)

1207 x, y,

1208 sample_weight=sample_weight,

-> 1209 class_weight=class_weight)

1210 if self._uses_dynamic_learning_phase():

1211 ins = x + y + sample_weights + [1.]

/usr/local/lib/python3.5/dist-packages/keras/engine/training.py in _standardize_user_data(self, x, y, sample_weight, class_weight, check_array_lengths, batch_size)

747 feed_input_shapes,

748 check_batch_axis=False, # Don't enforce the batch size.

--> 749 exception_prefix='input')

750

751 if y is not None:

/usr/local/lib/python3.5/dist-packages/keras/engine/training_utils.py in standardize_input_data(data, names, shapes, check_batch_axis, exception_prefix)

125 ': expected ' + names[i] + ' to have ' +

126 str(len(shape)) + ' dimensions, but got array '

--> 127 'with shape ' + str(data_shape))

128 if not check_batch_axis:

129 data_shape = data_shape[1:]

ValueError: Error when checking input: expected lstm_1_input to have 3 dimensions, but got array with shape (8, 1)

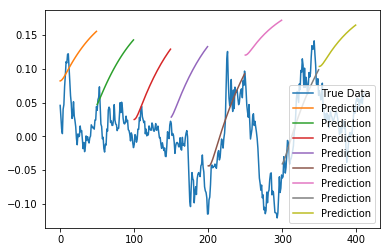

Did some debugging using the example config for stock market prediction. For each step in epoch 1, generate_train_batch yields two arrays x_batch and y_batch of shape (32, 49, 1) and (32, 1) respectively.