Version 1.5.0

Download as single header from here

transwarp is a header-only C++ library for task concurrency. It enables you to free your functors from explicit threads and transparently manage dependencies. Under the hood, a directed acyclic graph is built that allows for efficient traversal and type-safe dependencies. Use transwarp if you want to model your dependent operations in a graph of tasks and intend to invoke the graph more than once.

A task in transwarp is defined through a functor, parent tasks, and an optional name. Chaining tasks creates an acyclic graph. A task can consume the results of all of its parents or of just one of them, or it can simply wait for their completion, similar to continuations. transwarp supports executors either per task or globally when scheduling the tasks in the graph. Executors are decoupled from tasks and simply provide a way of running a given function.

transwarp is designed for ease of use, portability, and scalability. It is written in C++11 and only depends on the standard library. Just copy src/transwarp.h to your project and off you go!

Tested with GCC, Clang, and Visual Studio.


The master branch is always at the latest release. The develop branch is at the latest release plus some delta.

GCC/Clang on master and develop

Visual Studio on master and develop

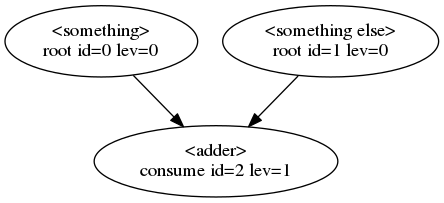

This example creates three tasks and connects them with each other to form a two-level graph. The tasks are then scheduled twice for computation while using 4 threads.

#include <fstream>
#include <iostream>
#include "transwarp.h"

namespace tw = transwarp;

double add_em_up(double x, int y) {
    return x + y;
}

int main() {
    // building the task graph
    auto task1 = tw::make_value_task("something", 13.3);
    auto task2 = tw::make_value_task("something else", 42);
    auto task3 = tw::make_task(tw::consume, "adder", add_em_up, task1, task2);

    // creating a dot-style graph for visualization
    const auto graph = task3->get_graph();
    std::ofstream("basic_with_three_tasks.dot") << tw::to_string(graph);

    // schedule_all() can now be called as often as desired. The task graph
    // only has to be built once

    // parallel execution with 4 threads for independent tasks
    tw::parallel executor{4};

    task3->schedule_all(executor); // schedules all tasks for execution
    std::cout << "result = " << task3->get() << std::endl; // result = 55.3

    // modifying data input
    task1->set_value(15.8);
    task2->set_value(43);

    task3->schedule_all(executor); // re-schedules all tasks for execution
    std::cout << "result = " << task3->get() << std::endl; // result = 58.8
}

The resulting graph of this example is written to basic_with_three_tasks.dot in Graphviz dot format.

Every bubble represents a task and every arrow an edge between two tasks. The first line within a bubble is the task name. The second line denotes the task type followed by the task id and the task level in the graph.

This is a brief API doc of transwarp. In the following we will use tw as a namespace alias for transwarp.

transwarp supports seven different task types:

root,        // The task has no parents
accept,      // The task's functor accepts all parent futures
accept_any,  // The task's functor accepts the first parent future that becomes ready
consume,     // The task's functor consumes all parent results
consume_any, // The task's functor consumes the first parent result that becomes ready
wait,        // The task's functor takes no arguments but waits for all parents to finish
wait_any,    // The task's functor takes no arguments but waits for the first parent to finish

The task type is passed as the first parameter to make_task, e.g., to create a consume task simply do this:

auto task = tw::make_task(tw::consume, functor, parent1, parent2);

where functor denotes some callable and parent1/2 the parent tasks. Note that functor in this case has to accept two arguments that match the result types of the parent tasks.

Tasks can be freely chained together using the different task types. The only restriction is that tasks without parents have to be either labeled as root tasks or defined as value tasks.

The accept and accept_any types give you the greatest flexibility but require your functor to take std::shared_future<T> types. The consume and consume_any task types, however, require your functor to take the direct result types of the parent tasks.

If you have a task that doesn't require a functor and should only ever return a given value or throw an exception, then a value task can be used:

auto task = tw::make_value_task(42);

A call to task->get() will now always return 42.

Once a task is created it can be scheduled just by itself:

auto task = tw::make_task(tw::root, functor);
task->schedule();

which, if nothing else is specified, will run the task on the current thread. However, using the built-in parallel executor, the task can be pushed into a thread pool and executed asynchronously:

tw::parallel executor{4}; // thread pool with 4 threads
auto task = tw::make_task(tw::root, functor);
task->schedule(executor);

Regardless of how you schedule, the task result can be retrieved through:

std::cout << task->get() << std::endl;

When chaining multiple tasks together, a directed acyclic graph is built in which every task can be scheduled individually. However, in many scenarios it is useful to compute all tasks in the right order with a single call:

auto parent1 = tw::make_task(tw::root, foo); // foo is a functor
auto parent2 = tw::make_task(tw::root, bar); // bar is a functor
auto task = tw::make_task(tw::consume, functor, parent1, parent2);
task->schedule_all(); // schedules all parents and itself

which can also be scheduled using an executor, for instance:

tw::parallel executor{4};
task->schedule_all(executor);

which will run those tasks in parallel that do not depend on each other.

A task can be canceled by calling task->cancel(true), which will, by default, only affect tasks that are not yet running. However, if you create a functor that inherits from transwarp::functor, you get access to the transwarp_cancel_point function. This function can be used to denote well-defined points where the functor will exit when the associated task is canceled.

We have seen that we can pass executors to schedule() and schedule_all(). Additionally, they can be assigned to a task directly:

auto exec1 = std::make_shared<tw::parallel>(2);
task->set_executor(exec1);
tw::sequential exec2;
task->schedule(exec2); // exec1 will be used to schedule the task

The task-specific executor will always be preferred over other executors when scheduling tasks.

transwarp defines an executor interface which can be implemented to perform custom behavior when scheduling tasks. The interface looks like this:

class executor {
public:
    virtual ~executor() = default;
    virtual std::string get_name() const = 0;
    virtual void execute(const std::function<void()>& functor, const std::shared_ptr<tw::node>& node) = 0;
};

where functor denotes the function to be run and node an object that holds meta-data of the current task.

This comparison should serve as nothing more than a quick overview of a few portable, open-source libraries for task parallelism in C++. By no means is this an exhaustive summary of the features those libraries provide.

C++11/14/17

These language standards only provide a basic way of dealing with tasks. The simplest way to launch an asynchronous task is through:

auto future = std::async(std::launch::async, functor, param1, param2);

which will run the given functor with param1 and param2 on a separate thread and return a std::future object. There are other primitives, such as std::promise and std::packaged_task, that assist with constructing asynchronous tasks. The latter is used internally by transwarp to schedule functions.

Unfortunately, there is no way to chain futures together to create a graph of dependent operations. There is also no way of easily scheduling these operations on certain, user-defined threads. The standard library does, however, provide all the tools to build a framework, such as transwarp, which implements these features.

C++20 and beyond

There are proposals through the Concurrency TS to extend std::future and std::shared_future to support continuations, e.g.

std::future<int> f1 = std::async([]() { return 123; });
std::future<std::string> f2 = f1.then([](std::future<int> f) { return std::to_string(f.get()); });

In addition, there is talk about adding support for when_all and when_any. These features combined would make it possible to create a dependency graph much like the one in transwarp. Future continuations will, however, not support re-scheduling of tasks in the graph but rather serve as one-shot operations. Also, there currently seem to be no efforts towards custom executors.

The above example in transwarp would look something like this:

tw::parallel executor{4};
auto t1 = tw::make_value_task(123);
auto t2 = tw::make_task(tw::consume, [](int x) { return std::to_string(x); }, t1);
t2->schedule_all(executor);

Boost

Boost supports continuations much like the ones proposed in the above Concurrency TS. In addition, Boost supports custom executors that can be passed into overloaded versions of future::then and async. The custom executor is expected to implement a submit method accepting a function<void()>, which then runs the given function, possibly asynchronously. Hence, this is quite similar to what transwarp does.

A difference to point out is that transwarp uses std::shared_future to implement transfer between tasks, which may be more expensive in certain situations compared to std::future. Note that a call to get() on a future will either return a reference or a moved result, while the same call on a shared future will either provide a reference or a copy but never move.

Boost also supports a form of scheduling of tasks. This allows users to schedule tasks when certain events take place, such as reaching a certain time. In transwarp, tasks are scheduled by calling schedule or schedule_all on the task object.

HPX

HPX implements all of the features proposed in the Concurrency TS and currently available in Boost regarding continuations. It also supports custom executors and goes slightly beyond what Boost has to offer. This blog post has a nice summary.

Neither Boost nor HPX seem to support task graphs for multiple invocations.

TBB

TBB implements its own version of task-based programming, for instance:

int Fib(int n) {
    if (n < 2) {
        return n;
    } else {
        int x, y;
        tbb::task_group g;
        g.run([&]{ x = Fib(n-1); }); // spawn a task
        g.run([&]{ y = Fib(n-2); }); // spawn another task
        g.wait();                    // wait for both tasks to complete
        return x+y;
    }
}

which computes the n-th Fibonacci number in parallel. The corresponding code in transwarp would look like this:

int Fib(int n) {
    if (n < 2) {
        return n;
    } else {
        int x, y;
        auto t1 = tw::make_task(tw::root, [&]{ x = Fib(n-1); });
        auto t2 = tw::make_task(tw::root, [&]{ y = Fib(n-2); });
        auto t3 = tw::make_task(tw::wait, []{}, t1, t2);
        t3->schedule_all();
        t3->wait();
        return x+y;
    }
}

Note that for any real-world application the graph of tasks should be created upfront and not on the fly. This is just a silly toy example.

TBB supports both automatic and fine-grained task scheduling. Creating an acyclic graph of tasks appears to be somewhat cumbersome and is not nearly as straightforward as in transwarp. This post shows an example of such a graph. Simple continuations suffer from the same usability problem, though it is possible to use them.

Stlab

Stlab appears to be the library in the list that's the closest to what transwarp is trying to achieve. It supports future continuations in multiple directions (essentially a graph) and also canceling futures. Stlab splits its implementation into futures for single-shot graphs and channels for multiple invocations. A simple example using channels:

stlab::sender<int> send;
stlab::receiver<int> receive;

std::tie(send, receive) = stlab::channel<int>(stlab::default_executor);

std::atomic_int v{0};

auto result = receive
    | stlab::executor{ stlab::immediate_executor } & [](int x) { return x * 2; }
    | [&v](int x) { v = x; };

receive.set_ready();

send(1);

// Waiting just for illustration purposes
while (v == 0) {
    std::this_thread::sleep_for(std::chrono::milliseconds(1));
}

This will take the input provided via send, multiply it by two, and then assign the result to v. The corresponding code in transwarp would look like this:

auto t1 = tw::make_value_task(0);
auto t2 = tw::make_task(tw::consume, [](int x) { return x * 2; }, t1);
t1->set_value(1);
t2->schedule_all();
int v = t2->get();

As can be seen from the comparison, transwarp shares many similarities with existing libraries. The notion of chaining dependent, possibly asynchronous operations and scheduling them using custom executors is a common use case. To summarize:

Use transwarp if:

- you want to model your dependent operations in a task graph

- you construct the task graph upfront and invoke it multiple times

- you possibly now or later want to run some tasks on different threads

- you want a header-only task library that is easy to use and has no dependencies

Don't use transwarp if:

- you construct the task graph on the fly for one-shot operations (use futures instead)

- significant chunks of memory are copied when invoking dependent tasks (transwarp uses shared_futures to communicate results between tasks)

Contact me if you have any questions or suggestions to make this a better library!

You can post on Gitter, submit a pull request, create a GitHub issue, or simply email me at [email protected].

If you're serious about contributing code to transwarp (which would be awesome!) then please submit a pull request and keep in mind that:

- all new development happens on the develop branch while the master branch is at the latest release

- unit tests should be added for all new code by extending the existing unit test suite

- C++ code uses spaces throughout