
Label Sleuth is an open source no-code system for text annotation and building text classifiers. With Label Sleuth, domain experts (e.g., physicians, lawyers, psychologists) can quickly create custom NLP models by themselves, with no dependency on NLP experts.

Creating real-world NLP models typically requires a combination of two kinds of expertise: deep knowledge of the target domain, provided by domain experts, and machine learning knowledge, provided by NLP experts. Domain experts are therefore dependent on NLP experts. Label Sleuth aims to eliminate this dependency. With an intuitive UX, it guides domain experts through the process of labeling the data and building NLP models tailored to their specific needs. As domain experts label examples within the system, machine learning models are automatically trained in the background, make predictions on new examples, and suggest which examples the users should label next.

Label Sleuth is a no-code system, so no knowledge of machine learning is needed, and obtaining a model is fast: from task definition to a working model in just a few hours!


Setting up a development environment

Follow the instructions on our website.

The system requires Python 3.8 or 3.9 (other versions are currently not supported and may cause issues).
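If you are unsure which interpreter is active, a quick check like the following can confirm that it meets this requirement. This is a minimal sketch and not part of Label Sleuth; `is_supported_python` is a hypothetical helper name, and the supported set simply mirrors the 3.8/3.9 requirement stated above.

```python
import sys

# Versions listed as supported in this README; other versions may cause issues.
SUPPORTED_VERSIONS = {(3, 8), (3, 9)}


def is_supported_python(version_info=sys.version_info) -> bool:
    """Return True if the (major, minor) part of version_info is 3.8 or 3.9."""
    return tuple(version_info[:2]) in SUPPORTED_VERSIONS


if __name__ == "__main__":
    if not is_supported_python():
        major, minor = sys.version_info[:2]
        print(f"Python {major}.{minor} is not supported; please use Python 3.8 or 3.9.")
```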

- Clone the repository:

  `git clone git@github.com:label-sleuth/label-sleuth.git`

- cd to the cloned directory:

  `cd label-sleuth`

- Install the project dependencies using `conda` (recommended) or `pip`:

Installing with conda

- Install Anaconda: https://docs.anaconda.com/anaconda/install/index.html

- Restart your console.

- Use the following commands to create a new Anaconda environment and install the requirements:

      # Create and activate a virtual environment:
      conda create --yes -n label-sleuth python=3.9
      conda activate label-sleuth

      # Install requirements
      pip install -r requirements.txt

Installing with pip

Assuming Python 3.8/3.9 is already installed:

- Install pip: https://pip.pypa.io/en/stable/installation/

- Restart your console.

- Install the requirements:

  `pip install -r requirements.txt`

- Start the Label Sleuth server by running `python -m label_sleuth.start_label_sleuth`.

  - By default, all project files are written to `<home_directory>/label-sleuth`. To change the directory, add `--output_path <your_output_path>`.
  - You can add `--load_sample_corpus wiki_animals_2000_pages` to load a sample corpus into the system at startup. This fetches a collection of Wikipedia documents from the data-examples repository.
  - By default, the host is `localhost`, which exposes the server only on the host machine. To expose the server to external communication, add `--host <IP>`; for example, `--host 0.0.0.0` listens on all IPs.
  - The default port is 8000. To change the port, add `--port <port_number>` to the command.
  - The system can then be accessed by browsing to http://localhost:8000 (or http://localhost:<port_number>).
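The startup options above can be combined in a single command. As an illustration, the following Python sketch assembles the argument list and the resulting local URL. `build_start_command` and `server_url` are hypothetical helpers, not part of Label Sleuth, and only the flags documented above are used. The sketch uses `sys.executable` instead of a bare `python` so that the server starts with the same interpreter (and therefore the same conda or pip environment) as the calling script.

```python
import sys
from typing import List, Optional


def build_start_command(output_path: Optional[str] = None,
                        sample_corpus: Optional[str] = None,
                        host: Optional[str] = None,
                        port: Optional[int] = None) -> List[str]:
    """Assemble the documented start command; omitted options keep the server defaults."""
    cmd = [sys.executable, "-m", "label_sleuth.start_label_sleuth"]
    if output_path is not None:
        cmd += ["--output_path", output_path]
    if sample_corpus is not None:
        cmd += ["--load_sample_corpus", sample_corpus]
    if host is not None:
        cmd += ["--host", host]
    if port is not None:
        cmd += ["--port", str(port)]
    return cmd


def server_url(port: int = 8000) -> str:
    """URL for browsing to the server from the same machine (default port 8000)."""
    return f"http://localhost:{port}"
```

For example, `subprocess.run(build_start_command(sample_corpus="wiki_animals_2000_pages", port=8080))` would start the server with the sample corpus on port 8080, reachable at `server_url(8080)`.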

The repository consists of a backend library, written in Python, and a frontend that uses React. A compiled version of the frontend can be found under label_sleuth/build.

See our website for a simple tutorial that illustrates how to use the system with a sample dataset of Wikipedia pages. Before starting the tutorial, make sure you pre-load the sample dataset by running:

`python -m label_sleuth.start_label_sleuth --load_sample_corpus wiki_animals_2000_pages`

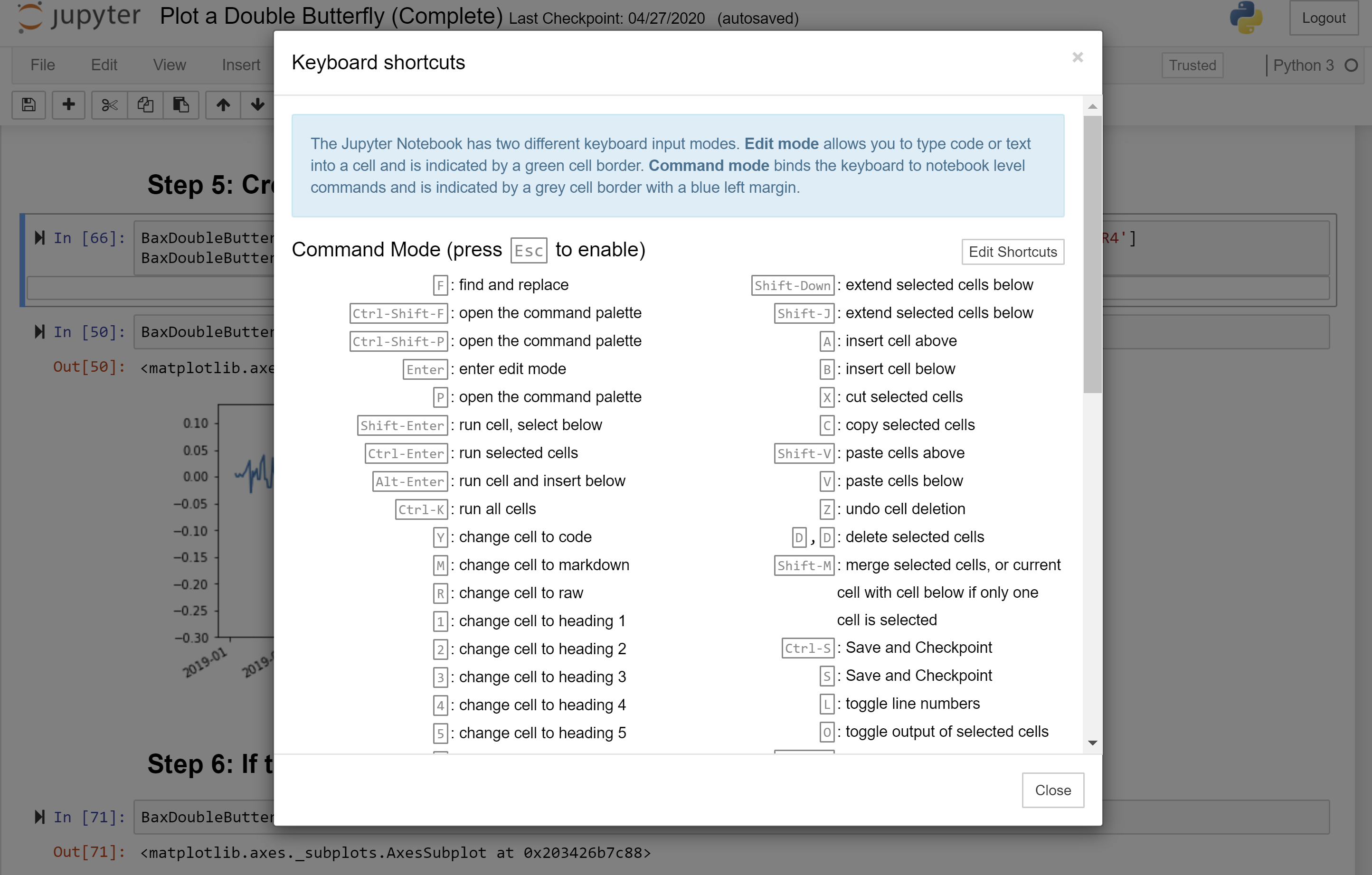

The configurable parameters of the system are specified in a JSON file. The default configuration file is `label_sleuth/config.json`.

A custom configuration can be applied by passing the `--config_path` parameter to the `start_label_sleuth` command, e.g., `python -m label_sleuth.start_label_sleuth --config_path <path_to_my_configuration_json>`

Alternatively, it is possible to override specific configuration parameters at startup by adding them to the run command, e.g., `python -m label_sleuth.start_label_sleuth --changed_element_threshold 100`
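One way to prepare a file for `--config_path` is to start from the defaults and change only what you need. The sketch below is an illustration under an assumption: it treats the default configuration as a flat JSON object keyed by the parameter names listed in the table below. `write_custom_config` is a hypothetical helper, not part of Label Sleuth.

```python
import json
from pathlib import Path
from typing import Any, Dict, Mapping


def write_custom_config(default_config_path: str,
                        custom_config_path: str,
                        overrides: Mapping[str, Any]) -> Dict[str, Any]:
    """Copy the default JSON config, apply overrides, and write the result to a new file."""
    # Assumption: the default file is a flat JSON object of parameter name -> value.
    config = json.loads(Path(default_config_path).read_text(encoding="utf-8"))
    config.update(overrides)  # overridden parameters replace the default values
    Path(custom_config_path).write_text(json.dumps(config, indent=2), encoding="utf-8")
    return config
```

For example, `write_custom_config("label_sleuth/config.json", "my_config.json", {"changed_element_threshold": 100})` followed by `python -m label_sleuth.start_label_sleuth --config_path my_config.json` keeps the override in a file instead of on the command line.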

Configurable parameters:

| Parameter | Description |

|---|---|

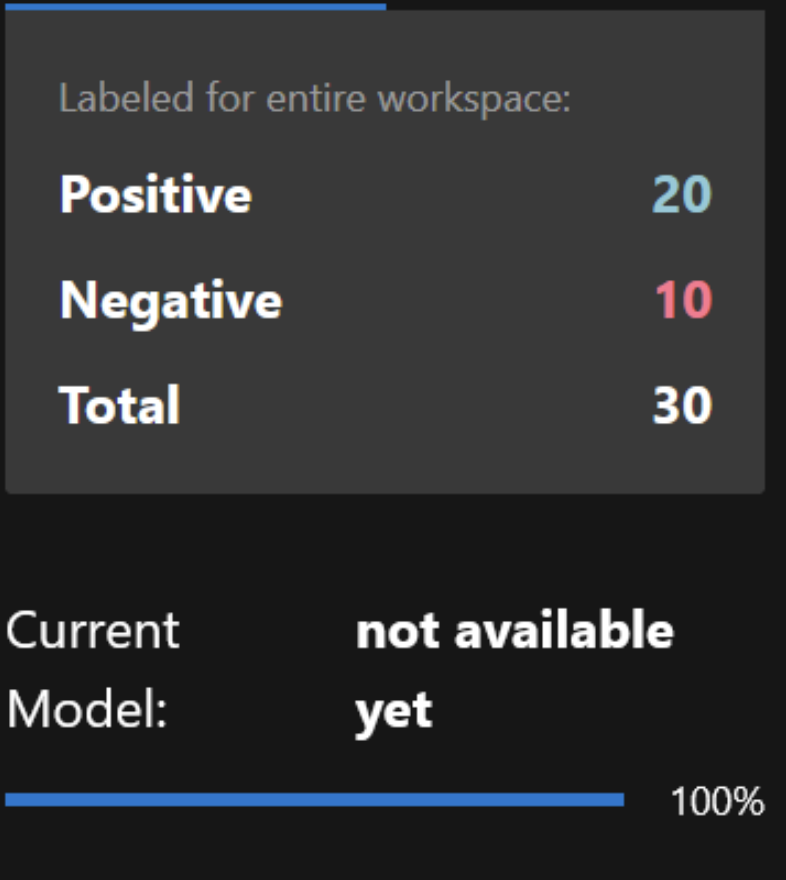

first_model_positive_threshold |

Number of elements that must be assigned a positive label for the category in order to trigger the training of a classification model. See also: The training invocation documentation. |

first_model_negative_threshold |

Number of elements that must be assigned a negative label for the category in order to trigger the training of a classification model. See also: The training invocation documentation. |

changed_element_threshold |

Number of changes in user labels for the category -- relative to the last trained model -- that are required to trigger the training of a new model. A change can be a assigning a label (positive or negative) to an element, or changing an existing label. Note that first_model_positive_threshold must also be met for the training to be triggered. See also: The training invocation documentation. |

training_set_selection_strategy |

Strategy to be used from TrainingSetSelectionStrategy. A TrainingSetSelectionStrategy determines which examples will be sent in practice to the classification models at training time - these will not necessarily be identical to the set of elements labeled by the user. For currently supported implementations see get_training_set_selector(). See also: The training set selection documentation. |

model_policy |

Policy to be used from ModelPolicies. A ModelPolicy determines which type of classification model(s) will be used, and when (e.g. always / only after a specific number of iterations / etc.). See also: The model selection documentation. |

active_learning_strategy |

Strategy to be used from ActiveLearningCatalog. An ActiveLearner module implements the strategy for recommending the next elements to be labeled by the user, aiming to increase the efficiency of the annotation process. For currently supported implementations see the ActiveLearningCatalog. See also: The active learning documentation. |

precision_evaluation_size |

Sample size to be used for estimating the precision of the current model. To be used in future versions of the system, which will provide built-in evaluation capabilities. |

apply_labels_to_duplicate_texts |

Specifies how to treat elements with identical texts. If true, assigning a label to an element will also assign the same label to other elements which share the exact same text; if false, the label will only be assigned to the specific element labeled by the user. |

language |

Specifies the chosen system-wide language. This determines some language-specific resources that will be used by models and helper functions (e.g., stop words). The list of supported languages can be found in Languages. We welcome contributions of additional languages. |

login_required |

Specifies whether or not using the system will require user authentication. If true, the configuration file must also include a users parameter. |

users |

Only relevant if login_required is true. Specifies the pre-defined login information in the following format: "users":[* The list of usernames is static and currently all users have access to all the workspaces in the system. |
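The three threshold parameters jointly decide when a model is trained. The following sketch encodes one plausible reading of the table: it assumes that all three conditions must hold at the same time. `TrainingThresholds` and `should_train` are hypothetical names; this is not Label Sleuth's actual training invocation code, which is described in the training invocation documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingThresholds:
    """Hypothetical container mirroring three parameters from the table above."""
    first_model_positive_threshold: int
    first_model_negative_threshold: int
    changed_element_threshold: int


def should_train(num_positive: int, num_negative: int,
                 changes_since_last_model: int,
                 thresholds: TrainingThresholds) -> bool:
    """Assumed rule: train only when all three thresholds are met simultaneously."""
    enough_positives = num_positive >= thresholds.first_model_positive_threshold
    enough_negatives = num_negative >= thresholds.first_model_negative_threshold
    # Counts both new labels and changed labels since the last trained model.
    enough_changes = changes_since_last_model >= thresholds.changed_element_threshold
    return enough_positives and enough_negatives and enough_changes
```

Under this reading, lowering `changed_element_threshold` makes retraining more frequent, while the two label thresholds keep a model from being trained before enough examples of each class exist.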

Label Sleuth is a modular system. We welcome the contribution of additional implementations for the various modules, aiming to support a wider range of user needs and to harness efficient and innovative machine learning algorithms.

Below are instructions for implementing new models and active learning strategies:

Implementing a new machine learning model

These are the steps for integrating a new classification model:

- Implement a new `ModelAPI`

  Machine learning models are integrated by adding a new implementation of the `ModelAPI`.

  The main functions are `_train()`, `load_model()` and `infer()`:

  `def _train(self, model_id: str, train_data: Sequence[Mapping], model_params: Mapping):`

  - `model_id`
  - `train_data` - a list of dictionaries with at least the "text" and "label" fields. Additional fields can be passed, e.g. `[{'text': 'text1', 'label': 1, 'additional_field': 'value1'}, {'text': 'text2', 'label': 0, 'additional_field': 'value2'}]`
  - `model_params` - a dictionary of additional model parameters (can be `None`)

  `def load_model(self, model_path: str):`

  - `model_path` - path to a directory containing all model components

  Returns an object that contains all the components that are necessary to perform inference (e.g., the trained model itself, the language recognized by the model, a trained vectorizer/tokenizer, etc.).

  `def infer(self, model_components, items_to_infer) -> Sequence[Prediction]:`

  - `model_components` - the return value of `load_model()`, i.e. an object containing all the components that are necessary to perform inference
  - `items_to_infer` - a list of dictionaries with at least the "text" field. Additional fields can be passed, e.g. `[{'text': 'text1', 'additional_field': 'value1'}, {'text': 'text2', 'additional_field': 'value2'}]`

  Returns a list of `Prediction` objects, one for each item in `items_to_infer`, where `Prediction.label` is a boolean and `Prediction.score` is a float in the range [0, 1]. Additional outputs can be passed by inheriting from the base `Prediction` class and overriding the `get_predictions_class()` method.

- Add the newly implemented `ModelAPI` to `ModelsCatalog`

- Add one or more policies that use the new model to `ModelPolicies`
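To make the three signatures concrete, here is a self-contained toy model that follows them. It is only a sketch: it does not subclass the real `ModelAPI` (so that it runs without Label Sleuth installed), `Prediction` is a local stand-in with just `label` and `score`, and the `models_dir` constructor argument, the `get_model_dir` helper, the `model.json` file layout and the `threshold` model parameter are all hypothetical. The "model" scores a text by the fraction of its words that appeared in positively labeled training examples and never in negatively labeled ones.

```python
import json
import os
from dataclasses import dataclass
from typing import Mapping, Optional, Sequence


@dataclass
class Prediction:
    """Local stand-in for Label Sleuth's Prediction (label: bool, score in [0, 1])."""
    label: bool
    score: float


class PositiveVocabularyModel:
    """Toy model following the _train / load_model / infer signatures (hypothetical, not a ModelAPI subclass)."""

    def __init__(self, models_dir: str):
        self.models_dir = models_dir  # hypothetical: where trained models are stored

    def get_model_dir(self, model_id: str) -> str:
        # Hypothetical helper: one directory per model id.
        return os.path.join(self.models_dir, model_id)

    def _train(self, model_id: str, train_data: Sequence[Mapping],
               model_params: Optional[Mapping]):
        positive_words, negative_words = set(), set()
        for item in train_data:
            words = set(item["text"].lower().split())
            (positive_words if item["label"] else negative_words).update(words)
        params = dict(model_params or {})  # model_params may be None
        components = {
            "vocabulary": sorted(positive_words - negative_words),
            "threshold": params.get("threshold", 0.5),
        }
        model_dir = self.get_model_dir(model_id)
        os.makedirs(model_dir, exist_ok=True)
        with open(os.path.join(model_dir, "model.json"), "w", encoding="utf-8") as f:
            json.dump(components, f)

    def load_model(self, model_path: str):
        # Returns everything infer() needs: the vocabulary and the decision threshold.
        with open(os.path.join(model_path, "model.json"), encoding="utf-8") as f:
            components = json.load(f)
        components["vocabulary"] = set(components["vocabulary"])
        return components

    def infer(self, model_components, items_to_infer) -> Sequence[Prediction]:
        predictions = []
        for item in items_to_infer:
            words = item["text"].lower().split()
            # Fraction of words in the positive-only vocabulary; always within [0, 1].
            score = (sum(w in model_components["vocabulary"] for w in words) / len(words)
                     if words else 0.0)
            predictions.append(Prediction(label=score >= model_components["threshold"], score=score))
        return predictions
```

In a real integration these methods would live in a `ModelAPI` subclass, which would then be registered in `ModelsCatalog` and referenced from a policy in `ModelPolicies`.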

Implementing a new active learning strategy

These are the steps for integrating a new active learning approach:

- Implement a new `ActiveLearner`

  Active learning modules are integrated by adding a new implementation of the `ActiveLearner` API. The function to implement is `get_per_element_score`:

      def get_per_element_score(self, candidate_text_elements: Sequence[TextElement],
                                candidate_text_element_predictions: Sequence[Prediction], workspace_id: str,
                                dataset_name: str, category_name: str) -> Sequence[float]:

  Given sequences of text elements and the model predictions for these elements, this function returns an active learning score for each element. The elements with the highest scores will be recommended for the user to label next.

- Add the newly implemented `ActiveLearner` to the `ActiveLearningCatalog`
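As an illustration of `get_per_element_score`, the sketch below implements uncertainty sampling, a common active learning heuristic that is not necessarily one of the strategies Label Sleuth ships: elements whose predicted score is closest to 0.5 receive the highest active learning score. `TextElement` and `Prediction` are minimal local stand-ins, and the class does not subclass the real `ActiveLearner`, so it runs without Label Sleuth installed; `UncertaintySamplingLearner` is a hypothetical name.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class TextElement:
    """Minimal stand-in; only the text is needed here."""
    text: str


@dataclass
class Prediction:
    """Minimal stand-in with a boolean label and a score in [0, 1]."""
    label: bool
    score: float


class UncertaintySamplingLearner:
    """Scores elements by how uncertain the current model is about them."""

    def get_per_element_score(self, candidate_text_elements: Sequence[TextElement],
                              candidate_text_element_predictions: Sequence[Prediction], workspace_id: str,
                              dataset_name: str, category_name: str) -> Sequence[float]:
        if len(candidate_text_elements) != len(candidate_text_element_predictions):
            raise ValueError("expected one prediction per candidate element")
        # 1.0 when the model score is 0.5 (most uncertain), 0.0 when it is 0 or 1.
        # workspace_id, dataset_name and category_name are unused by this strategy.
        return [1.0 - abs(2.0 * p.score - 1.0) for p in candidate_text_element_predictions]
```

Recommending the next elements to label then amounts to sorting the candidates by these scores in descending order.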

Eyal Shnarch, Alon Halfon, Ariel Gera, Marina Danilevsky, Yannis Katsis, Leshem Choshen, Martin Santillan Cooper, Dina Epelboim, Zheng Zhang, Dakuo Wang, Lucy Yip, Liat Ein-Dor, Lena Dankin, Ilya Shnayderman, Ranit Aharonov, Yunyao Li, Naftali Liberman, Philip Levin Slesarev, Gwilym Newton, Shila Ofek-Koifman, Noam Slonim and Yoav Katz (EMNLP 2022). Label Sleuth: From Unlabeled Text to a Classifier in a Few Hours.

Please cite:

    @inproceedings{shnarch2022labelsleuth,
      title={{L}abel {S}leuth: From Unlabeled Text to a Classifier in a Few Hours},
      author={Shnarch, Eyal and Halfon, Alon and Gera, Ariel and Danilevsky, Marina and Katsis, Yannis and Choshen, Leshem and Cooper, Martin Santillan and Epelboim, Dina and Zhang, Zheng and Wang, Dakuo and Yip, Lucy and Ein-Dor, Liat and Dankin, Lena and Shnayderman, Ilya and Aharonov, Ranit and Li, Yunyao and Liberman, Naftali and Slesarev, Philip Levin and Newton, Gwilym and Ofek-Koifman, Shila and Slonim, Noam and Katz, Yoav},
      booktitle={Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing ({EMNLP}): System Demonstrations},
      month={dec},
      year={2022},
      address={Abu Dhabi, UAE},
      publisher={Association for Computational Linguistics},
      url={https://aclanthology.org/2022.emnlp-demos.16},
      pages={159--168}
    }