Barebone Kafka with Spring Boot

Event streaming is the digital equivalent of the human body's central nervous system. Event streaming ensures a continuous flow and interpretation of data so that the right information is at the right place, at the right time.

Technically speaking, event streaming is the practice of:

- Capturing data in real time from event sources in the form of streams of events;

- Storing these event streams durably for later retrieval;

- Manipulating, processing, and reacting to the event streams in real time as well as retrospectively;

- Routing the event streams to different destination technologies as needed.

Kafka combines three key capabilities so that you can implement event streaming end-to-end with a single battle-tested solution:

- To publish (write) and subscribe to (read) streams of events, including continuous import/export of your data from other systems.

- To store streams of events durably and reliably for as long as you want.

- To process streams of events as they occur or retrospectively.

All of this functionality is provided in a distributed, highly scalable, elastic, fault-tolerant, and secure manner. Kafka can be deployed on bare-metal hardware, virtual machines, and containers, both on-premises and in the cloud.

Kafka is a distributed system consisting of servers and clients that communicate via a high-performance TCP network protocol.

- Servers:

  - Kafka runs as a cluster of one or more servers.

  - Some of these servers form the storage layer, called the brokers.

  - Other servers run Kafka Connect to continuously import and export data as event streams.

  - A Kafka cluster is highly scalable and fault-tolerant: if any of its servers fails, the other servers take over its work to ensure continuous operations without any data loss.

- Clients: these allow us to write "distributed applications and microservices that read, write, and process streams of events in parallel, at scale, and in a fault-tolerant manner", even in the case of network problems or machine failures.
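On the client side, the first thing an application needs is the address of at least one broker; the client discovers the rest of the cluster from there. Below is a minimal sketch of producer and consumer settings, built as plain `java.util.Properties` so it compiles without the Kafka jar. The property keys and serializer class names are standard Kafka client settings; the broker address `localhost:9092`, the group id `payments-service`, and the class name `ClientConfigSketch` are assumptions for illustration.

```java
import java.util.Properties;

/** Hypothetical helper that builds client settings as plain Properties. */
public final class ClientConfigSketch {

    // Assumed address of a local single-node broker (illustrative only).
    static final String BOOTSTRAP = "localhost:9092";

    static Properties producerConfig() {
        Properties p = new Properties();
        // Initial broker(s) the client contacts to discover the rest of the cluster.
        p.setProperty("bootstrap.servers", BOOTSTRAP);
        // Events travel as bytes over TCP, so keys and values need serializers.
        p.setProperty("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        p.setProperty("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        // Wait for all in-sync replicas to acknowledge each write.
        p.setProperty("acks", "all");
        return p;
    }

    static Properties consumerConfig(String groupId) {
        Properties p = new Properties();
        p.setProperty("bootstrap.servers", BOOTSTRAP);
        // The consumer turns the bytes back into objects.
        p.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        p.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        // Consumers sharing a group.id split a topic's partitions between them.
        p.setProperty("group.id", groupId);
        // Start from the oldest retained event when the group has no committed offset yet.
        p.setProperty("auto.offset.reset", "earliest");
        return p;
    }

    public static void main(String[] args) {
        producerConfig().forEach((k, v) -> System.out.println("producer " + k + "=" + v));
        consumerConfig("payments-service").forEach((k, v) -> System.out.println("consumer " + k + "=" + v));
    }
}
```

With `kafka-clients` on the classpath, these objects could be passed to `new KafkaProducer<String, String>(...)` and `new KafkaConsumer<String, String>(...)`, both of which accept a `Properties` instance.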

Apache Kafka is the de facto standard for event streaming to process data in motion. It is not, however:

- ❌ A proxy for millions of clients (like mobile apps) – but Kafka-native proxies (like REST or MQTT) exist for some use cases.

- ❌ An API Management platform – but these tools are usually complementary and used for the creation, life cycle management, or the monetization of Kafka APIs.

- ❌ A database for complex queries and batch analytics workloads – but good enough for transactional queries and relatively simple aggregations (especially with ksqlDB).

- ❌ An IoT platform with features such as device management – but direct Kafka-native integration with (some) IoT protocols such as MQTT or OPC-UA is possible and the appropriate approach for (some) use cases.

- ❌ A technology for hard real-time applications such as safety-critical or deterministic systems – but that’s true for any other IT framework, too. Embedded systems are a different kind of software!

✅ For these reasons, Kafka is complementary, not competitive, to these other technologies.

The blog post "When NOT to use Apache Kafka" explores these limitations in more detail.

Events, Producers, Consumers, Topics, Partitions, Replication

- An event records the fact that "something happened". It is also called a record or message in the documentation. When we read or write data to Kafka, we do this in the form of events.

- Conceptually, an event has a key, value, timestamp, and optional metadata headers.


Example Event

- Event key: "Alice"

- Event value: "Made a payment of $200 to Bob"

- Event timestamp: "Jun. 25, 2020 at 2:06 p.m."
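A sketch of that example event as a plain Java record, assuming Java 16+ for the `record` syntax. `EventSketch` and `Event` are hypothetical names; in the Kafka Java client the corresponding types are `ProducerRecord<K, V>` and `ConsumerRecord<K, V>`, which carry the timestamp as epoch milliseconds. The UTC time zone is also an assumption, since the example gives only a local time.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.ZonedDateTime;
import java.util.Map;

public final class EventSketch {

    /** Key, value, timestamp, plus optional metadata headers (may be empty). */
    record Event(String key, String value, Instant timestamp, Map<String, String> headers) {
        Event {
            // Defensive copy so the headers cannot change after the event is created.
            headers = Map.copyOf(headers);
        }
    }

    static Event examplePayment() {
        // Assumed UTC; the example only states "Jun. 25, 2020 at 2:06 p.m.".
        Instant ts = ZonedDateTime.of(2020, 6, 25, 14, 6, 0, 0, ZoneOffset.UTC).toInstant();
        return new Event("Alice", "Made a payment of $200 to Bob", ts, Map.of());
    }

    public static void main(String[] args) {
        System.out.println(examplePayment());
    }
}
```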

- Producers are those client applications that publish (write) events to Kafka.

- Consumers are those that subscribe to (read and process) these events.

- In Kafka, producers and consumers are fully decoupled and agnostic of each other, which is a key design element to achieve the high scalability that Kafka is known for.

- For example, producers never need to wait for consumers. Kafka provides various guarantees such as the ability to process events exactly-once.
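The decoupling can be illustrated with a toy append-only log standing in for a single partition: the producer appends and gets an offset back immediately, while each reader keeps its own position and consumes at its own pace. `DecouplingSketch`, `Log`, and `LogReader` are hypothetical names, and networking, persistence, and consumer groups are left out; this models the idea, not Kafka's implementation.

```java
import java.util.ArrayList;
import java.util.List;

public final class DecouplingSketch {

    /** Append-only log standing in for one topic partition. */
    static final class Log {
        private final List<String> events = new ArrayList<>();

        /** Producer side: append and return the new offset right away; no reader is involved. */
        synchronized long append(String event) {
            events.add(event);
            return events.size() - 1;
        }

        /** Return up to max events starting at fromOffset; never removes anything. */
        synchronized List<String> read(long fromOffset, int max) {
            int from = (int) Math.min(fromOffset, events.size());
            int to = Math.min(from + max, events.size());
            return new ArrayList<>(events.subList(from, to));
        }
    }

    /** Each reader tracks its own position, so readers never affect each other or the producer. */
    static final class LogReader {
        private final Log log;
        private long position = 0;

        LogReader(Log log) {
            this.log = log;
        }

        List<String> poll(int max) {
            List<String> batch = log.read(position, max);
            position += batch.size();
            return batch;
        }

        long position() {
            return position;
        }
    }

    public static void main(String[] args) {
        Log payments = new Log();
        payments.append("Alice paid Bob $200");
        payments.append("Carol paid Dan $50");

        LogReader fast = new LogReader(payments);
        LogReader slow = new LogReader(payments);
        System.out.println(fast.poll(10)); // both events
        System.out.println(slow.poll(1));  // only the first; its position is independent of fast
    }
}
```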

- Events are organized and durably stored in topics.

- In very simplified terms, a topic is similar to a folder in a filesystem, and the events are the files in that folder.

- An example topic name could be "payments".

- Topics in Kafka are always multi-producer and multi-subscriber: a topic can have zero, one, or many producers that write events to it, as well as zero, one, or many consumers that subscribe to these events.

- Events in a topic can be read as often as needed; unlike in traditional messaging systems, events are not deleted after consumption. Instead, we can define how long Kafka should retain our events through a per-topic configuration setting, after which old events will be discarded.

- Kafka's performance is effectively constant with respect to data size, so storing data for a long time is perfectly fine.
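Retention is a time limit on the log, not a side effect of reading. Below is a toy model loosely mirroring the per-topic `retention.ms` setting: reading leaves events in place, and a cleanup step drops events older than the retention window whether or not anyone has read them. `RetentionSketch` and its members are hypothetical; real Kafka deletes whole log segments rather than single events, so data can outlive the configured limit somewhat.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

public final class RetentionSketch {

    record StoredEvent(long offset, long timestampMs, String value) {}

    /** Toy topic with a per-topic retention time. Assumes appends arrive in time order. */
    static final class Topic {
        private final long retentionMs;
        private final Deque<StoredEvent> log = new ArrayDeque<>();
        private long nextOffset = 0;

        Topic(long retentionMs) {
            this.retentionMs = retentionMs;
        }

        long append(String value, long nowMs) {
            log.addLast(new StoredEvent(nextOffset, nowMs, value));
            return nextOffset++;
        }

        /** Reading has no side effects: the same events can be read again and again. */
        List<StoredEvent> readAll() {
            return new ArrayList<>(log);
        }

        /** Drop events older than the retention window; offsets of survivors are unchanged. */
        void enforceRetention(long nowMs) {
            while (!log.isEmpty() && nowMs - log.peekFirst().timestampMs() > retentionMs) {
                log.removeFirst();
            }
        }
    }

    public static void main(String[] args) {
        long day = 24L * 60 * 60 * 1000;
        Topic payments = new Topic(7 * day); // assumed 7-day retention
        payments.append("old payment", 0);
        payments.append("new payment", 6 * day);

        System.out.println(payments.readAll().size()); // 2
        System.out.println(payments.readAll().size()); // still 2: reading deletes nothing
        payments.enforceRetention(8 * day);
        System.out.println(payments.readAll().size()); // 1: only "new payment" remains
    }
}
```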

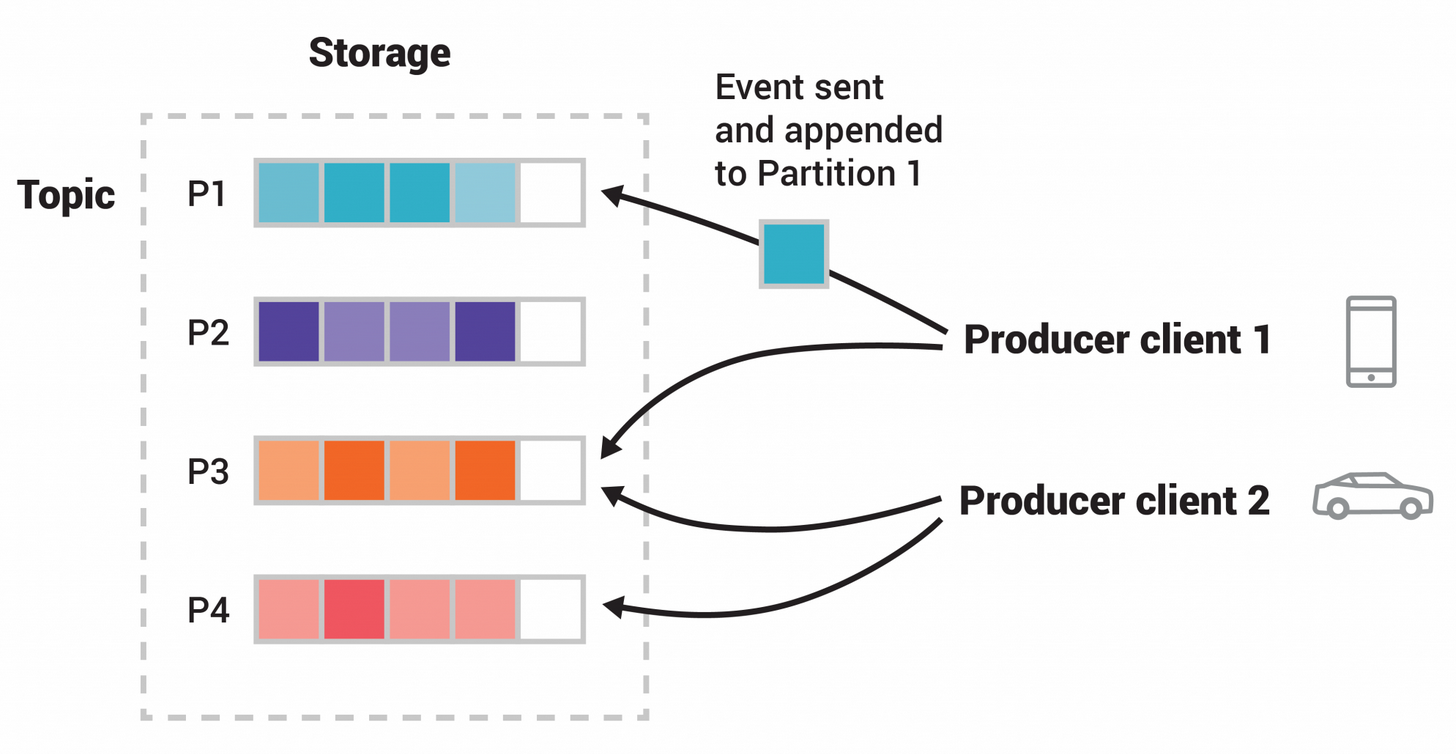

- Topics are partitioned, meaning a topic is spread over a number of "buckets" located on different Kafka brokers.

- This distributed placement of the data is vital for scalability because it allows client applications to both read and write the data from/to many brokers at the same time.

- When a new event is published to a topic, it is actually appended to one of the topic's partitions. Events with the same event key (e.g., a customer or vehicle ID) are written to the same partition, and Kafka guarantees that any consumer of a given topic-partition will always read that partition's events in exactly the same order as they were written.
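Key-based partition assignment boils down to "hash the key, then take it modulo the partition count". This sketch swaps in `String.hashCode()` for simplicity; Kafka's default partitioner instead applies murmur2 to the serialized key bytes, so the partition numbers here will not match a real cluster. `PartitioningSketch`, `partitionFor`, and `route` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

public final class PartitioningSketch {

    /** Same key and same partition count always give the same partition. */
    static int partitionFor(String key, int numPartitions) {
        // Stand-in hash; masking the sign bit keeps the result non-negative.
        return (key.hashCode() & 0x7fffffff) % numPartitions;
    }

    /** Append each {key, value} write to its partition; each partition list keeps write order. */
    static List<List<String>> route(List<String[]> writes, int numPartitions) {
        List<List<String>> partitions = new ArrayList<>();
        for (int i = 0; i < numPartitions; i++) {
            partitions.add(new ArrayList<>());
        }
        for (String[] w : writes) {
            partitions.get(partitionFor(w[0], numPartitions)).add(w[0] + ": " + w[1]);
        }
        return partitions;
    }

    public static void main(String[] args) {
        List<String[]> writes = List.of(
            new String[] {"customer-1", "order created"},
            new String[] {"customer-2", "order created"},
            new String[] {"customer-1", "order paid"},
            new String[] {"customer-1", "order shipped"});
        List<List<String>> partitions = route(writes, 4);
        for (int p = 0; p < partitions.size(); p++) {
            System.out.println("P" + p + " " + partitions.get(p));
        }
    }
}
```

Because the mapping depends on the partition count, adding partitions later can send an existing key to a different partition, which is one reason partition counts are usually chosen up front.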

- In the figure above, the example topic has four partitions, P1–P4.

- Two different producer clients are publishing new events to the topic independently of each other, by writing events over the network to the topic's partitions.

- Events with the same key (denoted by their color in the figure) are written to the same partition.

- Note that both producers can write to the same partition if appropriate.
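Two independent producers writing to the same partition can be sketched with two threads appending to one shared log. Appends are serialized, so the interleaving between producers may differ from run to run, but each producer's own events keep their relative order. `TwoProducersSketch` and `Partition` are hypothetical names; networking, batching, and retries are ignored, and in real Kafka per-producer ordering under retries depends on producer settings such as idempotence.

```java
import java.util.ArrayList;
import java.util.List;

public final class TwoProducersSketch {

    /** One partition: appends are serialized, so each write lands at a unique position. */
    static final class Partition {
        private final List<String> log = new ArrayList<>();

        synchronized void append(String event) {
            log.add(event);
        }

        synchronized List<String> snapshot() {
            return new ArrayList<>(log);
        }
    }

    /** Run two producer threads, each writing n events to the same partition. */
    static List<String> run(int n) {
        Partition partition = new Partition();
        Thread p1 = new Thread(() -> {
            for (int i = 0; i < n; i++) partition.append("producer-1:" + i);
        });
        Thread p2 = new Thread(() -> {
            for (int i = 0; i < n; i++) partition.append("producer-2:" + i);
        });
        p1.start();
        p2.start();
        try {
            p1.join();
            p2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return partition.snapshot();
    }

    public static void main(String[] args) {
        // The interleaving varies between runs; each producer's own order does not.
        System.out.println(run(5));
    }
}
```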

To make our data fault-tolerant and highly available, every topic can be replicated, even across geo-regions or datacenters, so that there are always multiple brokers that have a copy of the data in case things go wrong, maintenance is required on the brokers, and so on.

- A common production setting is a replication factor of 3, i.e., there will always be three copies of your data. This replication is performed at the level of topic-partitions.
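How a replication factor of 3 turns into copies on distinct brokers can be sketched with a simple round-robin placement: replica `r` of partition `p` goes to broker `(p + r) % numBrokers`. This is a simplification; Kafka's own assignment algorithm is more involved (for example, it can be rack-aware). `ReplicaPlacementSketch` and `assign` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

public final class ReplicaPlacementSketch {

    /** Returns, for each partition, the list of broker ids holding a copy of it. */
    static List<List<Integer>> assign(int numPartitions, int replicationFactor, int numBrokers) {
        if (replicationFactor > numBrokers) {
            // Every copy must live on a different broker, so RF cannot exceed the broker count.
            throw new IllegalArgumentException("replication factor " + replicationFactor
                + " exceeds broker count " + numBrokers);
        }
        List<List<Integer>> assignment = new ArrayList<>();
        for (int p = 0; p < numPartitions; p++) {
            List<Integer> replicas = new ArrayList<>();
            for (int r = 0; r < replicationFactor; r++) {
                // r < replicationFactor <= numBrokers, so these ids are all distinct.
                replicas.add((p + r) % numBrokers);
            }
            assignment.add(replicas);
        }
        return assignment;
    }

    public static void main(String[] args) {
        // Four partitions, replication factor 3, five brokers.
        List<List<Integer>> a = assign(4, 3, 5);
        for (int p = 0; p < a.size(); p++) {
            System.out.println("P" + p + " -> brokers " + a.get(p));
        }
    }
}
```

Losing any single broker still leaves at least two copies of every partition in this layout.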

Kafka has five core APIs for Java and Scala:

- Admin API: to manage and inspect topics, brokers, and other Kafka objects.

- Producer API: to publish (write) a stream of events to one or more Kafka topics.

- Consumer API: to subscribe to (read) one or more topics and to process the stream of events produced to them.

- Kafka Streams API: to implement stream processing applications and microservices. It provides higher-level functions to process event streams, including transformations, stateful operations like aggregations and joins, windowing, processing based on event time, and more. Input is read from one or more topics in order to generate output to one or more topics, effectively transforming the input streams to output streams.

- Kafka Connect API: to build and run reusable data import/export connectors that consume (read) or produce (write) streams of events from and to external systems and applications so they can integrate with Kafka. For example, a connector to a relational database like PostgreSQL might capture every change to a set of tables. However, in practice, you typically don't need to implement your own connectors because the Kafka community already provides hundreds of ready-to-use connectors.
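The kind of stateful operation the Kafka Streams API provides, such as a running count per key, can be sketched in plain Java: each input event updates a local state map and emits the new count as an output event. This is a conceptual stand-in, not the Kafka Streams API; there, a comparable result would come from something like `builder.stream("payments").groupByKey().count()`, with state kept in fault-tolerant state stores. `StreamProcessingSketch`, `Event`, and `countByKey` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public final class StreamProcessingSketch {

    record Event(String key, String value) {}

    /**
     * Stateful transformation: for each input event, increment the count for its key
     * and emit the updated (key, count) pair to the output stream.
     */
    static List<Event> countByKey(List<Event> input) {
        Map<String, Long> state = new HashMap<>(); // stands in for a local state store
        List<Event> output = new ArrayList<>();
        for (Event event : input) {
            long updated = state.merge(event.key(), 1L, Long::sum);
            output.add(new Event(event.key(), Long.toString(updated)));
        }
        return output;
    }

    public static void main(String[] args) {
        List<Event> payments = List.of(
            new Event("Alice", "paid Bob $200"),
            new Event("Carol", "paid Dan $50"),
            new Event("Alice", "paid Erin $10"));
        // Prints Alice=1, Carol=1, Alice=2: one output event per input event.
        for (Event e : countByKey(payments)) {
            System.out.println(e.key() + "=" + e.value());
        }
    }
}
```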

The Admin, Producer, and Consumer APIs all ship in the `kafka-clients` artifact, while Kafka Streams needs `kafka-streams` as well:

```xml
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-clients</artifactId>
    <version>3.2.0</version>
</dependency>

<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-streams</artifactId>
    <version>3.2.0</version>
</dependency>
```