| page_type | name | description | languages | products |
|---|---|---|---|---|
| sample | OpenXR Mixed Reality samples for Unity | These sample projects showcase how to build Unity applications for HoloLens 2 or Mixed Reality headsets using the Mixed Reality OpenXR plugin. | | |

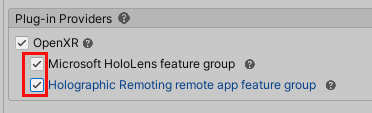

These sample projects showcase how to build Unity applications for HoloLens 2 or Mixed Reality headsets using the Mixed Reality OpenXR plugin. For details on installing the related tools and setting up a Unity project, see the plugin documentation on https://docs.microsoft.com/. For details on using the Mixed Reality OpenXR plugin API, see the API documentation on https://docs.microsoft.com/.

⚠️ NOTE: This repository uses Git Large File Storage (Git LFS) to store large files, such as Unity packages and images. Install the latest git-lfs before cloning this repo.
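If git-lfs was not installed when the repo was cloned, large files such as the Unity packages are checked out as small text pointer files instead of their real contents. The following minimal Python sketch, assuming the standard Git LFS pointer format (whose first line is `version https://git-lfs.github.com/spec/v1`), walks a clone and lists files that are still pointers. The function names and the 1024-byte size cutoff are illustrative choices, not part of this repository.

```python
"""List Git LFS pointer files left behind by a clone made without git-lfs.

Sketch only: assumes the standard Git LFS pointer format. The helper names
and the size cutoff are hypothetical, not part of this repository.
"""
import os
import sys
from typing import List

# Every Git LFS pointer file starts with this "version" line.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/"
# Pointer files are tiny text stubs, so anything larger is treated as real content.
MAX_POINTER_SIZE = 1024


def is_lfs_pointer(path: str) -> bool:
    """Return True if the file at `path` looks like an un-fetched LFS pointer."""
    try:
        if os.path.getsize(path) > MAX_POINTER_SIZE:
            return False
        with open(path, "rb") as f:
            return f.read(len(LFS_POINTER_PREFIX)) == LFS_POINTER_PREFIX
    except OSError:
        # Unreadable files (broken symlinks, permission errors) are skipped.
        return False


def find_lfs_pointers(root: str) -> List[str]:
    """Walk `root`, skipping the .git directory, and collect pointer files."""
    pointers = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune .git in place so os.walk does not descend into it.
        dirnames[:] = [d for d in dirnames if d != ".git"]
        for name in filenames:
            full_path = os.path.join(dirpath, name)
            if is_lfs_pointer(full_path):
                pointers.append(full_path)
    return sorted(pointers)


if __name__ == "__main__":
    scan_root = sys.argv[1] if len(sys.argv) > 1 else "."
    found = find_lfs_pointers(scan_root)
    for pointer in found:
        print(pointer)
    if found:
        print(f"{len(found)} LFS pointer file(s) found.")
```

Running it from the root of a clone prints any leftover pointer files. If it finds some, running `git lfs install` and then `git lfs pull` inside the clone should replace them with their real contents.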

We recommend running these samples on HoloLens 2 with the following versions:

- Latest Visual Studio 2022 or 2019

- Latest Unity 2020.3 LTS. Double-check Unity's known blocking bugs for HoloLens 2.

- Latest Unity OpenXR plugin, recommended 1.3.1 or newer.

- Latest Mixed Reality OpenXR plugin, recommended 1.4.0 or newer. Check the latest release notes.

- Latest MRTK-Unity, recommended 2.7.3 or newer.

- Latest Windows Mixed Reality Runtime, recommended 109 or newer.
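The package minimums above can be checked against a Unity project's Packages/manifest.json, whose "dependencies" object maps package IDs to versions. The following is a minimal Python sketch; the mapping from each plugin to a package ID (for example com.microsoft.mixedreality.openxr) is an assumption, so verify the IDs against your own manifest. It compares only plain X.Y.Z versions and reports anything else, such as a file: reference to a local .tgz package, as unparsed. Visual Studio, the Unity editor, and the Windows Mixed Reality Runtime are not recorded in the manifest, so they are not checked.

```python
"""Compare a Unity manifest's package versions against recommended minimums.

Sketch only: the package IDs in RECOMMENDED_MINIMUMS are assumptions about
how each plugin is registered; adjust them to match your project.
"""
import re
from typing import Dict, Optional, Tuple

# Recommended minimums from the README, keyed by (assumed) package ID.
RECOMMENDED_MINIMUMS: Dict[str, Tuple[int, ...]] = {
    "com.unity.xr.openxr": (1, 3, 1),                            # Unity OpenXR plugin
    "com.microsoft.mixedreality.openxr": (1, 4, 0),              # Mixed Reality OpenXR plugin
    "com.microsoft.mixedreality.toolkit.foundation": (2, 7, 3),  # MRTK-Unity
}

# Matches the leading "X.Y.Z" of strings like "1.4.0" or "1.4.0-preview.2".
_VERSION_RE = re.compile(r"^(\d+)\.(\d+)\.(\d+)")


def parse_version(text: str) -> Optional[Tuple[int, ...]]:
    """Return the leading (X, Y, Z) of a version string, or None if absent."""
    match = _VERSION_RE.match(text)
    return tuple(int(part) for part in match.groups()) if match else None


def check_manifest(manifest: dict) -> Dict[str, str]:
    """Return "ok", "too old", "missing", or "unparsed" for each recommended package."""
    dependencies = manifest.get("dependencies", {})
    report = {}
    for package, minimum in RECOMMENDED_MINIMUMS.items():
        if package not in dependencies:
            report[package] = "missing"
            continue
        version = parse_version(dependencies[package])
        if version is None:
            report[package] = "unparsed"
        elif version < minimum:  # tuples compare component by component
            report[package] = "too old"
        else:
            report[package] = "ok"
    return report
```

For example, passing the result of `json.load` on a project's Packages/manifest.json to `check_manifest` returns one status string per recommended package. Comparing integer tuples rather than raw strings avoids ordering mistakes such as "1.10.0" sorting before "1.4.0".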

For anchors, see the details in the Anchor Sample Scene.

- FeatureUsageHandJointsManager.cs in the HandTracking scene demonstrates using Unity Feature Usages to obtain hand joint data.

- OpenXRExtensionHandJointsManager.cs in the HandTracking scene demonstrates using the Mixed Reality OpenXR extension APIs to obtain hand joint data.

- HandMesh.cs in the HandTracking scene demonstrates how to use hand meshes.

FollowEyeGaze.cs in the Interaction scene demonstrates using Unity Feature Usages to obtain eye tracking data.

LocatableCamera.cs in the LocatableCamera scene demonstrates how to set up and use the locatable camera.

The ARAnchor, ARRaycast, ARPlane, and ARMesh scenes are all implemented with ARFoundation, which in this project is backed by the OpenXR plugin on HoloLens 2. Among other things, they show how to:

- Find planes using ARPlaneManager

- Place holograms using ARRaycastManager

- Display meshes using ARMeshManager

SpatialAnchorsSample.cs in the Azure Spatial Anchors sample project demonstrates saving and locating spatial anchors. For more information on setting up the Azure Spatial Anchors project, see the readme in the project's folder.

AppRemotingSample.cs in the Main Menu scene demonstrates app remoting. For more information on setting up the Basic Sample project for App Remoting, see the readme in the project's folder.

This project uses GitHub Issues to track bugs and feature requests, and for help and questions about using the samples. Please search the existing issues before filing a new one to avoid duplicates.

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information, see the Code of Conduct FAQ or contact [email protected] with any additional questions or comments.

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos is subject to those third parties' policies.