Arcus is a memcached-based cache cloud developed by NAVER Corp. arcus-memcached has been heavily modified to meet the functional and performance requirements of NAVER services. In addition to the basic key-value data model of memcached, Arcus supports collection data structures (List, Set, Map, B+tree) for storing and retrieving multiple values in a structured form.

Arcus manages multiple clusters of memcached nodes using ZooKeeper. Each cluster, or cloud, is identified by its service code. Think of the service code as the cloud's name. The user may add or remove memcached nodes and clouds on the fly. Arcus also detects failed nodes and removes them automatically.

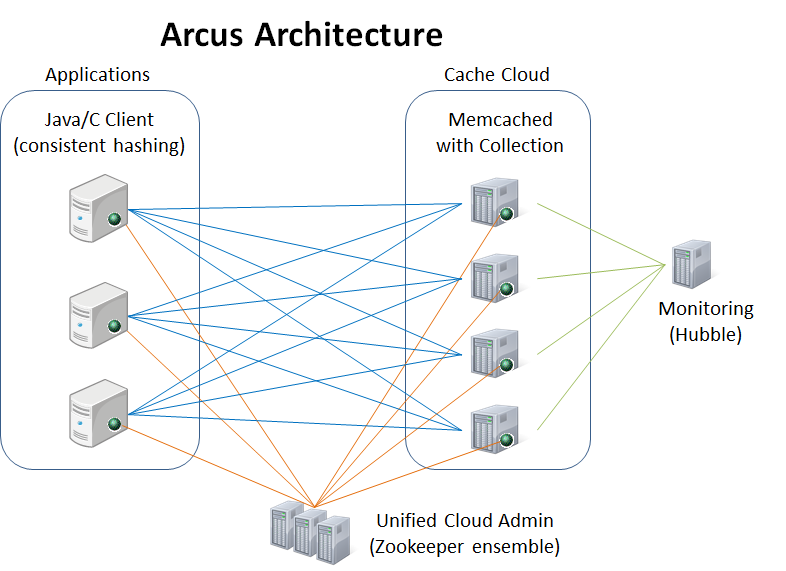

The figure above shows the overall architecture. Each memcached node is identified by its name (IP address:port number). ZooKeeper maintains a database of memcached node names and the service code (cloud) that each node belongs to. ZooKeeper also maintains a list of alive nodes in each cloud (the cache list).
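The two pieces of state that ZooKeeper keeps can be pictured with a small in-memory model. This is only an illustrative sketch: the class `CloudRegistry`, its method names, and the service code `test` are hypothetical, and it does not reflect how Arcus actually lays out its data in ZooKeeper.

```python
from collections import defaultdict


class CloudRegistry:
    """Hypothetical in-memory stand-in for the state described above."""

    def __init__(self):
        # Node name ("ip:port") -> service code of the cloud it belongs to.
        self.node_to_service = {}
        # Service code -> set of node names currently alive (the cache list).
        self.cache_list = defaultdict(set)

    def register(self, node, service_code):
        """Record which cloud a node belongs to."""
        self.node_to_service[node] = service_code

    def mark_alive(self, node):
        """Add a registered node to its cloud's cache list."""
        service_code = self.node_to_service[node]  # KeyError if unregistered
        self.cache_list[service_code].add(node)
        return service_code

    def mark_failed(self, node):
        """Drop a node from its cloud's cache list."""
        service_code = self.node_to_service[node]
        self.cache_list[service_code].discard(node)

    def alive_nodes(self, service_code):
        return sorted(self.cache_list[service_code])


registry = CloudRegistry()
registry.register("127.0.0.1:11211", "test")
registry.register("127.0.0.1:11212", "test")
registry.mark_alive("127.0.0.1:11211")
registry.mark_alive("127.0.0.1:11212")
registry.mark_failed("127.0.0.1:11212")
print(registry.alive_nodes("test"))  # ['127.0.0.1:11211']
```

Keeping the membership mapping separate from the cache list means a failed node stays registered to its cloud and can rejoin the cache list later without being registered again.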

Upon startup, each memcached node contacts ZooKeeper and finds the service code that it belongs to. The node then inserts its name into the cache list so that Arcus clients can see it. ZooKeeper periodically checks whether each cache node is alive, removes failed nodes from the cache cloud, and notifies cache clients of the updated cache list. With the latest cache list, Arcus clients use consistent hashing to find the cache node for each key-value operation. Hubble collects and shows the statistics of the cache cloud.
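To make the client-side lookup concrete, here is a minimal sketch of consistent hashing over a cache list. It is a generic hash ring, not the code used by Arcus clients: the class name `HashRing`, the MD5 hash, and the 160 points per node are all assumptions chosen for illustration.

```python
import bisect
import hashlib


class HashRing:
    """Generic consistent-hash ring over node names ("ip:port")."""

    def __init__(self, nodes, points_per_node=160):
        self.points_per_node = points_per_node
        self._ring = []  # sorted list of (hash point, node name)
        for node in nodes:
            self.add(node)

    @staticmethod
    def _hash(value):
        # Use the first 4 bytes of the MD5 digest as a 32-bit ring position.
        digest = hashlib.md5(value.encode("utf-8")).digest()
        return int.from_bytes(digest[:4], "big")

    def add(self, node):
        # Place several points per node so keys spread more evenly.
        for i in range(self.points_per_node):
            bisect.insort(self._ring, (self._hash(f"{node}-{i}"), node))

    def remove(self, node):
        self._ring = [point for point in self._ring if point[1] != node]

    def node_for(self, key):
        if not self._ring:
            raise LookupError("cache list is empty")
        # First point at or after the key's hash, wrapping around the ring.
        idx = bisect.bisect_left(self._ring, (self._hash(key), ""))
        return self._ring[idx % len(self._ring)][1]


ring = HashRing(["127.0.0.1:11211", "127.0.0.1:11212"])
print(ring.node_for("user:42"))
ring.remove("127.0.0.1:11212")  # e.g. after a cache list update
print(ring.node_for("user:42"))  # now always 127.0.0.1:11211
```

The property that matters here is that when a node leaves the cache list, only the keys that mapped to that node move to another node; keys on the surviving nodes keep their placement.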

Currently, Arcus only supports 64-bit Linux. It has been tested on the following OS platforms.

- CentOS 6.x, 7.x 64-bit

- Ubuntu 12.04, 14.04, 16.04, 18.04 LTS 64-bit

If you are interested in supporting other OS platforms, please try building and running Arcus on them, and let us know of any issues.

Arcus setup usually follows the three steps below.

- Preparation - clone and build the Arcus code, and deploy the Arcus code/binary package.

- ZooKeeper setup - initialize the ZooKeeper ensemble for Arcus and start the ZooKeeper processes.

- Memcached setup - register cache cloud information in ZooKeeper and start the cache nodes.

To quickly set up and test an Arcus cloud on the local machine, run the commands below. They build memcached, set up a cloud of two memcached nodes in ZooKeeper, and start them, all on the local machine. The commands assume a RedHat/CentOS environment. If the build fails, please refer to the Build FAQ.

# Requirements: JDK & Ant (java >= 1.8)

# Install dependencies (Python 2, version 2.6 or higher)

sudo yum install gcc gcc-c++ autoconf automake libtool pkgconfig cppunit-devel python-setuptools python-devel python-pip nc  # CentOS

sudo apt-get install build-essential autoconf automake libtool libcppunit-dev python-setuptools python-dev python-pip netcat  # Ubuntu

# Clone & Build

git clone https://github.com/naver/arcus.git

cd arcus/scripts

./build.sh

# Set up a local cache cloud with a conf file (run as a non-root user)

./arcus.sh quicksetup conf/local.sample.json

# Test

echo "stats" | nc localhost 11211 | grep version

STAT version 1.7.0

echo "stats" | nc localhost 11212 | grep version

STAT version 1.7.0
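The same check can be scripted. The sketch below sends `stats` to each local node and reads the `version` entry from the reply; it assumes the two nodes from the quick setup are listening on ports 11211 and 11212, and that the reply consists of `STAT <name> <value>` lines terminated by `END`, as in the standard memcached text protocol. The helper names `parse_stats` and `fetch_stats` are made up for this example.

```python
import socket


def parse_stats(response):
    """Turn 'STAT <name> <value>' lines into a dict, stopping at 'END'."""
    stats = {}
    for line in response.splitlines():
        if line == "END":
            break
        parts = line.split(" ", 2)
        if len(parts) == 3 and parts[0] == "STAT":
            stats[parts[1]] = parts[2]
    return stats


def fetch_stats(host, port, timeout=2.0):
    """Send 'stats' to one cache node and return the parsed reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(b"stats\r\n")
        data = b""
        # Read until the terminating END line or until the peer closes.
        while not data.endswith(b"END\r\n"):
            chunk = sock.recv(4096)
            if not chunk:
                break
            data += chunk
    return parse_stats(data.decode("utf-8", errors="replace"))


if __name__ == "__main__":
    for port in (11211, 11212):
        try:
            print(port, fetch_stats("localhost", port).get("version"))
        except OSError as exc:
            print(port, "unreachable:", exc)
```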

To set up Arcus cache clouds on multiple machines, you need the following two things.

- Arcus cloud configuration file: Arcus cache cloud settings in JSON format.

- Arcus cloud admin script (arcus.sh): A tool to control the Arcus cache cloud.

Please see Arcus cache cloud setup in multiple servers for more details.

Once you finish setting up an Arcus cache cloud on multiple machines, you can quickly test Arcus on the command line using telnet and ASCII commands. See Arcus telnet interface. Details on Arcus ASCII commands are in the Arcus ASCII protocol document.
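As a rough picture of what is typed in such a telnet session, the sketch below builds and parses basic key-value commands. It assumes the standard memcached text protocol for `set` and `get`, which matches the basic key-value model mentioned earlier; Arcus-specific collection commands are described in the ASCII protocol document and are not shown. The helper names are hypothetical.

```python
def build_set(key, value, flags=0, exptime=0):
    """Build 'set <key> <flags> <exptime> <bytes>' followed by the data block."""
    data = value.encode("utf-8")
    header = f"set {key} {flags} {exptime} {len(data)}\r\n".encode("ascii")
    return header + data + b"\r\n"


def build_get(key):
    """Build a single-key 'get' request."""
    return f"get {key}\r\n".encode("ascii")


def parse_get(response):
    """Return the value from a single-key get reply, or None on a miss."""
    head, sep, rest = response.partition(b"\r\n")
    if not sep or not head.startswith(b"VALUE "):
        return None  # a miss is just b"END\r\n"
    length = int(head.split()[3])  # VALUE <key> <flags> <bytes>
    return rest[:length].decode("utf-8")


print(build_set("greeting", "hello"))
print(build_get("greeting"))
print(parse_get(b"VALUE greeting 0 5\r\nhello\r\nEND\r\n"))
```

Each request line ends with `\r\n`, and the byte count in the `set` header must match the length of the data block that follows it.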

To develop Arcus application programs, please take a look at Arcus clients. Arcus currently supports Java and C/C++ clients. Each module includes a short tutorial where you can build and test "hello world" programs.

- How To Install Dependencies for beginners

- Build FAQ for troubleshooting build

- Arcus Directory Structure after building

- Arcus Cloud Configuration File

- Arcus Admin Script Usage

- Arcus Cache Cloud Setup in Multiple Servers