This is an example chat app intended to get you started with your first OpenAI API project. It uses the Chat Completions API to create a simple general-purpose chat app with streaming.

To send your first API request with the OpenAI Node SDK, make sure the `openai` package is installed, then run the following code:

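    import OpenAI from "openai";

    // The client reads the API key from the OPENAI_API_KEY environment variable by default.
    const openai = new OpenAI();

    async function main() {
      // Request a single, non-streamed completion.
      const completion = await openai.chat.completions.create({
        messages: [{ role: "system", content: "You are a helpful assistant." }],
        model: "gpt-3.5-turbo",
      });

      // Print the first choice, which contains the assistant's message.
      console.log(completion.choices[0]);
    }

    main();

This quickstart app builds on top of the example code above, with streaming and a UI to visualize messages.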
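To illustrate what streaming adds to the request above, here is a minimal sketch. It is not the app's actual code: `streamReply` and `onToken` are hypothetical names, and `client` is assumed to be an OpenAI SDK instance such as the `openai` object created earlier. With `stream: true`, the SDK returns an async iterable of chunks, each carrying a partial `delta` of the assistant's message.

```javascript
// Minimal sketch: stream a chat completion and accumulate the reply text.
// `streamReply` and `onToken` are hypothetical names used for illustration only.
// `client` is assumed to be an OpenAI SDK instance, e.g. `new OpenAI()`.
async function streamReply(client, messages, onToken = () => {}) {
  const stream = await client.chat.completions.create({
    model: "gpt-3.5-turbo",
    messages,
    stream: true, // ask for incremental chunks instead of one final response
  });

  let fullText = "";
  for await (const chunk of stream) {
    // Each chunk carries a partial "delta"; its content may be absent
    // (for example in the first, role-only chunk or the final chunk).
    const token = chunk.choices[0]?.delta?.content ?? "";
    if (token) {
      fullText += token;
      onToken(token); // e.g. append the token to the UI as it arrives
    }
  }
  return fullText;
}
```

A call might look like `await streamReply(openai, [{ role: "user", content: "Hello" }], (t) => process.stdout.write(t))`. Passing the client in as a parameter keeps the helper easy to exercise with a stand-in object that yields canned chunks.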

- If you don’t have Node.js installed, install it from nodejs.org (Node.js version >= 16.0.0 is required).

- Clone this repository.

- Navigate into the project directory:

      $ cd openai-quickstart-node

- Install the requirements:

      $ npm install

- Make a copy of the example environment variables file.

  On Linux or macOS:

      $ cp .env.example .env

  On Windows:

      $ copy .env.example .env

- Add your API key to the newly created `.env` file.

- Run the app:

      $ npm run dev
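If the app fails to start or requests are rejected, the two setup requirements above (Node.js version and API key) are worth checking first. The following is a hypothetical pre-flight check, not part of the repository. It assumes the key is stored under `OPENAI_API_KEY`, the variable the OpenAI SDK reads by default; the name used in `.env.example` may differ.

```javascript
// Hypothetical pre-flight check; `checkSetup` is not part of the quickstart repo.
// Assumption: the API key lives in OPENAI_API_KEY (the OpenAI SDK's default variable).
function checkSetup(env = process.env, nodeVersion = process.versions.node) {
  const problems = [];

  // The quickstart requires Node.js >= 16.0.0; comparing the major version is enough.
  const major = Number.parseInt(nodeVersion.split(".")[0], 10);
  if (!(major >= 16)) {
    problems.push(`Node.js ${nodeVersion} found, but >= 16.0.0 is required`);
  }

  // An empty or whitespace-only value counts as missing.
  const key = (env.OPENAI_API_KEY ?? "").trim();
  if (key === "") {
    problems.push("OPENAI_API_KEY is not set; add it to your .env file");
  }

  return problems;
}

// Report any problems for the current process.
for (const problem of checkSetup()) {
  console.warn(problem);
}
```

Note that a standalone script only sees values from `.env` if something loads that file into the environment, so run a check like this from a context where the app's environment variables are already available.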

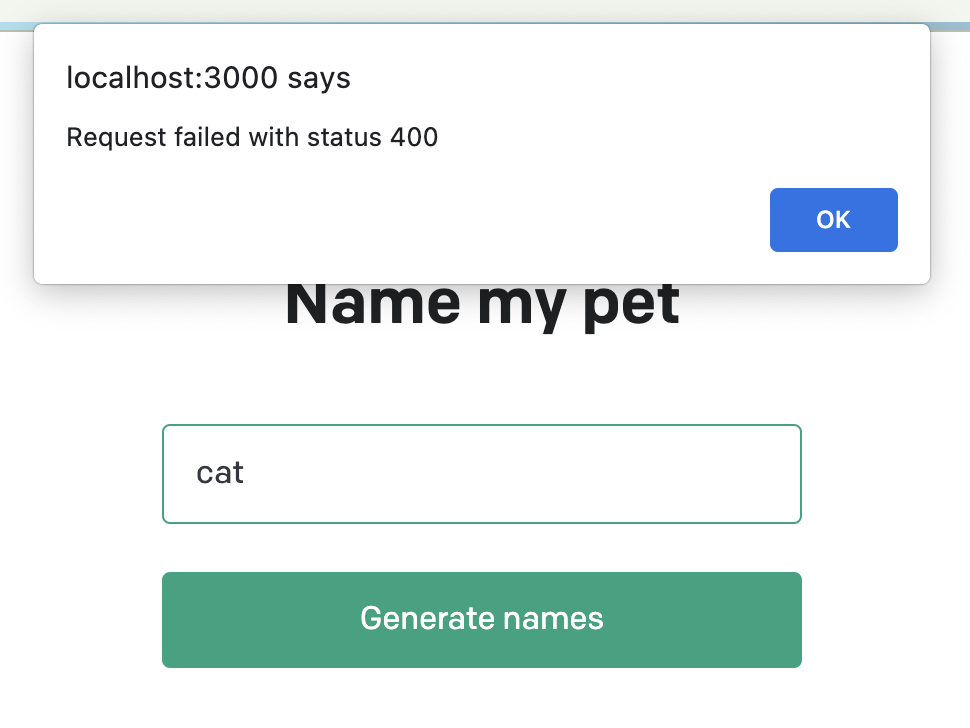

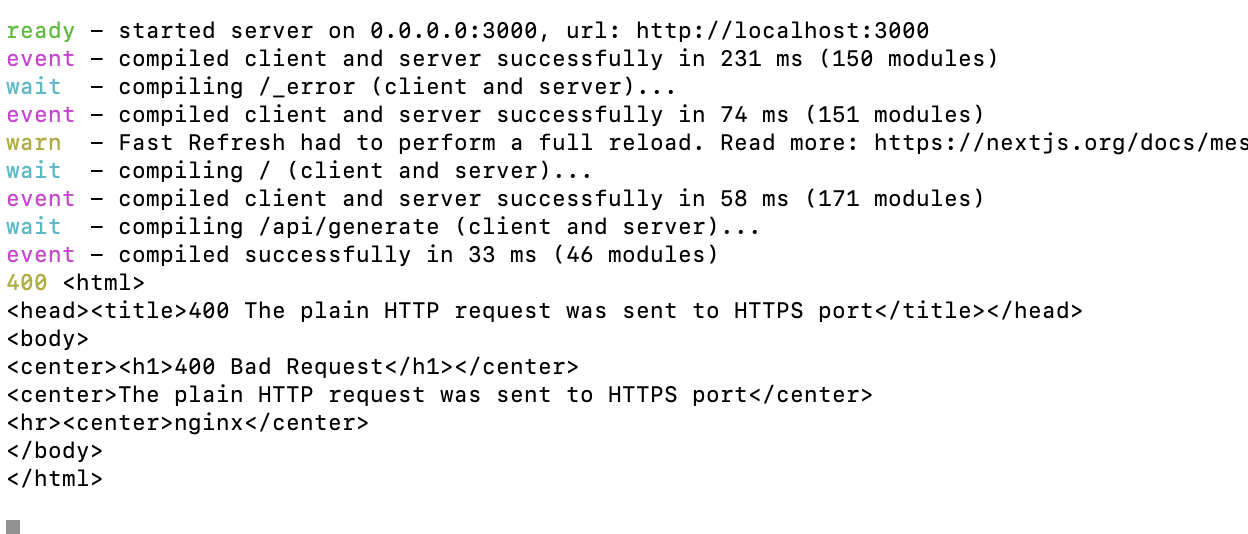

You should now be able to access the app at http://localhost:3000. For the full context behind this example app, check out the tutorial.