slmsuite combines GPU-accelerated beamforming algorithms with optimized hardware control, automated calibration, and user-friendly scripting to enable high-performance programmable optics with modern spatial light modulators.

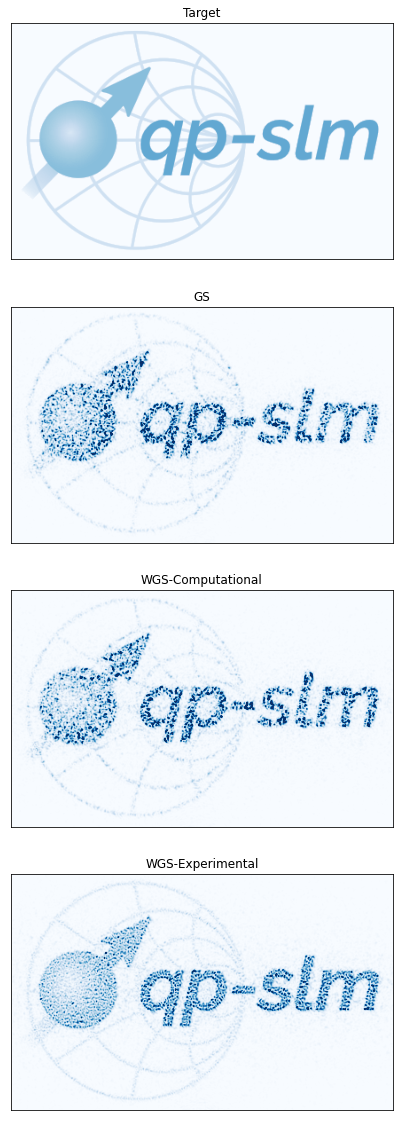

- GPU-accelerated iterative phase retrieval algorithms (e.g. Gerchberg-Saxton, weighted GS, or phase-stationary WGS)

- A simple hardware-control interface for working with various SLMs and cameras

- Automated Fourier- to image-space coordinate transformations: choose how much light goes to which camera pixels; slmsuite takes care of the rest!

- Automated wavefront calibration to improve manufacturer-supplied flatness maps or compensate for additional aberrations along the SLM imaging train

- Optimized optical focus/spot arrays using camera feedback, automated statistics, and numerous analysis routines

- Mixed region amplitude freedom, which ignores unused far-field regions in favor of optimized hologram performance in high-interest areas

- Toolboxes for structured light, imprinting sectioned phase masks, SLM unit conversion, padding and unpadding data, and more

- A fully featured example library that demonstrates these and other features
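To give a feel for the phase retrieval mentioned in the feature list, here is a minimal sketch of the basic Gerchberg-Saxton loop in plain NumPy on the CPU. It is not slmsuite's API or its GPU implementation; the function names `gerchberg_saxton` and `farfield_intensity`, the grid size, and the spot positions are all hypothetical and chosen only for illustration. It assumes a uniformly illuminated phase-only SLM and models the far field with a single FFT.

```python
import numpy as np


def gerchberg_saxton(target_amplitude, source_amplitude=None, iterations=30, seed=0):
    """Return a near-field phase mask whose far field approximates target_amplitude.

    Illustrative helper only; not part of slmsuite.
    """
    target_amplitude = np.asarray(target_amplitude, dtype=float)
    if source_amplitude is None:
        # Assumption: uniform illumination across the SLM.
        source_amplitude = np.ones_like(target_amplitude)

    # Start from a random phase guess (seeded for reproducibility).
    rng = np.random.default_rng(seed)
    phase = rng.uniform(0.0, 2.0 * np.pi, size=target_amplitude.shape)

    for _ in range(iterations):
        # Propagate the SLM-plane field to the far field.
        farfield = np.fft.fft2(source_amplitude * np.exp(1j * phase))
        # Keep the far-field phase, impose the desired amplitude.
        farfield = target_amplitude * np.exp(1j * np.angle(farfield))
        # Propagate back and keep only the phase (phase-only SLM constraint).
        phase = np.angle(np.fft.ifft2(farfield))

    return phase


def farfield_intensity(phase, source_amplitude=None):
    """Return the normalized far-field intensity produced by a phase mask."""
    if source_amplitude is None:
        source_amplitude = np.ones_like(phase)
    field = np.fft.fft2(source_amplitude * np.exp(1j * phase))
    intensity = np.abs(field) ** 2
    return intensity / intensity.sum()


# Hypothetical target: four spots on a 64 x 64 far-field grid.
spots = [(5, 12), (20, 45), (37, 8), (50, 30)]
target = np.zeros((64, 64))
for row, col in spots:
    target[row, col] = 1.0

phase = gerchberg_saxton(target)
intensity = farfield_intensity(phase)

# Fraction of the total power that lands in the requested spots.
efficiency = sum(intensity[row, col] for row, col in spots)
print(f"Power in target spots: {efficiency:.3f}")
```

The weighted variants named above additionally rescale the target amplitudes between iterations to even out spot powers; that step is omitted here to keep the sketch short.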

Install the stable version of slmsuite from PyPI using:

$ pip install slmsuite

Install the latest version of slmsuite from GitHub using:

$ pip install git+https://github.com/QPG-MIT/slmsuite

Extensive documentation and API reference are available through readthedocs.

Examples can be found embedded in documentation, live through nbviewer, or directly in source.