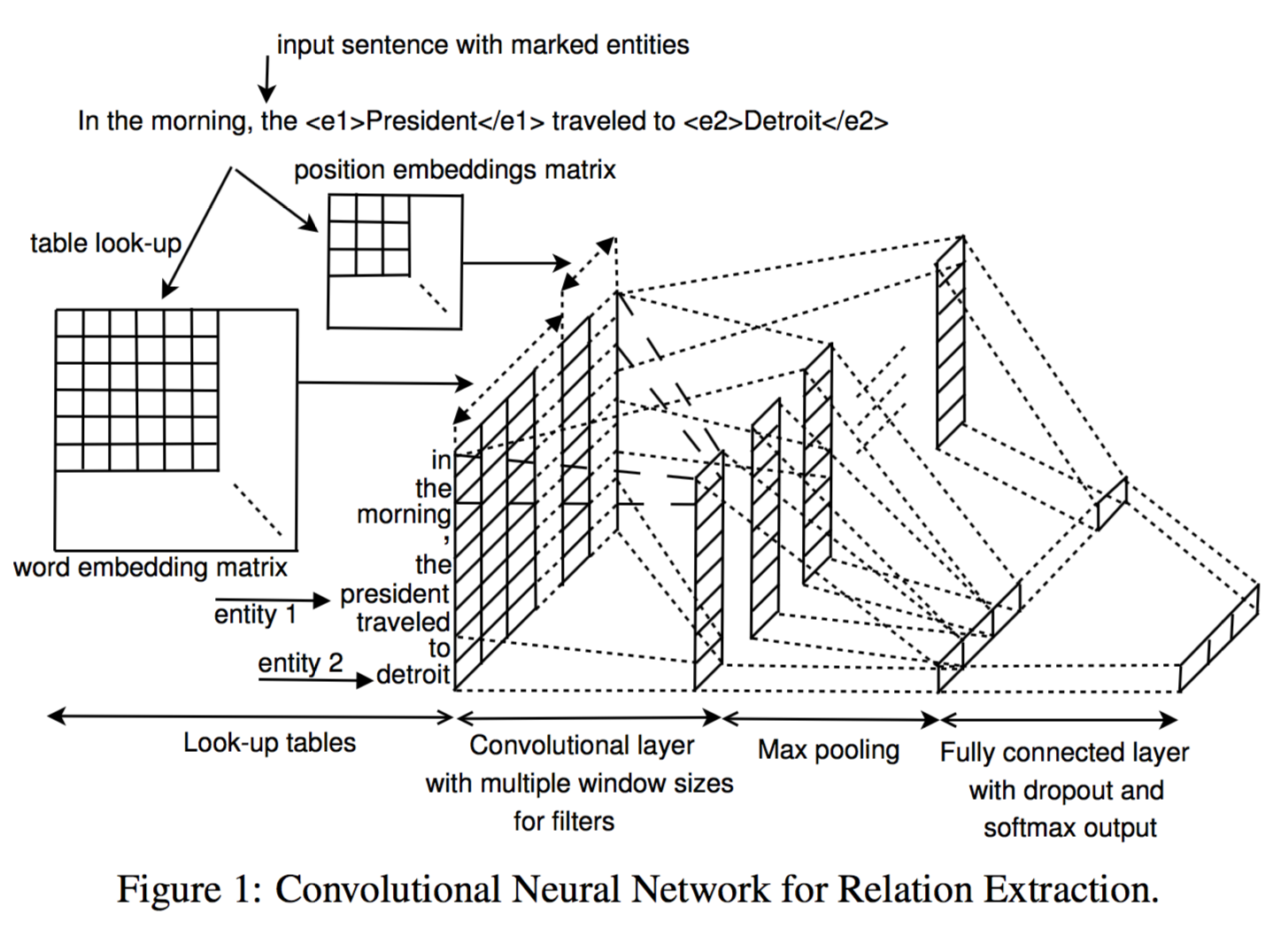

TensorFlow implementation of a deep learning approach to the relation extraction challenge (SemEval-2010 Task #8: Multi-Way Classification of Semantic Relations Between Pairs of Nominals) using convolutional neural networks.

- Training data is located in "SemEval2010_task8_all_data/SemEval2010_task8_training/TRAIN_FILE.TXT".

- "GoogleNews-vectors-negative300" is used as the pre-trained word2vec model.

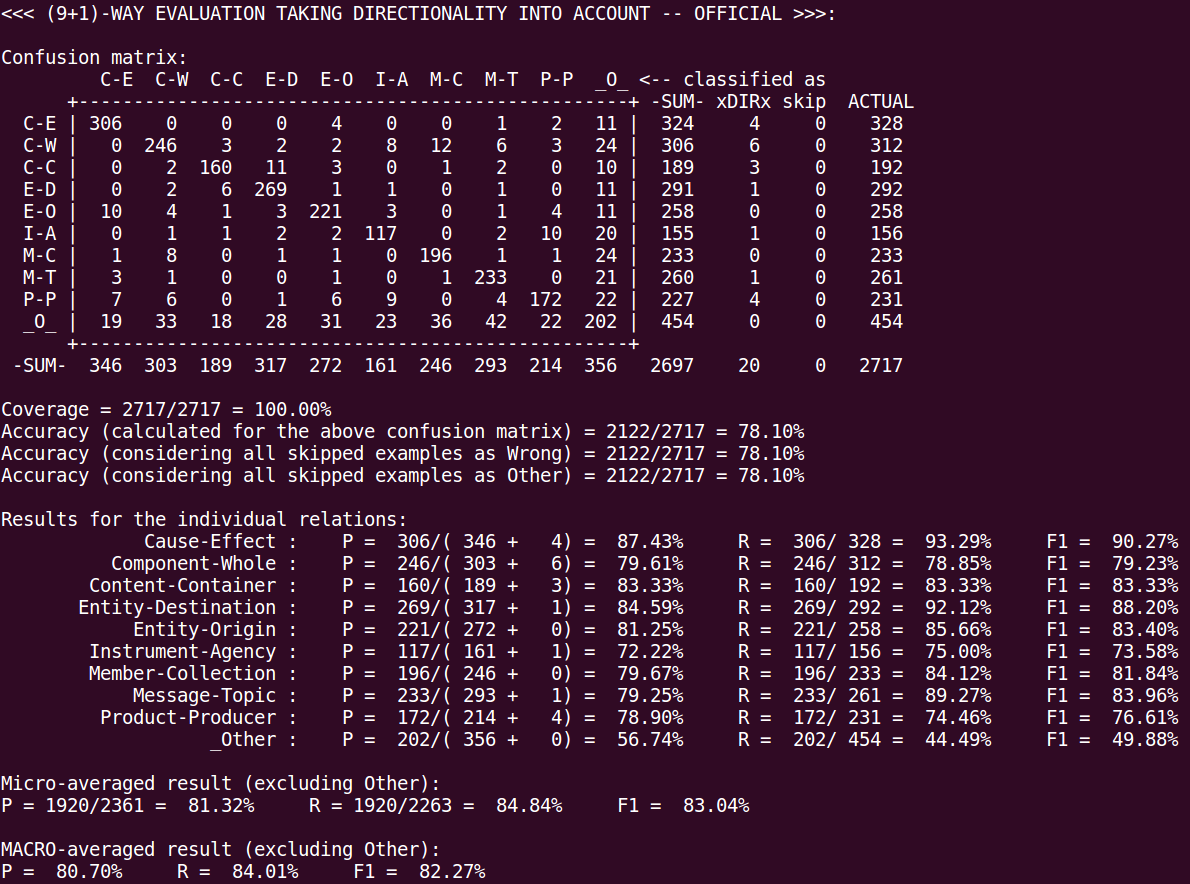

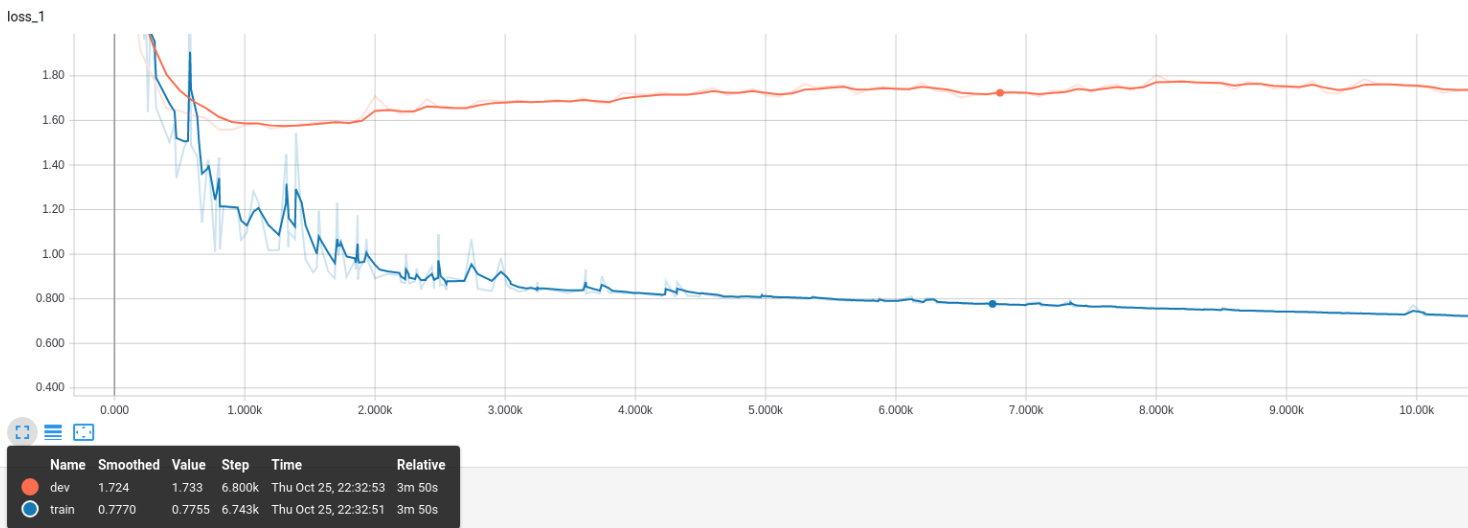

- The performance figures (accuracy and F1 score) printed during training are NOT the OFFICIAL SCORE of SemEval 2010 Task 8. To compute the official score, run the Evaluation step below with the checkpoints obtained from training.

$ python train.py --help

$ python train.py --embedding_path "GoogleNews-vectors-negative300.bin"

- You can get the OFFICIAL SCORE of SemEval 2010 Task 8 on the test data by following this step. The README describes how to compute the official score.

- Test data is located in "SemEval2010_task8_all_data/SemEval2010_task8_testing_keys/TEST_FILE_FULL.TXT".

- You MUST GIVE the --checkpoint_dir ARGUMENT, the checkpoint directory from a training run, as in the example below.

$ python eval.py --checkpoint_dir "runs/1523902663/checkpoints/"

- Given: a pair of nominals

- Goal: recognize the semantic relation between these nominals.

- Example:

  - "There were apples, pears and oranges in the bowl."

    → CONTENT-CONTAINER(pears, bowl)

  - "The cup contained tea from dried ginseng."

    → ENTITY-ORIGIN(tea, ginseng)

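To make the task concrete, here is a minimal sketch of decoding one labelled instance. It assumes the nominals are marked with `<e1>` and `<e2>` tags and that the label is written as `Relation(eX,eY)`. The function name `parse_instance` is hypothetical and is not part of this repository.

```python
import re

# Assumed markup: nominals are wrapped in <e1>...</e1> and <e2>...</e2>.
E1 = re.compile(r"<e1>(.*?)</e1>")
E2 = re.compile(r"<e2>(.*?)</e2>")
# Assumed label format: "Relation-Name(e1,e2)" or "Relation-Name(e2,e1)".
LABEL = re.compile(r"^([A-Za-z-]+)\((e[12]),(e[12])\)$")


def parse_instance(sentence, label):
    """Return (relation, first_argument, second_argument) for one instance."""
    nominals = {
        "e1": E1.search(sentence).group(1),
        "e2": E2.search(sentence).group(1),
    }
    match = LABEL.match(label)
    if match is None:
        # Undirected labels such as "Other" carry no argument order.
        return label, nominals["e1"], nominals["e2"]
    relation, first, second = match.groups()
    return relation, nominals[first], nominals[second]


example = parse_instance(
    "There were apples, <e1>pears</e1> and oranges in the <e2>bowl</e2>.",
    "Content-Container(e1,e2)",
)
print(example)  # ('Content-Container', 'pears', 'bowl')
```

The argument order in the label decides which nominal fills which role, so the same sentence labelled `Content-Container(e2,e1)` would come back with the two nominals swapped.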

- Cause-Effect(CE): An event or object leads to an effect (those cancers were caused by radiation exposures)

- Instrument-Agency(IA): An agent uses an instrument (phone operator)

- Product-Producer(PP): A producer causes a product to exist (a factory manufactures suits)

- Content-Container(CC): An object is physically stored in a delineated area of space (a bottle full of honey was weighed)

- Entity-Origin(EO): An entity is coming or is derived from an origin, e.g., position or material (letters from foreign countries)

- Entity-Destination(ED): An entity is moving towards a destination (the boy went to bed)

- Component-Whole(CW): An object is a component of a larger whole (my apartment has a large kitchen)

- Member-Collection(MC): A member forms a nonfunctional part of a collection (there are many trees in the forest)

- Message-Topic(MT): An act of communication, written or spoken, is about a topic (the lecture was about semantics)

- OTHER: If none of the above nine relations appears to be suitable.
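The inventory above can be turned into integer class labels for a classifier. The sketch below assumes a direction-aware encoding, in which each of the nine relations appears once per argument order and Other is undirected, giving 19 classes. The names `RELATIONS` and `build_label_map` are illustrative and may not match how this repository encodes its labels.

```python
# The nine directed relations listed above; "Other" is added separately.
RELATIONS = [
    "Cause-Effect", "Instrument-Agency", "Product-Producer",
    "Content-Container", "Entity-Origin", "Entity-Destination",
    "Component-Whole", "Member-Collection", "Message-Topic",
]


def build_label_map(relations=RELATIONS):
    """Map each label string to an integer id (assumed encoding)."""
    labels = ["Other"]
    for name in relations:
        # One class per argument order of the same relation.
        labels.append(f"{name}(e1,e2)")
        labels.append(f"{name}(e2,e1)")
    return {label: idx for idx, label in enumerate(labels)}


label2id = build_label_map()
print(len(label2id))  # 19
```

Keeping the two directions as separate classes lets a single softmax layer predict both the relation and its argument order.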


SemEval-2010 Task #8 Dataset [Download]

| Relation | Train Data | Test Data | Total Data |
|---|---|---|---|
| Cause-Effect | 1,003 (12.54%) | 328 (12.07%) | 1,331 (12.42%) |
| Instrument-Agency | 504 (6.30%) | 156 (5.74%) | 660 (6.16%) |
| Product-Producer | 717 (8.96%) | 231 (8.50%) | 948 (8.85%) |
| Content-Container | 540 (6.75%) | 192 (7.07%) | 732 (6.83%) |
| Entity-Origin | 716 (8.95%) | 258 (9.50%) | 974 (9.09%) |
| Entity-Destination | 845 (10.56%) | 292 (10.75%) | 1,137 (10.61%) |
| Component-Whole | 941 (11.76%) | 312 (11.48%) | 1,253 (11.69%) |
| Member-Collection | 690 (8.63%) | 233 (8.58%) | 923 (8.61%) |
| Message-Topic | 634 (7.92%) | 261 (9.61%) | 895 (8.35%) |
| Other | 1,410 (17.63%) | 454 (16.71%) | 1,864 (17.39%) |
| Total | 8,000 (100.00%) | 2,717 (100.00%) | 10,717 (100.00%) |
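As a quick sanity check on the counts in the table above, the following sketch recomputes the column totals and confirms that each row's train and test counts add up to its total. The names `COUNTS`, `column_totals`, and `inconsistent_rows` are made up for this example.

```python
# Counts copied from the table above: relation -> (train, test, total).
COUNTS = {
    "Cause-Effect": (1003, 328, 1331),
    "Instrument-Agency": (504, 156, 660),
    "Product-Producer": (717, 231, 948),
    "Content-Container": (540, 192, 732),
    "Entity-Origin": (716, 258, 974),
    "Entity-Destination": (845, 292, 1137),
    "Component-Whole": (941, 312, 1253),
    "Member-Collection": (690, 233, 923),
    "Message-Topic": (634, 261, 895),
    "Other": (1410, 454, 1864),
}


def column_totals(counts):
    """Sum the train and test columns over all relations."""
    train = sum(row[0] for row in counts.values())
    test = sum(row[1] for row in counts.values())
    return train, test


def inconsistent_rows(counts):
    """List relations whose train + test count differs from the total."""
    return [name for name, (train, test, total) in counts.items()
            if train + test != total]


print(column_totals(COUNTS))      # (8000, 2717)
print(inconsistent_rows(COUNTS))  # []
```

Other is the largest single class in both splits, which is worth keeping in mind when reading plain accuracy numbers.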