Automated documentation for event-driven applications built with Spring Boot

We are on Discord for questions, discussions, requests, etc. Join us at https://discord.gg/HZYqd5RPTd

- About

- Demo & Documentation

- Why You Should Use Springwolf

- Usage & Example

- Who's Using Springwolf

- How To Participate

- Contributors

About

This project is inspired by Springfox. It documents asynchronous APIs using the AsyncAPI specification.

springwolf-ui adds a web UI, much like that of Springfox, and allows easy publishing of auto-generated payload examples.

Demo & Documentation

Take a look at the Springwolf live demo and a generated AsyncAPI document.

springwolf.dev includes the quickstart guide and full documentation.

Why You Should Use Springwolf

Springwolf exploits the fact that you have already fully described your consumer endpoints (with listener annotations such as @KafkaListener, @RabbitListener, @SqsListener, etc.) and generates the documentation based on this information.
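To make the idea of annotation-based discovery more concrete, here is a minimal plain-Java sketch. It assumes a hypothetical `@DemoListener` annotation standing in for listener annotations such as `@KafkaListener`, plus hypothetical `OrderConsumer` and `ListenerScanner` classes. It only collects a map from topic to payload type via reflection; it is a simplified illustration, not Springwolf's implementation.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical stand-in for a listener annotation such as @KafkaListener.
// RUNTIME retention is required so that reflection can see it.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface DemoListener {
    String topic();
}

// Hypothetical consumer: topic and payload type are already declared here.
class OrderConsumer {
    @DemoListener(topic = "order-created")
    public void onOrderCreated(String orderJson) { }

    @DemoListener(topic = "order-cancelled")
    public void onOrderCancelled(Long orderId) { }

    // Not annotated, so the scanner ignores it.
    public void helper() { }
}

class ListenerScanner {
    // Maps each topic to the simple name of the first parameter type
    // of the annotated method (the payload). TreeMap keeps output sorted.
    static Map<String, String> scan(Class<?> type) {
        Map<String, String> channels = new TreeMap<>();
        for (Method method : type.getDeclaredMethods()) {
            DemoListener listener = method.getAnnotation(DemoListener.class);
            if (listener != null && method.getParameterCount() > 0) {
                channels.put(listener.topic(), method.getParameterTypes()[0].getSimpleName());
            }
        }
        return channels;
    }
}

class ListenerScanDemo {
    public static void main(String[] args) {
        System.out.println(ListenerScanner.scan(OrderConsumer.class));
    }
}
```

Running `ListenerScanDemo` prints `{order-cancelled=Long, order-created=String}`: no extra configuration is needed because the listener methods already carry the channel and payload information.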

Share API Schema Definition

The AsyncAPI-conformant documentation can be integrated into API hubs (like Backstage) or shared with others as a JSON/YAML file.

UI Based API Testing

In projects using asynchronous APIs, you may often find yourself needing to manually send a message to some topic, whether you are testing a new feature, debugging, or trying to understand a flow.

Using the automatically generated example payload object as a suggestion, you can publish it to the correct channel with a single click.
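As a rough illustration of how an example payload can be derived from a payload type, the sketch below fills each field of a Java record with a placeholder value. The `OrderCreated` record, the `ExamplePayloadGenerator` class and the placeholder values are hypothetical; this is a simplified sketch, not how Springwolf itself generates examples. It requires Java 16+ for records.

```java
import java.lang.reflect.RecordComponent;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical payload type, e.g. the parameter of a listener method.
record OrderCreated(String orderId, int quantity, boolean express) { }

class ExamplePayloadGenerator {
    // Builds an example object by assigning a placeholder value per field,
    // keeping the record's declaration order (LinkedHashMap).
    static Map<String, Object> example(Class<? extends Record> type) {
        Map<String, Object> example = new LinkedHashMap<>();
        for (RecordComponent component : type.getRecordComponents()) {
            example.put(component.getName(), placeholder(component.getType()));
        }
        return example;
    }

    // Hypothetical placeholder values chosen for this sketch only.
    private static Object placeholder(Class<?> fieldType) {
        if (fieldType == String.class) return "string";
        if (fieldType == int.class || fieldType == Integer.class) return 0;
        if (fieldType == boolean.class || fieldType == Boolean.class) return true;
        return null; // other types are left empty in this sketch
    }
}

class ExamplePayloadDemo {
    public static void main(String[] args) {
        System.out.println(ExamplePayloadGenerator.example(OrderCreated.class));
    }
}
```

Running `ExamplePayloadDemo` prints `{orderId=string, quantity=0, express=true}`, an object that can then be edited and published as a test message.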

Usage & Example

Protocols not supported natively can still be documented using the @AsyncListener and @AsyncPublisher annotations.

More details in the documentation.

| Plugin | Example project | Current version | SNAPSHOT version |

|---|---|---|---|

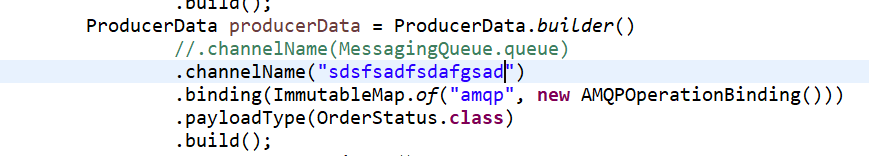

| AMQP | AMQP Example |  |

|

| AWS SNS | AWS SNS Example |  |

|

| AWS SQS | AWS SQS Example |  |

|

| Cloud Stream | Cloud Stream Example |  |

|

| JMS | JMS Example |  |

|

| Kafka | Kafka Example |  |

|

The following tables list all artifacts, bindings and add-ons.

| Artifact | Current version | SNAPSHOT version |
|---|---|---|
| AsyncAPI implementation | | |
| Core | | |
| UI | | |

| Bindings | Current version | SNAPSHOT version |
|---|---|---|
| AMQP Binding | | |
| AWS SNS Binding | | |
| AWS SQS Binding | | |
| Google PubSub Binding | | |
| Kafka Binding | | |
| JMS Binding | | |

| Add-on | Current version | SNAPSHOT version |
|---|---|---|
| Common Model Converter | | |
| Generic Binding | | |
| Json Schema | | |
| Kotlinx Serialization Model Converter | | |

Who's Using Springwolf

- 2bPrecise

- b.well Connected Health

- DGARNE - Public Service of Wallonia (BE)

- LVM Versicherung

- OTTO

- Teambank

- aconium

Comment in this PR to add your company and spread the word.

How To Participate

Check out our CONTRIBUTING.md guide.

Testing SNAPSHOT version

Add the following to the repositories closure in build.gradle:

```groovy
repositories {
    // ...
    maven {
        url "https://s01.oss.sonatype.org/content/repositories/snapshots"
    }
}
```

Or add the repository to your pom.xml if you are using Maven:

```xml
<repositories>
    <repository>
        <id>oss-sonatype</id>
        <name>oss-sonatype</name>
        <url>https://s01.oss.sonatype.org/content/repositories/snapshots</url>
        <snapshots>
            <enabled>true</enabled>
        </snapshots>
    </repository>
</repositories>
```

To work with local builds, run the publishToMavenLocal task. The current version number is set in the .env file.

Contributors

Thanks go to these wonderful people (emoji key):

To add yourself as a contributor, install the all-contributors CLI and run:

```shell
all-contributors check
all-contributors add <username> code
all-contributors generate
```