Podinfo is a tiny web application made with Go that showcases best practices of running microservices in Kubernetes. Podinfo is used by CNCF projects like Flux and Flagger for end-to-end testing and workshops.

Specifications:

- Health checks (readiness and liveness)

- Graceful shutdown on interrupt signals

- File watcher for secrets and configmaps

- Instrumented with Prometheus and OpenTelemetry

- Structured logging with zap

- 12-factor app with viper

- Fault injection (random errors and latency)

- Swagger docs

- Timoni, Helm and Kustomize installers

- End-to-End testing with Kubernetes Kind and Helm

- Multi-arch container image with Docker buildx and GitHub Actions

- Container image signing with Sigstore cosign

- SBOMs and SLSA Provenance embedded in the container image

- CVE scanning with Trivy

Web API:

- `GET /` prints runtime information

- `GET /version` prints podinfo version and git commit hash

- `GET /metrics` returns HTTP request duration and Go runtime metrics

- `GET /healthz` used by Kubernetes liveness probe

- `GET /readyz` used by Kubernetes readiness probe

- `POST /readyz/enable` signals the Kubernetes LB that this instance is ready to receive traffic

- `POST /readyz/disable` signals the Kubernetes LB to stop sending requests to this instance

- `GET /status/{code}` returns the status code

- `GET /panic` crashes the process with exit code 255

- `POST /echo` forwards the call to the backend service and echoes the posted content

- `GET /env` returns the environment variables as a JSON array

- `GET /headers` returns a JSON with the request HTTP headers

- `GET /delay/{seconds}` waits for the specified period

- `POST /token` issues a JWT token valid for one minute: `JWT=$(curl -sd 'anon' podinfo:9898/token | jq -r .token)`

- `GET /token/validate` validates the JWT token: `curl -H "Authorization: Bearer $JWT" podinfo:9898/token/validate`

- `GET /configs` returns a JSON with configmaps and/or secrets mounted in the `config` volume

- `POST/PUT /cache/{key}` saves the posted content to Redis

- `GET /cache/{key}` returns the content from Redis if the key exists

- `DELETE /cache/{key}` deletes the key from Redis if it exists

- `POST /store` writes the posted content to disk at /data/hash and returns the SHA1 hash of the content

- `GET /store/{hash}` returns the content of the file /data/hash if it exists

- `GET /ws/echo` echoes content via websockets: `podcli ws ws://localhost:9898/ws/echo`

- `GET /chunked/{seconds}` uses `transfer-encoding` type `chunked` to give a partial response and then waits for the specified period

- `GET /swagger.json` returns the API Swagger docs, used for Linkerd service profiling and Gloo routes discovery
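A few of these endpoints can be exercised together from a shell. The sketch below assumes podinfo is reachable at `http://localhost:9898` (an assumed address; set `PODINFO_URL` for your setup) and that `curl` is installed. The helper names `http_code`, `wait_ready` and `readiness_roundtrip` are illustrative and not part of podinfo.

```shell
# Assumed base URL; override PODINFO_URL to match your environment.
PODINFO_URL="${PODINFO_URL:-http://localhost:9898}"

# Print only the HTTP status code of a request; all arguments pass through to curl.
http_code() {
  curl -s -o /dev/null -w '%{http_code}' "$@"
}

# Poll GET /readyz until it succeeds or the attempts run out (default: 30).
wait_ready() {
  attempts="${1:-30}"
  while [ "$attempts" -gt 0 ]; do
    if curl -fs -o /dev/null "$PODINFO_URL/readyz"; then
      return 0
    fi
    attempts=$((attempts - 1))
    sleep 1
  done
  return 1
}

# Disable readiness, print the code /readyz now returns, then re-enable it.
readiness_roundtrip() {
  curl -s -o /dev/null -X POST "$PODINFO_URL/readyz/disable"
  echo "readyz while disabled: $(http_code "$PODINFO_URL/readyz")"
  curl -s -o /dev/null -X POST "$PODINFO_URL/readyz/enable"
  echo "readyz after enable: $(http_code "$PODINFO_URL/readyz")"
}
```

Running `wait_ready 10 && readiness_roundtrip` waits up to roughly ten seconds for the instance, then prints the status codes observed while readiness is disabled and after it is re-enabled.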

gRPC API:

- `/grpc.health.v1.Health/Check` health checking

- `/grpc.EchoService/Echo` echoes the received content

- `/grpc.VersionService/Version` returns podinfo version and Git commit hash

- `/grpc.DelayService/Delay` returns a successful response after the number of seconds given in the body of the gRPC request

- `/grpc.EnvService/Env` returns environment variables as a JSON array

- `/grpc.HeaderService/Header` returns the headers present in the gRPC request. Any custom header sent with the request is returned as well

- `/grpc.InfoService/Info` returns the runtime information

- `/grpc.PanicService/Panic` crashes the process with gRPC status code '1 CANCELLED'

- `/grpc.StatusService/Status` returns the gRPC status code given in the request body

- `/grpc.TokenService/TokenGenerate` issues a JWT token valid for one minute

- `/grpc.TokenService/TokenValidate` validates the JWT token
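The health method lives in the standard `grpc.health.v1` package, so generic gRPC tooling can call it. A minimal sketch using `grpcurl`, assuming the gRPC listener is enabled and reachable without TLS at `localhost:9999`, and that server reflection is available so no proto files are needed. The address, the reflection requirement and the function name `grpc_health` are all assumptions, not facts stated above.

```shell
# Assumed gRPC address; override PODINFO_GRPC_ADDR to match your deployment.
PODINFO_GRPC_ADDR="${PODINFO_GRPC_ADDR:-localhost:9999}"

# Call the health check method over a plaintext (non-TLS) connection.
grpc_health() {
  grpcurl -plaintext "$PODINFO_GRPC_ADDR" grpc.health.v1.Health/Check
}
```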

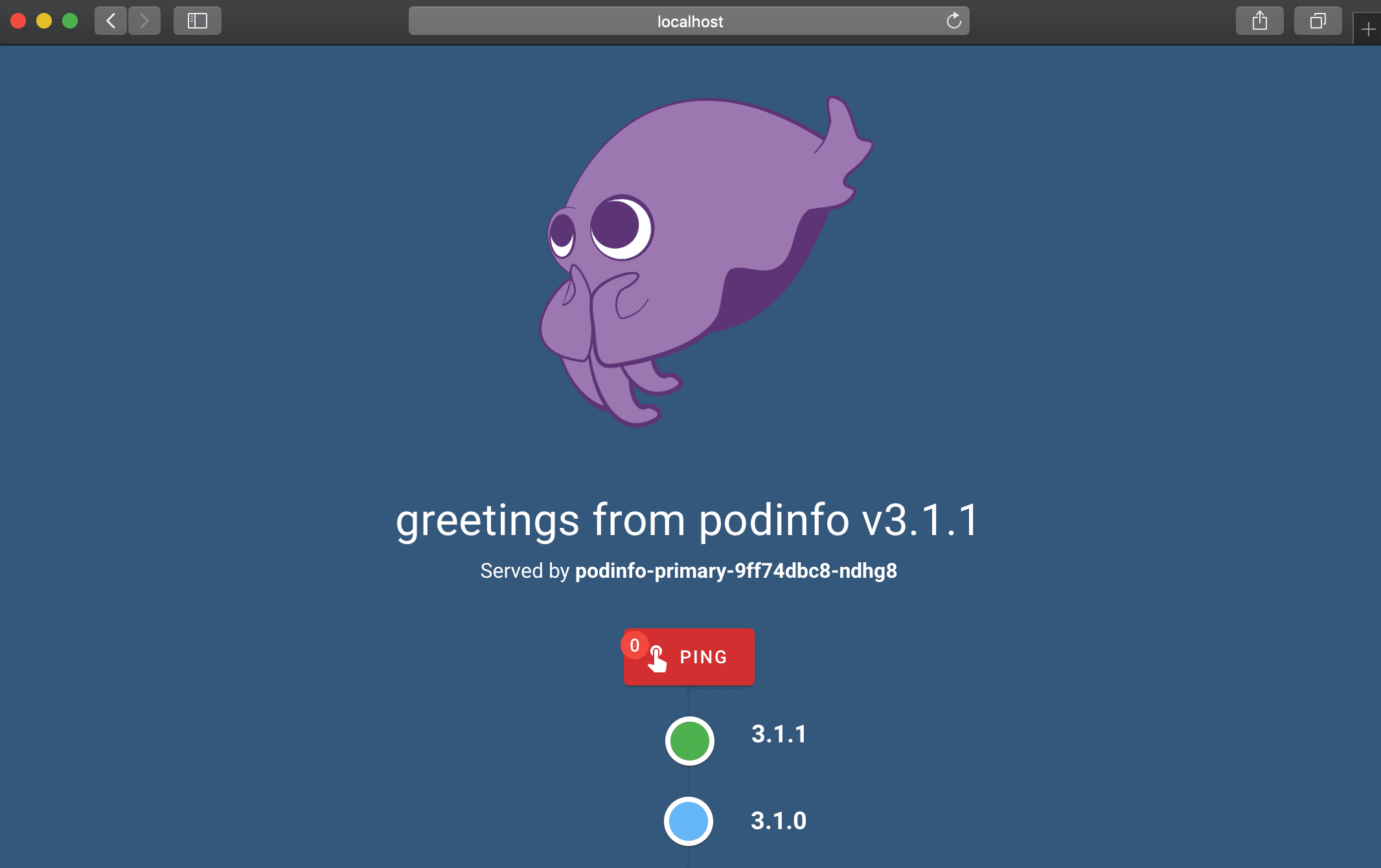

Web UI:

To access the Swagger UI open <podinfo-host>/swagger/index.html in a browser.
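The same API description can be inspected from the command line. A small sketch that lists the documented paths from `/swagger.json`, assuming podinfo is reachable at `http://localhost:9898` (an assumed address), that `curl` and `jq` are installed, and that the document keeps its routes under a top-level `paths` object, as Swagger 2.0 documents do. The function name `list_api_paths` is illustrative.

```shell
# Assumed base URL; override PODINFO_URL to match your environment.
PODINFO_URL="${PODINFO_URL:-http://localhost:9898}"

# Fetch the Swagger document and print one documented path per line.
list_api_paths() {
  curl -fsS "$PODINFO_URL/swagger.json" | jq -r '.paths | keys[]'
}
```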

- Getting started with Timoni

- Getting started with Flux

- Progressive Delivery with Flagger and Linkerd

- Automated canary deployments with Kubernetes Gateway API

To install Podinfo on Kubernetes the minimum required version is Kubernetes v1.23.

Install with Timoni:

    timoni -n default apply podinfo oci://ghcr.io/stefanprodan/modules/podinfo

Install from github.io:

    helm repo add podinfo https://stefanprodan.github.io/podinfo

    helm upgrade --install --wait frontend \
      --namespace test \
      --set replicaCount=2 \
      --set backend=http://backend-podinfo:9898/echo \
      podinfo/podinfo

    helm test frontend --namespace test

    helm upgrade --install --wait backend \
      --namespace test \
      --set redis.enabled=true \
      podinfo/podinfo

Install from ghcr.io:

    helm upgrade --install --wait podinfo --namespace default \
      oci://ghcr.io/stefanprodan/charts/podinfo

Install with Kustomize:

    kubectl apply -k github.com/stefanprodan/podinfo//kustomize

Run with Docker:

    docker run -dp 9898:9898 stefanprodan/podinfo

To install podinfo on a Kubernetes cluster and keep it up to date with the latest release automatically, you can use Flux.

Install the Flux CLI on macOS and Linux using Homebrew:

    brew install fluxcd/tap/flux

Install the Flux controllers needed for Helm operations:

    flux install \
      --namespace=flux-system \
      --network-policy=false \
      --components=source-controller,helm-controller

Add podinfo's Helm repository to your cluster and configure Flux to check for new chart releases every ten minutes:

    flux create source helm podinfo \
      --namespace=default \
      --url=https://stefanprodan.github.io/podinfo \
      --interval=10m

Create a podinfo-values.yaml file locally:

    cat > podinfo-values.yaml <<EOL
    replicaCount: 2
    resources:
      limits:
        memory: 256Mi
      requests:
        cpu: 100m
        memory: 64Mi
    EOL

Create a Helm release for deploying podinfo in the default namespace:

    flux create helmrelease podinfo \
      --namespace=default \
      --source=HelmRepository/podinfo \
      --release-name=podinfo \
      --chart=podinfo \
      --chart-version=">5.0.0" \
      --values=podinfo-values.yaml

Based on the above definition, Flux will upgrade the release automatically when a new version of podinfo is released. If the upgrade fails, Flux can roll back to the previous working version.
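The `--chart-version` value is a semver range, so the range decides which releases Flux is allowed to move to. As a sketch, the variant below keeps the release within a single major version. The range `>=6.0.0 <7.0.0`, the variable `CHART_RANGE` and the function name are illustrative assumptions; the remaining flags mirror the command above.

```shell
# Illustrative default range: stay on 6.x and never jump to 7.0.0 or later.
CHART_RANGE='>=6.0.0 <7.0.0'

# Create or update the release; an optional first argument overrides the range.
create_podinfo_release() {
  range="${1:-$CHART_RANGE}"
  flux create helmrelease podinfo \
    --namespace=default \
    --source=HelmRepository/podinfo \
    --release-name=podinfo \
    --chart=podinfo \
    --chart-version="$range" \
    --values=podinfo-values.yaml
}
```

Calling `create_podinfo_release` applies the default range, while `create_podinfo_release '>5.0.0'` reproduces the open-ended behavior of the original command.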

You can check what version is currently deployed with:

    flux get helmreleases -n default

To delete podinfo's Helm repository and release from your cluster, run:

    flux -n default delete source helm podinfo
    flux -n default delete helmrelease podinfo

If you wish to manage the lifecycle of your applications in a GitOps manner, check out this workflow example for multi-env deployments with Flux, Kustomize and Helm.
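For a Git-based workflow, the `flux create` commands can emit manifests instead of applying them to the cluster. A minimal sketch using the `--export` flag, assuming the same names and flags as the commands above. The output directory `./clusters/demo`, the file names and the function name are hypothetical.

```shell
# Write the HelmRepository and HelmRelease manifests to files instead of applying them.
export_podinfo_manifests() {
  out_dir="${1:-./clusters/demo}"   # hypothetical target directory in a Git checkout
  mkdir -p "$out_dir"

  flux create source helm podinfo \
    --namespace=default \
    --url=https://stefanprodan.github.io/podinfo \
    --interval=10m \
    --export > "$out_dir/podinfo-source.yaml"

  flux create helmrelease podinfo \
    --namespace=default \
    --source=HelmRepository/podinfo \
    --release-name=podinfo \
    --chart=podinfo \
    --chart-version=">5.0.0" \
    --values=podinfo-values.yaml \
    --export > "$out_dir/podinfo-release.yaml"
}
```

The resulting files can then be committed to the repository that Flux reconciles, so changes to the release go through Git rather than through ad hoc CLI calls.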