An example of streaming ChatGPT responses via the OpenAI v4.0 Node SDK. See this Stack post for more information.

To see a version that does the streaming over HTTP and only writes to the database at the end, see the http-streaming branch or this repo.
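The core idea of that HTTP variant can be sketched as follows. This is a minimal sketch under stated assumptions: the stream is treated as a plain async iterable of text deltas, and `relayThenPersist`, `persist`, and `fakeDeltas` are hypothetical names, not functions from this repo. Each delta is handed to the caller (which would write it to the HTTP response) as soon as it arrives, and the full text is persisted with a single write after the stream ends.

```typescript
// Hypothetical helper: forward each delta to the HTTP client immediately,
// but write to the database only once, after the stream has finished.
async function* relayThenPersist(
  deltas: AsyncIterable<string>,
  persist: (fullText: string) => Promise<void>, // stands in for one DB write
): AsyncGenerator<string> {
  let fullText = "";
  for await (const delta of deltas) {
    fullText += delta;
    yield delta; // the caller writes this to the HTTP response right away
  }
  await persist(fullText); // a single write at the end, not one per chunk
}

// Fake source standing in for the OpenAI stream (assumption for the example).
async function* fakeDeltas(parts: string[]): AsyncGenerator<string> {
  for (const part of parts) yield part;
}

// Collects what the client would receive and what the database would store.
async function demo(): Promise<{ sent: string[]; writes: string[] }> {
  const sent: string[] = [];
  const writes: string[] = [];
  const persist = async (text: string): Promise<void> => {
    writes.push(text);
  };
  for await (const delta of relayThenPersist(fakeDeltas(["Hel", "lo", "!"]), persist)) {
    sent.push(delta);
  }
  return { sent, writes };
}
```

Other users only see the reply once the final write lands, while the requesting client sees it token by token.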

This app generates a random name for you with Faker.js and lets you chat with other users. Open multiple tabs to try it out!

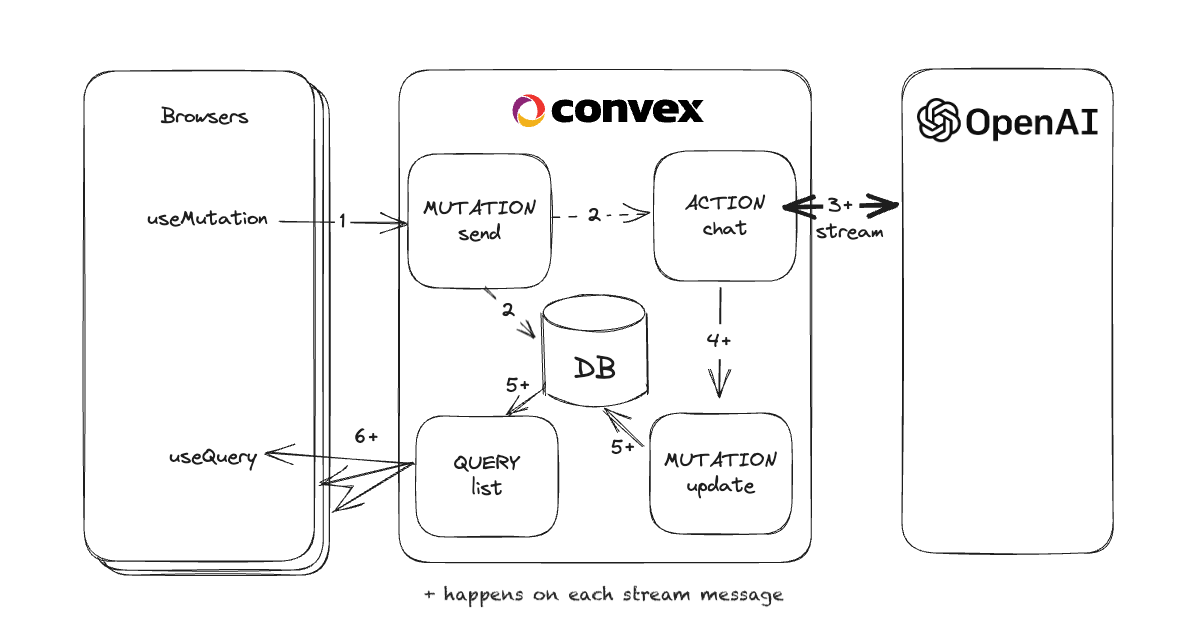

If your message includes "@gpt" it will kick off a request to OpenAI's chat completion API and stream the response, updating the message as data comes back from OpenAI.
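A minimal sketch of that flow, assuming an in-memory `Map` in place of the Convex messages table and an async iterable of chunks shaped like the OpenAI v4 SDK's streamed chat completion chunks (`choices[0].delta.content`). `handleNewMessage`, `MessageStore`, the `"ChatGPT"` author, and the `"..."` placeholder are hypothetical; the actual logic lives in messages.ts and openai.ts and may differ.

```typescript
// Minimal chunk shape, modeled on streamed chat completion chunks.
type ChatChunk = { choices: { delta: { content?: string | null } }[] };

type Message = { author: string; body: string };

// Hypothetical in-memory stand-in for the database table.
class MessageStore {
  private nextId = 0;
  readonly messages = new Map<number, Message>();
  readonly bodyHistory: string[] = []; // records every patch, for illustration

  insert(msg: Message): number {
    const id = this.nextId++;
    this.messages.set(id, msg);
    return id;
  }

  patch(id: number, body: string): void {
    const msg = this.messages.get(id);
    if (!msg) throw new Error(`no message with id ${id}`);
    this.messages.set(id, { ...msg, body });
    this.bodyHistory.push(body);
  }
}

async function handleNewMessage(
  store: MessageStore,
  msg: Message,
  openStream: () => AsyncIterable<ChatChunk>, // stands in for the OpenAI streaming call
): Promise<void> {
  store.insert(msg);
  if (!msg.body.includes("@gpt")) return; // only "@gpt" messages trigger a request

  // Insert a placeholder reply, then grow it as chunks arrive.
  const replyId = store.insert({ author: "ChatGPT", body: "..." });
  let body = "";
  for await (const chunk of openStream()) {
    const delta = chunk.choices[0]?.delta?.content ?? "";
    if (!delta) continue; // some chunks carry no text
    body += delta;
    store.patch(replyId, body); // in Convex, subscribed clients would see each update
  }
}
```

Patching on every chunk costs one database write per chunk; that is the trade-off the http-streaming branch avoids by writing once at the end.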

- The frontend logic is all in App.tsx.
- The server logic that stores and updates messages in the database is in messages.ts.
- The asynchronous server function that makes the streaming request to OpenAI is in openai.ts.
- The initial messages that are scheduled to be sent are in init.ts.

npm install

npm run dev

This will configure a Convex project if you don't already have one.

The app requires an OpenAI API key. Once your backend has been configured, set the environment variable OPEN_API_KEY (it should start with sk-) in your Convex backend via the dashboard. You can also open the dashboard with:

npx convex dashboard
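A small sketch of checking that key before calling OpenAI. It assumes the server function reads the variable from `process.env` (Convex exposes dashboard environment variables to functions that way); `readOpenAIKey` is a hypothetical helper, and the environment is passed in as a plain object so the check can be exercised without a backend.

```typescript
// Hypothetical helper: read the key named in this README and fail early
// with a clear message if it is missing or malformed.
function readOpenAIKey(env: Record<string, string | undefined>): string {
  const key = env["OPEN_API_KEY"];
  if (!key) {
    throw new Error("OPEN_API_KEY is not set; add it in the Convex dashboard.");
  }
  if (!key.startsWith("sk-")) {
    throw new Error("OPEN_API_KEY should start with sk-");
  }
  return key;
}
```

Inside a server function this would be called as `readOpenAIKey(process.env)`.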

Once npm run dev successfully syncs, if the database is empty, it will schedule some messages to be sent so you can see the app in action.
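The check-then-schedule behavior can be sketched as a pure planning step. `planInitialMessages`, the `Seed` shape, and the fixed spacing are hypothetical. In a Convex mutation each planned entry could be handed to `ctx.scheduler.runAfter(delayMs, functionRef, args)`, but init.ts may structure this differently.

```typescript
type Seed = { author: string; body: string };
type ScheduledSend = { delayMs: number; message: Seed };

// Hypothetical: seed only an empty table, spacing the messages out in time
// so they appear one after another instead of all at once.
function planInitialMessages(
  existingCount: number,
  seeds: Seed[],
  spacingMs: number,
): ScheduledSend[] {
  if (existingCount > 0) return []; // database already has data: schedule nothing
  return seeds.map((message, i) => ({ delayMs: i * spacingMs, message }));
}
```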

It will then start two processes in one terminal: vite for the frontend and npx convex dev for syncing changes to Convex server functions. See the scripts section of package.json for details.

Convex is a hosted backend platform with a built-in database that lets you write your database schema and server functions in TypeScript. Server-side database queries automatically cache and subscribe to data, powering a realtime useQuery hook in our React client. There are also Python, Rust, ReactNative, and Node clients, as well as a straightforward HTTP API.

The database supports NoSQL-style documents with relationships and custom indexes (including on fields in nested objects).

The query and mutation server functions have transactional, low-latency access to the database and leverage our v8 runtime with determinism guardrails to provide the strongest ACID guarantees on the market: immediate consistency, serializable isolation, and automatic conflict resolution via optimistic multi-version concurrency control (OCC / MVCC).
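To make "optimistic" concrete, here is a toy illustration of generic optimistic concurrency control. It is not Convex's implementation and does not model multi-versioning; `ToyStore` and `runTransaction` are hypothetical. A transaction records the version of each key it read, computes its writes, and commits only if none of those versions changed in the meantime; otherwise the whole transaction is retried from a fresh read.

```typescript
// Toy illustration of optimistic concurrency control, NOT Convex's implementation.
type Versioned = { value: number; version: number };

class ToyStore {
  private data = new Map<string, Versioned>();

  read(key: string): Versioned {
    return this.data.get(key) ?? { value: 0, version: 0 };
  }

  // Commit succeeds only if every key read is still at the version observed.
  commit(readSet: Map<string, number>, writes: Map<string, number>): boolean {
    for (const [key, seenVersion] of readSet) {
      if (this.read(key).version !== seenVersion) return false; // conflict detected
    }
    for (const [key, value] of writes) {
      this.data.set(key, { value, version: this.read(key).version + 1 });
    }
    return true;
  }
}

// Run a read-modify-write on one key, retrying from scratch on conflict.
function runTransaction(
  store: ToyStore,
  key: string,
  update: (v: number) => number,
  maxRetries = 5,
): number {
  for (let attempt = 0; attempt <= maxRetries; attempt++) {
    const snapshot = store.read(key);
    const next = update(snapshot.value);
    const ok = store.commit(new Map([[key, snapshot.version]]), new Map([[key, next]]));
    if (ok) return next;
  }
  throw new Error("too many conflicts");
}
```

Because the transaction body is a deterministic function of what it read, rerunning it after a conflict is safe, which is why determinism matters for automatic retries.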

The action server functions have access to external APIs and enable other side effects and non-determinism in either our optimized v8 runtime or a more flexible Node runtime.

Functions can run in the background via scheduling and cron jobs.

Development is cloud-first, with hot reloads for server function editing via the CLI. There is a dashboard UI to browse and edit data, edit environment variables, view logs, run server functions, and more.

There are built-in features for reactive pagination, file storage, reactive search, https endpoints (for webhooks), streaming import/export, and runtime data validation for function arguments and database data.

Everything scales automatically, and it’s free to start.