ToplingDB is developed and maintained by Topling Inc. It is built on RocksDB. See ToplingDB Branch Name Convention.

ToplingDB's submodule rockside is the entry point of ToplingDB; see the SidePlugin wiki.

ToplingDB has many more key features than RocksDB:

- SidePlugin enables users to define DB configs in a json (or yaml) file

- The Embedded Http Server, a component of SidePlugin, enables users to view almost all DB info on the web

- The Embedded Http Server also enables users to change db/cf options and all db meta objects (such as MemTabFactory, TableFactory, WriteBufferManager, ...) online, without restarting the running process

- Many improvements and refactorings of RocksDB, aimed at performance and extensibility

- Topling transaction lock management, 5x faster than RocksDB's

- MultiGet with concurrent IO by fiber/coroutine + io_uring, much faster than RocksDB's async MultiGet

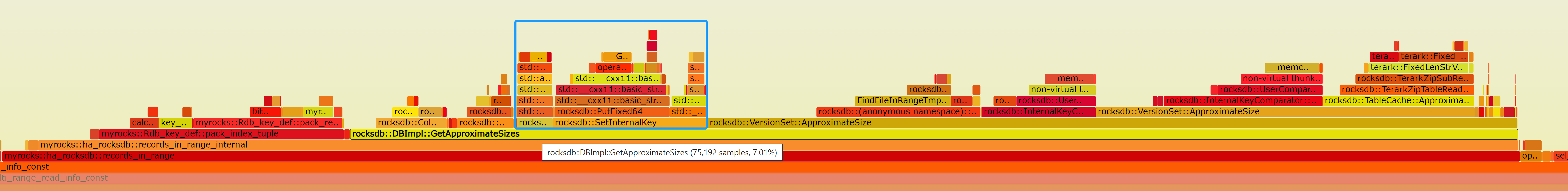

- Topling de-virtualization: de-virtualizes hotspot (virtual) functions and adds key prefix caches (see benchmarks)

- Topling zero copy for point search(Get/MultiGet) and Iterator

- Builtin SidePlugins for existing RocksDB components (Cache, Comparator, TableFactory, MemTableFactory, ...)

- Builtin Prometheus metrics support, based on the Embedded Http Server

- Many bugfixes for RocksDB; a small part of these fixes has been submitted as pull requests to upstream RocksDB

- MyTopling (MySQL on ToplingDB); see also MyTopling on aliyun

- Todis (Redis on ToplingDB)

With the SidePlugin mechanism, plugins/components can be physically separated from core ToplingDB:

- Can be compiled to a separate dynamic lib and loaded at runtime

- User code needs no changes; just change the json/yaml files

- Topling's non-open-source enterprise plugins/components are delivered in this way
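To illustrate the "just change json/yaml files" point, the sketch below runs one db_bench binary against several config files, and only the `-json` argument differs between runs. It is a minimal sketch, assuming bash, and assuming db_bench was built and the sample yaml files were copied as in the db_bench steps of this README; the function name `run_with_configs` and the `DB_BENCH` override variable are hypothetical, not part of ToplingDB.

```bash
# Hypothetical helper (not part of ToplingDB): run the same db_bench binary
# once per SidePlugin config file. Only -json changes between runs, so
# switching components needs no rebuild and no code change.
# DB_BENCH is a hypothetical override; it defaults to ./db_bench.
run_with_configs() {
  local bench="${DB_BENCH:-./db_bench}" conf
  for conf in "$@"; do
    echo "== $conf"
    "$bench" -json="$conf" -num=10000000 -disable_wal=true -value_size=20 \
      -benchmarks=fillrandom,readrandom -batch_size=10 || return 1
  done
}
```

For example, `run_with_configs db_bench_community.yaml db_bench_enterprise.yaml` compares both sample configs; the enterprise one only works if the private topling-rocks components were built in.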

```
toplingdb
 \__ sideplugin
      \__ rockside                 (submodule, sideplugin core and framework)
      \__ topling-zip              (auto clone, zip and core lib)
      \__ cspp-memtab              (auto clone, sideplugin component)
      \__ cspp-wbwi                (auto clone, sideplugin component)
      \__ topling-sst              (auto clone, sideplugin component)
      \__ topling-rocks            (auto clone, sideplugin component)
      \__ topling-zip_table_reader (auto clone, sideplugin component)
      \__ topling-dcompact         (auto clone, sideplugin component)
          \_ tools/dcompact        (dcompact-worker binary app)
```

| Repository | Permission | Description (and components) |
|---|---|---|
| ToplingDB | public | Top repository, forked from RocksDB with our fixes, refactorings and enhancements |
| rockside | public | This is a submodule that contains the SidePlugin core and framework |
| cspp-wbwi (WriteBatchWithIndex) | public | With CSPP and careful coding, CSPP_WBWI is 20x faster than RocksDB's SkipList-based WBWI |
| cspp-memtable | public | (CSPP is Crash Safe Parallel Patricia trie) MemTab, which outperforms SkipList in all aspects: 3x lower memory usage, 7x single-thread performance and perfect multi-thread scaling |
| topling-sst | public | 1. SingleFastTable (designed for L0 and L1) 2. VecAutoSortTable (designed for MyTopling bulk_load) 3. Deprecated ToplingFastTable and CSPPAutoSortTable |
| topling-dcompact | public | Distributed Compaction with a general dcompact_worker application; offloads compactions to elastic computing clusters and is much more powerful than RocksDB's Remote Compaction |
| topling-rocks | private | ToplingZipTable, an SST implementation optimized for RAM and SSD space, aimed at L2+ level compaction, which uses Topling's dedicated searchable in-memory data compression algorithms |
| topling-zip_table_reader | public | Lets community users read ToplingZipTable; the builder of ToplingZipTable is in topling-rocks |

To simplify compiling, repos are auto-cloned by ToplingDB's Makefile. Community users will auto-clone the public repos successfully but fail to auto-clone the private repo, so ToplingDB is built without private components; this is the so-called community version.

ToplingDB requires C++17; gcc 8.3 or newer is recommended, and clang also works.
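Before building, you may want to check that g++ meets the C++17 and gcc 8.3 recommendation. This is a minimal sketch, assuming bash and GNU coreutils (`sort -V` is a GNU extension); the function names are hypothetical, and clang users can skip the version part.

```bash
# Hypothetical helpers (not part of ToplingDB's build).

# Succeeds if version $1 >= version $2, using GNU `sort -V` version ordering.
version_at_least() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

# Checks that g++ is 8.3 or newer and that it accepts -std=c++17.
check_gcc_for_toplingdb() {
  local v
  v=$(g++ -dumpfullversion 2>/dev/null || g++ -dumpversion) || {
    echo "g++ not found" >&2
    return 1
  }
  if ! version_at_least "$v" 8.3; then
    echo "g++ $v is older than the recommended 8.3" >&2
    return 1
  fi
  # Parse (without linking) a small C++17 program read from stdin.
  if ! printf '#include <optional>\nint main() { std::optional<int> x; return x.has_value(); }\n' |
       g++ -std=c++17 -fsyntax-only -x c++ - 2>/dev/null; then
    echo "g++ $v cannot compile C++17 code" >&2
    return 1
  fi
  echo "g++ $v: OK"
}
```

`sort -V` is used instead of a plain string comparison so that, for example, 10.2 correctly ranks above 8.3.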

Even without ToplingZipTable, ToplingDB is much faster than upstream RocksDB:

```bash
sudo yum -y install git libaio-devel gcc-c++ gflags-devel zlib-devel bzip2-devel libcurl-devel liburing-devel
#sudo apt-get update -y && sudo apt-get install -y libjemalloc-dev libaio-dev libgflags-dev zlib1g-dev libbz2-dev libcurl4-gnutls-dev liburing-dev libsnappy-dev liblz4-dev libzstd-dev
git clone https://github.com/topling/toplingdb
cd toplingdb
make -j`nproc` db_bench DEBUG_LEVEL=0
# replace /path/to/dbdir with your db directory
cp sideplugin/rockside/src/topling/web/{style.css,index.html} /path/to/dbdir
cp sideplugin/rockside/sample-conf/db_bench_*.yaml .
export LD_LIBRARY_PATH=`find sideplugin -name lib_shared`
# change db_bench_community.yaml as you need:
# 1. use the default path (/dev/shm) if you have no fast disk (such as on a cloud server)
# 2. change max_background_compactions to your number of CPU cores
# 3. if you have permissions on github repo topling-rocks, you can use db_bench_enterprise.yaml
# 4. db_bench_community.yaml is faster than upstream RocksDB
# 5. db_bench_enterprise.yaml is much faster than db_bench_community.yaml
# command option -json accepts json and yaml files; yaml is used here because it is more human-readable
./db_bench -json=db_bench_community.yaml -num=10000000 -disable_wal=true -value_size=20 -benchmarks=fillrandom,readrandom -batch_size=10
# you can open http://127.0.0.1:2011 to see the web view
# you can see that this db_bench is much faster than RocksDB
```

For performance and simplicity, ToplingDB disables some RocksDB features by default:

| Feature | Control MACRO |
|---|---|
| Dynamic creation of ColumnFamily | ROCKSDB_DYNAMIC_CREATE_CF |
| User level timestamp on key | TOPLINGDB_WITH_TIMESTAMP |
| Wide Columns | TOPLINGDB_WITH_WIDE_COLUMNS |

Note: Dynamic creation of ColumnFamily is not supported by SidePlugin

To enable these features, add -D${MACRO_NAME} to the variable EXTRA_CXXFLAGS. For example, to build ToplingDB for Java with dynamic ColumnFamily:

```bash
make -j`nproc` EXTRA_CXXFLAGS='-DROCKSDB_DYNAMIC_CREATE_CF' rocksdbjava
```
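Several features can be enabled at once by putting several -D flags into EXTRA_CXXFLAGS. Because the compiler silently accepts a misspelled -D flag, a small wrapper that checks names against the feature table may help. This is a hypothetical helper (the function name is not part of ToplingDB), assuming bash; its macro list is copied from the table.

```bash
# Hypothetical helper (not part of ToplingDB): turn feature macro names into
# an EXTRA_CXXFLAGS value, rejecting names that are not in the feature table.
toplingdb_extra_cxxflags() {
  local known=" ROCKSDB_DYNAMIC_CREATE_CF TOPLINGDB_WITH_TIMESTAMP TOPLINGDB_WITH_WIDE_COLUMNS "
  local flags=() name
  for name in "$@"; do
    case "$known" in
      *" $name "*) flags+=("-D$name") ;;
      *) echo "unknown feature macro: $name" >&2; return 1 ;;
    esac
  done
  # "${flags[*]}" joins the flags with single spaces (the first char of IFS).
  printf '%s\n' "${flags[*]}"
}
```

Usage, so that `make` only runs when every name is valid: `flags=$(toplingdb_extra_cxxflags ROCKSDB_DYNAMIC_CREATE_CF TOPLINGDB_WITH_WIDE_COLUMNS) && make -j$(nproc) EXTRA_CXXFLAGS="$flags" rocksdbjava`.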

To conform to open source licensing, the following term disallowing bytedance has been deleted as of 2023-04-24; that is to say, bytedance using ToplingDB is no longer illegal and is not a shame.

> We disallow bytedance using this software, other terms are identical with upstream rocksdb license, see LICENSE.Apache, COPYING and LICENSE.leveldb.

The terms of disallowing bytedance are also deleted in LICENSE.Apache, COPYING and LICENSE.leveldb.

RocksDB is developed and maintained by the Facebook Database Engineering Team. It is built on earlier work on LevelDB by Sanjay Ghemawat and Jeff Dean.

This code is a library that forms the core building block for a fast key-value server, especially suited for storing data on flash drives. It has a Log-Structured-Merge-Database (LSM) design with flexible tradeoffs between Write-Amplification-Factor (WAF), Read-Amplification-Factor (RAF) and Space-Amplification-Factor (SAF). It has multi-threaded compactions, making it especially suitable for storing multiple terabytes of data in a single database.

Start with example usage here: https://github.com/facebook/rocksdb/tree/main/examples

See the github wiki for more explanation.

The public interface is in include/. Callers should not include or rely on the details of any other header files in this package. Those internal APIs may be changed without warning.

Questions and discussions are welcome on the RocksDB Developers Public Facebook group and email list on Google Groups.

RocksDB is dual-licensed under both the GPLv2 (found in the COPYING file in the root directory) and Apache 2.0 License (found in the LICENSE.Apache file in the root directory). You may select, at your option, one of the above-listed licenses.