Use SQL to query clusters, jobs, users, and more from Databricks.

- Get started
- Documentation: Table definitions & examples
- Community: Join #steampipe on Slack
- Get involved: Issues

Download and install the latest Databricks plugin:

```sh
steampipe plugin install databricks
```

Configure your credentials and config file.

Configure your account details in ~/.steampipe/config/databricks.spc:

```hcl
connection "databricks" {
  plugin = "databricks"

  # A connection profile specified within .databrickscfg to use instead of DEFAULT.
  # This can also be set via the `DATABRICKS_CONFIG_PROFILE` environment variable.
  # profile = "databricks-dev"

  # The target Databricks account ID.
  # This can also be set via the `DATABRICKS_ACCOUNT_ID` environment variable.
  # See "Locate your account ID": https://docs.databricks.com/administration-guide/account-settings/index.html#account-id
  # account_id = "abcdd0f81-9be0-4425-9e29-3a7d96782373"

  # The target Databricks account SCIM token.
  # See: https://docs.databricks.com/administration-guide/account-settings/index.html#generate-a-scim-token
  # This can also be set via the `DATABRICKS_TOKEN` environment variable.
  # account_token = "dsapi5c72c067b40df73ccb6be3b085d3ba"

  # The target Databricks account console URL, which is typically https://accounts.cloud.databricks.com.
  # This can also be set via the `DATABRICKS_HOST` environment variable.
  # account_host = "https://accounts.cloud.databricks.com/"

  # The target Databricks workspace Personal Access Token.
  # This can also be set via the `DATABRICKS_TOKEN` environment variable.
  # See: https://docs.databricks.com/dev-tools/auth.html#databricks-personal-access-tokens-for-users
  # workspace_token = "dapia865b9d1d41389ed883455032d090ee"

  # The target Databricks workspace URL.
  # See: https://docs.databricks.com/workspace/workspace-details.html#workspace-url
  # This can also be set via the `DATABRICKS_HOST` environment variable.
  # workspace_host = "https://dbc-a1b2c3d4-e6f7.cloud.databricks.com"

  # The username for Databricks basic authentication. Only supported when the host is *.cloud.databricks.com (AWS).
  # This can also be set via the `DATABRICKS_USERNAME` environment variable.
  # username = "[email protected]"

  # The password for Databricks basic authentication. Only supported when the host is *.cloud.databricks.com (AWS).
  # This can also be set via the `DATABRICKS_PASSWORD` environment variable.
  # password = "password"

  # A non-default location of the Databricks CLI credentials file.
  # This can also be set via the `DATABRICKS_CONFIG_FILE` environment variable.
  # config_file_path = "/Users/username/.databrickscfg"

  # OAuth client ID of a service principal.
  # This can also be set via the `DATABRICKS_CLIENT_ID` environment variable.
  # client_id = "123-456-789"

  # OAuth client secret of a service principal.
  # This can also be set via the `DATABRICKS_CLIENT_SECRET` environment variable.
  # client_secret = "dose1234567789abcde"
}
```

Or through environment variables:

```sh
export DATABRICKS_CONFIG_PROFILE=user1-test
export DATABRICKS_TOKEN=dsapi5c72c067b40df73ccb6be3b085d3ba
export DATABRICKS_HOST=https://accounts.cloud.databricks.com
export DATABRICKS_ACCOUNT_ID=abcdd0f81-9be0-4425-9e29-3a7d96782373
export [email protected]
export DATABRICKS_PASSWORD=password
export DATABRICKS_CLIENT_ID=123-456-789
export DATABRICKS_CLIENT_SECRET=dose1234567789abcde
```

Run steampipe:

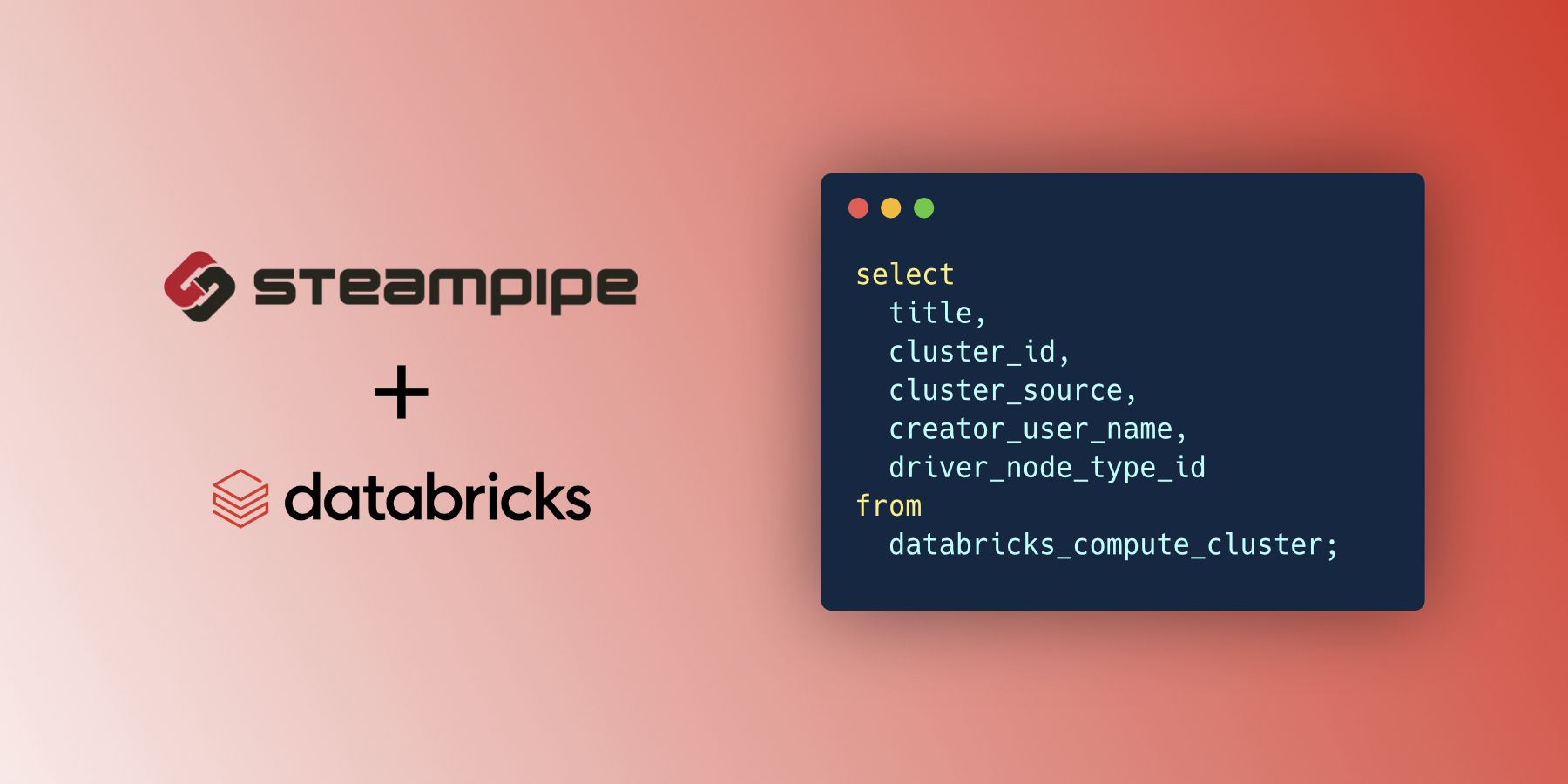

```sh
steampipe query
```

List details of your Databricks clusters:

```sql
select
  cluster_id,
  title,
  cluster_source,
  creator_user_name,
  driver_node_type_id,
  node_type_id,
  state,
  start_time
from
  databricks_compute_cluster;
```

```
+----------------------+--------------------------------+----------------+-------------------+---------------------+--------------+------------+---------------------------+
| cluster_id           | title                          | cluster_source | creator_user_name | driver_node_type_id | node_type_id | state      | start_time                |
+----------------------+--------------------------------+----------------+-------------------+---------------------+--------------+------------+---------------------------+
| 1234-141524-10b6dv2h | [default]basic-starter-cluster | "API"          | [email protected] | i3.xlarge           | i3.xlarge    | TERMINATED | 2023-07-21T19:45:24+05:30 |
| 1234-061816-mvns8mxz | test-cluster-for-ml            | "UI"           | [email protected] | i3.xlarge           | i3.xlarge    | TERMINATED | 2023-07-28T11:48:16+05:30 |
+----------------------+--------------------------------+----------------+-------------------+---------------------+--------------+------------+---------------------------+
```
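The same query can also be run non-interactively, for example from a script or CI job, by passing the SQL as an argument to `steampipe query` together with `--output csv`. The sketch below wraps that call in a hypothetical shell function, `list_databricks_clusters_csv`; the function name and the selected columns are illustrative choices, and it assumes the `steampipe` CLI and the Databricks plugin are already installed and configured.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper (not part of the plugin): runs a cluster query
# non-interactively and prints the result as CSV.
# Assumes the `steampipe` CLI is on PATH and the plugin is configured.
list_databricks_clusters_csv() {
  # Fail early with a clear message if the CLI cannot be found.
  if ! command -v steampipe >/dev/null 2>&1; then
    echo "steampipe not found on PATH" >&2
    return 1
  fi

  # Pass the SQL as a single argument; --output csv selects CSV output.
  steampipe query \
    "select cluster_id, title, state, start_time from databricks_compute_cluster order by start_time desc" \
    --output csv
}
```

Redirecting the output, for example `list_databricks_clusters_csv > clusters.csv`, gives a file that other scripts or spreadsheet tools can consume; other `--output` values such as `json` can be swapped in the same way.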

This plugin is available for the following engines:

| Engine | Description |
|---|---|
| Steampipe | The Steampipe CLI exposes APIs and services as a high-performance relational database, giving you the ability to write SQL-based queries to explore dynamic data. Mods extend Steampipe's capabilities with dashboards, reports, and controls built with simple HCL. The Steampipe CLI is a turnkey solution that includes its own Postgres database, plugin management, and mod support. |
| Postgres FDW | Steampipe Postgres FDWs are native Postgres Foreign Data Wrappers that translate APIs to foreign tables. Unlike the Steampipe CLI, which ships with its own Postgres server instance, the Steampipe Postgres FDWs can be installed in any supported Postgres database version. |
| SQLite Extension | Steampipe SQLite Extensions provide SQLite virtual tables that translate your queries into API calls, transparently fetching information from your API or service as you request it. |
| Export | Steampipe Plugin Exporters provide a flexible mechanism for exporting information from cloud services and APIs. Each exporter is a stand-alone binary that allows you to extract data using Steampipe plugins without a database. |
| Turbot Pipes | Turbot Pipes is the only intelligence, automation & security platform built specifically for DevOps. Pipes provides hosted Steampipe database instances, shared dashboards, snapshots, and more. |

Prerequisites:

Clone:

```sh
git clone https://github.com/turbot/steampipe-plugin-databricks.git
cd steampipe-plugin-databricks
```

Build, which automatically installs the new version to your `~/.steampipe/plugins` directory:

```sh
make
```

Configure the plugin:

```sh
cp config/* ~/.steampipe/config
vi ~/.steampipe/config/databricks.spc
```
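Note that `cp config/* ~/.steampipe/config` overwrites any `databricks.spc` you have already customized. If that matters, a hypothetical helper like the one below copies each sample file only when the destination does not already have a file of that name; the function name `copy_sample_configs` and its messages are illustrative, not part of the repository's tooling.

```shell
#!/usr/bin/env bash
# Hypothetical alternative to `cp config/* ~/.steampipe/config`:
# copies each sample config file only if the destination does not
# already contain a file with the same name, so local edits survive.
copy_sample_configs() {
  local src_dir="$1" dest_dir="$2" f target
  mkdir -p "$dest_dir"
  for f in "$src_dir"/*; do
    # Skip subdirectories and the literal pattern left by an empty glob.
    [ -f "$f" ] || continue
    target="$dest_dir/$(basename "$f")"
    if [ -e "$target" ]; then
      echo "skipping $target (already exists)"
    else
      cp "$f" "$target"
      echo "copied $f -> $target"
    fi
  done
}
```

From the repository root this would be run as `copy_sample_configs config ~/.steampipe/config`, after which the copied file can be edited with `vi` as before.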

Try it!

```sh
steampipe query
> .inspect databricks
```
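When testing a local build, queries that fail to authenticate are often caused by credentials missing from, or unexpectedly present in, the shell that launches Steampipe. The hypothetical helper below lists which of the `DATABRICKS_*` environment variables described in the configuration section are set, hiding the values of tokens, passwords, and client secrets. It only inspects the environment; it does not reproduce the plugin's own rules for choosing between config file arguments, profiles, and environment variables.

```shell
#!/usr/bin/env bash
# Hypothetical diagnostic helper (not part of the plugin): reports which
# DATABRICKS_* variables are set. Secret values are never printed.
report_databricks_env() {
  local name value
  for name in DATABRICKS_CONFIG_PROFILE DATABRICKS_CONFIG_FILE DATABRICKS_HOST \
              DATABRICKS_ACCOUNT_ID DATABRICKS_TOKEN DATABRICKS_USERNAME \
              DATABRICKS_PASSWORD DATABRICKS_CLIENT_ID DATABRICKS_CLIENT_SECRET; do
    # Bash indirect expansion: read the variable whose name is in $name.
    value="${!name:-}"
    if [ -z "$value" ]; then
      printf '%s: (unset)\n' "$name"
    else
      case "$name" in
        DATABRICKS_TOKEN|DATABRICKS_PASSWORD|DATABRICKS_CLIENT_SECRET)
          printf '%s: set (hidden)\n' "$name" ;;
        *)
          printf '%s: %s\n' "$name" "$value" ;;
      esac
    fi
  done
}
```

Because `DATABRICKS_TOKEN` and `DATABRICKS_HOST` can each hold either account-level or workspace-level values, checking them this way can help spot a host and token that point at different targets.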

Further reading:

This repository is published under the Apache 2.0 (source code) and CC BY-NC-ND (docs) licenses. Please see our code of conduct. We look forward to collaborating with you!

Steampipe is a product produced from this open source software, exclusively by Turbot HQ, Inc. It is distributed under our commercial terms. Others are allowed to make their own distribution of the software, but cannot use any of the Turbot trademarks, cloud services, etc. You can learn more in our Open Source FAQ.

Want to help but don't know where to start? Pick up one of the help wanted issues: