unagiootoro / ruby-dnn

ruby-dnn is a Ruby deep learning library.

License: MIT License

Hi @unagiootoro

I ran the XOR sample and found that Cumo was slower than Numo.

If you don't mind my asking, do you have a GPU + CUDA environment?

If you don't have a GPU, someone in the Ruby community (including me) will support you with a donation...

Hello.

I recently introduced ruby-dnn on Reddit.

The Reddit comment was "improve the documentation".

I'm not an engineer or a programmer.

I don't really understand deep learning / neural networks.

But I think the concept of ruby-dnn is nice.

Please polish the README. A clearer README will encourage more people to use the library.

I think the following list is useful.

https://github.com/matiassingers/awesome-readme

Flux.jl

https://github.com/FluxML/Flux.jl

Reddit

https://www.reddit.com/r/ruby/comments/bwnely/rubydnn/

Hi.

I think ruby-dnn should use LF line breaks.

ruby-dnn uses Windows (CR LF) line breaks.

When you open a file in vim, it looks like this:

You see ^M at the end of every line. ^M means CR (carriage return).

This is not a bug.

But cross-platform open source projects usually use LF line breaks.

Potential contributors must configure their editor to use CR LF for the ruby-dnn project.

Again, this is not a mistake. You can leave the line breaks as they are.

But that would be a disadvantage in attracting users and contributors.
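
For anyone who wants to try the conversion locally, here is a minimal sketch in plain Ruby. The helper names (`crlf?`, `normalize_newlines`, `convert_file!`) are hypothetical and not part of ruby-dnn; the sketch only assumes that files should end up with LF line breaks.

```ruby
# Minimal sketch: detect and normalize CR LF line breaks.
# All helper names here are hypothetical, not part of ruby-dnn.

# True if the text contains at least one Windows-style (CR LF) line break.
def crlf?(text)
  text.include?("\r\n")
end

# Replace every CR LF pair with a single LF.
def normalize_newlines(text)
  text.gsub("\r\n", "\n")
end

# Rewrite a file in place, only if it actually contains CR LF.
# Binary read/write avoids any implicit newline translation on Windows.
def convert_file!(path)
  original = File.binread(path)
  converted = normalize_newlines(original)
  File.binwrite(path, converted) unless converted == original
end

sample = "require \"dnn\"\r\nputs \"hello\"\r\n"
puts crlf?(sample)
puts crlf?(normalize_newlines(sample))
```

Git can also enforce this for every contributor: a `.gitattributes` file containing `* text=auto eol=lf` asks Git to check text files out with LF line breaks.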

RuboCop flags this line as unclear:

examples/dcgan/dcgan.rb:132:14: W: Lint/Void: Operator + used in void context.

dis_loss + @dis.train_on_batch(images, y_fake)

ruby-dnn/examples/dcgan/dcgan.rb

Lines 124 to 139 in 5114f1e
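
To illustrate what the warning means, here is a small sketch in plain Ruby. It assumes the intent was to accumulate the loss with `+=`; that is a guess, and the method names and sample loss values below are hypothetical stand-ins for the training calls.

```ruby
# Sketch of the Lint/Void problem, using made-up loss values.
# Method names are hypothetical; they only mimic the shape of the flagged line.

# The `+` expression is evaluated and its result thrown away,
# so the returned loss never includes second_loss.
def loss_with_void_expression(first_loss, second_loss)
  dis_loss = first_loss
  dis_loss + second_loss # void context: result is discarded
  dis_loss
end

# Assumed fix: compound assignment keeps the sum.
def loss_with_compound_assignment(first_loss, second_loss)
  dis_loss = first_loss
  dis_loss += second_loss
  dis_loss
end

puts loss_with_void_expression(0.5, 0.25)
puts loss_with_compound_assignment(0.5, 0.25)
```

If the second loss is not supposed to be added at all, the alternative fix is to drop the `dis_loss +` prefix and keep only the training call.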

Hi,

Thanks for creating this package. I am very happy to see a native ruby implementation for DNNs. I have been playing around with it to use in a machine learning class I am teaching.

I have a dataset with two different categorical features and I would like to use one Embedding for each feature. Some other features in my dataset are numeric. In other packages there is a way to slice along an axis, apply the embedding, and then concatenate. I can't figure out how to do this. There is code for an Embedding layer but no examples.

Also, can you show how to embed features into multiple dimensions?

    class MyModel < Model
      ##...
      def forward x
        e1 = @embed1.(x) # Slice
        e2 = @embed2.(x) # Slice
        x = Concatenate.(e1, e2, axis: 1)
        x = @dense.(x)
      end
    end

My data looks like this:

48.0, 2.0595, 1.0, 2.0, 1.0, 0.0

where the last two features are to be embedded and the rest are numeric.

Thanks!
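
To make the request concrete, the slice, embed, and concatenate steps can be sketched in plain Ruby arrays. This is not ruby-dnn API: the embedding tables, their sizes (two categories each, mapped to 3 and 2 dimensions), and all constant and method names are assumptions chosen for illustration, and a real model would learn the table values during training.

```ruby
# Conceptual sketch only: plain Ruby arrays, no ruby-dnn calls.
# Table contents and sizes are made up for illustration.

# One row of weights per category index.
EMBED1 = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]].freeze # 2 categories -> 3 dims
EMBED2 = [[1.0, 1.1], [2.0, 2.1]].freeze           # 2 categories -> 2 dims

NUMERIC_COLUMNS = (0...4).freeze # first four columns are numeric
CAT1_COLUMN = 4                  # fifth column: first categorical feature
CAT2_COLUMN = 5                  # sixth column: second categorical feature

# Slice the row, look up one embedding vector per categorical column,
# then concatenate everything into a single feature vector.
def embed_row(row)
  numeric = row[NUMERIC_COLUMNS]
  e1 = EMBED1.fetch(row[CAT1_COLUMN].to_i)
  e2 = EMBED2.fetch(row[CAT2_COLUMN].to_i)
  numeric + e1 + e2
end

row = [48.0, 2.0595, 1.0, 2.0, 1.0, 0.0]
p embed_row(row)
```

Each categorical value becomes a vector whose width is the number of columns in its table, which is what "embedding into multiple dimensions" means here: 4 numeric values plus 3 and 2 embedded values give a 9-element input for the dense layer.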

How about creating an original character for ruby-dnn? A logo has great power to attract people. I like drawing pictures. When I saw your Twitter, I felt that you also like drawing pictures.

(After you create a character concept, you can also order a logo from a designer.)

References:

yoshoku/rumale#4

New to Ruby. Only done web scraping using the same. But hopefully this can be considered. Nice work with the library though.

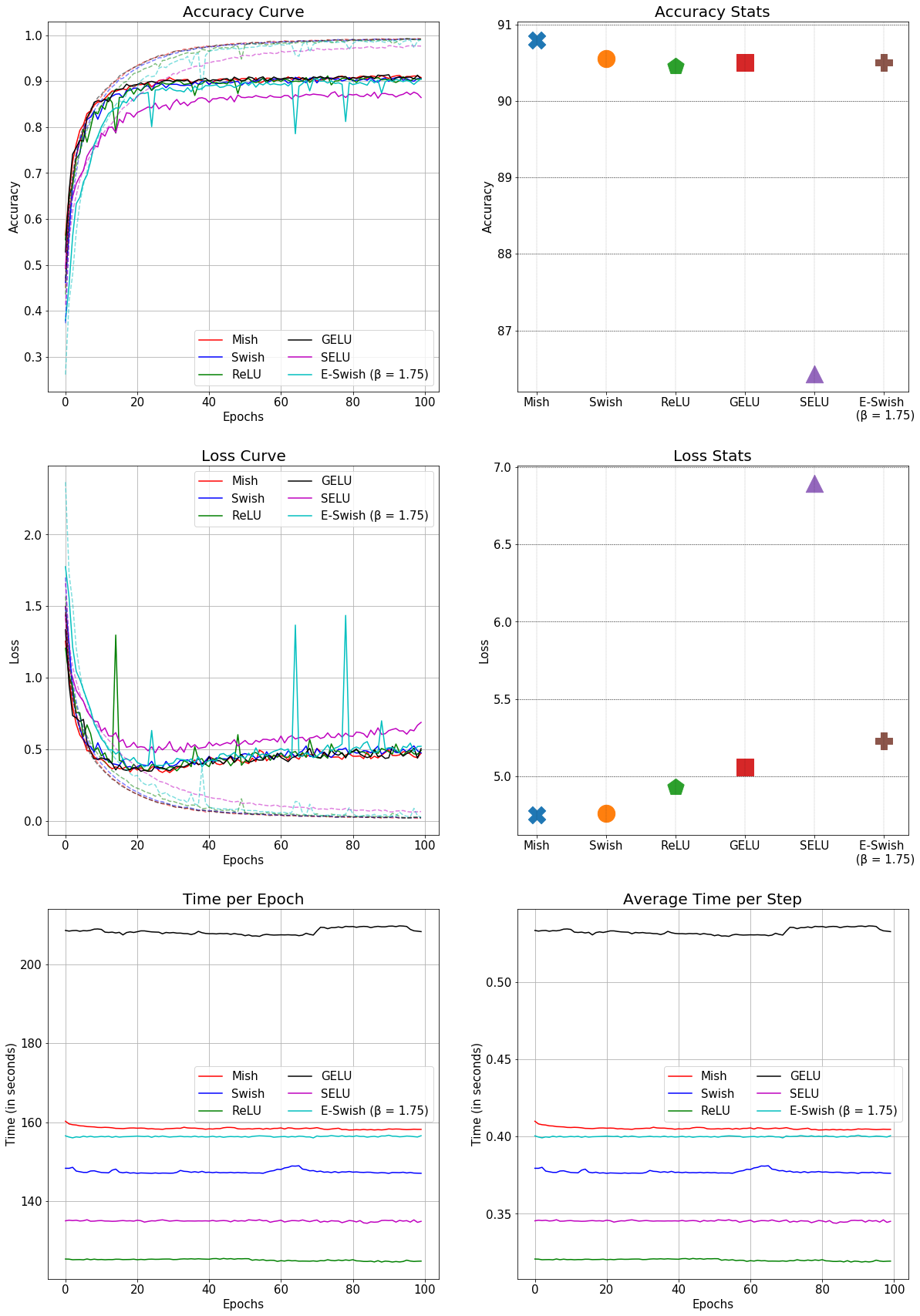

Mish is a novel activation function proposed in this paper.

It has shown promising results so far and has been adopted in several packages including:

All benchmarks, analysis and links to official package implementations can be found in this repository.

It would be nice to have Mish as an option within the activation function group.
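
For reference, Mish is defined as x * tanh(softplus(x)), where softplus(x) = ln(1 + e^x). A minimal scalar sketch in plain Ruby follows; it is not a ruby-dnn layer, and the method names are chosen only for this illustration.

```ruby
# Scalar sketch of the Mish activation; not a ruby-dnn layer.

# softplus(x) = ln(1 + e^x)
def softplus(x)
  Math.log(1.0 + Math.exp(x))
end

# mish(x) = x * tanh(softplus(x))
def mish(x)
  x * Math.tanh(softplus(x))
end

[-2.0, 0.0, 1.0, 3.0].each do |x|
  puts "mish(#{x}) = #{mish(x)}"
end
```

A layer implementation would apply the same formula element-wise to a Numo array and also define the gradient for backpropagation, which this sketch leaves out.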

This is the comparison of Mish with other conventional activation functions in a SEResNet-50 for CIFAR-10:

magro created by @yoshoku may be useful.

https://github.com/yoshoku/magro
