LTSmin started out as a generic toolset for manipulating labelled transition systems. Meanwhile the toolset was extended to a a full (LTL/CTL/μ-calculus) model checker, while maintaining its language-independent characteristics.

To obtain its input, LTSmin connects a sizeable number of existing (verification) tools: muCRL, mCRL2, DiVinE, SPIN (SpinS), UPPAAL (opaal), SCOOP, PNML, ProB and CADP. Moreover, it allows to reuse existing tools with new state space generation techniques by exporting LTSs into various formats.

Implementing support for a new language module is in the order of 200--600 lines of C "glue" code, and automatically yields tools for standard reachability checking (e.g., for state space generation and verification of safety properties), reachability with symbolic state storage (vector set), fully symbolic (BDD-based) reachability, distributed reachability (MPI-based), and multi-core reachability (including multi-core compression and incremental hashing).

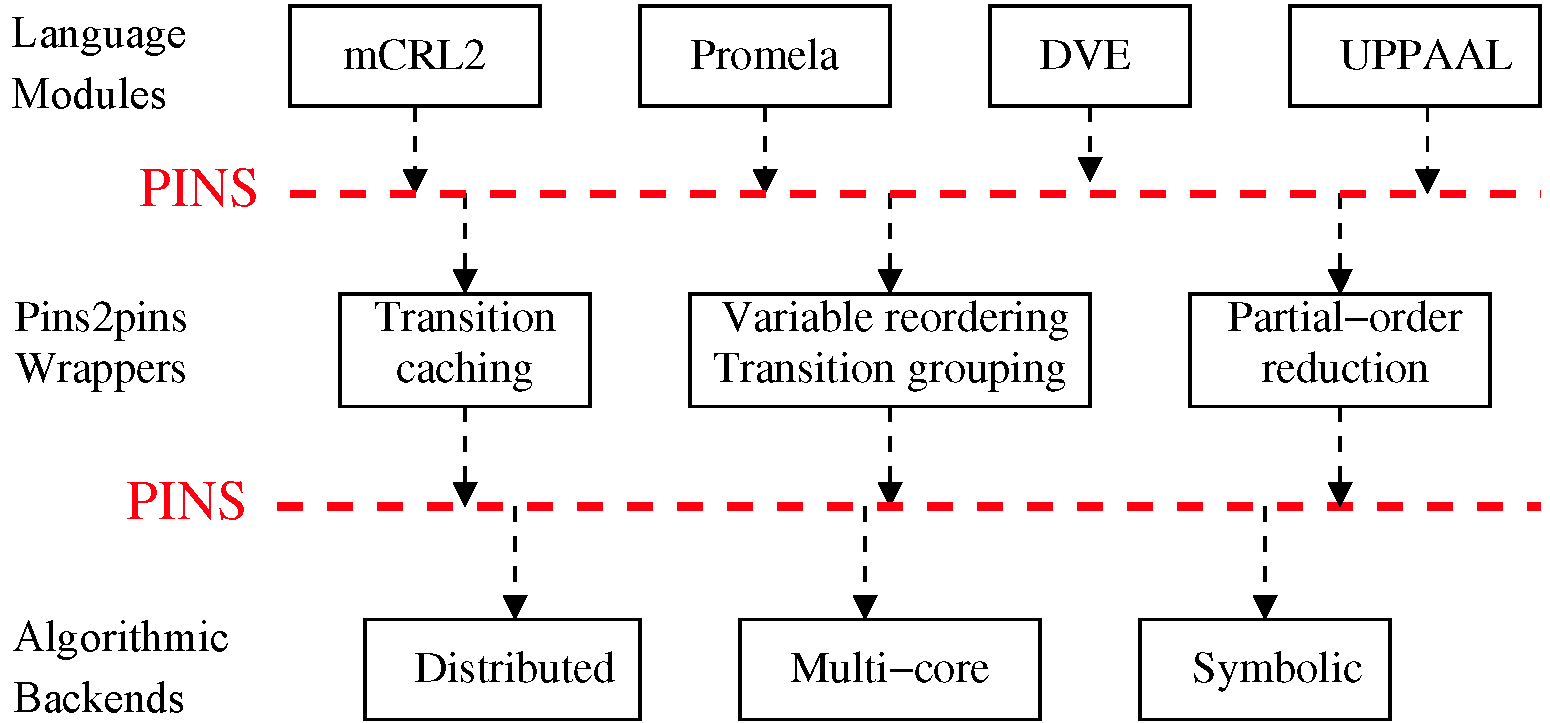

The synergy effects in the LTSmin implementation are enabled by a clean interface: all LTSmin modules work with a unifying state representation of integer vectors of fixed size, and the PINS dependency matrix which exploits the combinatorial nature of model checking problems. This splits model checking tools into three mostly independent parts: language modules, PINS optimizations, and model checking algorithms.

On the other hand, the implementation of a verification algorithm based on the PINS matrix automatically has access to muCRL, mCRL2, DVE, PROMELA, SCOOP, UPPAAL xml, PNML, ProB, and ETF language modules.

Finally, all tools benefit from PINS2PINS optimizations, like local transition caching (which speeds up slow state space generators), matrix regrouping (which can drastically reduce run-time and memory consumption of symbolic algorithms), partial order reduction and linear temporal logic.

LTSmin offers different analysis algorithms covering four disciplines (algorithmic backends):

- Symbolic CTL/mu-calculus model checking using different BDD/MDD packages, including the parallel BDD package Sylvan,

- Multi-core LTL model checking using tree compression,

- Sequential LTL model checking, with partial-order reduction and optional BDD-based state storage, and

- Distributed LTS instantiation, export, and minimization modulo branching/strong bisimulation.

The PINS interface divides our model checking tools cleanly into the two These algorithms each have their own strength, depending on the input model's structure and verification problem. For models with combinatorial state structure, the symbolic tools can process billions of states per second using only few memory. Models that exhibit much concurrency can be reduced significantly using partial-order reduction. Models with more dependencies can be explored with LTSmin's multi-core algorithms, which employ aggressive lossless state compression using a concurrent tree data structure. Finally, our distributed minimization techniques can aid the verification of multiple properties on a single state space.

LTSmin supports language independence via its definition of a Partitioned Next-State Interface (PINS), which exposes enough internal structure of the input model to enable the highly effective algorithms above, while at the same time making it easy to connect your own language module. The interface is also simple enough to support very fast next-state functions such as SPIN's (performance comparison here). LTSmin already connects a sizeable number of existing verification tools as language modules, enabling the use of their modeling formalisms:

- muCRL's process algebra,

- mCRL2's process algebra,

- DiVinE's DVE language, based on extended state machines,

- the builtin symbolic ETF format,

- SPIN's Promela via the included SpinS, a SpinJa spinoff,

- UPPAAL's timed automata via opaal,

- SCOOP's process algebra for Markov Automata,

- Ordinary place/transition Petri nets in PNML format,

- B, Event-B, TLA+, and Z through ProB, and

- POSIX'/UNIX' dlopen API.

Moreover, LTSmin supports multiple export formats for state spaces, which allows interoperability with other tools like CADP.

The Partitioned Next-State Interface (PINS) splits up the next-state function in different groups. For example, each transition group can represent a line of code in an imperative language module or a summand in a process-algebraic language module. Using the static dependency information between transition groups and state vector variables (most groups only depend on a few variables), LTSmin's algorithms can exploit the combinatorial structure of the state space. This leads to exponential gains in performance for the symbolic algorithms, which can now learn the transition relation on-the-fly in a very effectively way, because it is partitioned. Using the same principle, LTSmin provides transition storing for transition caching with negligible memory overhead.

To connect a new language module, one merely needs to implement the PINS next-state functions and provide some type information on the state vector contents, which should be encoded according to PINS unifying integer vector format. By providing additional transition/state dependency information via the PINS dependency matrices, the symbolic exploration algorithms and PINS2PINS modules (see below) can exploit their full potential. Finally, by providing few additional information on transition guards the partial order reduction algorithms become enabled.

The PINS interface divides our model checking tools cleanly into the two independent parts discussed above: language modules and model checking algorithms. However it also enables us to create PINS2PINS modules, that reside between the language module and the algorithm, and modify or optimize the next-state function. These PINS2PINS modules can benefit all algorithmic backends and can be turned on and off on demand:

- transition storing/caching speeds up slow language modules,

- regrouping speeds up the symbolic algorithms by optimizing dependencies, and

- partial order reduction reduces the state space by dropping irrelevant transitions.

Furthermore, we implement linear temporal logic (LTL) as a PINS2PINS module, which is automatically turned on when an LTL formula is supplied and transforms the state space on-the-fly by calculating the cross product with the formula.

If you were to refer to the LTSmin toolset in your academic paper, we would appreciate if you would use one of the following references (depending on the part(s) of the toolset that you are referring to):

- Gijs Kant, Alfons Laarman, Jeroen Meijer, Jaco van de Pol, Stefan Blom and Tom van Dijk: LTSmin: High-Performance Language-Independent Model Checking. TACAS 2015

- Alfons Laarman, Jaco van de Pol and Michael Weber. Multi-Core LTSmin: Marrying Modularity and Scalability. NFM 2011

- Stefan Blom, Jaco van de Pol and Michael Weber. LTSmin: Distributed and Symbolic Reachability. CAV 2010

Ordered by the number of commits (January 2017) LTSmin's contributors are:

- Alfons Laarman,

- Michael Weber,

- Jeroen Meijer,

- Stefan Blom,

- Jeroen Ketema,

- Tom van Dijk,

- Elwin Pater,

- Gijs Kant,

- Vincent Bloemen

- Jaco van de Pol,

- Philipp Körner

- Mads Chr. Olesen,

- Freark van der Berg,

- Steven van der Vegt,

- Andreas Dalsgaard,

- Jeroen Keiren,

- Tobias Uebbing,

- Axel Belinfante,

- Sander van Schouwenburg,

- Tien-Loong Siaw and

- Dennis Guck

- ltsmin (LTSmin's main man page)

- lps2lts-sym (BDD-based reachability with mCRL2 frontend)

- dve2lts-mc (multi-core reachability with DiVinE 2 frontend)

- prom2lts-mc (multi-core reachability with Promela SpinS frontend)

- lpo2lts-seq (sequential enumerative reachability with muCRL frontend)

- etf2lts-dist (distributed reachability with ETF frontend)

- lps2torx (TorX testing tool connector with mCRL2 frontend)

- pbes2lts-sym (Symbolic reachability tool with PBES frontend: example)

- pins2lts-sym (Symbolic reachability tool using the dlopen API: tutorial)

- mapa2lts-sym (Symbolic reachability tool with MAPA frontend)

- pnml2lts-sym (Symbolic reachability tool with PNML frontend)

- prob2lts-sym (Symbolic reachability tool with ProB frontend)

More manpages can be found at here.

- GNU/Linux (tested on Arch Linux, Ubuntu, Debian, OpenSuSE 11.2 and Red Hat Enterprise Linux 6)

- MacOS X, version 10.10 "Yosemite"

- MacOS X, version 10.7 "Lion"

- MacOS X, version 10.6 "Snow Leopard" (no multi-core muCRL/mCRL2)

- MacOS X, version 10.5 "Leopard" (no multi-core muCRL/mCRL2)

- Cygwin/Windows (tested on Windows 7 with Cygwin 1.7)

- Windows (native, with MinGW/MSYS)

For the use of the multi-core BDD package Sylvan and the multi-core

reachability algorithms (*2lts-mc), we further recommend using a 64-bit OS.

If you are building the software from a Git repository rather than a release tarball, refer to Section Building from a Git Repository for additional set-up instructions. Then install the dependencies listed in Section Build Dependencies below.

# Unpack the tarball

$ tar xvzf ltsmin-<version>.tar.gz

$ cd ltsmin-<version>

# Configure

$ ./configure --disable-dependency-tracking --prefix /path/

If you are building a Windows target with MinGW you need to pass additional variables and flags to ./configure:

- You need to set the correct C compiler:

CC=x86_64-w64-mingw32-gcc, - You need to set the correct C++ compiler with the correct threading model:

CXX=x86_64-w64-mingw32-g++-posix, - You need to set the correct host triplet:

--host=--host=x86_64-w64-mingw32. - You need to add additional linker flags:

LDFLAGS='-static-libgcc -static-libstdc++ -Wl,-Bstatic,--whole-archive -Wl,-lwinpthread -L/path/to/libmman.a -lmman -Wl,--no-whole-archive'.

So the whole commanline becomes:

$ ./configure CC=x86_64-w64-mingw32-gcc CXX=x86_64-w64-mingw32-g++-posix --host=x86_64-w64-mingw32 LDFLAGS='-static-libgcc -static-libstdc++ -Wl,-Bstatic,--whole-archive -Wl,-lwinpthread -L/path/to/libmman.a -lmman -Wl,--no-whole-archive' PKG_CONFIG_PATH=/path/to/Windows/packages/lib/pkgconfig --disable-dependency-tracking --prefix /path/

Note that this example assumes the MinGW compiler that is shipped by default with Debian/Ubuntu, hence the host triplet is x86_64-w64-mingw32. If you are using MXE, the host triplet to use is x86_64-w64-mingw32.static, and you may omit specifying the variables CC, and CXX. However, you must specify the location of pkgconf packages using PKG_CONFIG_PATH_x86_64_w64_mingw32_static, instead of PKG_CONFIG_PATH.

If you have installed pkgconf in a non-standard location, you may

need to specify the location of the pkg-config utility, e.g.:

$ ./configure --disable-dependency-tracking --prefix /path/ PKG_CONFIG="/path/to/pkg-config"

If you have installed dependencies (e.g. Sylvan) in a non-standard location,

you may need to tell pkgconf to search for *.pc files in this non standard

location. E.g. if Sylvan is installed in /opt/local/ and Sylvan's sylvan.pc

is located in /opt/local/lib/pkgconfig,

you need to set PKG_CONFIG_PATH to /opt/local/lib/pkgconfig:

$ ./configure --disable-dependency-tracking --prefix /path/ PKG_CONFIG_PATH="/opt/local/lib/pkgconfig"

Note that as usual, you can separate multiple paths to *.pc files with :.

It is a good idea to check the output of ./configure, to see whether all dependencies were found.

# Build

$ make

# Install

$ make install

You can also run tests with

# Run tests

$ make check

For one-shot builds, the following option speeds up the build process by not recording dependencies:

./configure --disable-dependency-tracking ...

Non-standard compilers, etc., can be configured by using variables:

./configure CFLAGS='-O3 -m64' MPICC=/sw/openmpi/1.2.8/bin/mpicc ...

This would add some options to the standard CFLAGS settings used for building, to enable more optimizations and force a 64-bit build (for the GCC C compiler). Furthermore, the MPI compiler wrapper is set explicitly instead of searching it in the current shell PATH.

Note that libraries installed in non-standard places need special attention: to be picked up by the configure script, library and header search paths must be added, e.g.:

./configure LDFLAGS=-L/opt/local/lib CPPFLAGS=-I/opt/local/include

Additional setting of (DY)LD_LIBRARY_PATH might be needed for the dynamic linker/loader (see, e.g., "man ld.so" or "man dyld").

See ./configure --help for the list of available variables,

and file INSTALL for further details.

If you want to configure LTSmin to statically link binaries,

LTSmin needs to run pkg-config with the --static flag.

This will resolve additional flags required for static linking, e.g.:

$ ./configure --enable-pkgconf-static

The following additional make targets are supported:

mostlyclean::

clean::

Clean the source tree.

doxygen-doc::

Builds Doxygen documentation for the source code.

We list the external libraries and tools which are required to build this software.

If you want to compile a Windows target using MinGW we recommend using MXE. MXE provides pre-built Windows libraries as Debian packages. This is useful if you don't want to compile LTSmin's dependencies manually.

Download popt (>= 1.7) from http://rpm5.org/files/popt/. We tested with popt 1.16. If you are compiling popt for Windows with MinGW make sure to apply this patch.

Download zlib from http://www.zlib.net/.

Download GNU make from http://www.gnu.org/software/make/.

Download Apache Ant from http://ant.apache.org/. We tested with ant 1.7.1. Note that ant is not required for building from a distribution tarball (unless Java files were modified). Note that we require JavaCC task support for Ant.

Download pkgconf from https://github.com/pkgconf/pkgconf.

If you are compiling LTSmin for Windows using MinGW, you need an additional

wrapper library for mman, which can be downloaded from: https://github.com/witwall/mman-win32/.

To build the parallel symbolic algorithms, Sylvan (>=1.4) is required. If Sylvan is installed in a non-standard location please refer to this note on aclocal and pkgconf, and this note on configuring pkgconf.

Download muCRL (>= 2.18.5) from http://www.cwi.nl/~mcrl/mutool.html. We tested with muCRL-2.18.5. Without muCRL, the AtermDD decision diagram package will not be built.

Note that for 64-bit builds, you have to explicitly configure muCRL for this (otherwise, a faulty version is silently build):

./configure --with-64bit

For best performance, we advise to configure muCRL like this:

./configure CC='gcc -O2' --with-64bit

Download the latest version of mCRL2 from http://www.mcrl2.org/.

Build and install mCRL2:

cmake . -DCMAKE_INSTALL_PREFIX=...

make

make install

The graphical tools of mCRL2 are not required for ltsmin to work, hence you can also build mCRL2 without:

cmake . -DMCRL2_ENABLE_GUI_TOOLS=OFF -DCMAKE_INSTALL_PREFIX=...

Note: to enable the PBES tools use the latest svn version of mCRL2.

See the CADP website http://www.inrialpes.fr/vasy/cadp/ on how to obtain a license and download the CADP toolkit.

For multi-core reachability on timed automata with the opaal frontend, install TBB malloc. Scalability can be limited without a concurrent allocator, hence the opaal2lts-mc frontend is not compiled in absence of TBB malloc. See http://threadingbuildingblocks.org/file.php?fid=77

Download version 2.4 of DiVinE from http://divine.fi.muni.cz/. We tested with the version 2.4. Apply the patch from <contrib/divine-2.4.patch> to the DiVinE source tree:

cd divine-2.4

patch -p1 < /path/to/ltsmin/contrib/divine-2.4.patch

Alternatively, download a LTSmin-enabled version of DiVinE via git:

git clone https://github.com/utwente-fmt/divine2.git

Build with:

cd divine2

mkdir _build && cd _build

cmake .. -DGUI=OFF -DRX_PATH= -DCMAKE_INSTALL_PREFIX=... -DMURPHI=OFF

make

make install

On MacOS X, option -DHOARD=OFF might have to be added to the cmake command line to make it compile without errors.

Also, on MacOS X Divine2 only compiles with the GNU std C++ library.

Thus on MacOS X one has to provide the option -DCMAKE_CXX_FLAGS="-stdlib=libstdc++"

The LTSmin configure script will find the DiVinE installation automatically, if the divine binary is in the search path.

With suitable options, the divine compile DVE compiler produces

LTSmin compatible libraries:

divine compile -l model.dve

This produces a file model.dve2C, which can also be passed to

LTSmin tools. (This step is done automatically by the LTSmin tools

when passing in a .dve model, doing it manually is rarely needed.)

See the scoop/README file.

Use SpinS (distributed as submodule LTSmin) to compile PROMELA model

leader.pm to 'leader.pm.spins':

spins -o3 leader.pm

The optional flag +-o3+ turns off control flow optimizations.

The resulting compiled SpinS module can be loaded by all SpinS-related LTSmin tools (prom*).

Download opaal from https://code.launchpad.net/~opaal-developers/opaal/opaal-ltsmin-succgen. Follow the included installation instructions.

UPPAAL xml models can be compiled to PINS binaries (.xml.so) using the included opaal_ltsmin script.

opaal_ltsmin --only-compile model.xml

The multcore LTSmin tool can explore the model (opaal2lts-mc). Provide the

TBB allocator location at load run time for good performance:

LD_PRELOAD=/usr/lib/libjemalloc.so.1 opaal2lts-mc <model.xml.so>

Download libDDD (>= 1.7) from http://move.lip6.fr/software/DDD/. We tested with libDDD 1.7.

In principle, any MPI library which supports MPI-IO should work.

However, we tested only with Open MPI http://www.open-mpi.org/.

Without MPI, the distributed tools (xxx2lts-mpi, ltsmin-mpi) will

not be built.

Download AsciiDoc (>= 8.4.4) from http://www.methods.co.nz/asciidoc/. We tested with asciidoc-8.4.4. Without asciidoc, documentation cannot be rebuilt. For convenience, release tarballs are shipping with pre-built man pages and HTML documentation.

Download xmlto from http://cyberelk.net/tim/software/xmlto/. We tested with xmlto-0.0.18. Without xmlto, man pages cannot be rebuilt. Note that xmlto in turn requires docbook-xsl to be installed. We tested with docbook-xsl-1.76.1.

Download Doxygen from http://www.doxygen.org/. We tested with doxygen-1.5.5. Without doxygen, internal source code documentation cannot be generated.

For cross-compilation builds on MacOS X, the Apple Developer SDKs must be installed. They are available from Apple http://developer.apple.com/tools/download/, or from the MacOS X installation CDs.

To build the ProB front-end, CZMQ3 is required.

To build the Petri net front-end, libxml2 is required.

Note that the libxml2 package that macOS provides is not properly configured.

If you want to build the PNML front-end, please install a proper libxml2

package, e.g. libxml2 in Homebrew. And do not forget to set PKG_CONFIG_PATH,

as suggested by Homebrew.

To use Büchi automata created by Spot, download Spot (>=2.3.1) from https://spot.lrde.epita.fr/index.html.

To use BuDDy's FDDs implementation for symbolic state storage, you have two options.

- Install Spot which distributes a BuDDy fork called BDDX (recommended),

- Install BuDDy from https://github.com/utwente-fmt/buddy/releases, which is a fork originally included in LTSmin.

If both versions of BuDDy are installed, LTSmin will use the Spot version of BuDDy.

To use SDDs for symbolic state storage, the simplest way is as follows.

- Download SDD from http://reasoning.cs.ucla.edu/sdd/ (version 2.0)

- Build the SDD package. This produces the file

/sdd/build/libsdd.a - Move or copy the file

libsdd.ato/usr/local/lib/libsdd.a - Move or copy the file

sddapi.hto/usr/local/include/sddapi.h

Alternatively, the files libsdd.a and sddapi.h may be put in a different directory dir,

so long as dir/lib contains libsdd.a and dir/include contains sddapi.h.

In this case, add the option --with-libsdd=/path/to/dir to the configure call, e.g.,

./configure --with-libsdd=/path/to/dir

Alternatively, use --with-libsdd=no to configure without SDD.

Before building the software as described above, the following commands have to be executed in the top-level source directory:

$ git submodule update --init

$ ./ltsminreconf

If you have installed pkgconf in a non-standard location you may need

to specify the location to pkg.m4 using the ACLOCAL_PATH

variable manually, before running ltsminreconf. Assuming

the path to pkg.m4 is ~/.local/share/aclocal/pkg.m4, run:

$ ACLOCAL_PATH=~/.local/share/aclocal ./ltsminreconf

Building from another source than the release tarball requires some extra tools to be installed:

Download automake (>= 1.14) from http://www.gnu.org/software/automake/. We tested with automake-1.14.

Download autoconf (>= 2.65) from http://www.gnu.org/software/autoconf/. We tested with autoconf-2.69.

Download libtool (>= 2.2.6) from http://www.gnu.org/software/libtool/. We tested with libtool-2.4.

Download Flex (>= 2.5.35) from http://flex.sourceforge.net/. We tested with flex 2.5.35.

Download Bison from (>= 3.0.2) from https://www.gnu.org/software/bison/.

See above.

- For support/questions, email: [email protected].

- For bug reports and feature suggestions, visit: https://github.com/utwente-fmt/ltsmin/issues.

- And subscribe to our mailing list: [email protected].

- Jeroen Meijer, and Jaco van de Pol Bandwidth and Wavefront Reduction for Static Variable Ordering in Symbolic Reachability Analysis . NFM 2016

- Jens Bendisposto, Philipp Körner, Michael Leuschel, Jeroen Meijer, Jaco van de Pol, Helen Treharne, and Jorden Whitefield Symbolic Reachability Analysis of B through ProB and LTSmin . iFM 2016

- Tom van Dijk and Jaco van de Pol Sylvan: Multi-core Decision Diagrams . TACAS 2015

- Gijs Kant, Alfons Laarman, Jeroen Meijer, Jaco van de Pol, Stefan Blom and Tom van Dijk LTSmin: High-Performance Language-Independent Model Checking . TACAS 2015

- Jeroen Meijer, Gijs Kant, Stefan Blom and Jaco van de Pol Read, Write and Copy Dependencies for Symbolic Model Checking . HVC 2014

- Alfons Laarman and Anton Wijs Partial-Order Reduction for Multi-Core LTL Model Checking . HVC 2014

- Tom van Dijk and Jaco van de Pol Lace: Non-blocking Split Deque for Work-Stealing . MuCoCoS 2014

- Gijs Kant and Jaco van de Pol Generating and Solving Symbolic Parity Games . GRAPHITE 2014

- Alfons Laarman, Elwin Pater, Jaco van de Pol and Michael Weber. Guard-based Partial-Order Reduction . SPIN 2013

- Alfons Laarman, Mads Chr. Olesen and Andreas Dalsgaard, Kim G. Larsen, Jaco van de Pol. _Multi-Core Emptiness Checking of Timed Büchi Automata using Inclusion Abstraction _. CAV 2013

- Alfons Laarman and David Farago. Improved On-The-Fly Livelock Detection: Combining Partial Order Reduction and Parallelism for DFSFIFO . NASA FM 2013

- Tom van Dijk, Alfons Laarman and Jaco van de Pol. Multi-core and/or Symbolic Model Checking. AVOCS 2012

- Freark van der Berg and Alfons Laarman. SpinS: Extending LTSmin with Promela through SpinJa. PDMC 2012

- Tom van Dijk, Alfons Laarman and Jaco van de Pol. Multi-core BDD Operations for Symbolic Reachability. PDMC 2012

- Tom van Dijk. The parallelization of binary decision diagram operations for model checking. 2012. Thesis

- Sami Evangelista, Alfons Laarman, Laure Petrucci and Jaco van de Pol. Improved Multi-Core Nested Depth-First Search. ATVA 2012

- Andreas Dalsgaard, Alfons Laarman, Kim G. Larsen, Mads Chr. Olesen and Jaco van de Pol. Multi-Core Reachability for Timed Automata. FORMATS 2012

- Gijs Kant and Jaco van de Pol. Efficient Instantiation of Parameterised Boolean Equation Systems to Parity Games. Graphite 2012

- Alfons Laarman and Jaco van de Pol. Variations on Multi-Core Nested Depth-First Search. PDMC 2011

- Elwin Pater. Partial Order Reduction for PINS. 2011. Thesis

- Tien Loong Siaw. Saturation for LTSmin. 2012. Thesis

- Alfons Laarman, Rom Langerak, Jaco van de Pol, Michael Weber and Anton Wijs. Multi-Core Nested Depth-First Search. ATVA 2011

- Alfons Laarman, Jaco van de Pol and Michael Weber. Multi-Core LTSmin: Marrying Modularity and Scalability . NFM 2011

- Alfons Laarman, Jaco van de Pol and Michael Weber. Parallel Recursive State Compression for Free . SPIN 2011

- Alfons Laarman, Jaco van de Pol and Michael Weber. Boosting Multi-Core Reachability Performance with Shared Hash Tables. FMCAD 2010

- Stefan Blom, Jaco van de Pol and Michael Weber. LTSmin: Distributed and Symbolic Reachability. CAV 2010, LNCS 6174, pp. 354–359.

- Stefan Blom, Jaco van de Pol and Michael Weber. Bridging the Gap between Enumerative and Symbolic Model Checkers, Technical Report TR-CTIT-09-30, CTIT, University of Twente, Enschede. (2009)

- Stefan Blom, Bert Lisser, Jaco van de Pol, and Michael Weber. A Database Approach to Distributed State Space Generation. J Logic Computation (2009)

- Stefan Blom, Jaco van de Pol: Symbolic Reachability for Process Algebras with Recursive Data Types. ICTAC 2008: pp. 81–95

- Stefan Blom, Bert Lisser, Jaco van de Pol, and Michael Weber. A Database Approach to Distributed State Space Generation. ENTCS 198(1): pp. 17–32 (2007)

- Stefan Blom, Simona Orzan: Distributed state space minimization. STTT 7(3): pp. 280–291 (2005)

- Stefan Blom, Simona Orzan: A distributed algorithm for strong bisimulation reduction of state spaces. STTT 7(1): pp. 74–86 (2005)

- Stefan Blom, Izak van Langevelde, Bert Lisser: Compressed and Distributed File Formats for Labeled Transition Systems. ENTCS 89(1): (2003)

- Stefan Blom, Simona Orzan: Distributed Branching Bisimulation Reduction of State Spaces. ENTCS 89(1): (2003)

Copyright (c) 2008 - 2018 Formal Methods and Tools, University of Twente All rights reserved.

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

-

Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

-

Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

-

Neither the name of the University of Twente nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.