Integrating GitHub Issues as a Blogging Platform

Building a simple blog is my go-to project for trying out new backend or frontend technologies. While learning Erlang/Elixir at university, I created a prototype using an ad-hoc escript that generated things via a cronjob.

It was quick, dirty, and fun. I started with concatenating handwritten .html files and building an index page. Eventually, it evolved to compiling .md files by shelling out to pandoc.

However, as I began drafting posts, I realized that some features were missing. Post tagging was limited to the directory structure, and syntax highlighting wasn't working well. I wanted a platform that allowed me to make edits from anywhere, including devices without a command-line or git.

I needed to fix typos on the spot. After careful consideration, I decided to implement my blog abusing GitHub as a backend.

GitHub was a free service with a nice web UI that provided excellent syntax highlighting support, customizable tagging, pinning, and even supported comments for future extensibility.

Implementation

GitHub offers an open API with several options available. While I haven't really used it much beyond tinkering with language tutorials, I've heard great things about it.

Out of all the APIs provided, I opted for their GraphQL API since I'm a big fan of GraphQL and have prior experience with it, having worked on consulting projects for companies that use it on a large scale.

Initially, I stored my posts as files in my repo, and my backend server would crawl through directories based on the URL slug (e.g., localhost:4000/some/post would look for $MY_REPO/some/post.*). This approach was solid, but when I tried to query files through the repository { object(...) } API, it got a little gnarly 😅. I assume they designed it that way to avoid extensive post-processing on the underlying git protocol.

Honestly, I'd much rather query objects through repository { files("somePath") { ... }} instead, since it would make my server logic more manageable. The object field required me to pass instructions that git rev-parse could understand, and that's no fun 🤮.

After digging around, I found out that querying GitHub Issues could be done from the repository node and gave me the freedom to do whatever I wanted. So, I scrapped everything I had done so far and started fresh with this second iteration.

If you're interested, GitHub's GraphiQL explorer is awesome for playing around and getting a feel for GraphQL (if you're not already familiar with it) and understanding what's happening behind the scenes on my blog.

For instance, the basic query I'm doing is:

query($owner: String!, $name: String!) {
  repository(owner: $owner, name: $name) {
    nameWithOwner
    issues(first: 10, orderBy: {field: CREATED_AT, direction: DESC}) {
      nodes {
        number
        bodyHTML
        createdAt
        updatedAt
        title
        labels(first: 10) {
          nodes {
            name
          }
        }
      }
      totalCount
    }
  }
}

This snippet gets the first ten issues ordered by CREATED_AT descending from a given repository. If you're concerned that anyone can open issues in a given repo, you could further constrain the query with something like:

issues(filterBy: {createdBy: $owner}, first: 10, orderBy: {field: CREATED_AT, direction: DESC})

Absinthe is an absolutely amazing GraphQL server implementation for the backend, but since my site is rendered by the backend, this wasn't what I needed. Whenever I've worked on frontend code interacting with GraphQL, I've always used a popular library called Apollo but (at least at the time of implementation) there was no Apollo library for Elixir.

I ended up rolling my own simple GraphQL client library because I didn't think it would be too complex. I'm sure as time goes on, simple libraries will be built, but honestly, it was easy enough to work with by just POST-ing to GitHub's API endpoint. For future reference, I ended up with something similar to the following:

def fetch_issues_for_repo(owner, repo) do
  # GitHub's GraphQL API only accepts authenticated requests. Reading the token
  # from a GITHUB_TOKEN environment variable is an assumption; adapt as needed.
  headers = [{"Authorization", "Bearer " <> System.fetch_env!("GITHUB_TOKEN")}]

  # post!/3 returns the response struct directly; post/3 would return {:ok, response}
  %HTTPoison.Response{body: body} =
    HTTPoison.post!(
      "https://api.github.com/graphql",
      Jason.encode!(%{
        query: """
        query {
          repository(owner: \"#{owner}\", name: \"#{repo}\") {
            issues(first: 10, orderBy: {field: CREATED_AT, direction: DESC}) {
              nodes {
                title
                createdAt
                updatedAt
                body
                author {
                  login
                }
              }
            }
          }
        }
        """
      }),
      headers
    )

  body
  |> Jason.decode!()
  |> Map.get("data")
  |> Map.get("repository")
  |> Map.get("issues")
  |> Map.get("nodes")
end

This gives me some HTML (and other metadata like tags, etc.) which I essentially insert ad-hoc into my own Phoenix templates, and it just works. If you're reading this post, you can see this approach works pretty well (I'm unlikely to change it going forward 😜)
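The standalone query shown earlier declares $owner and $name as GraphQL variables, while the Elixir function interpolates them straight into the query string. As a minimal sketch of the variables-based alternative (the module and function names here are hypothetical, and this is not necessarily what the blog runs), the request payload could be built like this and then passed to Jason.encode!/1 as before:

```elixir
defmodule GitHubQuery do
  # Hypothetical helper: the query text stays constant and user-supplied
  # values travel separately in the "variables" map.
  @issues_query """
  query($owner: String!, $name: String!) {
    repository(owner: $owner, name: $name) {
      issues(first: 10, orderBy: {field: CREATED_AT, direction: DESC}) {
        nodes {
          number
          title
          bodyHTML
        }
      }
    }
  }
  """

  # Returns the map that would be JSON-encoded and POSTed to the API.
  def issues_payload(owner, name) when is_binary(owner) and is_binary(name) do
    %{query: @issues_query, variables: %{owner: owner, name: name}}
  end
end
```

Keeping values out of the query string means an owner or repository name containing a quote cannot change the shape of the query.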

One downside I can see is that GitHub rate-limits you to a few thousand queries, so as time goes on, I'll probably cache posts in an ETS table or something so that I'm not wasting my quota whenever someone clicks on a post.
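A cache along those lines could look roughly like the sketch below. Everything in it is an assumption for illustration (the PostCache name, the table name, the time-to-live handling); it only uses ETS calls from the Erlang standard library.

```elixir
defmodule PostCache do
  # Hypothetical ETS-backed cache; not the blog's actual implementation.
  @table :post_cache

  # Creates the named table once. The table is owned by the calling process,
  # so in a real app this would be called from a long-lived process.
  def init do
    if :ets.whereis(@table) == :undefined do
      :ets.new(@table, [:named_table, :set, :public, read_concurrency: true])
    end

    :ok
  end

  # Returns the cached value for `key` if it is younger than `ttl_ms`,
  # otherwise calls `fun`, stores its result, and returns it.
  def fetch(key, ttl_ms, fun) when is_function(fun, 0) do
    now = System.monotonic_time(:millisecond)

    case :ets.lookup(@table, key) do
      [{^key, value, inserted_at}] when now - inserted_at < ttl_ms ->
        value

      _ ->
        value = fun.()
        :ets.insert(@table, {key, value, now})
        value
    end
  end
end
```

Usage would be something like PostCache.fetch({:issues, owner, repo}, 60_000, fn -> fetch_issues_for_repo(owner, repo) end), so GitHub is only queried when the cached entry has expired.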

Just for a bit of fun, you can see the source of this post. Features I'll definitely add going forwards are reactions to the main post, which I can display nicely on the index page, and comments!

I'll continue playing around with my blog and the technology driving it, as is tradition for software engineers, but all-in-all, this is a pretty comfy blogging setup 😈

Edit: I'm still using this approach after a few months, but I definitely needed to implement caching. I woke up one night to my Graph API rate limits exceeded and couldn't do anything about it but wait! That's a good problem to have though 😉

Edit: One fancy thing I've seen is adding estimated reading times to blog posts. This proved a little hard because when I request blog contents from GitHub, I get them all as one monolithic block, which I inject into my templates...

To get it working, I end up processing the HTML returned by GitHub to calculate a reading time, injecting the reading time in the template and maximally abusing CSS Grid to re-order the flow of the page as follows:

sub + h1 { grid-area: afterHeaderBeforeContent }
h1 + p:first-of-type { grid-area: afterContent }
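The reading-time calculation itself can be sketched in a few lines. This is only an illustration under stated assumptions: the ReadingTime module name, the 200 words-per-minute figure, and the crude regex-based tag stripping are all mine rather than anything taken from the blog's source.

```elixir
defmodule ReadingTime do
  # Assumed average reading speed; tune to taste.
  @words_per_minute 200

  # Estimates reading time in whole minutes (minimum 1) for an HTML string.
  def estimate(html) when is_binary(html) do
    word_count =
      html
      # Crude tag removal: good enough for counting words, not for parsing HTML.
      |> String.replace(~r/<[^>]*>/, " ")
      |> String.split(~r/\s+/, trim: true)
      |> length()

    max(1, ceil(word_count / @words_per_minute))
  end
end
```

The resulting number can then be injected into the template next to the HTML returned by GitHub.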

Edit: Updated writing since it was full of typos. This draft should be much clearer. My writing has really improved thanks to this blog... I highly recommend that anyone start their own blog, both for the fun and flexibility it gives you and because it's a great place to improve your writing skills!

Setting up a development environment on Windows 10

I find myself writing a lot of code, both professionally and as a hobby. When I write code, 99% of the time, I'm targeting a Unix-like environment.

I also find myself doing a lot of other things, such as playing games or streaming some TV. It is my sincere opinion that for these things, Windows is the operating system to beat: monopolistic malpractices or not, 4K Netflix only works on Windows 10, as do the majority of games I play which I use to keep in contact with old friends.

Even when I'm not using my powerful workstation with its beefy GPU to play games, I frequently use various models of the Microsoft Surface lineup because their form factor is really appealing to me, especially for a machine on the go. Linux, unsurprisingly, does not run very well at all on these devices 😅

None of this is Linux's fault, of course. If I could use Linux on everything, I would, but the year of the Linux desktop is certainly not 2019 for me. It's not for lack of trying either, I've been a longtime Linux user, and I've tried many approaches to try and get the best of both worlds. I hope that this post can share what I've learnt along the way 💪

Popular (or not) approaches I've tried

As I've said, I've tried a lot of different ways to combine the leisure of Windows and the development potential (and customisability) of Linux. Some of the ones I actually stuck to for a while are outlined in the sections below:

Windows–native development

For a long time, primarily during the first half of my University experience, I used Windows as a host OS and developed exclusively on/for Windows. If I were writing Python, or Erlang, or Ocaml, I'd use the Windows-native builds of those programming languages/compilers.

This felt natural to me because I started my developer journey when I was nine and playing around with Game Maker. It also worked relatively well for interpreted languages, as well as for C/C++ so long as I was developing against a Windows API. Everything kind of works, but a few weird things also don't work how you'd expect; maybe because most developers writing in languages such as Python or Erlang aren't using the Windows builds of their toolchains? 🤔 I assume if I wanted to stick to C# or .NET development, say, things would go a lot smoother, but alas.

When I couldn't manage to compile some NIFs for the BEAM (which I needed for a particular project at University), I ended up looking for another solution.

Linux VM on a Windows host

After trying to go all-in with native Windows development and being bitten by a couple of small and weird issues, I decided to do what anyone in my situation might and download a virtual machine to run Linux on.

The first thing I tried was VirtualBox, which ran pretty slow and had awful input latency when doing anything. I think this might have been a hardware issue.

VMware Workstation worked a lot better but still suffered from weird quirks like the virtual sound device not working correctly.

If not for the sound device issue, I probably could have lived with this setup. VMware could be maximised, and all of its Window decorations/toolbars could be hidden. It had some rudimentary 3D acceleration support as well, and things generally ran very smoothly. Unfortunately, if I were to do this, I'd want the sound to work as expected; it was also expensive both monetarily and battery-wise for my non-workstation machines.

The Windows ricing community: Cygwin & bblean

In 2015, I really fell into the Windows ricing community. To eke out the customisation that Linux gave me on Windows, I hacked around with hundreds of custom AutoHotkey scripts for system automation and custom keybindings, custom theming, custom shells to replace Windows' default explorer.exe and more.

I discovered a custom shell for Windows called bblean which was based on Blackbox, a Linux window manager, perhaps best known nowadays for being a precursor of the much more popular Openbox and Fluxbox window managers. This scratched my customisation itch like never before and was genuinely perfect—until I switched to Windows 10 for modern DirectX support. Unfortunately, bblean was built targeting Windows 2000, and it was a miracle it worked as well as it did on every OS until Windows 10, where it still kind of works to this day.

There weren't very many people using bblean, and other alternative shells on Windows, however. The community was very tight-knit, and we shared a lot. Through being a part of this community, I also discovered Cygwin, which is essentially a collection of GNU (and other) tools, and a DLL providing POSIX-like functionality that you can install on Windows. Cygwin allows you to both install applications you might expect to find as part of a Linux distribution (such as zsh, vim, git etc.), and also compile POSIX-compliant applications to run on Windows natively (provided you have the DLL Cygwin provides).

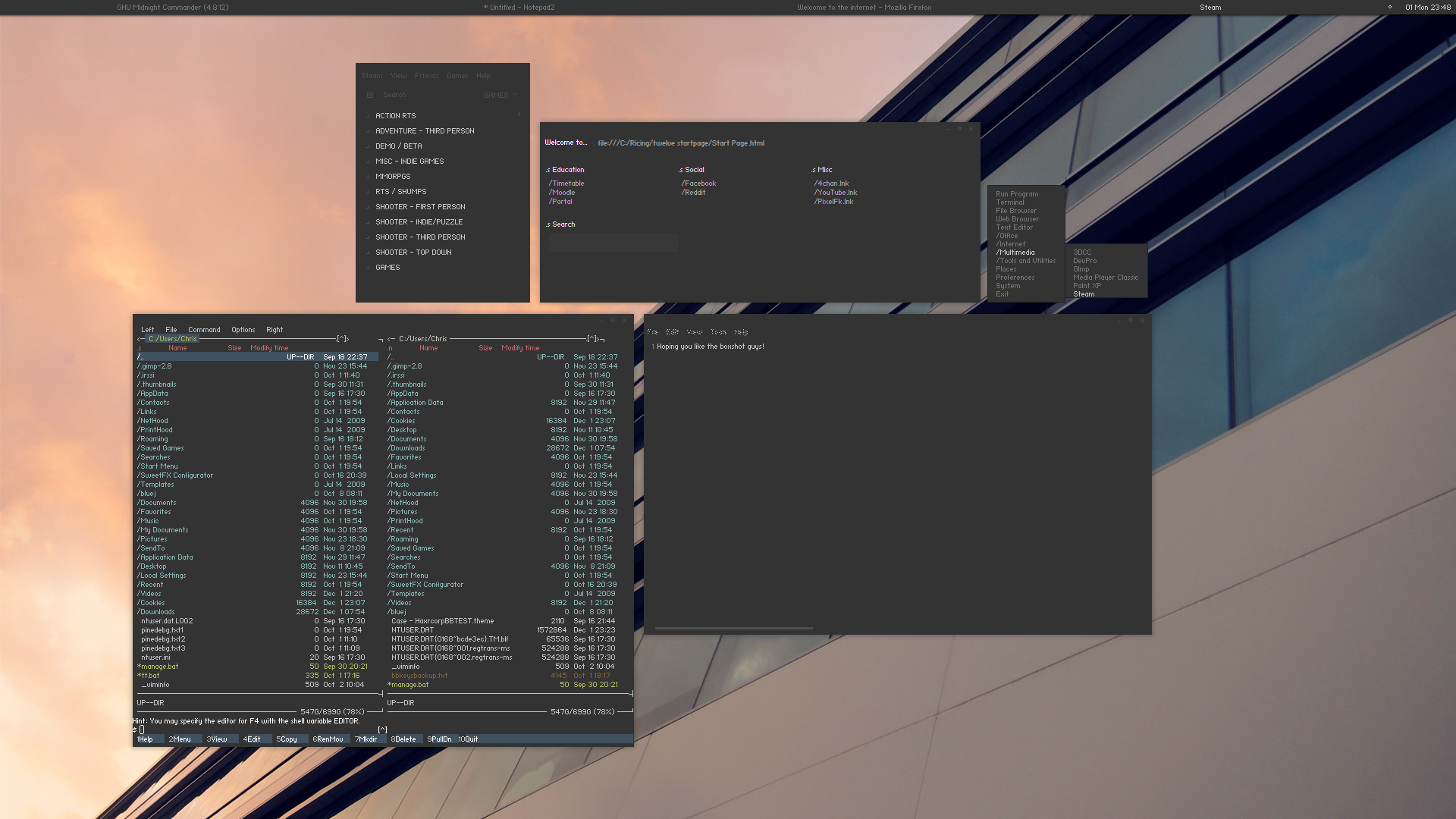

The community was very focused on sharing setups and ideas. I'm unfortunately no longer an active participant in the community because I simply don't use Cygwin anymore (for my use case, a tool we'll explore in a moment is a much better fit). Still, I do have a few old screenshots of the lengths I'd go using these tools—something always fun to reminisce about.

The following images are Windows 7 configurations I had in 2015 and beyond. I taught myself CSS and basic JavaScript to be able to customise my Firefox 😁

Retrospectively, these tools taught me a lot and even contributed a lot to my first paying software development experience (which was a small company I interned at during my University degree. We used Cygwin on Windows extensively, and I attribute my outside experience with having used Cygwin as a strong selling point!)

These tools shall remain forever in my heart ❤️

Windows VM on a Linux host with GPU passthrough

After a few years of the bblean/Cygwin setup, I decided that I would try and learn to use Linux properly and full-time: my internship had just ended, and I had a lot of time on my hands before my final year at University. I had used Linux as a server OS and was familiar with the GNU userland because of Cygwin, but I was not 100% familiar with using it as a host OS for day-to-day computing.

It worked relatively well, all things being equal. Games, in general, don't work (though Valve's Proton is making genuinely amazing leaps to this end), especially online multiplayer games with anti-cheat. There were other small annoyances, such as high-dpi support not quite working properly (Wayland was not anywhere near being ready yet 😏), but it worked fine in general.

To get around the gaming issue, I set up a virtual machine using QEMU + GPU passthrough, which allowed me to run a Windows 10 in a virtual machine with a dedicated GPU. This worked amazingly, and game performance was effectively native—I couldn't even tell I was in a virtual machine!

It did require 2 monitors, however, and a software/hardware KVM to be used so that I could move my input devices from my host machine to the VM... and every time my GPU's driver updated, I'd end up getting bluescreens 😅

So close, yet so far...

Windows Subsystem for Linux

I heard about Windows Subsystem for Linux on Hacker News while I was still using my GPU passthrough solution and decided I'd try it out. If it worked well enough, I'd switch to it, given the grief my driver updates were causing me.

In short: it was similar to Cygwin in that it gives you a Linux-like CLI on Windows, but it worked by intercepting and translating Linux syscalls to NT syscalls in real-time, providing a real Linux userspace! No more Cygwin bundled DLLs and compiling any software I wanted for myself. I was effectively running a reverse Wine, and things worked pretty well!

This was pretty frictionless, but the performance wasn't the best (primarily because of Windows Defender and NTFS filesystem performance, apparently), but it was good enough for most things. Since WSL worked via syscall translation, however, there were some things that would not work: the lack of a real Linux kernel meant no Docker, no rootfs, no app images, but you could typically work around these issues.

Microsoft then decided to up their game by providing an alternative implementation of WSL: instead of translating syscalls, they would do the following:

- Run a real Linux kernel alongside Windows' NT Kernel using Hyper-V

- Wrap said Linux kernel into a super lightweight VM (kind of like a Docker container)

- Perform some black magic kernel extensions to enable Linux–Windows interoperability

- Name it WSL 2 and profit 💰💰💰

I have been using WSL 2 in production since it was first available to try out in a preview branch of Windows. It's been nothing but a pleasure to use, and when using it, I genuinely feel like I have the best of both worlds.

The rest of this post will be about how to build a development environment atop WSL 2; however, before carrying on, there are two main things to note:

- WSL 2 requires Hyper-V, which means your Windows 10 version will need to support it, and at the time of writing, this makes a machine using WSL 2 incompatible with VirtualBox or VMware software.

- WSL 2 is currently only available in preview builds of Windows 10 as it's currently in development. WSL 2 is due for general release in Q1 2020, which isn't too far off, at least 🙏

Setting up WSL 2

Right now, WSL 2 is only available on Insider builds of Windows 10. You need to be on build number 18917 or higher to install it. You can follow these instructions to opt into these Insider builds, but it is important to note that you'll need to re-install Windows if you ever want to roll back to the standard distribution channel.

Next, we can enable the pre-requisite services for WSL 2 by executing the following lines in an elevated Powershell terminal (win+x should help bring it up):

Enable-WindowsOptionalFeature -Online -FeatureName VirtualMachinePlatform
Enable-WindowsOptionalFeature -Online -FeatureName Microsoft-Windows-Subsystem-Linux

You'll be asked to reboot after running these commands, and after you do, you can go to the Microsoft Store and install any WSL distribution you'd like. I usually go for Ubuntu since it's the most supported one at the time of writing, though others are available.

To speed things up, after installing the distribution of your choice, but before executing it, in a Powershell or CMD prompt, execute the following:

wsl.exe --set-default-version 2

This will cause your installed distribution to initialise itself in WSL 2 mode. You can convert versions freely between WSL 1 mode and WSL 2 mode, but it takes quite a long time, even for a base installation of Ubuntu, so running wsl.exe --set-default-version is preferable in my mind.

Congratulations, you now have access to a pretty complete Linux computing environment inside Windows 10! 🎉 Assuming you're using Ubuntu, you'll be able to apt-get install and run most commands you usually would.

After getting this far, one glaringly obvious issue is that your WSL 2 installation is being hosted in an old-school CMD prompt. This isn't ideal because it doesn't do very much: no true colour support, copy/paste is broken, and it generally isn't very customisable. Thankfully we can work around that in the next section 😉

Choosing a Terminal Emulator

There isn't too much choice for Terminal Emulators on Windows; unfortunately, every single one has some tradeoffs to consider.

For example, the default CMD prompt is actually blazingly fast with regards to input latency. It lacks, however, pretty much everything else I'd want from a terminal outside of decent mouse support (surprisingly). Right now, both my customised Vim configuration and Tmux completely break its text rendering because of weird Unicode issues 😅

Cygwin's default terminal is pretty good. It's called minTTY, and it basically feels like the standard CMD prompt but supercharged. It has mouse support, 24-bit true colour support and more. Unfortunately, it also doesn't support rendering Unicode, so it's a no-go for my use case. If you're okay with Unicode not being supported, you can download wslTTY, which is essentially minTTY with WSL-integration—neat!

ConEmu is another super popular terminal emulator for Windows. It (and its several derivatives) is extremely customisable, approaching the level of terminal emulators you'd find on Linux. There are two main downsides with ConEmu, however: it doesn't easily support WSL by default (it's possible to get a working configuration, but of all the ones I tried, there are always tradeoffs with respect to losing some functionality, be it mouse support or weird text wrapping issues), and it gets very, very slow when running WSL for some reason 🤔 This might be due to the extreme newness of WSL 2 (or the fact that I'm running an Insider build of Windows). Because of these issues, I cannot recommend ConEmu at this moment in time.

Then there's Windows Terminal, a super-strong new contender from Microsoft. They really have been doing amazing stuff in the last few years! This terminal emulator supports almost everything I want (lightning-fast, customisation features like the ability to set window padding, ligature support, true colour support), but unfortunately, it doesn't support any kind of mouse integration at the moment, which is a crutch I use a lot in my workflow 😭 Support is planned for the future, and right now the project is super early access. This is one to keep an eye on I'm sure.

Foregoing any of the imperfect choices already talked about (but really, feel free to try them all yourself; it's pretty workflow-dependent after all), you can actually run an X Server on Windows and simply use X11 forwarding to run your favourite Linux terminal emulator as though it were running natively. I personally use this approach with the popular terminal emulator called Terminator, but as Windows Terminal matures, I may switch to that.

X11-forwarding between Windows and WSL

A few popular X Servers exist for Windows that work, and most of them are pretty similar. The most popular seem to be VcXsrv, MobaXTerm and X410.

I personally choose to use VcXsrv because it's the one I was already using from my Cygwin days (I believe Cygwin has its own X Server, but I've never tried it). VcXsrv is pretty fast, and it is free.

I have tried X410, and while it worked really seamlessly (it was built with WSL integration in mind, seemingly), my display is 1440p, and running applications would stutter and lag, unlike on VcXsrv. This is likely an issue that'll be fixed soon, but for what it's worth, you also need to buy a license to run X410 from the Microsoft Store.

Both VcXsrv and X410 (and I believe others) will enable you to start them in "multiwindow" mode. This essentially behaves as though Windows' explorer.exe is the window manager for your X11 applications, and everything feels super native. Here you can see an image of me running a popular database management GUI, dbeaver, on WSL with X11 forwarding to Windows:

As you can see, it looks and behaves just like any other application!

Before you can run any applications via X11 forwarding, you'll need to add the following lines to your shell's configuration:

WSL_HOST=$(cat /etc/resolv.conf | grep nameserver | awk '{print $2}')
export WSL_HOST=$WSL_HOST
export DISPLAY=$WSL_HOST:0.0

This sets your WSL instance's X11 display to the server running on Windows. For maximum performance, it's also often recommended to add the following line as well:

export LIBGL_ALWAYS_INDIRECT=1

This forces rendering to happen on Windows' side, rather than in software via WSL itself. Having done this, source your changes, and you should be able to just launch any GUI program and have it just work. Even chromium and vscode work if you really want to run them inside WSL for some reason; performance is decent too!

You can make X410 or VcXsrv automatically start by adding shortcuts to them in the following directory: %appdata%\Microsoft\Windows\Start Menu\Programs\Startup\. I really recommend doing this since there isn't much reason not to run an X Server on Windows. It improves the system interoperability story even further!

Other minor notes

How I use Docker

When I was using the original WSL, I was forced to run Docker on Windows and connect to it from my WSL instance. Occasionally things just broke, and this took time to debug and troubleshoot.

Now that WSL 2 is a thing, Docker for Windows has been updated to have its own WSL 2 backend. This works a lot better than standard WSL + Docker on Windows, but it's still not perfect. Occasionally, not shutting down or restarting my workstation for a few days would cause strange networking issues between my containers. I could get around this by running wsl.exe --shutdown but this, of course, also forced me to stop my work.

WSL 2 does, unlike WSL 1, include a real Linux kernel, however, so I opt for just running the native Linux implementation of Docker inside my WSL 2 distribution, and that just works. At this point, I'm not sure why Docker for Windows is even a thing 🤔

After switching to doing this, everything has been super stable! WSL doesn't support any kind of system services, however, so at least once per boot, you'll need to remember to run sudo service docker start to get everything in working order.

Creating shortcuts to Linux tools

Right now, any GUI application you want to run from WSL needs a command prompt open. This is because WSL shuts itself down if no terminals are open.

You can use (probably with some minor tweaking necessary) the following Visual Basic script to spawn an invisible terminal as a host to launch your applications:

Set oShell = CreateObject("WScript.Shell")
Dim strArgs
strArgs = "wsl bash -c 'DISPLAY=WSLHOST:0 <APPLICATION>'"
oShell.Run strArgs, 0, False

Simply change <APPLICATION> to your application of choice (e.g. vscode) and save this script as (in this example) vscode.vbs. Running this script will cause Visual Studio Code to open as though it were a normal Windows shortcut 💪

Miscellaneous tips

You can get Linux window management capabilities such as easy dragging/resizing with AltDrag

Microsoft PowerToys is a collection of utilities that includes a pseudo tiling window manager.

You can set up hotkeys easily with AutoHotkey. I personally utilise these hotkeys in conjunction with the shortcut visual basic script I detailed above to launch Linux applications via keyboard shortcuts.

WSL allows you to import and export your distribution by simply running wsl.exe --export <YOUR_DISTRO> <PATH_TO_EXPORT_TO> and wsl.exe --import <NAME_FOR_IMPORTED_DISTRO> <PATH_TO_IMPORT_TO> <PATH_OF_EXPORTED_DISTRO>

Conclusion

I hope that this post helps you set up and configure a WSL 2 based development environment on Windows 10! There were definitely a few bumps along the way, but I think it works pretty well when you consider how new the technology is and how many small moving parts there are!

This technology is super exciting for me, and it's only going to get better going forward. I'm just happy I get all the benefits of running Windows while still being able to develop with Unix-like semantics/idioms. 👀 on the future for even more improvements and interoperability! 🤞

Elixir pet peeves—Ecto virtual fields

Ecto is an amazing ORM that is super easy to use, and with good practices and conventions, it becomes extremely composable and flexible while at the same time being super light weight with minimal magic.

At times though, the lack of "magic" when working with Ecto can prove to be a little limiting: one such time this is an issue in my opinion is when using virtual fields.

What is a virtual field?

Virtual fields are just schema fields that aren't persisted/fetched from the database. For example, if we define the following Ecto.Schema:

defmodule MyApp.User do
  use Ecto.Schema

  schema "users" do
    field :first_name, :string
    field :last_name, :string
  end
end

We could add a virtual field full_name, declared as: field :full_name, :string, virtual: true. This effectively tells Ecto that a field called full_name can exist on the struct, but it won't do anything beyond that.

This is useful because, although we have to write a function to resolve the virtual field either way (with or without the field declared on the Ecto schema), working with the struct in Elixir is a little more annoying when the field isn't declared:

defmodule MyApp.User do
  use Ecto.Schema

  schema "users" do
    field :first_name, :string
    field :last_name, :string
  end

  def resolve_full_name(%__MODULE__{first_name: first_name, last_name: last_name} = user) do
    # :full_name is not a declared field, so Map.put/3 is the only way to attach it
    Map.put(user, :full_name, first_name <> " " <> last_name)
  end
end

iex(1)> MyApp.User.resolve_full_name(%MyApp.User{first_name: "Chris", last_name: "Bailey"})
%{__struct__: MyApp.User, first_name: "Chris", last_name: "Bailey", full_name: "Chris Bailey"}

Notice how that breaks the rendering of the MyApp.User struct? It also messes with autocomplete/dialyzer at times, and it stops you from being able to reason about what fields a struct might have once all the custom fields one might want to attach to it have been resolved.
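The same behaviour can be reproduced without Ecto at all. The sketch below uses a plain defstruct as a stand-in for the schema (the Sketch.User and Sketch.Demo names are made up for illustration) to show that Map.put/3 silently adds an undeclared key, while Elixir's map update syntax refuses to:

```elixir
defmodule Sketch.User do
  # A plain struct standing in for the Ecto schema; note there is no :full_name key.
  defstruct [:first_name, :last_name]
end

defmodule Sketch.Demo do
  # Map.put/3 happily adds a key the struct never declared.
  def put_full_name(user) do
    Map.put(user, :full_name, user.first_name <> " " <> user.last_name)
  end

  # The update syntax only accepts keys that already exist, so for a struct
  # without :full_name this raises a KeyError at runtime.
  def update_full_name(user) do
    %{user | full_name: user.first_name <> " " <> user.last_name}
  end
end
```

Declaring the field up front is what makes the stricter update syntax usable.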

If we define the schema with the virtual field, we can do the following instead:

defmodule MyApp.User do
  use Ecto.Schema

  schema "users" do
    field :first_name, :string
    field :last_name, :string
    field :full_name, :string, virtual: true
  end

  def resolve_full_name(%__MODULE__{first_name: first_name, last_name: last_name} = user) do
    # Update the existing struct rather than building a new, otherwise empty one
    %__MODULE__{user | full_name: first_name <> " " <> last_name}
  end
end

iex(1)> MyApp.User.resolve_full_name(%MyApp.User{first_name: "Chris", last_name: "Bailey"})
%MyApp.User{first_name: "Chris", last_name: "Bailey", full_name: "Chris Bailey"}

Note that the inspection of the struct appears unbroken. Doing things this way also lets us set default field values, and gives peace of mind: we essentially enumerate every field we will ever want to work with (plus type definitions) on the schema itself.

Using virtual fields

Certain virtual fields can be resolved during query time, but more often than not (at least on projects I've seen or worked on) virtual fields are resolved after query time (i.e. in your contexts).

Virtual fields can be resolved at query time using Ecto.Query.select_merge/3. I could probably resolve MyApp.User.full_name at query time via the following Ecto query:

# This is certainly ugly and unnecessary to do like this, but more realistically, if your
# virtual fields are just an average over some aggregate or join, this makes more sense to do.
# This is just to follow through with the example we've used thus far
from u in MyApp.User,
  where: u.id == 1,
  select_merge: %{full_name: fragment("? || ' ' || ?", u.first_name, u.last_name)}

# A better example would be (assuming a :friends association and a virtual :friend_count field):
from u in MyApp.User,
  left_join: f in assoc(u, :friends),
  group_by: u.id,
  select_merge: %{friend_count: count(f.id)}

But for simplicity and readability's sake, having it in your contexts is much nicer for simple virtual fields:

defmodule MyApp.Users do
  alias MyApp.{Repo, User}

  def get_user_by_id(id) do
    User
    |> User.where_id(id)
    |> Repo.one()
    |> case do
      %User{} = user ->
        {:ok, User.resolve_full_name(user)}

      nil ->
        {:error, :not_found}
    end
  end
end

The only annoyance with the latter approach is composability and repetition: wherever we want to resolve this virtual field (likely everywhere), we need to make sure to call User.resolve_full_name/1, and if we have multiple virtual fields to resolve, this can become unwieldy (one can have a User.resolve_virtual_fields/1 function instead, but the former point still stands). This is a longstanding and common pain point of using virtual fields, in my opinion.
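For reference, a resolve_virtual_fields/1 function of that kind might look like the sketch below. It uses a plain struct instead of an Ecto schema so that it runs on its own, and the Sketch.Account name and the :initials field are invented for the example:

```elixir
defmodule Sketch.Account do
  # Plain struct standing in for a schema with two virtual fields.
  defstruct [:first_name, :last_name, :full_name, :initials]

  # Single entry point: contexts call this once instead of every resolver.
  def resolve_virtual_fields(account) do
    account
    |> resolve_full_name()
    |> resolve_initials()
  end

  def resolve_full_name(%{first_name: first, last_name: last} = account) do
    %{account | full_name: first <> " " <> last}
  end

  def resolve_initials(%{first_name: first, last_name: last} = account) do
    %{account | initials: String.first(first) <> String.first(last)}
  end
end
```

This cuts the per-field repetition, but every context function still has to remember to call it.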

The query-time approach to resolving virtual fields thus has the advantage that you don't necessarily need to worry about it, since select_merge calls can be composed and you build your query multiple times for your context functions in any case. Whether all virtual fields can be resolved this way, and whether it's worth the loss of readability, should be decided on a case-by-case basis, however.

Introducing Ecto.Hooks

In the past, Ecto actually provided functionality to execute callbacks whenever Ecto.Models (the old way of defining schemas) were created/read/updated/deleted from the database. This is exactly the kind of functionality one would like when trying to reduce duplication and points of modification when it comes to resolving virtual fields.

I wrote a pretty small library called EctoHooks which re-implements this callback functionality on top of modern Ecto.

You can simply add use EctoHooks.Repo instead of use Ecto.Repo and callbacks will be automatically executed in your schema modules if they're defined.

Going back to the example given above, we can centralise virtual field handling as follows, using EctoHooks:

defmodule MyApp.User do
  use Ecto.Schema

  schema "users" do
    field :first_name, :string
    field :last_name, :string
    field :full_name, :string, virtual: true
  end

  def after_get(%__MODULE__{first_name: first_name, last_name: last_name} = user) do
    %__MODULE__{user | full_name: first_name <> " " <> last_name}
  end
end

Simply add the following line to your application's corresponding MyApp.Repo module:

use EctoHooks.Repo

Any time an Ecto.Repo callback successfully returns a struct defined in a module that use-es Ecto.Schema, any corresponding defined hooks are executed.

All hooks are of arity one, and take only the struct defined in the module as an argument. Hooks are expected to return an updated struct on success, any other value is treated as an error.

A list of valid hooks is listed below:

- after_get/1, which is executed following Ecto.Repo.all/2, Ecto.Repo.get/3, Ecto.Repo.get!/3, Ecto.Repo.get_by/3, Ecto.Repo.get_by!/3, Ecto.Repo.one/2, Ecto.Repo.one!/2

- after_insert/1, which is executed following Ecto.Repo.insert/2, Ecto.Repo.insert!/2, Ecto.Repo.insert_or_update/2, Ecto.Repo.insert_or_update!/2

- after_update/1, which is executed following Ecto.Repo.update/2, Ecto.Repo.update!/2, Ecto.Repo.insert_or_update/2, Ecto.Repo.insert_or_update!/2

- after_delete/1, which is executed following Ecto.Repo.delete/2, Ecto.Repo.delete!/2
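To make the "arity one, struct in, struct out" contract a bit more concrete, here's a rough sketch of how a hook could be dispatched in plain Elixir. This is not EctoHooks' actual implementation: the HookSketch module and its run_hook/2 function are names I've made up purely for illustration, and it skips the error handling described above.

```elixir
defmodule HookSketch do
  # Hypothetical helper, NOT the real EctoHooks internals.
  # Runs `hook` (e.g. :after_get) on a struct if its module defines hook/1,
  # otherwise returns the struct untouched.
  def run_hook(%module{} = data, hook) when is_atom(hook) do
    # function_exported?/3 returns false for modules that aren't loaded yet
    Code.ensure_loaded(module)

    if function_exported?(module, hook, 1) do
      apply(module, hook, [data])
    else
      data
    end
  end

  # Anything that isn't a struct is passed through as-is
  def run_hook(other, _hook), do: other
end

defmodule HookSketch.User do
  defstruct [:first_name, :last_name, :full_name]

  # Same shape as the after_get/1 hook in the schema example above
  def after_get(%__MODULE__{first_name: first, last_name: last} = user) do
    %{user | full_name: first <> " " <> last}
  end
end
```

Calling HookSketch.run_hook(user, :after_get) fills in full_name, while a hook the module doesn't define (say :after_delete) leaves the struct as it was.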

The EctoHooks repo has links to useful places like the Hex.pm entry for the library as well as documentation!

I hope this proves useful!

My usage of Nix, and Lorri + Direnv

I recently wrote about transitioning my main working environment to run on NixOS. I've used NixOS on my main workstation and Nix atop Ubuntu on WSL2 on my laptop for ~5 months now and I feel I've really gotten into the flow with it on a handful of client projects.

The coolest bit of the setup is Nix/NixOS agnostic: lorri and direnv. At face value, their use case is pretty basic and we will dive into how you're meant to use them on projects, but using them actually enables some pretty cool functionality I've been trying to implement for a while now.

What are Lorri and Direnv?

Nix provides a really cool utility built in called nix-shell. Basically, invoking nix-shell starts a new interactive shell after evaluating a given Nix expression (which by default is assumed to be ./shell.nix, relative to the CWD). This means that you can write a Nix expression detailing what packages you want to install, what environment variables to set, and automatically set/build/enable them within the context of the interactive shell.

This is already extremely powerful as you can declaratively manage software versions etc, but Lorri + Direnv make this even nicer to use!

direnv is a super neat project which provides a shell hook that allows your shell to automatically set environment variables defined within a .envrc file (again, relative to the CWD) whenever you change directories. A basic .envrc is very simple and can look like the following:

export SOME_ENV_VARIABLE="test"

A major win here is that it's super fast, supports most popular shells (including some more esoteric ones), is language agnostic, and is pretty extensible.

lorri is essentially an extension of nix-shell built atop direnv. As soon as you change directory, if the new CWD has a shell.nix and an appropriate .envrc (autogenerated by running lorri init), the shell.nix is immediately evaluated. Lorri improves upon nix-shell in a bunch of ways as well, w.r.t caching of built derivations so it is also super quick.

General usage

Once you have lorri and direnv set up, the most basic use case in my opinion is replacing something like asdf which does much of the same stuff:

- it enables you to document what packages are needed for a certain project (within a certain limited, but extensible list)

- it enables you to specify certain versions of said packages

- it will automatically build said packages by running asdf install

- those packages will only be available if you're in a directory (or a child directory) with a .tool-versions file

Since lorri is built with Nix however, we get the following instead:

- it enables you to document what packages are needed for a certain project (any package available for Nix, which is huge)

- it enables you to specify certain versions of said packages, override versions, build certain custom versions

- it will automatically build said packages by entering the directory if needed

- those packages will only be available if you're in a directory (or a child directory) with a shell.nix file

- you can do anything Nix enables you to do, including file manipulation and describing environment variables

For example, here is a shell.nix I needed to bootstrap an Elixir project which set some private credentials and needed Docker, GCS utilities, a DB client, and Minikube installed to run:

let

pkgs = import <nixpkgs> {};

in

pkgs.mkShell {

# Attributes other than buildInputs end up as environment variables inside the shell

g_sec_user = <REDACTED>;

g_sec_password = <REDACTED>;

buildInputs = [

pkgs.elixir_1_10

pkgs.nodejs-10_x

pkgs.yarn

pkgs.inotify-tools

pkgs.openssl

pkgs.kubectl

pkgs.jq

pkgs.google-cloud-sdk

pkgs.minikube

pkgs.kubernetes-helm

];

}

Once this file is created, everything automagically works when entering that directory. Changes to the shell.nix are also automatically tracked so you don't need to do anything fancy: your shell.nix is your single source of truth.

Another advantage is that once your project has a shell.nix checked into its repository, anyone running Nix/NixOS can start hacking away by just running nix-shell, and if they use Direnv + Lorri, they just have to enter the directory and stuff just works 🎉

Usage for Nix unfriendly projects

I'm an Erlang/Elixir consultant, and as a result of this, I have the opportunity to work on a lot of projects for a lot of different clients. I also know that a relatively esoteric package manager (or worse, an entirely different Linux distribution) is a hard sell.

Thankfully though, even in environments where you can't get away with committing any of the lorri/direnv artefacts into the core repository of a project, you can get away with using lorri + direnv!

Because this entire workflow is dependent on directory structure, my workstation typically has a structure like this:

/home/chris/git

├── esl

│ ├── shell.nix

│ ├── internal_project_1

│ └── internal_project_2

│ └── shell.nix

├── client_1

│ ├── shell.nix

│ ├── client_project_1

│ ├── client_project_2

│ ├── client_project_3

│ └── client_project_4

├── client_2

│ ├── shell.nix

│ └── client_project_1

└── vereis

├── shell.nix

├── blog

│ └── shell.nix

├── build_you_a_telemetry

│ └── shell.nix

├── horde

├── httpoison

└── nixos

If I'm not allowed to add direnv/lorri artefacts, I can simply put them in a directory above the repository and I still get all of the benefits 😄 You need to add source_up to any child .envrc files in order to also execute their parent's, but otherwise it works as expected.

This means that I can install packages / handle dependencies on a project by project basis, or a client by client basis. Because the effects of executing these Nix expressions are cumulative, you can even do it on a client by client basis with exceptions for a given project for that client.

As Lorri/Direnv lets me install dependencies and set environment variables, this setup is also perfect for a seamless dev credential manager solution. For example, assuming a client has its own npm registry for JavaScript dependencies, you can simply do the following:

let

pkgs = import <nixpkgs> {};

in

pkgs.mkShell {

buildInputs = [

pkgs.nodejs-10_x

];

npm_config_user_config = toString ./. + "/.npmrc";

}

Where /home/chris/git/client_1/.npmrc contains:

registry=<REDACTED>

email=<REDACTED>

init.author.name="Chris Bailey"

init.author.email=<REDACTED>

always-auth=true

_auth=<REDACTED>

Notice that esl, client_1, client_2, and vereis all have a top level shell.nix. This is also perhaps a slight abuse of direnv/lorri but the end effect is that I'm able to treat these different directories as completely separate development domains. Each of these top level shell.nix files also sets environment variables for my git username and email. This means that I no longer need to faff around with setting up each and every git repo I clone: if I'm in /home/chris/git/vereis, I'm going to be working as @vereis, if I'm in /home/chris/git/esl/ I'll be @cbaileyesl etc.

Conclusion

I hope this small write-up has been helpful 😄 I genuinely think that the Nix ecosystem offers a lot of advantages, especially for developers. Tools like nix-shell, lorri, and direnv are super helpful in streamlining the development process.

Of course, tools like asdf, dotenv and others exist which allow you to emulate all of what I've described, but the true advantage of Nix/NixOS is that the configuration is very declarative and built into the core experience. Any nix user can do exactly what I've described, even if they're not running lorri or direnv, simply because it's all built upon the first-class nix-shell.

I hope if you're not a nix user, this has piqued your interest a little bit, and if you are a nix user, this will help you get over a few of the small speed bumps when trying to approach development leveraging all the niceties nix provides you.

Drinking the NixOS kool aid

The last time I blogged about my development environment I had become disillusioned with the numerous problems that affected me when using Linux as my daily driver: scrolling issues and microstutters in Firefox, an archaic and obscure mouse acceleration and sensitivity setup, and GPU issues to be worked around.

Ultimately, the times I did get a comfortable setup going, after a few months something would inadvertently break; or if I were trying to configure another machine, it'd be a lot of time spent emulating my exact setup.

I've heard about Nix and NixOS passingly over the last few years and with some down time in between projects at work, I decided to take a look at it in depth and I've really started to drink the kool aid.

What is NixOS?

At its simplest, NixOS is a Linux distribution that aims to let you do system management / package management in a reliable, reproducible and declarative way built atop the Nix package manager (which can be installed independently on a plethora of operating systems).

It enables you to do all your OS management, package management and configuration in the Nix programming language (which, again, you can read more about here). What's more: every time you make a change to your NixOS configuration and tell NixOS to rebuild your system, the exact specifications of your current system aren't just overwritten but instead a new 'generation' is created and you can switch between these versions of your operating system setup as you please. This means that if you somehow happen to break your environment you can just roll back to how it was before.

When jumping to NixOS, I made the mistake of setting up my configuration such that I could do what I usually do on UNIX-like operating systems: install a bunch of packages to enable me to compile whatever tools I need for work. While this worked for a while, for a few things, it wasn't the optimal way of doing things on NixOS. I spent some time looking at the Nix language and some NixOS configurations from other people around the internet and I slowly pieced together a configuration that worked for me. Besides just working for me: I can take my configuration and put it on any machine I own and run nixos-rebuild switch and boom, my entire operating system is set up exactly how I like it.

My NixOS configuration has come a long, long way since the first commit (documentation for Nix is pretty sparse) and I've learned a bunch of things that I feel would be useful to share, so take a look at my NixOS configuration if you'd like a decent, modular starting point and I'll outline a few things below.

My configuration

Modular configuration

A lot of NixOS configurations I've looked at essentially just look like one absolutely huge configuration.nix file; this is an ok way to do things but it can definitely get a bit unwieldy very easily. One of the really cool things about the Nix language is that it is a programming language. I don't leverage anything particularly complicated in my setup, but one nice thing is that you can define modules and import them in a top level configuration.nix module.

My NixOS configuration has the top level configuration.nix and hardware-configuration.nix files gitignored because installing NixOS will generate these for you anyway. Because these files contain auto-generated nix expressions and are install/machine dependent (UEFI installation, GPU drivers, boot device etc), I don't think it makes sense to version these. Instead, my NixOS config is set up with the following three directories:

- profiles/, which contains nix modules such as desktop.nix, laptop.nix, server.nix. These nix modules, when imported, set up things that I'd expect from that particular class of machine. If I import server.nix, I won't bother setting up any X11 related functionality because I won't need a GUI. If I import laptop.nix, I might automatically include packages such as powertop or enable bluetooth. In fact, my profiles/ directory also contains a base.nix in which I define a standard set of packages that I use on all machines, as well as configure my preferred timezone, keyboard layout and other random things.

- modules/, which contains nix modules such as neovim.nix, dwm.nix, amd_gpu.nix. These are smaller nix modules which are set up to be enabled or disabled explicitly. My neovim.nix module not only downloads Neovim, but also downloads my dotfiles and configures Neovim exactly how I like it, plugins and all; my dwm.nix grabs my personal build of DWM, compiles it and installs it. Modules defined in modules/ can literally be anything that I might want to install but want to abstract away: installing GPU drivers on my workstation requires three different lines of configuration, so I'd rather just add modules.amd_gpu.enable = true; to my configuration instead. The idea there is that these modules can be easily enabled/disabled on a machine-by-machine basis.

- machines/, which contains nix modules which act as configuration for particular machines of mine. These modules will do things such as set the hostname of a machine, as well as include any profiles/*.nix and modules/*.nix files necessary for that particular machine. The VPS running my site has an HTTP server, SSL certificate generation and related things configured in machines/cbailey.co.uk.nix for example 😄

The main upside to this kind of configuration is that I can share configuration between multiple machines I want to manage in a DRY fashion. My girlfriend also recently installed NixOS and since she's not too familiar with Linux, it's extremely convenient that she can use my NixOS configuration, swapping out, say, modules/dwm.nix for modules/plasma5.nix, and that I can contribute changes that solve technical difficulties she might be having.

Writing a Nix module

I won't go too deeply into the details of the Nix language (I wouldn't call myself an authority on this, I picked up what I know from other configs and playing around), but there are a few nice features/quirks of Nix which you might not expect, and which can be leveraged in your modules.

Configuration merging

Nix modules are essentially just attribute sets (basically a map/JSON object like thing) containing keys and values. There are a few different sections to a nix module, but the main bit that is important for NixOS configuration is that an attribute set has a value set to the key config, like this:

{ config, lib, pkgs, ... }:

{

config = {

something = true;

};

}

When this module is imported by your top level configuration.nix, the key config gets merged with your configuration.nix's configuration.

We can utilise this to define a modules/vmware_guest.nix module for instance:

{ config, lib, pkgs, ... }:

{

virtualisation.vmware.guest.enable = true;

}

When this module gets imported to your top level configuration.nix, virtualisation.vmware.guest.enable = true gets added to your configuration. This is pretty cool because you can utilise this to break your configuration up into smaller chunks.

The merging that nix does is a deep merge as well, so if you have services.xserver.enable = true; in your top level configuration and want to import multiple window managers or desktop environments, these window managers and desktop environments not only can set values nested inside services.xserver, but these values can be used in the top level configuration.nix as well.

If your top level configuration.nix has a list of packages to install, but you also include another nix module that defines a separate list of packages to install:

# configuration.nix

environment.systemPackages = with pkgs; [

firefox

];

# some_module.nix

environment.systemPackages = with pkgs; [

wget

unzip

git

];

Then these lists get concatenated and merged together as well, installing all the packages defined across all modules imported. This seemed pretty unintuitive but it's pretty cool!

Enabling/disabling modules

Alongside the config key, you can also create options of varying types in your module's options key. Actually, the NixOS options you set in your own configuration are themselves defined by modules, and they use this options key to define what configuration options can be set at all!

In my modules/ directory, I have a convention where all of my modules define a modules.<name>.enable boolean option for instance, so I can explicitly enable or disable these modules regardless of whether or not they're imported.

You get access to a bunch of types such as paths, strings, integers etc; pretty much what you'd expect. I'm not sure where exactly this is documented since I've only really needed simple boolean type options, but reading other modules in nixpkgs was a decent and quick way to learn.

You can define a modules.<name>.enable option like I do with the following boilerplate:

{ config, lib, pkgs, ...}:

with lib;

let

cfg = config.modules.NAME;

in

{

options.modules.NAME = {

enable = mkOption {

type = types.bool;

default = false;

description = ''

Some documentation here

'';

};

};

config = mkIf cfg.enable (mkMerge [{

# my optional configuration here

}]);

}

Overriding packages

NixOS and Nix (the language) can be pretty intimidating at times. The documentation around Nix/NixOS is pretty sparse, potentially outdated and dense. I got a lot of help from the related IRC channels though and definitely suggest you seek help there since it's one of the friendliest and most helpful communities I've had the pleasure of interacting with!

One of the coolest features hidden behind the lack of documentation is the fact that you can override packages. This is especially useful if you want to install a standard package with a patch or small tweak; the standard library for Nix contains functions to fetch remote files from Git or just fetch a tarball from somewhere. I try not to overuse this feature though because it kind of clashes with the declarative-ness of the system otherwise; but for things like my DWM build, it's super helpful.

DWM is a window manager which is configured by recompiling it. When setting up NixOS, I didn't want to learn how to package my personal build of DWM before even getting to grips with the OS, so being able to override the existing DWM package but point at a different set of source code was super helpful. You can override a package with the following pattern:

nixpkgs.overlays = [

(self: super: {

dwm = super.dwm.overrideAttrs(_: {

src = builtins.fetchGit {

url = "https://github.com/vereis/dwm";

rev = "b247aeb8e713ac5c644c404fa1384e05e0b8bc6f";

ref = "master";

};

});

})

];

Home-Manager

Home-Manager is an application which lets you configure your applications (basically a declarative nix-ish implementation of dotfiles). It's super useful and I've transitioned to using it for a bunch of the applications I use.

My terminal emulator's colors, default Firefox plugins and other things are configured via Home-Manager and thus are versioned in my NixOS generations and upgraded whenever I do a nixos-rebuild switch.

There isn't too much else to say about Home-Manager; it integrates well with Nix and it just works. Check it out if you're using NixOS.

Developer Environments

NixOS by default comes with a feature which lets you set up environments for different projects (similar to how ASDF might work for your Erlang/Elixir projects).

Essentially, you can create a shell.nix file in a directory that contains a list of applications you want to be available in that directory and activate it by invoking nix-shell. You can see an example shell.nix set up for a Phoenix application below:

let

pkgs = import <nixpkgs> {};

in

pkgs.mkShell {

buildInputs = [

pkgs.elixir_1_10

pkgs.nodejs-10_x

pkgs.yarn

];

}

If you're using direnv (a really nice application which lets you automatically set environment variables when you enter a directory), you can use a cool project called lorri which sets up a daemon and automatically updates your local dev environment when you enter a directory (avoiding the need to run nix-shell manually) as well as automatically watching for changes in your shell.nix.

This emulates the flow of using asdf and defining a .tool-versions file pretty well, and I've actually found it even more useful because if someone has trouble compiling one of my applications, even if they don't use Nix, I can look at the shell.nix and tell them exactly what packages they need and at what versions.

Wrapping up

The more I play with Nix and NixOS, the more in love I fall with it. I've not had this much fun (and drunk this much kool-aid) since falling for OTP or discovering Arch Linux or Gentoo when I was just starting to get into Linux.

Hopefully the contents of this post will be helpful for other people looking to get into NixOS and I'm sure I'll have more to contribute w.r.t this as time goes on!

Thanks for reading 😄

edit:

man configuration.nix gives you an exhaustive list of configuration options! happy tinkering!

Testing with tracing on the BEAM

When I was writing some tests for an event broadcasting system (the one I outlined in this blog post), I found that, as expected, the unit tests worked very easily, but when it came to testing that the entire system worked as intended end to end, it was a little more difficult because I'd be trying to test completely asynchronous and pretty decoupled behaviour.

Testing with Mock/:meck

At this point in time, the test suites inside our code base use mocks very extensively, which might be a topic for another day, because I don't think mocking code in tests is the best (or most idiomatic) approach. One nice bit about the de-facto mocking library for Elixir is that it also comes with a few assertions you can import into your test suites; namely assert_called/1.

Using this library, we could write our tests, mocking the event handling functions in the event listeners we're interested in for the particular test and then use assert_called/n to determine whether or not that function was called as expected and optionally you can provide what looks roughly like an Erlang match spec to assert that the function was called with specific arguments.

Our tests end up looking roughly like the following:

defmodule E2ETests.EctoBroadcasterTest do

use ExUnit.Case

alias EventListener.EctoHandler

import Mock

setup_with_mocks(

[

{EctoHandler, [:passthrough],

handle_event: fn {_ecto_action, _entity_type, _data} ->

{:ok, :mocked}

end}

],

_tags

) do

:ok

end

test "Creating entity foo is handled by EctoHandler" do

assert {:ok, %MyApp.Foo{}} = MyApp.Foos.create_foo(...)

assert_called EctoHandler.handle_event({:insert, MyApp.Foo, :_})

end

end

This approach basically met all of our requirements except for the fact that mocking via :meck (the de-facto Erlang mocking library and used internally by Mock) does things in a pretty heavy handed way.

At a high level, all :meck does is replace the module you're trying to mock with a slightly edited one where the functions you want to mock are inserted in place of the original implementations. This comes with three disadvantages for our particular use case:

- Because we're essentially loading code into the BEAM VM, we can no longer run our tests asynchronously because we've patched code at the VM level and these patches are global

- Because we're replacing the function we want to call; we can't actually test that that particular function behaves as expected; only that it was called

- If we want to mock our functions purely for the assert_called/1 assertion (though this has annoyed us somewhat elsewhere too) we need to be careful and essentially re-implement any functionality that we actually expect to happen for that to be testable, which itself might lead to brittle mocks.

My main qualm was with the second problem; we ran into it pretty hard for a few edge cases (though we resolved the second case too!): say EctoHandler.handle_event({:delete, _, _}) needed to be implemented in a special way where it persists these actions into an ETS table and you want to assert that that actually happened, you'd be a little stuffed... imagine your source code looks like:

defmodule EventListener.EctoHandler do

...

def handle_event({:delete, entity_type, data}) do

Logger.info("Got delete event for #{entity_type} at #{System.monotonic_time()}")

data

|> EventListener.DeletePool.write()

|> process_delete_pool_response()

|> case do

{:ok, _} = response ->

response

error ->

add_to_retry_queue({:delete, entity_type, data})

Logger.error("Failed to add to delete pool, adding to retry queue")

error

end

end

...

end

To mock this function properly, you'd probably just have to implement your mock such that it essentially duplicates the implementation, but this of course will blow up when the mocks fall out of sync with the implementation.

A less heavy handed approach

One of the coolest but possibly least used features of the BEAM is the built in ability to do tracing.

Tracing essentially lets you capture things such as messages being passed to particular processes and functions being called, among other things. It does come with some disadvantages: if you're not careful, the performance impact can blow up your application (so be careful if you trace on a production deployment 😉), and it's very low level. But it works well and there exist several high level wrappers.
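To give a feel for what these trace messages look like before any wrapper gets involved, here's a minimal sketch using the raw :erlang.trace/3 and :erlang.trace_pattern/3 BIFs. The TraceSketch module and its double/1 function are made up for this example, and the traced call is made from a helper process so that the tracer and the tracee are separate processes.

```elixir
defmodule TraceSketch do
  # A trivial function to trace
  def double(x), do: x * 2

  # Traces a single call to double/1 made by a helper process and returns
  # {{module, function, args}, return_value} as seen by the tracer (the caller).
  def run do
    parent = self()

    pid =
      spawn(fn ->
        receive do
          :go -> send(parent, {:done, TraceSketch.double(21)})
        end
      end)

    # Ask for function call trace messages from `pid` to be sent to this process
    1 = :erlang.trace(pid, true, [:call])

    # Only trace double/1; {:return_trace} additionally requests :return_from messages
    match_spec = [{:_, [], [{:return_trace}]}]
    1 = :erlang.trace_pattern({TraceSketch, :double, 1}, match_spec, [:local])

    send(pid, :go)

    call =
      receive do
        {:trace, ^pid, :call, {mod, fun, args}} -> {mod, fun, args}
      after
        1_000 -> :no_call_trace
      end

    return =
      receive do
        {:trace, ^pid, :return_from, {_mod, _fun, _arity}, value} -> value
      after
        1_000 -> :no_return_trace
      end

    # Drain the helper's reply and switch the trace pattern off again
    receive do
      {:done, _} -> :ok
    after
      1_000 -> :ok
    end

    :erlang.trace_pattern({TraceSketch, :double, 1}, false, [:local])

    {call, return}
  end
end
```

Note that the :call message carries the actual arguments while the :return_from message only carries the arity, which is something the rest of this post has to work around.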

I wanted to experiment with tracing because I've never used it very much so I chose a pretty low level abstraction to do the work for me: the :dbg application which comes built into the normal Erlang OTP distribution.

I'm in the process of cleaning up the actual implementation, but if you wanted to build your own replacement for the assert_called/1 function (with a nice added feature) feel free to follow along!

You can start the :dbg application via the following:

def init() do

# Flush :dbg in case it was already started prior to this

:ok = :dbg.stop_clear()

{:ok, _pid} = :dbg.start()

# This is the important bit; by default :dbg will just log what gets traced on stdout

# but we actually need to handle these traces because we want to capture that the

# function was called programmatically rather than reading it off the shell/log file

# This tells `:dbg` to call the `process_trace/2` function in the current running process

# whenever we get a trace message

{:ok, _pid} = :dbg.tracer(:process, {&process_trace/2, []})

# And this sets up `:dbg` to handle function calls

:dbg.p(:all, [:call])

end

Now that the tracer is started up, we need to tell it to start tracing certain functions. You can do this quite easily by passing an mfa into :dbg.tpl/4 as follows:

def trace(m, f, a) do

# :dbg.tpl traces all function calls including private functions (which we needed for our use-case)

# but I think it's a good default

#

# The 4th parameter basically says to trace all invocations of the given mfa (`:_` means we pattern

# match on everything), and we also pass in `{:return_trace}` to tell the tracer we want to receive

# not just function invocation messages but also capture the return of those functions

:dbg.tpl(m, f, a, [{:_, [], [{:return_trace}]}])

end

Now, provided a super simple stub function for process_trace/2, you should be able to see your traces come back looking all good:

# Using the following process_trace/2 implementation:

def process_trace({:trace, _pid, :call, {module, function, args}} = _message, _state) do

IO.inspect(inspect({module, function, args}) <>" - call start")

end

def process_trace({:trace, _pid, :return_from, {module, function, arity}, return_value}, _state) do

IO.inspect(inspect({module, function, arity}) <>" - returns: " <> inspect(return_value))

end

-------------------------------------------------------------------------------------------------

iex(1)> Assertions.init()

{:ok, [{:matched, :nonode@nohost, 64}]}

iex(2)> Assertions.trace(Enum, :map, 2)

{:ok, [{:matched, :nonode@nohost, 1}, {:saved, 1}]}

iex(3)> Enum.map([1, 2, 3], & &1 + 2)

"{Enum, :map, [[1, 2, 3], #Function<44.33894411/1 in :erl_eval.expr/5>]} - call start"

"{Enum, :map, 2} - returns: [3, 4, 5]"

[3, 4, 5]

This gets us most of the way to what we want! We can see what functions were called and capture the variables passed into them, as well as capturing what the functions returned.

But if you look closely, the messages for :call and :return_from have a few issues:

- Ideally, I'd have preferred to have a single message rather than two, because we have some additional complexity here now...

- Especially because the :return_from message doesn't get the args passed into it but instead only the function's arity... which means we need to try our best to marry them up as part of the process_trace function...

- Just as a note; I don't think we can pattern match on lambdas easily on the BEAM (nor do things like send them over :rpc.calls) so at least it's consistent 🙂

We can solve the first two problems by amending the implementation of process_trace/2 quite easily though (it might not be a foolproof implementation but it worked well enough for our usecase that I'll just add the code here verbatim):

def process_trace({:trace, _pid, :call, {module, function, args}} = _message, state) do

[{:call, {module, function, args}, :pending} | state]

end

def process_trace({:trace, _pid, :return_from, {module, function, arity}, return_value}, [

{:call, {module, function, args}, :pending} | remaining_stack

])

when length(args) == arity do

IO.inspect(inspect({module, function, args}) <> " - returns: " <> inspect(return_value))

remaining_stack

end

-------------------------------------------------------------------------------------------------

iex(1)> Assertions.init()

{:ok, [{:matched, :nonode@nohost, 64}]}

iex(2)> Assertions.trace(Enum, :map, 2)

{:ok, [{:matched, :nonode@nohost, 1}, {:saved, 1}]}

iex(3)> Enum.map([1, 2, 3], & &1 + 2)

"{Enum, :map, [[1, 2, 3], #Function<44.33894411/1 in :erl_eval.expr/5>]} - returns: [3, 4, 5]"

[3, 4, 5]

iex(4)> Enum.map([[1, 2, 3], [:a, :b, :c], []], fn list -> Enum.map(list, &IO.inspect/1) end)

1

2

3

:a

"{Enum, :map, [[1, 2, 3], &IO.inspect/1]} - returns: [1, 2, 3]"

:b

:c

"{Enum, :map, [[:a, :b, :c], &IO.inspect/1]} - returns: [:a, :b, :c]"

"{Enum, :map, [[], &IO.inspect/1]} - returns: []"

[[1, 2, 3], [:a, :b, :c], []]

Because whatever gets returned from process_trace/2 is passed as the second parameter to future calls of process_trace/2, we can essentially do the above and treat it like a stack of function calls. We only emit the actual IO.inspect we care about if we end up popping the function call (because it's resolved) off the stack.
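If you want to poke at the stack idea without a tracer running, the same pairing logic can be fed hand-written messages. The sketch below is a standalone variation rather than the code from this post: the TracePairing module name and the {stack, completed} state shape are my own assumptions, chosen so the result can be inspected as plain data.

```elixir
defmodule TracePairing do
  # State is {pending_call_stack, completed_calls}

  # A :call message pushes the call (with its real args) onto the stack
  def handle({:trace, _pid, :call, {m, f, args}}, {stack, completed}) do
    {[{m, f, args} | stack], completed}
  end

  # A :return_from message only knows the arity, so pair it with the call on
  # top of the stack when the module, function and number of args line up
  def handle(
        {:trace, _pid, :return_from, {m, f, arity}, value},
        {[{m, f, args} | rest], completed}
      )
      when length(args) == arity do
    {rest, [{m, f, args, value} | completed]}
  end

  # Folds a list of trace messages into [{module, function, args, return_value}],
  # ordered by when each call returned
  def pair(messages) do
    {_stack, completed} = Enum.reduce(messages, {[], []}, &handle/2)
    Enum.reverse(completed)
  end
end
```

Feeding it a nested pair of calls (outer call, inner call, inner return, outer return) yields the inner call first, which mirrors the order of the output in the session above.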

All I do from here is persist these functions into an :ets table of type :bag that we create when init/0 is called, and have process_trace({_, _, :return_from, _}, _) write to it whenever a function call succeeds.
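As a rough idea of what that persistence could look like (a sketch, not the actual implementation I'm cleaning up): the TraceStore module, its table name and its function names are all hypothetical, and the record shape {timestamp, {module, function, arity, args, return_value}} is assumed from what the macros further down pattern match on.

```elixir
defmodule TraceStore do
  # Hypothetical table name for this sketch
  @table :trace_store_sketch

  def init do
    # A :bag table can hold several distinct records under the same key
    :ets.new(@table, [:bag, :public, :named_table])
  end

  # Persist one resolved function call
  def record(module, function, args, return_value) do
    timestamp = System.monotonic_time()
    :ets.insert(@table, {timestamp, {module, function, length(args), args, return_value}})
  end

  # Find recorded calls whose args match `arg_pattern`, where :_ is a wildcard
  def calls(module, function, arg_pattern) do
    :ets.select(@table, [
      {{:_, {module, function, length(arg_pattern), arg_pattern, :_}}, [], [:"$_"]}
    ])
  end
end
```

After TraceStore.init() and a TraceStore.record(Enum, :map, [[:hi, "world!"], :some_fun], [:atom, :string]), calling TraceStore.calls(Enum, :map, [[:hi, "world!"], :_]) returns that record, while a pattern with different args returns an empty list.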

For convenience I really hackily threw together the following macros (please don't judge me 🤣 -- this is going to be cleaned up a lot before I throw it up on hex.pm):

defp apply_clause(

{{:., _, [{:__aliases__, _, mod}, fun]}, _, args},

match \\ {:_, [], Elixir}

) do

module = ["Elixir" | mod] |> Enum.map(&to_string/1) |> Enum.join(".") |> String.to_atom()

arg_pattern =

args

|> Enum.map(fn

{:_, _loc, _meta} -> :_

other -> other

end)

quote do

case :ets.select(unquote(@mod), [

{{:_,

{unquote(module), unquote(fun), unquote(length(arg_pattern)), unquote(arg_pattern),

:_}}, [], [:"$_"]}

]) do

[] ->

false

matches ->

Enum.any?(matches, fn

{_timestamp, {_mod, _fun, _arity, _args, unquote(match)}} -> true

_ -> false

end)

end

end

end

defp capture_clause({{:., _, [{:__aliases__, _, mod}, fun]}, _, args}) do

module = ["Elixir" | mod] |> Enum.map(&to_string/1) |> Enum.join(".") |> String.to_atom()

arg_pattern =

args

|> Enum.map(fn

{:_, _loc, _meta} -> :_

other -> other

end)

quote do

case :ets.select(unquote(@mod), [

{{:_,

{unquote(module), unquote(fun), unquote(length(arg_pattern)), unquote(arg_pattern),

:_}}, [], [:"$_"]}

]) do

[] ->

false

[{_timestamp, {_mod, _fun, _arity, _args, val}} | _] ->

val

end

end

end

defmacro called?({{:., _, _}, _, _} = ast) do

apply_clause(ast)

end

defmacro called?({op, _, [lhs, {{:., _, _}, _, _} = rhs]}) when op in [:=, :"::"] do

apply_clause(rhs, lhs)

end

defmacro called?({op, _, [{{:., _, _}, _, _} = lhs, rhs]}) when op in [:=, :"::"] do

apply_clause(lhs, rhs)

end

defmacro capture({op, _, [lhs, {{:., _, _}, _, _} = rhs]}) when op in [:=, :<-] do

captured_function = capture_clause(rhs)

quote do

var!(unquote(lhs)) = unquote(captured_function)

end

end

Which lets us finally put it all together and do the following 🎉

iex(1)> require Assertions

Assertions

iex(2)> import Assertions

Assertions

iex(3)> Assertions.init()

{:ok, [{:matched, :nonode@nohost, 64}]}

iex(4)> Assertions.trace(Enum, :map, 2)

{:ok, [{:matched, :nonode@nohost, 1}, {:saved, 1}]}

iex(5)> called? Enum.map([:hi, "world!"], _) = [:atom, :string]

false

iex(6)> Enum.map([:hi, "world!"], & (if is_atom(&1), do: :atom, else: :string))

[:atom, :string]

iex(7)> called? Enum.map([:hi, "world!"], _) = [:atom, :string]

true

iex(8)> called? Enum.map([:hi, "world!"], _) = [_, :atom]

false

iex(9)> called? Enum.map([:hi, "world!"], _) = [_, _]

true

iex(10)> called? Enum.map([:hi, "world!"], _)

true

iex(11)> capture some_variable <- Enum.map([:hi, "world!"], _)

[:atom, :string]

iex(12)> [:integer | some_variable]

[:integer, :atom, :string]

So, as you can see, these macros give us some nice ways to solve two of the three issues we had with Mock:

- We can assert that functions were called (passing in a real pattern match vs. needing to pass in a match-spec-like DSL)

- And we can assert that the functions were called and returned a particular value, which again can be any Elixir pattern match

- And as a bonus, you can bind whatever some function returned to a variable with my capture x <- function macro! 🤯

The remaining steps are to add a before_compile step that automatically finds invocations of called? and capture, so that the Assertions.trace/3 calls become completely transparent (it'll automatically trace the functions you care about), and to extensively test some edge cases, because we've run into a few already.

I'll also be looking into massively cleaning up these macros, since they were thrown together in literally a couple of hours of playing around with the ASTs I thought to test. Still, I hope this blog post helped as an intro to tracing on the BEAM, and also as a fun notepad of my iterative problem-solving process 🙂

Taming my Vim configuration

As a long-time Vim user, I've put a lot of time into my Vim configuration over the years. It is something that has organically grown as my editing habits and needs change over time.

Recently, when trying to figure out why certain things weren't working the way I expected, I realised that there was much in my config I either didn't need or just blatantly did not understand. I took this as an opportunity to remove all the old cruft and start my configuration file again from scratch, taking only what I absolutely needed.

My configuration file was also around 2000 lines in length. Organisation was a huge mess: related commands strewn around all over the place due to years of ad-hoc editing. During my refactor of my configuration, one key goal was also to clean this up.

When I was ranting to a colleague of mine about my configuration woes, he showed me his, and I was struck by inspiration 👼: a way of architecting Vim configuration to make it saner, more composable, and faster too.

A few key ideas I've used in my configuration are outlined below: hopefully, they'll help anyone else also looking to tame their Vim configuration.

Initial refactoring

The first thing I did when breaking apart my 2000 line configuration file was to untangle the organisational mess I'd made.

I started this by moving all the configuration related to specific ideas (e.g. tabbing: tabstop, softtabstop, expandtab) into contiguous blocks like the following:

" Cache undos in a file, disable other backup settings

set noswapfile

set nobackup

set undofile

" Better searching functionality

set showmatch

set incsearch

set hlsearch

set ignorecase

set smartcase

This let me tell at a glance what essential "modules" I was dealing with. These "modules" would be blocks of configuration concerning both related settings and configuration for certain plugins. After doing this, my Vim configuration was actually larger, but at least it was easier to read 😅

Dealing with Plugins

I personally use vim-plug for managing my Vim plugins. I like it because it needs very little boilerplate to get plugins working, and because it has hooks for lazy loading, which we'll get to later.

The only requirement to use vim-plug is to install it as per its installation instructions, followed by adding the following block to the top of your Vim configuration:

call plug#begin('~/.vim/my_plugin_directory_of_choice')

Plug 'https://github.com/some_repo/example.git'

Plug 'scrooloose/nerdtree'

call plug#end()

Most Vim plugins will have installation instructions for vim-plug (and if you're not using it, I'm sure you know how to configure this bit already anyway!), but that's pretty much it. Running :PlugInstall will fetch all of the configured plugins and download them to the path you pass to plug#begin(...). Once this is done, you're basically good to go.

Because a vim-plug block is just standard viml, I use the same "module" idea and group similar/related plugins together, as follows:

call plug#begin('MY_CONFIG_PATH/bundle')

" General stuff

Plug 'scrooloose/nerdtree'

Plug 'jistr/vim-nerdtree-tabs'

Plug 'neoclide/coc.nvim', {'branch': 'release'}

Plug 'easymotion/vim-easymotion'

Plug 'majutsushi/tagbar'

Plug 'ludovicchabant/vim-gutentags'

Plug 'tpope/vim-surround'

Plug 'tpope/vim-repeat'

Plug 'tpope/vim-eunuch'

Plug 'machakann/vim-swap'

" Setup fzf

Plug '/usr/local/opt/fzf'

Plug '~/.fzf'

Plug 'junegunn/fzf.vim'

" Git plugins

Plug 'Xuyuanp/nerdtree-git-plugin'

Plug 'airblade/vim-gitgutter'

Plug 'tpope/vim-fugitive'

" Erlang plugins

Plug 'vim-erlang/vim-erlang-tags' , { 'for': 'erlang' }

Plug 'vim-erlang/vim-erlang-omnicomplete' , { 'for': 'erlang' }

Plug 'vim-erlang/vim-erlang-compiler' , { 'for': 'erlang' }

Plug 'vim-erlang/vim-erlang-runtime' , { 'for': 'erlang' }

" Elixir plugins

Plug 'elixir-editors/vim-elixir' , { 'for': 'elixir' }

Plug 'mhinz/vim-mix-format' , { 'for': 'elixir' }

" Handlebar Templates

Plug 'mustache/vim-mustache-handlebars' , { 'for': 'html' }

" Bunch of nice themes

Plug 'flazz/vim-colorschemes'

call plug#end()

Here we can see another killer feature of vim-plug: by specifying options such as { 'for': 'erlang' }, I'm telling vim-plug to load that particular plugin only if the filetype of the currently opened file is erlang. A 2000 line config file isn't necessarily huge by hardcore Vim standards, but even at 2000 lines, my Vim configuration took a second to load, which was distracting. You can use many different hooks to optimise your vim-plug loading time, so I suggest you consult their README for more information; for what it's worth, I get by with just this { 'for': $filetype } directive.
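As a rough sketch of one of those other hooks (not taken from the configuration above): vim-plug's README also documents an 'on' option, which defers loading a plugin until one of the listed commands is first run. The plugin directory below is only vim-plug's conventional default and is an assumption here, as is the choice of plugins to lazy-load.

```vim
" Sketch only: '~/.vim/plugged' is an assumed plugin directory
call plug#begin('~/.vim/plugged')

" Loaded lazily, the first time either command is run
Plug 'scrooloose/nerdtree', { 'on': ['NERDTreeToggle', 'NERDTreeFind'] }

" Loaded lazily, when a buffer with filetype erlang is opened
Plug 'vim-erlang/vim-erlang-runtime', { 'for': 'erlang' }

call plug#end()
```

A mapping such as nnoremap <C-n> :NERDTreeToggle<CR> should keep working with this setup, since vim-plug defines a placeholder command that loads the plugin on first use.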

Turning comments into real "modules"

With everything now well organised, I did a second refactoring pass over my configuration. Since all my related commands were already in logical blocks, I broke them out further into their own files, so that I wouldn't need to hold the whole configuration in my head whenever I wanted to change a trivial setting.

The cool thing is that viml is a Turing complete programming language, able to do pretty much anything a normal programming language can do. One thing I'm abusing is the idea of automatically sourcing files from elsewhere, essentially breaking out my logically separated comments into real, logical modules.

I do this via a very naive for ... in ... loop as follows:

for config in split(glob('MY_CONFIG_PATH/config/*.vim'), '\n')

exe 'source' config

endfor

This basically tells Vim to look for all .vim files inside my configured config/ directory and source them. Therefore, I end up organising my configuration modules as follows:

MY_CONFIG_PATH/config

├── coc_config.vim

├── ctag_config.vim

├── easymotion_config.vim

├── editor_config.vim

├── elixir_config.vim

├── erlang_config.vim

├── fzf_config.vim

├── jsonc_config.vim

└── nerdtree_config.vim

In the future, as this grows, I might even split this up to group modules into different domains (e.g. MY_CONFIG_PATH/plugins/*.vim versus MY_CONFIG_PATH/languages/*.vim versus plain old MY_CONFIG_PATH/tab_settings.vim). In the meantime, however, this level of encapsulation is more than enough: editor_config.vim is where I keep all my editor settings, such as tab stop settings; most of the other files are plugin configuration (all my settings related to, say, fzf); and files such as elixir_config.vim hold configuration that applies when I'm editing Elixir files specifically.
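A minimal sketch of what that split could look like, assuming hypothetical plugins/ and languages/ subdirectories under the same MY_CONFIG_PATH placeholder used above. It relies on the optional third argument of glob() (available in reasonably recent versions of Vim) to get a List back directly, and on fnameescape() to guard against odd characters in file names.

```vim
" Hypothetical layout: MY_CONFIG_PATH/plugins/*.vim and MY_CONFIG_PATH/languages/*.vim
for dir in ['plugins', 'languages']
  " glob(pattern, 0, 1) returns a List of matches instead of a newline-joined String
  for config in glob('MY_CONFIG_PATH/' . dir . '/*.vim', 0, 1)
    execute 'source' fnameescape(config)
  endfor
endfor
```

Listing the directories explicitly also fixes the order in which the groups are sourced, which matters if language settings are meant to override plugin defaults.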

Plugin Configuration

My nerdtree_config.vim plugin configuration file is listed below, but essentially all of these files (except language configuration files) look the same:

" NERDTREE toggle (normal mode)

nnoremap <C-n> :NERDTreeToggle<CR>

" Close VIM if NERDTREE is only thing open

autocmd bufenter * if (winnr("$") == 1 && exists("b:NERDTree") && b:NERDTree.isTabTree()) | q | endif

" Show hidden files by default

let NERDTreeShowHidden=1

" If more than one window and previous buffer was NERDTree, go back to it.

autocmd BufEnter * if bufname('#') =~# "^NERD_tree_" && winnr('$') > 1 | b# | endif

" Selecting a file closes NERDTREE

let g:NERDTreeQuitOnOpen = 1

" Style

let NERDTreeMinimalUI = 1

let NERDTreeDirArrows = 1

Since the plugins can be set to be lazily loaded, I don't bother with any further tinkering with these files. They're literally just plain viml that is sourced by my top-level init.vim or .vimrc.

Language configuration

Language configuration is likewise simple but a little different. I actually end up writing custom viml functions for overriding configuration that might be set elsewhere.

As an example, I've included a minimal copy of my elixir_config.vim file below:

function! LoadElixirSettings()

set tabstop=2

set softtabstop=2

set shiftwidth=2

set textwidth=80

set expandtab

set autoindent

set fileformat=unix

endfunction

au BufNewFile,BufRead *.ex,*.exs,*.eex call LoadElixirSettings()

" Mix format on save

let g:mix_format_on_save = 1

As you can see, I basically do two different things:

-

I set a few options specific to plugins that might load for filetypes marked