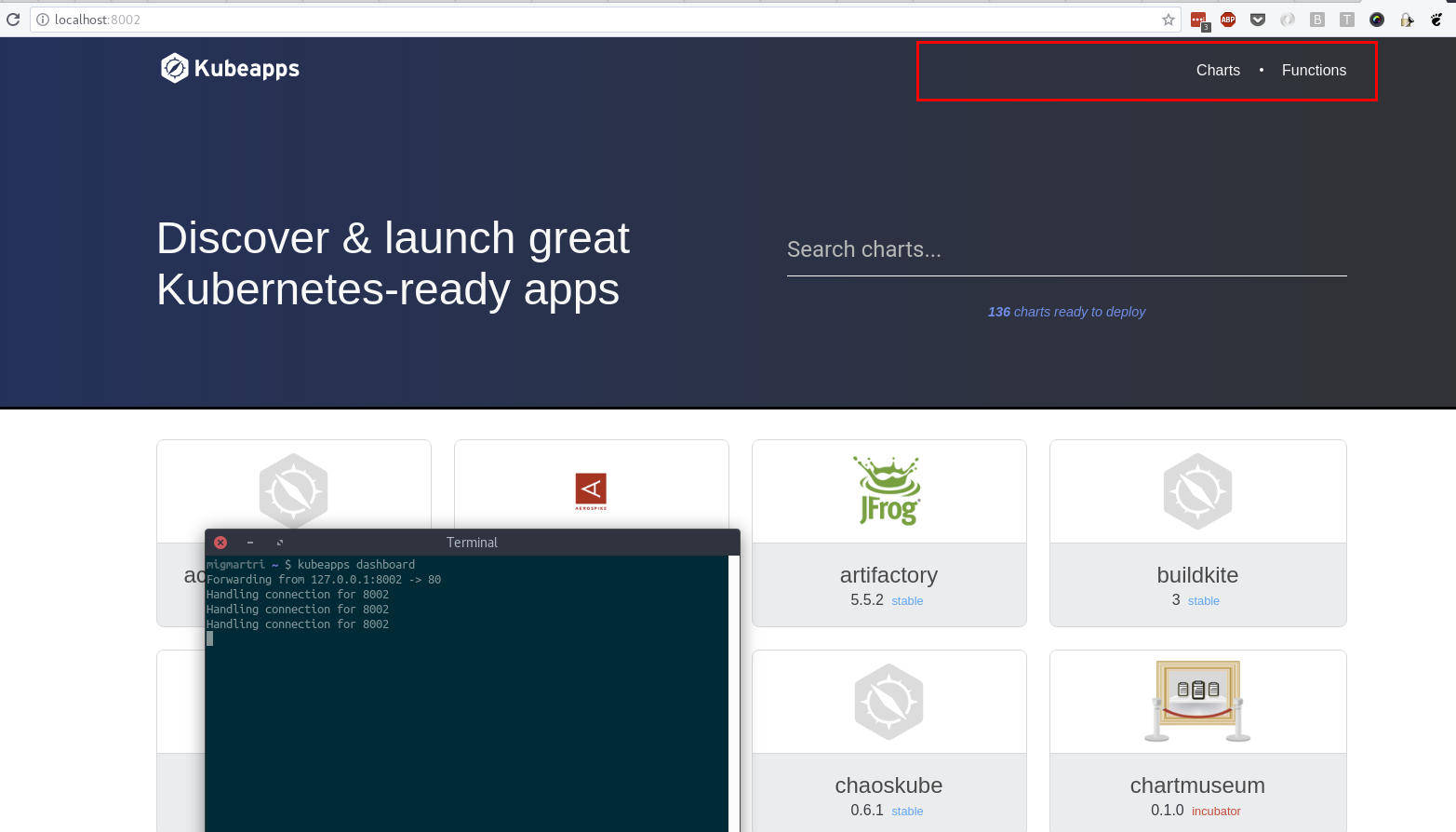

Kubeapps is an in-cluster, web-based application that, once installed, lets users deploy, manage, and upgrade applications on a Kubernetes cluster.

With Kubeapps you can:

- Browse and deploy packages such as Helm charts, Flux packages, or Carvel packages from public or private repositories (including VMware Marketplace™ and the Bitnami Application Catalog)

- Customize deployments through an intuitive user interface

- Browse, upgrade, and delete applications installed in the cluster

- Browse and deploy Kubernetes Operators

- Secure access to Kubeapps with a standalone OAuth2/OIDC provider or with Pinniped

- Enforce authorization through Kubernetes Role-Based Access Control (RBAC)
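Because authorization is based on Kubernetes RBAC, what a user can do through Kubeapps is governed by the roles bound to that user in the cluster. The script below is a minimal sketch, not the project's documented setup: it writes a RoleBinding that grants a hypothetical user (`alice@example.com`) the built-in `edit` ClusterRole in a hypothetical `demo` namespace. The username, namespace, binding name, and output file name are all assumptions. The username must match however your authentication provider identifies the user, and Kubeapps may need additional permissions beyond `edit`, so check the Kubeapps access-control documentation before relying on it.

```shell
#!/bin/sh
set -eu

# Hypothetical values (assumptions): override them with the username your
# OIDC provider reports and the namespace the user should deploy into.
KUBEAPPS_USER="${KUBEAPPS_USER:-alice@example.com}"
TARGET_NAMESPACE="${TARGET_NAMESPACE:-demo}"

# Write a namespaced RoleBinding that references the built-in "edit"
# ClusterRole, so the grant applies only inside TARGET_NAMESPACE.
cat > kubeapps-rbac.yaml <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubeapps-user-edit
  namespace: ${TARGET_NAMESPACE}
subjects:
  - kind: User
    name: ${KUBEAPPS_USER}
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: edit
  apiGroup: rbac.authorization.k8s.io
EOF

# The script only writes the manifest; applying it is left to the operator.
echo "Wrote kubeapps-rbac.yaml; apply it with: kubectl apply -f kubeapps-rbac.yaml"
```

Run the script, inspect the generated file, then apply it with `kubectl apply -f kubeapps-rbac.yaml`. Binding a ClusterRole through a namespaced RoleBinding (rather than a ClusterRoleBinding) limits the permissions to that single namespace.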

Note: Kubeapps 2.0 and later support only Helm 3. Although only the Helm 3 API is supported, charts written for Helm 2 still work in most cases.

Installing Kubeapps is as simple as:

    helm repo add bitnami https://charts.bitnami.com/bitnami
    kubectl create namespace kubeapps
    helm install kubeapps --namespace kubeapps bitnami/kubeapps

See the Getting Started Guide for detailed instructions on how to install and use Kubeapps.

Kubeapps is deployed using the official Bitnami Kubeapps chart from the separate Bitnami charts repository. Although the Kubeapps repository also contains a chart, that chart is intended for development purposes only.

Complete documentation is available in the Kubeapps documentation section, including tutorials, how-to guides, and reference material for configuring and developing Kubeapps.

To get started with Kubeapps, refer to:

- Getting started guide

- Detailed installation instructions

- Kubeapps user guide for managing the applications running in your cluster

- Kubeapps FAQs

See how to deploy and configure Kubeapps on VMware Tanzu™ Kubernetes Grid™.

If you encounter issues, review the troubleshooting docs, check our project board, file an issue, or talk to the Kubeapps maintainers in the #kubeapps channel on the Kubernetes Slack server.

- Sign up for the Kubernetes Slack org.

- Review the FAQs section in the Kubeapps chart README.

If you are ready to jump in and test, add code, or help with documentation, follow the instructions in the start contributing documentation to set up Kubeapps for development.

Check the list of releases to keep up with the latest features and changes.