Code and datasets for the ICLR2023 paper "Multimodal Analogical Reasoning over Knowledge Graphs"

- ❗New: We provide a Huggingface Demo at https://huggingface.co/spaces/zjunlp/MKG_Analogy, have fun!

- ❗New: We have released the checkpoints on Google Drive for reproducibility.

- ❗New: We have released the presentation slides at ICLR2023_MKG_Analogy.pdf.

In this work, we propose a new task of multimodal analogical reasoning over knowledge graphs. An overview of the Multimodal Analogical Reasoning task is shown below:

We provide a knowledge graph to support and further divide the task into single and blended patterns. Note that the relation marked by dashed arrows (

pip install -r requirements.txt

To support the multimodal analogical reasoning task, we collect a multimodal knowledge graph dataset, MarKG, and a Multimodal Analogical ReaSoning dataset, MARS. A visual outline of the data collection is shown in the following figure:

We collect the datasets in the following steps:

- Collect Analogy Entities and Relations

- Link to Wikidata and Retrieve Neighbors

- Acquire and Validate Images

- Sample Analogical Reasoning Data

The statistics of the two datasets are shown in the following figures:

We put the text data under MarT/dataset/. The image data can be downloaded from Google Drive or Baidu Pan (TeraBox) (code: 7hoc) and should be placed under MarT/dataset/MARS/images. Please refer to MarT for details.

The expected structure of files is:

MKG_Analogy

|-- M-KGE # multimodal knowledge representation methods

| |-- IKRL_TransAE

| |-- RSME

|-- MarT

| |-- data # data process functions

| |-- dataset

| | |-- MarKG # knowledge graph data

| | |-- MARS # analogical reasoning data

| |-- lit_models # pytorch_lightning models

| |-- models # source code of models

| |-- scripts # running scripts

| |-- tools # tool function

| |-- main.py # main function

|-- resources # image resources

|-- requirements.txt

|-- README.md
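Before running any script, it can help to confirm that the layout above is in place, including the separately downloaded images. The following is a minimal sketch, not part of the repository: the path list is copied from the tree above, and the helper name `find_missing` is hypothetical.

```python
from pathlib import Path

# Paths taken from the layout listed above, relative to the repository root.
EXPECTED_PATHS = [
    "M-KGE/IKRL_TransAE",
    "M-KGE/RSME",
    "MarT/data",
    "MarT/dataset/MarKG",
    "MarT/dataset/MARS",
    "MarT/dataset/MARS/images",  # image data, downloaded separately
    "MarT/lit_models",
    "MarT/models",
    "MarT/scripts",
    "MarT/tools",
    "MarT/main.py",
    "requirements.txt",
]


def find_missing(root):
    """Return the expected paths that do not exist under `root`."""
    root = Path(root)
    return [p for p in EXPECTED_PATHS if not (root / p).exists()]


if __name__ == "__main__":
    missing = find_missing(".")
    if missing:
        print("Missing paths:")
        for p in missing:
            print("  " + p)
    else:
        print("Layout looks complete.")
```

Run it from the MKG_Analogy root; an empty result means every listed directory and file was found.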

We select some baseline methods to establish the initial benchmark results on MARS, including multimodal knowledge representation methods (IKRL, TransAE, RSME), pre-trained vision-language models (VisualBERT, ViLBERT, ViLT, FLAVA) and a multimodal knowledge graph completion method (MKGformer).

In addition, we follow structure-mapping theory and treat Abduction-Mapping-Induction as explicit pipeline steps for the multimodal knowledge representation methods. For transformer-based methods, we further propose MarT, a novel framework that implicitly combines these three steps to accomplish the multimodal analogical reasoning task end-to-end, which avoids error propagation during analogical reasoning. An overview of the baseline methods is shown in the figure above.
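To make the explicit Abduction-Mapping-Induction pipeline concrete, here is a toy sketch in plain Python. It is not the repository's implementation: the 2-D embeddings, entity and relation names, and function names are all invented for illustration, and a TransE-style distance is assumed as the scoring function. The real models learn their embeddings and also use image features.

```python
import math

# Toy 2-D embeddings; every name and value here is made up for illustration.
ENTITIES = {
    "paris": [1.0, 0.0],
    "france": [1.0, 1.0],
    "tokyo": [3.0, 0.0],
    "japan": [3.0, 1.0],
    "sushi": [3.0, -1.0],
}
RELATIONS = {
    "capital_of": [0.0, 1.0],
    "famous_food": [0.0, -1.0],
}


def transe_distance(h, r, t):
    """TransE-style distance ||h + r - t||; lower means more plausible."""
    return math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))


def abduct_relation(head, tail):
    """Abduction: pick the relation that best explains the pair (head, tail)."""
    return min(
        RELATIONS,
        key=lambda r: transe_distance(ENTITIES[head], RELATIONS[r], ENTITIES[tail]),
    )


def induce_answer(question, relation):
    """Induction: pick the entity that best completes (question, relation, ?)."""
    candidates = [e for e in ENTITIES if e != question]
    return min(
        candidates,
        key=lambda e: transe_distance(ENTITIES[question], RELATIONS[relation], ENTITIES[e]),
    )


def analogical_reasoning(example_head, example_tail, question):
    """Solve (example_head, example_tail) : (question, ?) in three explicit steps."""
    relation = abduct_relation(example_head, example_tail)  # Abduction
    # Mapping: carry the abduced relation over to the question entity.
    answer = induce_answer(question, relation)              # Induction
    return relation, answer


print(analogical_reasoning("paris", "france", "tokyo"))  # ('capital_of', 'japan')
```

Because each step feeds its hard decision to the next, a wrong relation in the abduction step cannot be recovered later. This is the error propagation that an end-to-end approach is meant to avoid.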

1. IKRL

We reproduce the IKRL model via the TransAE framework. To evaluate IKRL, run the following code:

cd M-KGE/IKRL_TransAE

python IKRL.py

You can choose pre-train/fine-tune and TransE/ANALOGY by modifying the finetune and analogy parameters in IKRL.py, respectively.

2. TransAE

To evaluate TransAE, run the following code:

cd M-KGE/IKRL_TransAE

python TransAE.py

You can choose pre-train/fine-tune and TransE/ANALOGY by modifying the finetune and analogy parameters in TransAE.py, respectively.

3. RSME

We only provide part of the data for RSME. To evaluate RSME, you need to generate the full data with the following scripts:

cd M-KGE/RSME

python image_encoder.py # -> analogy_vit_best_img_vec.pickle

python utils.py # -> img_vec_id_analogy_vit.pickle

First, pre-train the models over MarKG:

bash run.sh

Then modify the --checkpoint parameter and fine-tune the models on MARS:

bash run_finetune.sh

For more training details about the above models, please refer to their official repositories.

We leverage the MarT framework for transformer-based models. MarT contains two steps: pre-train and fine-tune.

To train the models faster, we encode the image data in advance with this script (note that the encoded data is about 7 GB):

cd MarT

python tools/encode_images_data.py

Taking MKGformer as an example, first pre-train the model with the following script:

bash scripts/run_pretrain_mkgformer.sh

After pre-training, fine-tune the model with the following script:

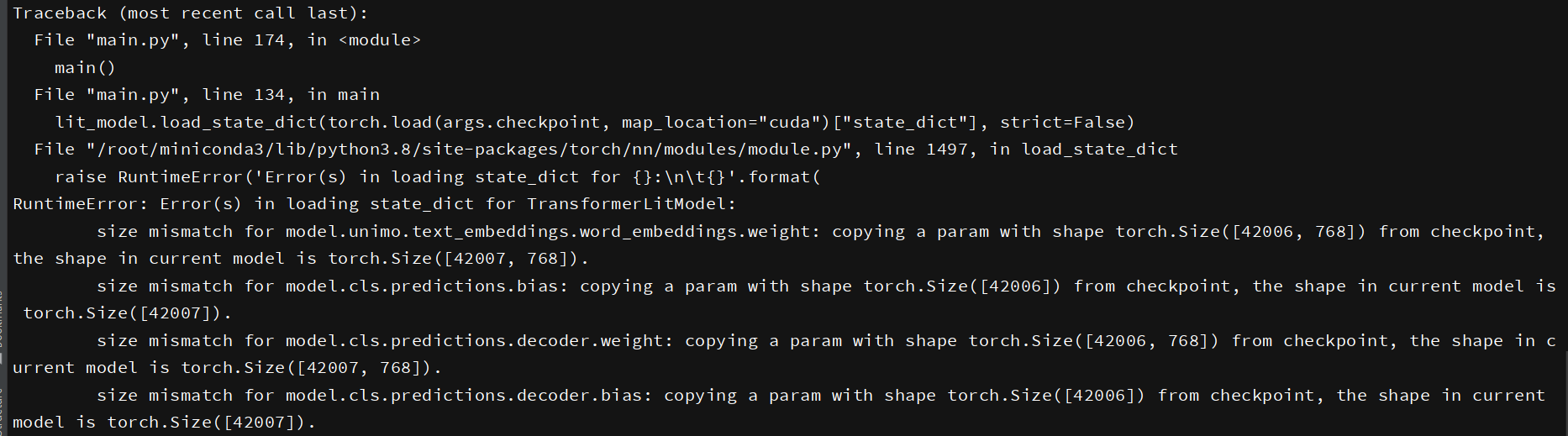

bash scripts/run_finetune_mkgformer.sh

🍓 We provide the best checkpoints of the transformer-based models from the fine-tuning and pre-training phases at this Google Drive. Download them and add --only_test in scripts/run_finetune_xxx.sh to run testing only.
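The MarT steps above (encode images, pre-train, fine-tune) must run in that order from the MarT directory. The sketch below lists them and can optionally execute them. It is a convenience sketch, not part of the repository: the helper names are hypothetical, and it assumes that the scripts for models other than mkgformer follow the same `run_pretrain_xxx.sh` / `run_finetune_xxx.sh` naming.

```python
import shlex
import subprocess


def mart_commands(model="mkgformer"):
    """Return the MarT steps in order as (working_dir, command) pairs.

    Script names for models other than "mkgformer" are an assumption
    based on the run_finetune_xxx.sh naming pattern.
    """
    return [
        ("MarT", "python tools/encode_images_data.py"),
        ("MarT", f"bash scripts/run_pretrain_{model}.sh"),
        ("MarT", f"bash scripts/run_finetune_{model}.sh"),
    ]


def run_all(model="mkgformer", dry_run=True):
    """Print each step; execute it only when dry_run is False."""
    for workdir, command in mart_commands(model):
        print(f"(cd {workdir}) $ {command}")
        if not dry_run:
            # check=True stops the sequence at the first failing step.
            subprocess.run(shlex.split(command), cwd=workdir, check=True)


if __name__ == "__main__":
    run_all()  # dry run: only prints the three steps
```

Call `run_all(dry_run=False)` from the repository root to actually execute the steps. Remember to set the checkpoint path in the fine-tuning script after pre-training finishes.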

If you use or extend our work, please cite the paper as follows:

@inproceedings{zhang2023multimodal,
  title={Multimodal Analogical Reasoning over Knowledge Graphs},
  author={Ningyu Zhang and Lei Li and Xiang Chen and Xiaozhuan Liang and Shumin Deng and Huajun Chen},
  booktitle={The Eleventh International Conference on Learning Representations},
  year={2023},
  url={https://openreview.net/forum?id=NRHajbzg8y0P}
}