The InsightFace project is mainly maintained by Jia Guo and Jiankang Deng.

For the full list of main contributors, please check contributing.

The code of InsightFace is released under the MIT License. There is no limitation on either academic or commercial usage.

The training data containing the annotation (and the models trained with these data) are available for non-commercial research purposes only.

Models downloaded manually from our GitHub repo and models downloaded automatically through our Python library both follow the above license policy (non-commercial research purposes only).

2024-04-17: Monocular Identity-Conditioned Facial Reflectance Reconstruction accepted by CVPR-2024.

2023-08-08: We released the implementation of Generalizing Gaze Estimation with Weak-Supervision from Synthetic Views at reconstruction/gaze.

2023-05-03: We have launched the ongoing version of the wild face anti-spoofing challenge. See details here.

2023-04-01: We moved the swapping demo to a Discord bot, which supports editing Midjourney-generated images; see details at web-demos/swapping_discord.

2023-02-13: We launched a large-scale in-the-wild face anti-spoofing challenge at the CVPR23 Workshop; see details at challenges/cvpr23-fas-wild.

2022-11-28: Single-line code for facial identity swapping in our Python package ver 0.7; please check the example here.

2022-10-28: The MFR-Ongoing website has been refactored; please create issues if you find any bugs.

2022-09-22: Now we have web-demos: face-localization, face-recognition, and face-swapping.

2022-08-12: We achieved 1st place in the Perspective Projection Based Monocular 3D Face Reconstruction Challenge of the ECCV-2022 WCPA Workshop (paper and code).

2022-03-30: Partial FC accepted by CVPR-2022.

2022-02-23: SCRFD accepted by ICLR-2022.

2021-11-30: MFR-Ongoing challenge launched (same as IFRT), which is an extended version of iccv21-mfr.

2021-10-29: We achieved 1st place on the VISA track of NIST-FRVT 1:1 by using Partial FC (Xiang An, Jiankang Deng, Jia Guo).

2021-10-11: Leaderboard of ICCV21 - Masked Face Recognition Challenge released. Video: YouTube, Bilibili.

2021-06-05: We launched a Masked Face Recognition Challenge & Workshop at ICCV 2021.

InsightFace is an open source 2D&3D deep face analysis toolbox, mainly based on PyTorch and MXNet.

Please check our website for details.

The master branch works with PyTorch 1.6+ and/or MXNet=1.6-1.8, with Python 3.x.

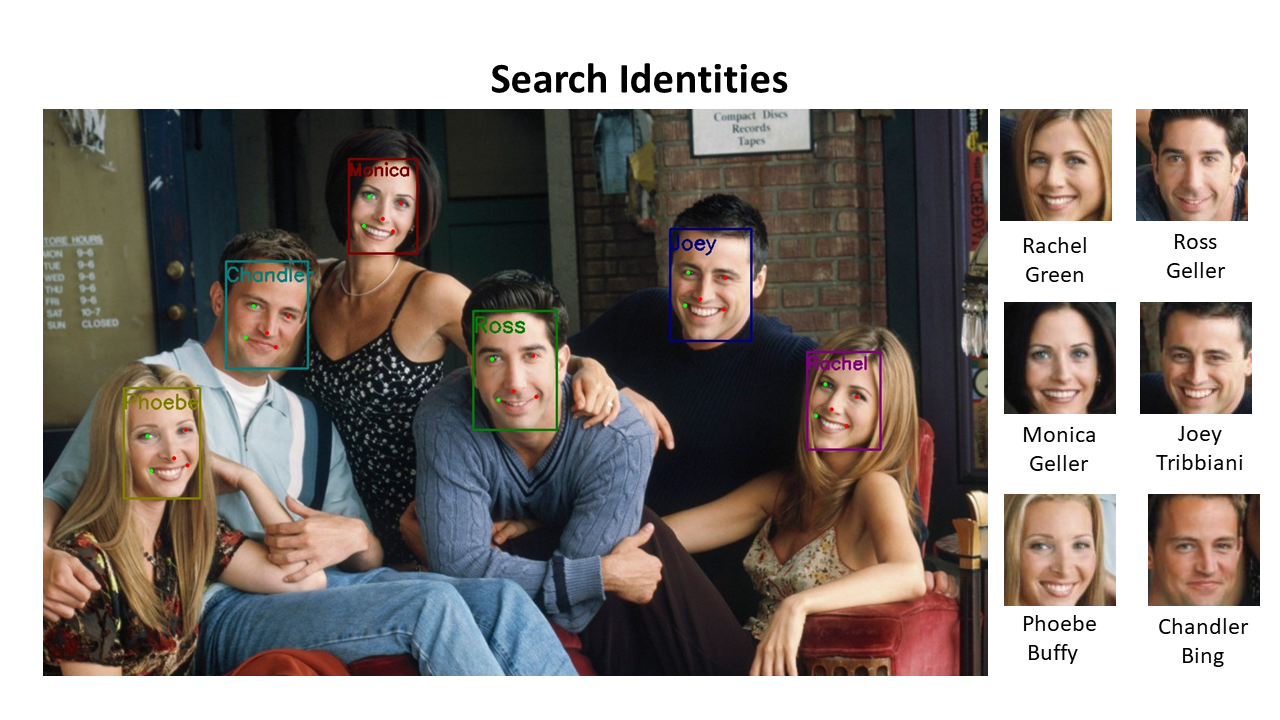

InsightFace efficiently implements a rich variety of state-of-the-art algorithms for face recognition, face detection and face alignment, optimized for both training and deployment.

Please start with our Python package for testing detection, recognition and alignment models on input images.
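Recognition models map each face to an embedding vector, and two faces are usually compared through the cosine similarity of their embeddings. The following is a minimal, dependency-free sketch of that comparison, not code from the Python package; the `same_identity` helper and its 0.3 threshold are hypothetical placeholders, and a real threshold should be tuned on validation data for the model in use.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero vectors")
    return dot / (norm_a * norm_b)


def same_identity(a: Sequence[float], b: Sequence[float], threshold: float = 0.3) -> bool:
    """Hypothetical decision rule: same person if similarity >= threshold.

    The 0.3 default is an illustrative placeholder, not a value from InsightFace.
    """
    return cosine_similarity(a, b) >= threshold


# Toy 3-D vectors standing in for real face embeddings.
probe = [0.6, 0.8, 0.0]
gallery = [0.59, 0.79, 0.1]
print(round(cosine_similarity(probe, gallery), 3), same_identity(probe, gallery))
```

Because the score depends only on the angle between vectors, embeddings of any scale can be compared; this is also why the recognition losses listed below operate on normalized features.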

Please click the image to watch the YouTube video. For Bilibili users, click here.

The page on the InsightFace website also describes all supported projects in InsightFace.

You may also be interested in some challenges held by InsightFace.

In this module, we provide training data, network settings and loss designs for deep face recognition.

The supported methods are as follows:

- ArcFace_mxnet (CVPR'2019)

- ArcFace_torch (CVPR'2019)

- SubCenter ArcFace (ECCV'2020)

- PartialFC_mxnet (CVPR'2022)

- PartialFC_torch (CVPR'2022)

- VPL (CVPR'2021)

- Arcface_oneflow

- ArcFace_Paddle (CVPR'2019)
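Several of the methods above share the ArcFace idea: normalize features and class centers, then add an angular margin m to the angle between a sample and its own class center before scaling by s and applying softmax cross-entropy. The pure-Python sketch below illustrates that loss for a single sample, with an optional Sub-center ArcFace pooling step (each class keeps K sub-centers and uses the closest one). It is a teaching sketch, not the repository's training code; the defaults s = 64 and m = 0.5 are illustrative, and practical implementations also guard the case θ + m > π, which is omitted here.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def _unit(v: Vector) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def _cosine(a: Vector, b: Vector) -> float:
    ua, ub = _unit(a), _unit(b)
    # Clamp so rounding never pushes the value outside acos's domain.
    return max(-1.0, min(1.0, sum(x * y for x, y in zip(ua, ub))))


def arcface_logits(embedding: Vector,
                   class_centers: Sequence[Sequence[Vector]],
                   label: int,
                   s: float = 64.0,
                   m: float = 0.5) -> List[float]:
    """Scaled cosine logits with an additive angular margin on the target class.

    class_centers[j] holds the K sub-center vectors of class j: K = 1 is plain
    ArcFace, K > 1 pools each class by its closest sub-center (Sub-center ArcFace).
    """
    logits = []
    for j, subcenters in enumerate(class_centers):
        cos_theta = max(_cosine(embedding, c) for c in subcenters)
        if j == label:
            # Additive angular margin: cos(theta) becomes cos(theta + m).
            cos_theta = math.cos(math.acos(cos_theta) + m)
        logits.append(s * cos_theta)
    return logits


def softmax_cross_entropy(logits: Sequence[float], label: int) -> float:
    """Numerically stable -log softmax(logits)[label]."""
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum_exp - logits[label]
```

Since the margin only shrinks the target logit, the loss stays higher until the embedding sits well inside its class's angular region, which is what pushes classes apart on the hypersphere.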

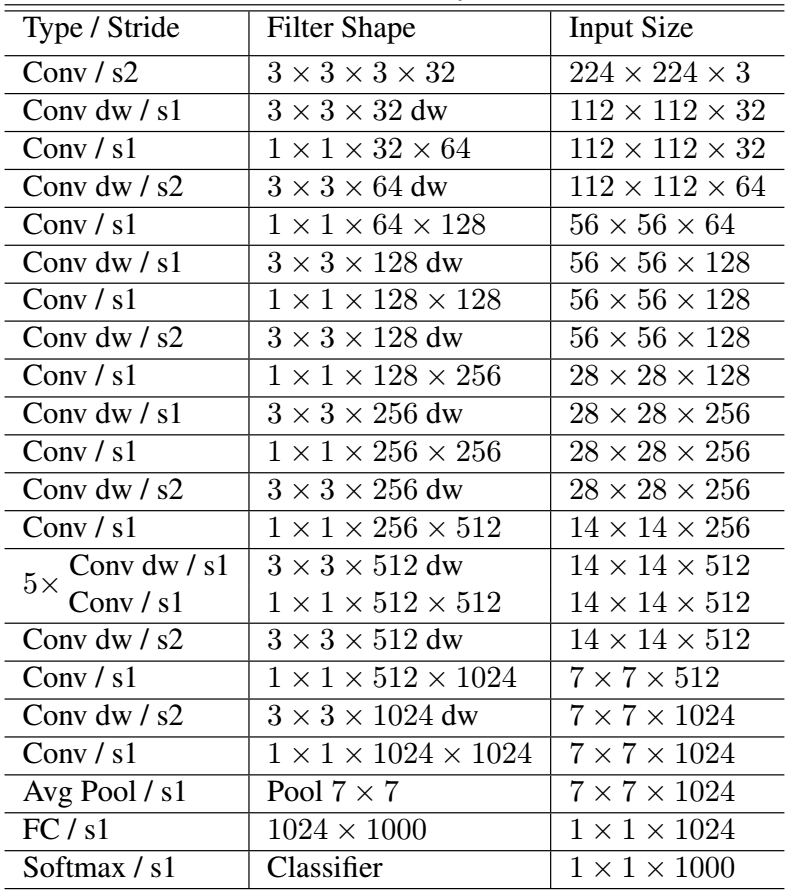

Commonly used network backbones are included in most of the methods, such as IResNet, MobilefaceNet, MobileNet, InceptionResNet_v2, DenseNet, etc.

The training data includes, but is not limited to, the cleaned MS1M, VGG2 and CASIA-Webface datasets, which are already packed in MXNet binary format. Please check the dataset page for details.

We provide standard IJB and Megaface evaluation pipelines in evaluation.
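Verification benchmarks such as IJB are commonly reported as the true accept rate (TAR) at a fixed false accept rate (FAR). The sketch below shows one way to compute that metric from genuine-pair and impostor-pair similarity scores; it is a simplified illustration, not the evaluation pipeline itself, and it assumes that a higher score means "more likely the same person".

```python
from typing import Sequence, Tuple


def tar_at_far(genuine: Sequence[float],
               impostor: Sequence[float],
               far: float) -> Tuple[float, float]:
    """Return (threshold, TAR) for a target false accept rate.

    A pair is accepted when its score is strictly above the threshold, so at
    most floor(far * len(impostor)) impostor pairs are accepted.
    """
    if not genuine or not impostor:
        raise ValueError("need at least one genuine and one impostor score")
    if not 0.0 <= far <= 1.0:
        raise ValueError("far must lie in [0, 1]")
    ranked = sorted(impostor, reverse=True)
    allowed = int(far * len(ranked))  # number of impostor pairs we may accept
    threshold = ranked[allowed] if allowed < len(ranked) else float("-inf")
    tar = sum(1 for g in genuine if g > threshold) / len(genuine)
    return threshold, tar
```

Low FAR targets such as 1e-4 need many impostor pairs for the threshold to be meaningful, which is why benchmark protocols use large pair lists.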

Please check Model-Zoo for more pretrained models.

Third-party re-implementations of ArcFace:

- TensorFlow: InsightFace_TF

- TensorFlow: tf-insightface

- TensorFlow: insightface

- PyTorch: InsightFace_Pytorch

- PyTorch: arcface-pytorch

- Caffe: arcface-caffe

- Caffe: CombinedMargin-caffe

- TensorFlow: InsightFace-tensorflow

- TensorRT: wang-xinyu/tensorrtx

- TensorRT: InsightFace-REST

- ONNXRuntime C++: ArcFace-ONNXRuntime

- ONNXRuntime Go: arcface-go

- MNN: ArcFace-MNN

- TNN: ArcFace-TNN

- NCNN: ArcFace-NCNN

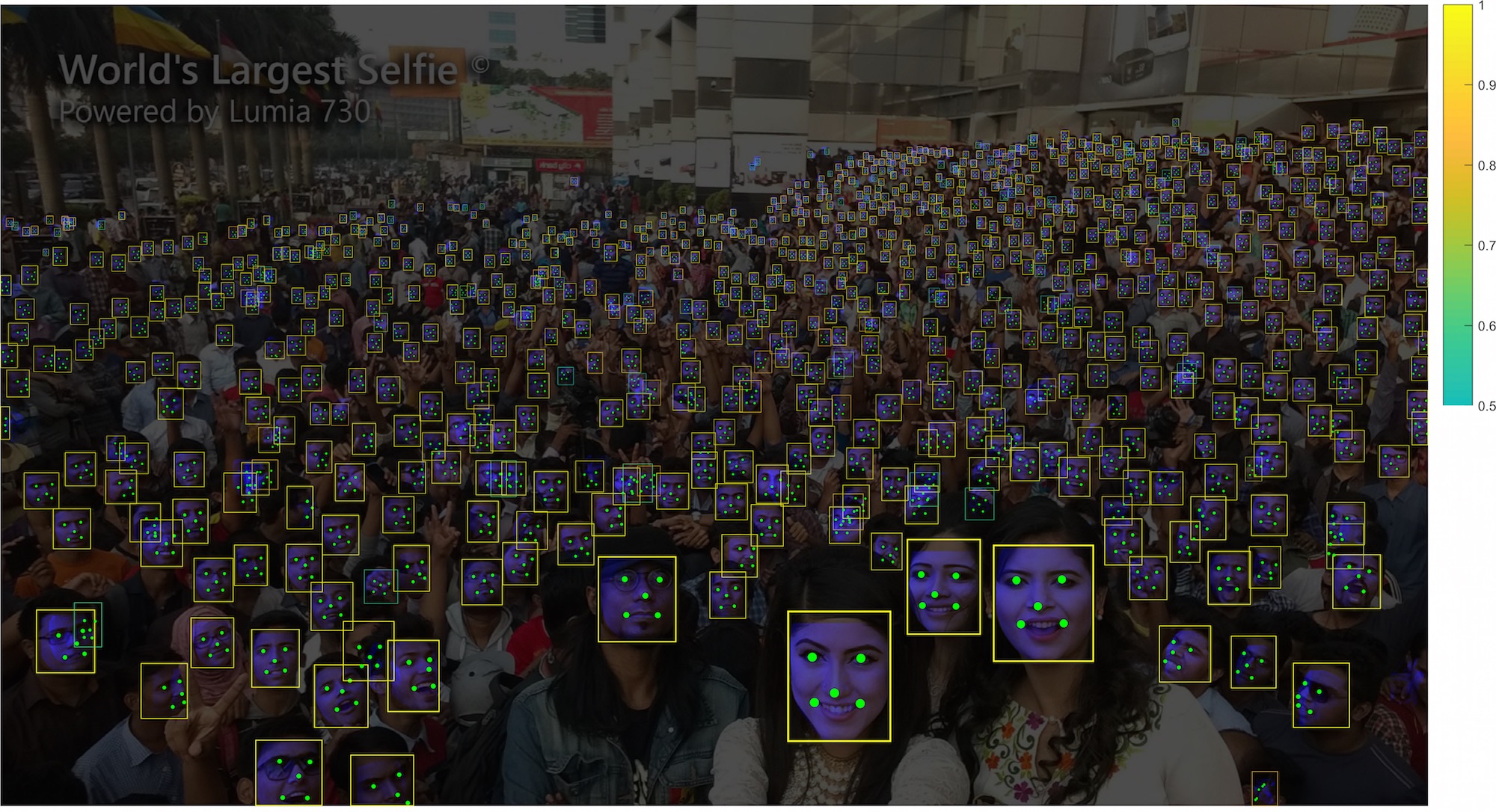

In this module, we provide training data with annotation, network settings and loss designs for face detection training, evaluation and inference.

The supported methods are as follows:

RetinaFace is a practical single-stage face detector that was accepted by CVPR 2020. We provide training code, training dataset, pretrained models and evaluation scripts.
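Single-stage detectors such as RetinaFace predict many overlapping candidate boxes densely over the image, so their raw output is commonly filtered with non-maximum suppression (NMS). Below is a generic greedy NMS sketch in pure Python; it is a textbook version rather than the repository's implementation, and the 0.4 IoU threshold is only an illustrative default.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_thresh: float = 0.4) -> List[int]:
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop remaining candidates that overlap the kept box too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thresh]
    return keep
```

This quadratic version is fine for illustration; production detectors typically run a vectorized equivalent.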

SCRFD is an efficient, high-accuracy face detection approach, initially described on arXiv. We provide an easy-to-use pipeline to train high-efficiency face detectors with NAS support.
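Anchor-point detectors often regress, for each feature-map location, the distances from that point to the four sides of a face box, expressed in units of the level's stride. The sketch below decodes such outputs for one feature level. It assumes an FCOS-style (left, top, right, bottom) encoding with anchor points at integer multiples of the stride; treat it as an illustration of this kind of decoding rather than a description of SCRFD's exact head, and note that all function names here are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in input-image pixels


def anchor_centers(feat_h: int, feat_w: int, stride: int) -> List[Tuple[float, float]]:
    """One anchor point per feature-map cell at (col * stride, row * stride).

    Some codebases add a half-stride offset instead; this convention is assumed.
    """
    return [(float(col * stride), float(row * stride))
            for row in range(feat_h) for col in range(feat_w)]


def distance2bbox(center: Tuple[float, float],
                  dist: Sequence[float],
                  stride: int) -> Box:
    """Decode (left, top, right, bottom) distances, given in stride units, into a box."""
    cx, cy = center
    left, top, right, bottom = (d * stride for d in dist)
    return (cx - left, cy - top, cx + right, cy + bottom)


def decode_level(scores: Sequence[float],
                 dists: Sequence[Sequence[float]],
                 feat_h: int, feat_w: int, stride: int,
                 score_thresh: float = 0.5) -> List[Tuple[Box, float]]:
    """Keep confident predictions from one feature level (row-major order)."""
    centers = anchor_centers(feat_h, feat_w, stride)
    return [(distance2bbox(c, d, stride), s)
            for c, d, s in zip(centers, dists, scores) if s >= score_thresh]
```

Boxes decoded from every stride level would then be merged and filtered with NMS.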

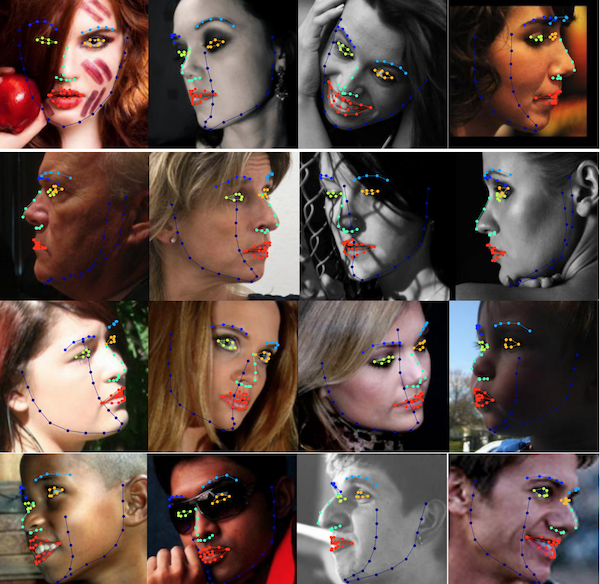

In this module, we provide datasets and training/inference pipelines for face alignment.

Supported methods:

SDUNets is a heatmap-based method accepted at BMVC.
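Heatmap-based alignment predicts one score map per landmark, and the landmark position is read off the peak of its map. The sketch below shows the simplest decoding, a plain argmax scaled from heatmap resolution back to input resolution. It is a generic illustration, not the SDUNets code; real pipelines often add sub-pixel refinement, and whether to add a half-cell offset when rescaling is a convention that varies between implementations.

```python
from typing import List, Sequence, Tuple

Heatmap = Sequence[Sequence[float]]  # rows of scores, indexed heatmap[y][x]


def heatmap_argmax(heatmap: Heatmap) -> Tuple[int, int, float]:
    """Return (x, y, score) of the highest-scoring cell."""
    best = (0, 0, float("-inf"))
    for y, row in enumerate(heatmap):
        for x, value in enumerate(row):
            if value > best[2]:
                best = (x, y, value)
    return best


def heatmaps_to_landmarks(heatmaps: Sequence[Heatmap],
                          input_w: int, input_h: int) -> List[Tuple[float, float]]:
    """Map each landmark's heatmap peak back to input-image pixel coordinates."""
    points = []
    for hm in heatmaps:
        x, y, _ = heatmap_argmax(hm)
        scale_x = input_w / len(hm[0])
        scale_y = input_h / len(hm)
        points.append((x * scale_x, y * scale_y))
    return points
```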

SimpleRegression provides very lightweight facial landmark models with fast coordinate regression. The input of these models is a loosely cropped face image, while the output is the landmark coordinates themselves.
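Because regression models work on a loose crop and output coordinates directly, a typical pipeline enlarges the detector box into a crop and then maps the predicted coordinates back into the original image. The sketch below illustrates both steps under stated assumptions: the 1.5 enlargement factor is hypothetical, and the model output is assumed to be a flat list of (x, y) pairs normalized to [-1, 1] relative to the crop. Check the actual model's output convention before reusing this.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def loose_square_crop(face_box: Box, scale: float = 1.5) -> Box:
    """Enlarge a detector box into a square crop around its center.

    The 1.5 scale factor is a hypothetical example value.
    """
    x1, y1, x2, y2 = face_box
    cx, cy = (x1 + x2) / 2.0, (y1 + y2) / 2.0
    half = max(x2 - x1, y2 - y1) * scale / 2.0
    return (cx - half, cy - half, cx + half, cy + half)


def denormalize_landmarks(pred: Sequence[float], crop: Box) -> List[Tuple[float, float]]:
    """Map flat [u0, v0, u1, v1, ...] outputs in [-1, 1] (assumed) to image pixels."""
    if len(pred) % 2:
        raise ValueError("expected an even number of values (x, y pairs)")
    x1, y1, x2, y2 = crop
    w, h = x2 - x1, y2 - y1
    return [(x1 + (u + 1.0) * 0.5 * w, y1 + (v + 1.0) * 0.5 * h)
            for u, v in zip(pred[0::2], pred[1::2])]
```

Keeping the crop square avoids distorting the face when it is resized to the model's input size.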

If you find InsightFace useful in your research, please consider citing the following related papers:

@inproceedings{ren2023pbidr,
title={Facial Geometric Detail Recovery via Implicit Representation},
author={Ren, Xingyu and Lattas, Alexandros and Gecer, Baris and Deng, Jiankang and Ma, Chao and Yang, Xiaokang},
booktitle={2023 IEEE 17th International Conference on Automatic Face and Gesture Recognition (FG)},
year={2023}
}

@article{guo2021sample,
title={Sample and Computation Redistribution for Efficient Face Detection},
author={Guo, Jia and Deng, Jiankang and Lattas, Alexandros and Zafeiriou, Stefanos},
journal={arXiv preprint arXiv:2105.04714},
year={2021}
}

@inproceedings{gecer2021ostec,
title={OSTeC: One-Shot Texture Completion},
author={Gecer, Baris and Deng, Jiankang and Zafeiriou, Stefanos},
booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
year={2021}
}

@article{an2020partical_fc,
title={Partial FC: Training 10 Million Identities on a Single Machine},
author={An, Xiang and Zhu, Xuhan and Xiao, Yang and Wu, Lan and Zhang, Ming and Gao, Yuan and Qin, Bin and Zhang, Debing and Fu, Ying},
journal={arXiv preprint arXiv:2010.05222},
year={2020}
}

@inproceedings{deng2020subcenter,
title={Sub-center ArcFace: Boosting Face Recognition by Large-scale Noisy Web Faces},
author={Deng, Jiankang and Guo, Jia and Liu, Tongliang and Gong, Mingming and Zafeiriou, Stefanos},
booktitle={Proceedings of the European Conference on Computer Vision (ECCV)},
year={2020}
}

@inproceedings{Deng2020CVPR,
title = {RetinaFace: Single-Shot Multi-Level Face Localisation in the Wild},
author = {Deng, Jiankang and Guo, Jia and Ververas, Evangelos and Kotsia, Irene and Zafeiriou, Stefanos},
booktitle = {CVPR},
year = {2020}
}

@inproceedings{guo2018stacked,
title={Stacked Dense U-Nets with Dual Transformers for Robust Face Alignment},
author={Guo, Jia and Deng, Jiankang and Xue, Niannan and Zafeiriou, Stefanos},
booktitle={BMVC},
year={2018}
}

@article{deng2018menpo,
title={The Menpo benchmark for multi-pose 2D and 3D facial landmark localisation and tracking},
author={Deng, Jiankang and Roussos, Anastasios and Chrysos, Grigorios and Ververas, Evangelos and Kotsia, Irene and Shen, Jie and Zafeiriou, Stefanos},
journal={IJCV},
year={2018}
}

@inproceedings{deng2018arcface,
title={ArcFace: Additive Angular Margin Loss for Deep Face Recognition},
author={Deng, Jiankang and Guo, Jia and Xue, Niannan and Zafeiriou, Stefanos},
booktitle={CVPR},
year={2019}
}

Main contributors:

- Jia Guo, guojia[at]gmail.com

- Jiankang Deng, jiankangdeng[at]gmail.com

- Xiang An, anxiangsir[at]gmail.com

- Jack Yu, jackyu961127[at]gmail.com

- Baris Gecer, barisgecer[at]msn.com