/home/sucom/.conda/envs/cv_py36/bin/python /home/sucom/hdd_1T/project/video_rec_0831/ClassyVision/main_train.py

INFO:root:Classy Vision's default training script.

INFO:root:AMP disabled

INFO:root:mixup disabled

INFO:root:Synchronized Batch Normalization is disabled

INFO:root:Logging outputs to ./output_2020-08-31T11:43:29.853157

INFO:root:Logging checkpoints to ./checkpoint_101

INFO:root:Starting training on rank 0 worker. World size is 1

INFO:root:Using GPU, CUDA device index: 0

INFO:root:Starting training. Task: <classy_vision.tasks.classification_task.ClassificationTask object at 0x7ff9590a1748> initialized with config:

{

"name": "classification_task",

"checkpoint_folder": "./classy_checkpoint_{time.time()}",

"checkpoint_period": 1,

"num_epochs": 3000000,

"loss": {

"name": "CrossEntropyLoss"

},

"dataset": {

"train": {

"name": "ucf101",

"split": "train",

"batchsize_per_replica": 16,

"use_shuffle": true,

"num_samples": null,

"frames_per_clip": 32,

"step_between_clips": 1,

"clips_per_video": 1,

"video_dir": "/home/sucom/hdd_1T/project/video_rec/UCF-101",

"splits_dir": "/home/sucom/hdd_1T/project/video_rec/ucfTrainTestlist",

"metadata_file": "./ucf101_metadata.pt",

"fold": 1,

"transforms": {

"video": [

{

"name": "video_default_augment",

"crop_size": 112,

"size_range": [

128,

160

]

}

]

}

},

"test": {

"name": "ucf101",

"split": "test",

"batchsize_per_replica": 10,

"use_shuffle": false,

"num_samples": null,

"frames_per_clip": 32,

"step_between_clips": 1,

"clips_per_video": 10,

"video_dir": "/home/sucom/hdd_1T/project/video_rec/UCF-101",

"splits_dir": "/home/sucom/hdd_1T/project/video_rec/ucfTrainTestlist",

"metadata_file": "./ucf101_metadata.pt",

"fold": 1,

"transforms": {

"video": [

{

"name": "video_default_no_augment",

"size": 128

}

]

}

}

},

"meters": {

"accuracy": {

"topk": [

1,

5

]

},

"video_accuracy": {

"topk": [

1,

5

],

"clips_per_video_train": 1,

"clips_per_video_test": 10

}

},

"model": {

"name": "resnext3d",

"frames_per_clip": 32,

"input_planes": 3,

"clip_crop_size": 112,

"skip_transformation_type": "postactivated_shortcut",

"residual_transformation_type": "basic_transformation",

"num_blocks": [

3,

4,

6,

3

],

"input_key": "video",

"stem_name": "resnext3d_stem",

"stem_planes": 64,

"stem_temporal_kernel": 3,

"stem_maxpool": false,

"stage_planes": 64,

"stage_temporal_kernel_basis": [

[

3

],

[

3

],

[

3

],

[

3

]

],

"temporal_conv_1x1": [

false,

false,

false,

false

],

"stage_temporal_stride": [

1,

2,

2,

2

],

"stage_spatial_stride": [

1,

2,

2,

2

],

"num_groups": 1,

"width_per_group": 64,

"num_classes": 101,

"heads": [

{

"name": "fully_convolutional_linear",

"unique_id": "default_head",

"pool_size": [

4,

7,

7

],

"activation_func": "softmax",

"num_classes": 101,

"fork_block": "pathway0-stage4-block2",

"in_plane": 512

}

]

},

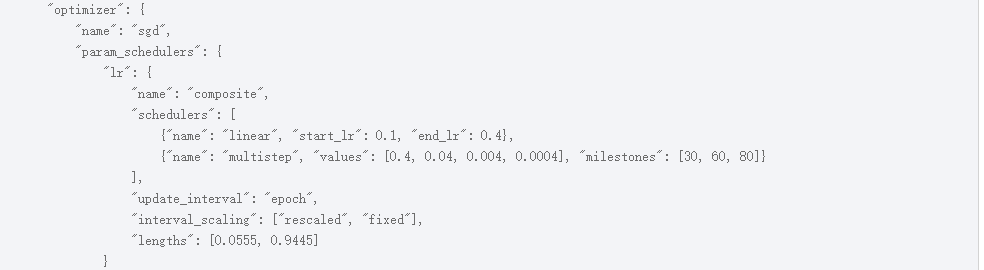

"optimizer": {

"name": "sgd",

"param_schedulers": {

"lr": {

"name": "composite",

"schedulers": [

{

"name": "linear",

"start_value": 0.005,

"end_value": 0.04

},

{

"name": "cosine",

"start_value": 0.04,

"end_value": 4e-05

}

],

"lengths": [

0.13,

0.87

],

"update_interval": "epoch",

"interval_scaling": [

"rescaled",

"rescaled"

]

}

},

"weight_decay": 0.005,

"momentum": 0.9,

"nesterov": true,

"num_epochs": 3000000,

"lr": 0.1,

"use_larc": false,

"larc_config": {

"clip": true,

"eps": 1e-08,

"trust_coefficient": 0.02

}

}

}

INFO:root:Number of parameters in model: 63527717

INFO:root:FLOPs for forward pass: 150950 MFLOPs

INFO:root:Number of activations in model: 65329152

INFO:root:Approximate meters: [0] train phase 0 (0.84% done), loss: 4.8705, meters: [accuracy_meter(top_1=0.012500,top_5=0.025000), video_accuracy_meter(top_1=0.012500,top_5=0.025000)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (1.68% done), loss: 4.8350, meters: [accuracy_meter(top_1=0.025000,top_5=0.037500), video_accuracy_meter(top_1=0.025000,top_5=0.037500)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (2.52% done), loss: 4.8853, meters: [accuracy_meter(top_1=0.029167,top_5=0.050000), video_accuracy_meter(top_1=0.029167,top_5=0.050000)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (3.36% done), loss: 4.9368, meters: [accuracy_meter(top_1=0.021875,top_5=0.046875), video_accuracy_meter(top_1=0.021875,top_5=0.046875)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (4.19% done), loss: 4.9501, meters: [accuracy_meter(top_1=0.020000,top_5=0.047500), video_accuracy_meter(top_1=0.020000,top_5=0.047500)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (5.03% done), loss: 4.9432, meters: [accuracy_meter(top_1=0.018750,top_5=0.047917), video_accuracy_meter(top_1=0.018750,top_5=0.047917)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (5.87% done), loss: 4.9325, meters: [accuracy_meter(top_1=0.017857,top_5=0.060714), video_accuracy_meter(top_1=0.017857,top_5=0.060714)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (6.71% done), loss: 4.9288, meters: [accuracy_meter(top_1=0.017188,top_5=0.064062), video_accuracy_meter(top_1=0.017188,top_5=0.064062)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (7.55% done), loss: 4.9075, meters: [accuracy_meter(top_1=0.018056,top_5=0.063889), video_accuracy_meter(top_1=0.018056,top_5=0.063889)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (8.39% done), loss: 4.8897, meters: [accuracy_meter(top_1=0.018750,top_5=0.063750), video_accuracy_meter(top_1=0.018750,top_5=0.063750)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (9.23% done), loss: 4.8742, meters: [accuracy_meter(top_1=0.020455,top_5=0.072727), video_accuracy_meter(top_1=0.020455,top_5=0.072727)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (10.07% done), loss: 4.8573, meters: [accuracy_meter(top_1=0.020833,top_5=0.076042), video_accuracy_meter(top_1=0.020833,top_5=0.076042)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (10.91% done), loss: 4.8543, meters: [accuracy_meter(top_1=0.019231,top_5=0.074038), video_accuracy_meter(top_1=0.019231,top_5=0.074038)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (11.74% done), loss: 4.8426, meters: [accuracy_meter(top_1=0.019643,top_5=0.073214), video_accuracy_meter(top_1=0.019643,top_5=0.073214)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (12.58% done), loss: 4.8429, meters: [accuracy_meter(top_1=0.018333,top_5=0.072500), video_accuracy_meter(top_1=0.018333,top_5=0.072500)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (13.42% done), loss: 4.8333, meters: [accuracy_meter(top_1=0.018750,top_5=0.074219), video_accuracy_meter(top_1=0.018750,top_5=0.074219)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (14.26% done), loss: 4.8194, meters: [accuracy_meter(top_1=0.019853,top_5=0.077206), video_accuracy_meter(top_1=0.019853,top_5=0.077206)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (15.10% done), loss: 4.7999, meters: [accuracy_meter(top_1=0.020139,top_5=0.079861), video_accuracy_meter(top_1=0.020139,top_5=0.079861)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (15.94% done), loss: 4.7868, meters: [accuracy_meter(top_1=0.019737,top_5=0.085526), video_accuracy_meter(top_1=0.019737,top_5=0.085526)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (16.78% done), loss: 4.7729, meters: [accuracy_meter(top_1=0.020000,top_5=0.086875), video_accuracy_meter(top_1=0.020000,top_5=0.086875)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (17.62% done), loss: 4.7737, meters: [accuracy_meter(top_1=0.020238,top_5=0.085714), video_accuracy_meter(top_1=0.020238,top_5=0.085714)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (18.46% done), loss: 4.7648, meters: [accuracy_meter(top_1=0.020455,top_5=0.087500), video_accuracy_meter(top_1=0.020455,top_5=0.087500)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (19.30% done), loss: 4.7544, meters: [accuracy_meter(top_1=0.020109,top_5=0.090761), video_accuracy_meter(top_1=0.020109,top_5=0.090761)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (20.13% done), loss: 4.7446, meters: [accuracy_meter(top_1=0.020312,top_5=0.093229), video_accuracy_meter(top_1=0.020312,top_5=0.093229)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (20.97% done), loss: 4.7368, meters: [accuracy_meter(top_1=0.022000,top_5=0.095000), video_accuracy_meter(top_1=0.022000,top_5=0.095000)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (21.81% done), loss: 4.7282, meters: [accuracy_meter(top_1=0.023558,top_5=0.095673), video_accuracy_meter(top_1=0.023558,top_5=0.095673)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (22.65% done), loss: 4.7134, meters: [accuracy_meter(top_1=0.024074,top_5=0.098148), video_accuracy_meter(top_1=0.024074,top_5=0.098148)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (23.49% done), loss: 4.7056, meters: [accuracy_meter(top_1=0.024554,top_5=0.098661), video_accuracy_meter(top_1=0.024554,top_5=0.098661)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (24.33% done), loss: 4.6991, meters: [accuracy_meter(top_1=0.024569,top_5=0.100000), video_accuracy_meter(top_1=0.024569,top_5=0.100000)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (25.17% done), loss: 4.6883, meters: [accuracy_meter(top_1=0.026250,top_5=0.101667), video_accuracy_meter(top_1=0.026250,top_5=0.101667)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (26.01% done), loss: 4.6800, meters: [accuracy_meter(top_1=0.027016,top_5=0.103226), video_accuracy_meter(top_1=0.027016,top_5=0.103226)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (26.85% done), loss: 4.6754, meters: [accuracy_meter(top_1=0.026563,top_5=0.103125), video_accuracy_meter(top_1=0.026563,top_5=0.103125)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (27.68% done), loss: 4.6670, meters: [accuracy_meter(top_1=0.028030,top_5=0.104924), video_accuracy_meter(top_1=0.028030,top_5=0.104924)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (28.52% done), loss: 4.6583, meters: [accuracy_meter(top_1=0.029044,top_5=0.105147), video_accuracy_meter(top_1=0.029044,top_5=0.105147)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (29.36% done), loss: 4.6521, meters: [accuracy_meter(top_1=0.028571,top_5=0.106071), video_accuracy_meter(top_1=0.028571,top_5=0.106071)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (30.20% done), loss: 4.6461, meters: [accuracy_meter(top_1=0.029514,top_5=0.107292), video_accuracy_meter(top_1=0.029514,top_5=0.107292)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (31.04% done), loss: 4.6366, meters: [accuracy_meter(top_1=0.030743,top_5=0.109122), video_accuracy_meter(top_1=0.030743,top_5=0.109122)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (31.88% done), loss: 4.6297, meters: [accuracy_meter(top_1=0.029934,top_5=0.109211), video_accuracy_meter(top_1=0.029934,top_5=0.109211)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (32.72% done), loss: 4.6226, meters: [accuracy_meter(top_1=0.030449,top_5=0.109936), video_accuracy_meter(top_1=0.030449,top_5=0.109936)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (33.56% done), loss: 4.6202, meters: [accuracy_meter(top_1=0.030000,top_5=0.111562), video_accuracy_meter(top_1=0.030000,top_5=0.111562)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (34.40% done), loss: 4.6139, meters: [accuracy_meter(top_1=0.029878,top_5=0.113415), video_accuracy_meter(top_1=0.029878,top_5=0.113415)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (35.23% done), loss: 4.6086, meters: [accuracy_meter(top_1=0.030060,top_5=0.113988), video_accuracy_meter(top_1=0.030060,top_5=0.113988)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (36.07% done), loss: 4.6008, meters: [accuracy_meter(top_1=0.030523,top_5=0.115407), video_accuracy_meter(top_1=0.030523,top_5=0.115407)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (36.91% done), loss: 4.5942, meters: [accuracy_meter(top_1=0.031534,top_5=0.117330), video_accuracy_meter(top_1=0.031534,top_5=0.117330)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (37.75% done), loss: 4.5920, meters: [accuracy_meter(top_1=0.031667,top_5=0.118889), video_accuracy_meter(top_1=0.031667,top_5=0.118889)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (38.59% done), loss: 4.5869, meters: [accuracy_meter(top_1=0.032065,top_5=0.119837), video_accuracy_meter(top_1=0.032065,top_5=0.119837)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (39.43% done), loss: 4.5826, meters: [accuracy_meter(top_1=0.032181,top_5=0.120213), video_accuracy_meter(top_1=0.032181,top_5=0.120213)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (40.27% done), loss: 4.5796, meters: [accuracy_meter(top_1=0.032031,top_5=0.120573), video_accuracy_meter(top_1=0.032031,top_5=0.120573)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (41.11% done), loss: 4.5736, meters: [accuracy_meter(top_1=0.032908,top_5=0.122194), video_accuracy_meter(top_1=0.032908,top_5=0.122194)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (41.95% done), loss: 4.5696, meters: [accuracy_meter(top_1=0.033000,top_5=0.122250), video_accuracy_meter(top_1=0.033000,top_5=0.122250)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (42.79% done), loss: 4.5642, meters: [accuracy_meter(top_1=0.033578,top_5=0.123284), video_accuracy_meter(top_1=0.033578,top_5=0.123284)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (43.62% done), loss: 4.5570, meters: [accuracy_meter(top_1=0.033894,top_5=0.124519), video_accuracy_meter(top_1=0.033894,top_5=0.124519)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (44.46% done), loss: 4.5494, meters: [accuracy_meter(top_1=0.034670,top_5=0.125943), video_accuracy_meter(top_1=0.034670,top_5=0.125943)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (45.30% done), loss: 4.5461, meters: [accuracy_meter(top_1=0.034722,top_5=0.126157), video_accuracy_meter(top_1=0.034722,top_5=0.126157)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (46.14% done), loss: 4.5412, meters: [accuracy_meter(top_1=0.035000,top_5=0.127500), video_accuracy_meter(top_1=0.035000,top_5=0.127500)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (46.98% done), loss: 4.5348, meters: [accuracy_meter(top_1=0.035268,top_5=0.129241), video_accuracy_meter(top_1=0.035268,top_5=0.129241)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (47.82% done), loss: 4.5319, meters: [accuracy_meter(top_1=0.035746,top_5=0.130482), video_accuracy_meter(top_1=0.035746,top_5=0.130482)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (48.66% done), loss: 4.5266, meters: [accuracy_meter(top_1=0.036207,top_5=0.131681), video_accuracy_meter(top_1=0.036207,top_5=0.131681)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (49.50% done), loss: 4.5224, meters: [accuracy_meter(top_1=0.036017,top_5=0.132203), video_accuracy_meter(top_1=0.036017,top_5=0.132203)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (50.34% done), loss: 4.5178, meters: [accuracy_meter(top_1=0.036458,top_5=0.133958), video_accuracy_meter(top_1=0.036458,top_5=0.133958)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (51.17% done), loss: 4.5145, meters: [accuracy_meter(top_1=0.036885,top_5=0.135246), video_accuracy_meter(top_1=0.036885,top_5=0.135246)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (52.01% done), loss: 4.5121, meters: [accuracy_meter(top_1=0.036895,top_5=0.135484), video_accuracy_meter(top_1=0.036895,top_5=0.135484)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (52.85% done), loss: 4.5090, meters: [accuracy_meter(top_1=0.036706,top_5=0.135714), video_accuracy_meter(top_1=0.036706,top_5=0.135714)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (53.69% done), loss: 4.5047, meters: [accuracy_meter(top_1=0.036523,top_5=0.136719), video_accuracy_meter(top_1=0.036523,top_5=0.136719)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (54.53% done), loss: 4.5009, meters: [accuracy_meter(top_1=0.037115,top_5=0.136731), video_accuracy_meter(top_1=0.037115,top_5=0.136731)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (55.37% done), loss: 4.4985, meters: [accuracy_meter(top_1=0.037311,top_5=0.136364), video_accuracy_meter(top_1=0.037311,top_5=0.136364)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (56.21% done), loss: 4.5002, meters: [accuracy_meter(top_1=0.037313,top_5=0.135075), video_accuracy_meter(top_1=0.037313,top_5=0.135075)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (57.05% done), loss: 4.4956, meters: [accuracy_meter(top_1=0.037316,top_5=0.136397), video_accuracy_meter(top_1=0.037316,top_5=0.136397)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (57.89% done), loss: 4.4899, meters: [accuracy_meter(top_1=0.037500,top_5=0.137319), video_accuracy_meter(top_1=0.037500,top_5=0.137319)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (58.72% done), loss: 4.4852, meters: [accuracy_meter(top_1=0.037500,top_5=0.138571), video_accuracy_meter(top_1=0.037500,top_5=0.138571)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (59.56% done), loss: 4.4816, meters: [accuracy_meter(top_1=0.037676,top_5=0.139437), video_accuracy_meter(top_1=0.037676,top_5=0.139437)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (60.40% done), loss: 4.4760, meters: [accuracy_meter(top_1=0.037847,top_5=0.140278), video_accuracy_meter(top_1=0.037847,top_5=0.140278)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (61.24% done), loss: 4.4739, meters: [accuracy_meter(top_1=0.037842,top_5=0.140753), video_accuracy_meter(top_1=0.037842,top_5=0.140753)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (62.08% done), loss: 4.4686, meters: [accuracy_meter(top_1=0.038851,top_5=0.142568), video_accuracy_meter(top_1=0.038851,top_5=0.142568)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (62.92% done), loss: 4.4676, meters: [accuracy_meter(top_1=0.039333,top_5=0.142833), video_accuracy_meter(top_1=0.039333,top_5=0.142833)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (63.76% done), loss: 4.4645, meters: [accuracy_meter(top_1=0.039474,top_5=0.142928), video_accuracy_meter(top_1=0.039474,top_5=0.142928)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (64.60% done), loss: 4.4592, meters: [accuracy_meter(top_1=0.039610,top_5=0.143669), video_accuracy_meter(top_1=0.039610,top_5=0.143669)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (65.44% done), loss: 4.4545, meters: [accuracy_meter(top_1=0.040224,top_5=0.145032), video_accuracy_meter(top_1=0.040224,top_5=0.145032)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (66.28% done), loss: 4.4493, meters: [accuracy_meter(top_1=0.041456,top_5=0.146519), video_accuracy_meter(top_1=0.041456,top_5=0.146519)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (67.11% done), loss: 4.4460, meters: [accuracy_meter(top_1=0.042344,top_5=0.147344), video_accuracy_meter(top_1=0.042344,top_5=0.147344)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (67.95% done), loss: 4.4432, meters: [accuracy_meter(top_1=0.042593,top_5=0.148611), video_accuracy_meter(top_1=0.042593,top_5=0.148611)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (68.79% done), loss: 4.4393, meters: [accuracy_meter(top_1=0.042683,top_5=0.148780), video_accuracy_meter(top_1=0.042683,top_5=0.148780)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (69.63% done), loss: 4.4351, meters: [accuracy_meter(top_1=0.043373,top_5=0.149849), video_accuracy_meter(top_1=0.043373,top_5=0.149849)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (70.47% done), loss: 4.4336, meters: [accuracy_meter(top_1=0.043750,top_5=0.150446), video_accuracy_meter(top_1=0.043750,top_5=0.150446)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (71.31% done), loss: 4.4304, meters: [accuracy_meter(top_1=0.044118,top_5=0.150882), video_accuracy_meter(top_1=0.044118,top_5=0.150882)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (72.15% done), loss: 4.4279, meters: [accuracy_meter(top_1=0.044477,top_5=0.151163), video_accuracy_meter(top_1=0.044477,top_5=0.151163)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (72.99% done), loss: 4.4263, meters: [accuracy_meter(top_1=0.044253,top_5=0.151580), video_accuracy_meter(top_1=0.044253,top_5=0.151580)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (73.83% done), loss: 4.4222, meters: [accuracy_meter(top_1=0.044886,top_5=0.152415), video_accuracy_meter(top_1=0.044886,top_5=0.152415)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (74.66% done), loss: 4.4202, meters: [accuracy_meter(top_1=0.044944,top_5=0.152949), video_accuracy_meter(top_1=0.044944,top_5=0.152949)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (75.50% done), loss: 4.4174, meters: [accuracy_meter(top_1=0.044861,top_5=0.153889), video_accuracy_meter(top_1=0.044861,top_5=0.153889)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (76.34% done), loss: 4.4140, meters: [accuracy_meter(top_1=0.044780,top_5=0.154808), video_accuracy_meter(top_1=0.044780,top_5=0.154808)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (77.18% done), loss: 4.4097, meters: [accuracy_meter(top_1=0.045380,top_5=0.155978), video_accuracy_meter(top_1=0.045380,top_5=0.155978)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (78.02% done), loss: 4.4063, meters: [accuracy_meter(top_1=0.045699,top_5=0.156989), video_accuracy_meter(top_1=0.045699,top_5=0.156989)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (78.86% done), loss: 4.4029, meters: [accuracy_meter(top_1=0.046676,top_5=0.158378), video_accuracy_meter(top_1=0.046676,top_5=0.158378)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (79.70% done), loss: 4.3999, meters: [accuracy_meter(top_1=0.046579,top_5=0.158947), video_accuracy_meter(top_1=0.046579,top_5=0.158947)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (80.54% done), loss: 4.3972, meters: [accuracy_meter(top_1=0.046354,top_5=0.158854), video_accuracy_meter(top_1=0.046354,top_5=0.158854)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (81.38% done), loss: 4.3947, meters: [accuracy_meter(top_1=0.046392,top_5=0.159794), video_accuracy_meter(top_1=0.046392,top_5=0.159794)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (82.21% done), loss: 4.3930, meters: [accuracy_meter(top_1=0.046556,top_5=0.159949), video_accuracy_meter(top_1=0.046556,top_5=0.159949)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (83.05% done), loss: 4.3905, meters: [accuracy_meter(top_1=0.046338,top_5=0.160732), video_accuracy_meter(top_1=0.046338,top_5=0.160732)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (83.89% done), loss: 4.3892, meters: [accuracy_meter(top_1=0.046000,top_5=0.160875), video_accuracy_meter(top_1=0.046000,top_5=0.160875)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (84.73% done), loss: 4.3870, meters: [accuracy_meter(top_1=0.046287,top_5=0.161510), video_accuracy_meter(top_1=0.046287,top_5=0.161510)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (85.57% done), loss: 4.3851, meters: [accuracy_meter(top_1=0.046078,top_5=0.161887), video_accuracy_meter(top_1=0.046078,top_5=0.161887)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (86.41% done), loss: 4.3797, meters: [accuracy_meter(top_1=0.046723,top_5=0.163956), video_accuracy_meter(top_1=0.046723,top_5=0.163956)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (87.25% done), loss: 4.3759, meters: [accuracy_meter(top_1=0.046995,top_5=0.164663), video_accuracy_meter(top_1=0.046995,top_5=0.164663)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (88.09% done), loss: 4.3731, meters: [accuracy_meter(top_1=0.047381,top_5=0.165000), video_accuracy_meter(top_1=0.047381,top_5=0.165000)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (88.93% done), loss: 4.3693, meters: [accuracy_meter(top_1=0.047524,top_5=0.165920), video_accuracy_meter(top_1=0.047524,top_5=0.165920)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (89.77% done), loss: 4.3674, meters: [accuracy_meter(top_1=0.047897,top_5=0.166355), video_accuracy_meter(top_1=0.047897,top_5=0.166355)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (90.60% done), loss: 4.3626, meters: [accuracy_meter(top_1=0.048264,top_5=0.167245), video_accuracy_meter(top_1=0.048264,top_5=0.167245)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (91.44% done), loss: 4.3601, meters: [accuracy_meter(top_1=0.048050,top_5=0.167317), video_accuracy_meter(top_1=0.048050,top_5=0.167317)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (92.28% done), loss: 4.3575, meters: [accuracy_meter(top_1=0.048409,top_5=0.167614), video_accuracy_meter(top_1=0.048409,top_5=0.167614)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (93.12% done), loss: 4.3543, meters: [accuracy_meter(top_1=0.048986,top_5=0.169032), video_accuracy_meter(top_1=0.048986,top_5=0.169032)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (93.96% done), loss: 4.3506, meters: [accuracy_meter(top_1=0.049442,top_5=0.169866), video_accuracy_meter(top_1=0.049442,top_5=0.169866)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (94.80% done), loss: 4.3464, meters: [accuracy_meter(top_1=0.050221,top_5=0.171018), video_accuracy_meter(top_1=0.050221,top_5=0.171018)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (95.64% done), loss: 4.3408, meters: [accuracy_meter(top_1=0.051096,top_5=0.172807), video_accuracy_meter(top_1=0.051096,top_5=0.172807)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (96.48% done), loss: 4.3366, meters: [accuracy_meter(top_1=0.051848,top_5=0.174565), video_accuracy_meter(top_1=0.051848,top_5=0.174565)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (97.32% done), loss: 4.3333, meters: [accuracy_meter(top_1=0.052263,top_5=0.175216), video_accuracy_meter(top_1=0.052263,top_5=0.175216)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (98.15% done), loss: 4.3321, meters: [accuracy_meter(top_1=0.052030,top_5=0.175427), video_accuracy_meter(top_1=0.052030,top_5=0.175427)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (98.99% done), loss: 4.3299, meters: [accuracy_meter(top_1=0.052331,top_5=0.176377), video_accuracy_meter(top_1=0.052331,top_5=0.176377)], lr: 0.0050

INFO:root:Approximate meters: [0] train phase 0 (99.83% done), loss: 4.3267, meters: [accuracy_meter(top_1=0.052521,top_5=0.176891), video_accuracy_meter(top_1=0.052521,top_5=0.176891)], lr: 0.0050

INFO:root:Synced meters: [0] train phase 0 (100.00% done), loss: 4.3269, meters: [accuracy_meter(top_1=0.052538,top_5=0.176804), video_accuracy_meter(top_1=0.052538,top_5=0.176804)], lr: 0.0050, processed batches: 596

INFO:root:Plotting to Tensorboard for train phase 0

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Summary name Learning Rate/train is illegal; using Learning_Rate/train instead.

INFO:root:Done plotting to Tensorboard

INFO:root:Saving checkpoint to './checkpoint_101'...

I followed your advice and waited for a long time. The program did not stop, but it never moved on to the next training phase. Can you help me? @mannatsingh