Welcome to Mixed Reality documentation, the place for all things MR, VR, and AR at Microsoft!

If you're contributing or updating docs content, make sure your pull requests target the correct sub-docset (mr-dev-docs, enthusiast-guide, and so on). New contributors should check out our more detailed contribution guidelines for each sub-docset:

For docs-related issues, use the footers at the bottom of each doc, or submit directly to MicrosoftDocs/mixed-reality/issues.

Feel free to send any questions about contribution policies or processes to Harrison Ferrone or Sean Kerawala via Teams or email.

Every path through our docs has a curated journey to help you find your footing. Whether it's designing, developing, or distributing your apps to the world, we've got you covered.

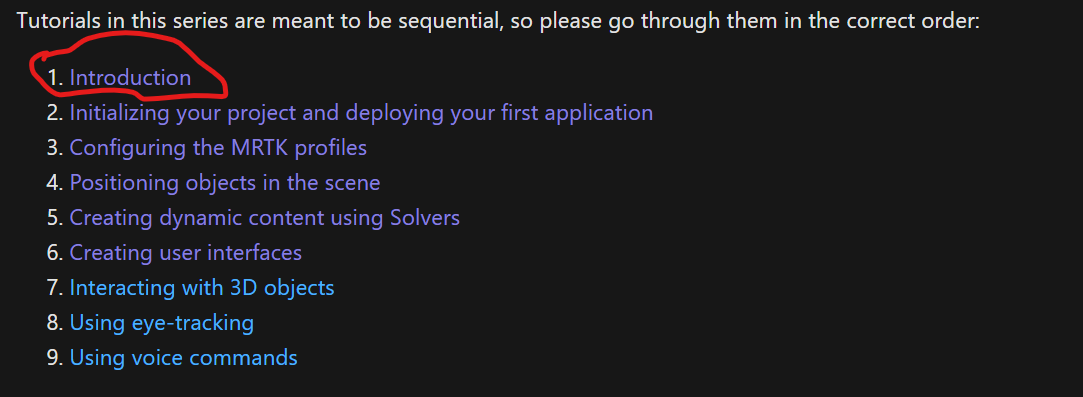

We recommend starting with the Mixed Reality basics and moving on from there:

If you're interested in the design side of things:

When you're ready to start developing, choose the engine and device that best suit your needs:

For engine-specific content, choose one of the following paths:

When you're finally ready to get your app out to your users:

If you're new to VR devices, we recommend starting with our beginner guide:

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

This project has adopted the Microsoft Open Source Code of Conduct. For more information, see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.