Layotto (/leɪˈɒtəʊ/) is an application runtime developed in Golang. It provides applications with various distributed capabilities, such as state management, configuration management, and event pub/sub, to simplify application development.

Layotto is built on the open source data plane MOSN. In addition to providing distributed building blocks, Layotto can also serve as the data plane of a Service Mesh and control traffic.

Layotto aims to combine Multi-Runtime and Service Mesh into one sidecar. No matter which product you use as the Service Mesh data plane (e.g. MOSN, Envoy, or any other product), you can attach Layotto to it and add Multi-Runtime capabilities without adding a new sidecar.

For example, by adding Runtime capabilities to MOSN, a single Layotto process can both serve as the data plane of Istio and provide various Runtime APIs (such as the Configuration API and the Pub/Sub API).

In addition, we were surprised to find that a sidecar can do much more than that. With the magic power of WebAssembly, we are trying to make Layotto a runtime container for FaaS (Function as a Service).

- Service Communication

- Service governance, such as traffic hijacking and observation, service rate limiting, etc.

- As the data plane of Istio

- Configuration management

- State management

- Event publish and subscribe

- Health checks and runtime metadata queries

- FaaS model based on WASM and Runtime

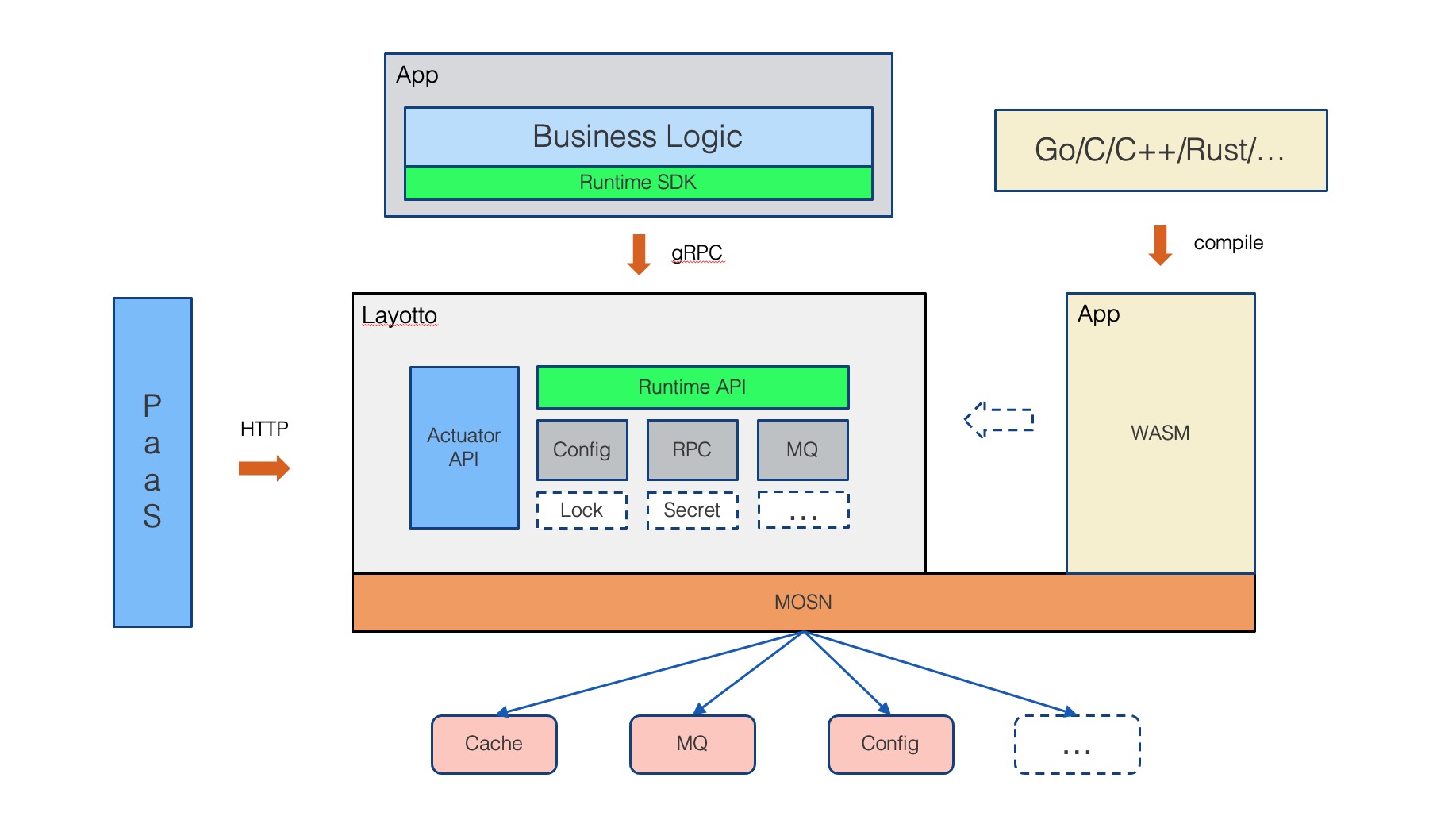

As shown in the architecture diagram, Layotto uses the open source MOSN as its base, which provides network-layer management capabilities, while Layotto adds distributed capabilities on top. Business logic can interact with Layotto directly through a lightweight SDK without having to care about the specific back-end infrastructure.

Layotto provides SDKs in various languages, and these SDKs interact with Layotto over gRPC. Application developers only need to specify the type of infrastructure they use in the configuration file provided by Layotto. No code changes are required, which greatly improves the portability of applications.
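To illustrate why switching infrastructure needs no code changes, here is a minimal Go sketch, assuming a simplified JSON config that maps a store name to a component type. The `StateStore` interface, the `in-memory` and `noop` component types, and the config shape are hypothetical and much simpler than Layotto's real configuration file and SDK. The point is only that `businessLogic` refers to a store by name, while the config alone decides which backend serves it.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// StateStore is a hypothetical, simplified component interface
// (not Layotto's real API).
type StateStore interface {
	Save(key, value string) error
	Get(key string) (string, bool, error)
}

// memoryStore is a toy backend that keeps data in a map.
type memoryStore struct{ data map[string]string }

func (m *memoryStore) Save(k, v string) error { m.data[k] = v; return nil }
func (m *memoryStore) Get(k string) (string, bool, error) {
	v, ok := m.data[k]
	return v, ok, nil
}

// noopStore is a second toy backend that discards every write.
type noopStore struct{}

func (noopStore) Save(string, string) error         { return nil }
func (noopStore) Get(string) (string, bool, error) { return "", false, nil }

// registry maps a component type name (as written in the config) to a factory.
var registry = map[string]func() StateStore{
	"in-memory": func() StateStore { return &memoryStore{data: map[string]string{}} },
	"noop":      func() StateStore { return noopStore{} },
}

// config is a hypothetical config shape: store name -> component type.
type config struct {
	State map[string]struct {
		Type string `json:"type"`
	} `json:"state"`
}

// loadStores builds one StateStore per configured store name.
func loadStores(raw []byte) (map[string]StateStore, error) {
	var cfg config
	if err := json.Unmarshal(raw, &cfg); err != nil {
		return nil, err
	}
	stores := make(map[string]StateStore)
	for name, c := range cfg.State {
		factory, ok := registry[c.Type]
		if !ok {
			return nil, fmt.Errorf("unknown component type %q for store %q", c.Type, name)
		}
		stores[name] = factory()
	}
	return stores, nil
}

// businessLogic only knows the store name, never the backend type.
func businessLogic(stores map[string]StateStore) (string, bool) {
	s := stores["my_store"]
	_ = s.Save("greeting", "hello")
	v, ok, _ := s.Get("greeting")
	return v, ok
}

func main() {
	for _, raw := range []string{
		`{"state": {"my_store": {"type": "in-memory"}}}`,
		`{"state": {"my_store": {"type": "noop"}}}`,
	} {
		stores, err := loadStores([]byte(raw))
		if err != nil {
			panic(err)
		}
		v, ok := businessLogic(stores)
		fmt.Printf("%q %v\n", v, ok) // prints "hello" true, then "" false
	}
}
```

Only the config string changes between the two runs; `businessLogic` is identical in both.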

You can try the quickstart demos below to get started with Layotto. You can also try the online laboratory.

| API | Status | Quick start | Description |
|---|---|---|---|
| State | ✅ | demo | Write/query data in a Key/Value model |
| Pub/Sub | ✅ | demo | Publish/subscribe messages through various message queues |
| Service Invoke | ✅ | demo | Call services through MOSN (another Istio data plane) |
| Config | ✅ | demo | Write/query/subscribe to configuration through various config centers |
| Lock | ✅ | demo | Distributed lock API |
| Sequencer | ✅ | demo | Generate distributed, unique, and incrementing IDs |
| File | ✅ | TODO | File API implementation |
| Binding | ✅ | TODO | Transparent data transmission API |

| Feature | Status | Quick start | Description |
|---|---|---|---|
| Istio | ✅ | demo | As the data plane of Istio |

| Feature | Status | Quick start | Description |
|---|---|---|---|
| API plugin | ✅ | demo | You can add your own API! |

| Feature | Status | Quick start | Description |
|---|---|---|---|
| Health Check | ✅ | demo | Query health state of app and components in Layotto |
| Metadata Query | ✅ | demo | Query metadata in Layotto/app |

| Feature | Status | Quick start | Description |
|---|---|---|---|
| TCP Copy | ✅ | demo | Dump the TCP traffic received by Layotto into the local file system |
| Flow Control | ✅ | demo | Limit access to the APIs provided by Layotto |

| Feature | Status | Quick start | Description |
|---|---|---|---|
| Go (TinyGo) | ✅ | demo | Compile code written in TinyGo to `*.wasm` and run it in Layotto |
| Rust | ✅ | demo | Compile code written in Rust to `*.wasm` and run it in Layotto |
| AssemblyScript | ✅ | demo | Compile code written in AssemblyScript to `*.wasm` and run it in Layotto |

| Feature | Status | Quick start | Description |
|---|---|---|---|
| Go (TinyGo) | ✅ | demo | Compile code written in TinyGo to `*.wasm` and run it in Layotto, scheduled by k8s |
| Rust | ✅ | demo | Compile code written in Rust to `*.wasm` and run it in Layotto, scheduled by k8s |
| AssemblyScript | ✅ | demo | Compile code written in AssemblyScript to `*.wasm` and run it in Layotto, scheduled by k8s |

- Layotto - A new chapter of Service Mesh and Application Runtime

- WebAssembly + Application Runtime = A New Era of FaaS?

Layotto enriches the CNCF Cloud Native Landscape.

| Platform | Link |
|---|---|
| 💬 DingTalk (preferred) | Search the group number: 31912621 or scan the QR code below |

Where to start? Check the "Community tasks" list!

As a programming enthusiast, have you ever wanted to participate in the development of an open source project but didn't know where to start? To help everyone participate in open source projects, our community regularly publishes community tasks so that everyone can learn by doing!

Thank y'all!

Pub/Sub API and Compatibility with Dapr Components

Dapr is an excellent runtime product, but it lacks Service Mesh capabilities, which are necessary for a runtime product used in production environments. Therefore, we hope to combine Runtime and Service Mesh into one sidecar to meet more complex production requirements.