Issue Description

First of all, thank you for this useful container! Now, my problem: I want to perform a live migration of a running Docker container. My container uses the NVIDIA runtime, and the GPU is passed through to the container with `ENV NVIDIA_VISIBLE_DEVICES all`. Live migration is done with Docker's experimental checkpoint and restore feature, which uses CRIU. I start my container with:

```
nvidia-docker run --name myApp -i app
```

It runs fine and also exits normally if it is not checkpointed. However, if I create a checkpoint with

```
docker checkpoint create --leave-running=true myApp checkpoint1
```

I get the following error response:

```
Error response from daemon: Cannot checkpoint container myApp: nvidia-container-runtime did not terminate sucessfully: criu failed: type NOTIFY errno 0 path= /var/run/docker/containerd/daemon/io.containerd.runtime.v1.linux/moby/10eb4bf688652c5d8f612fca192c12c80cc59bce605cf3ff0a0e8a0e07ce17da/criu-dump.log: unknown
```
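The daemon message points to a `criu-dump.log` under the containerd runtime directory. A minimal sketch for locating that file and filtering its error lines follows. It assumes the directory layout copied from the message above (Docker 18.06 with the containerd v1 runtime; other versions may differ), and `criu_dump_log_path` and `criu_errors` are hypothetical helper names, not part of Docker or CRIU.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for reading the CRIU dump log after a failed
# `docker checkpoint create`.
# ASSUMPTION: the log lives under the containerd v1 runtime directory shown in
# the daemon error message (Docker 18.06); other versions may use another path.

# Build the criu-dump.log path for a full 64-character container ID.
criu_dump_log_path() {
  local id="$1"
  printf '%s/%s/criu-dump.log\n' \
    /var/run/docker/containerd/daemon/io.containerd.runtime.v1.linux/moby "$id"
}

# Print only the "Error (file:line): ..." lines of a CRIU log, stripping the
# "(seconds.micros) " elapsed-time prefix CRIU usually writes before each line.
criu_errors() {
  grep -F 'Error (' "$1" | sed -E 's/^\([0-9.]+\) +//'
}
```

For the `myApp` container, `criu_errors "$(criu_dump_log_path "$(docker inspect --format '{{.Id}}' myApp)")"` should print the CRIU errors; reading that directory may require root.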

Inspecting `criu-dump.log` reveals the following error:

```
Error (criu/mount.c:925): mnt: Mount 448 ./proc/driver/nvidia/gpus/0000:01:00.0 (master_id: 13 shared_id: 0) has unreachable sharing. Try --enable-external-masters.
```

So it seems that CRIU is unable to create a checkpoint of the GPU. The `--enable-external-masters` option suggested by CRIU is not suitable for GPUs, and adding `ENV NVIDIA_DRIVER_CAPABILITIES all` to the Dockerfile does not resolve the issue either. My main question is therefore whether there is a way to include a GPU dump in the nvidia-docker container checkpoint. Proper support from NVIDIA does not seem to exist yet, although there is NVIDIA software for live migration between GPUs. Can we expect support for this kind of application, too?

Steps to Reproduce

Enable Docker's experimental mode, then install CRIU for Docker, the NVIDIA driver, nvidia-docker, and nvidia-container-runtime.
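A quick way to confirm that the required binaries are installed before continuing is sketched below. The binary names are assumed from the packages listed under System Information, and `missing_prereqs` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each required tool that is not found on PATH,
# one per line. The list mirrors the installation step above; adjust it if
# your distribution installs the binaries under different names.
missing_prereqs() {
  local tool
  for tool in docker criu nvidia-docker nvidia-container-runtime; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}
```

An empty result means all four tools were found. Whether the daemon runs in experimental mode can be checked with `docker version --format '{{.Server.Experimental}}'`, which should print `true`. It is typically enabled by adding `"experimental": true` to `/etc/docker/daemon.json` (merged into the file nvidia-docker2 installs, which registers the `nvidia` runtime) and restarting the daemon.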

Create a Dockerfile with the following two lines:

```
FROM ubuntu:16.04
ENV NVIDIA_VISIBLE_DEVICES all
```

Build the image:

```
sudo docker build -t app .
```

Once the image is built, run it:

```
nvidia-docker run --name myApp -i app
```

Note that the `-t` flag has to be omitted to avoid issues with CRIU. Next, try to create the checkpoint:

```
docker checkpoint create --leave-running=true myApp checkpoint1
```

This produces the error response shown in the issue description.

System Information

I'm attaching system information that is hopefully relevant, as suggested in the nvidia-docker issues.

Kernel version from `uname -a`:

```
Linux ECS 4.15.0-39-generic #42~16.04.1-Ubuntu SMP Wed Oct 24 17:09:54 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux
```

Driver information from `nvidia-smi -a`:

```
==============NVSMI LOG==============

Timestamp                       : Tue Nov 20 09:37:42 2018
Driver Version                  : 384.130

Attached GPUs                   : 1
GPU 00000000:01:00.0
    Product Name                : GeForce GTX 1080
    Product Brand               : GeForce
```

Docker version from `docker version`:

```
Client:
 Version:           18.06.1-ce
 API version:       1.38
 Go version:        go1.10.3
 Git commit:        e68fc7a
 Built:             Tue Aug 21 17:24:56 2018
 OS/Arch:           linux/amd64
 Experimental:      false

Server:
 Engine:
  Version:          18.06.1-ce
  API version:      1.38 (minimum version 1.12)
  Go version:       go1.10.3
  Git commit:       e68fc7a
  Built:            Tue Aug 21 17:23:21 2018
  OS/Arch:          linux/amd64
  Experimental:     true
```

NVIDIA package versions from `dpkg -l '*nvidia*'` or `rpm -qa '*nvidia*'`:

```
_or_: command not found
Desired=Unknown/Install/Remove/Purge/Hold
| Status=Not/Inst/Conf-files/Unpacked/halF-conf/Half-inst/trig-aWait/Trig-pend
|/ Err?=(none)/Reinst-required (Status,Err: uppercase=bad)
||/ Name                   Version          Architecture     Description
+++-======================-================-================-==================================================
ii  libnvidia-container-to 1.0.0-1          amd64            NVIDIA container runtime library (command-line too
ii  libnvidia-container1:a 1.0.0-1          amd64            NVIDIA container runtime library
ii  nvidia-384             384.130-0ubuntu0 amd64            NVIDIA binary driver - version 384.130
ii  nvidia-384-dev         384.130-0ubuntu0 amd64            NVIDIA binary Xorg driver development files
un  nvidia-common          <none>           <none>           (no description available)
ii  nvidia-container-runti 2.0.0+docker18.0 amd64            NVIDIA container runtime
ii  nvidia-container-runti 1.4.0-1          amd64            NVIDIA container runtime hook
un  nvidia-docker          <none>           <none>           (no description available)
ii  nvidia-docker2         2.0.3+docker18.0 all              nvidia-docker CLI wrapper
un  nvidia-driver-binary   <none>           <none>           (no description available)
un  nvidia-legacy-340xx-vd <none>           <none>           (no description available)
un  nvidia-libopencl1-384  <none>           <none>           (no description available)
un  nvidia-libopencl1-dev  <none>           <none>           (no description available)
ii  nvidia-modprobe        384.81-0ubuntu1  amd64            Load the NVIDIA kernel driver and create device fi
un  nvidia-opencl-icd      <none>           <none>           (no description available)
ii  nvidia-opencl-icd-384  384.130-0ubuntu0 amd64            NVIDIA OpenCL ICD
un  nvidia-persistenced    <none>           <none>           (no description available)
ii  nvidia-prime           0.8.2            amd64            Tools to enable NVIDIA's Prime
ii  nvidia-settings        384.81-0ubuntu1  amd64            Tool for configuring the NVIDIA graphics driver
un  nvidia-settings-binary <none>           <none>           (no description available)
un  nvidia-smi             <none>           <none>           (no description available)
un  nvidia-vdpau-driver    <none>           <none>           (no description available)
dpkg-query: no packages found matching *nvidia*rpm
dpkg-query: no packages found matching -qa
```

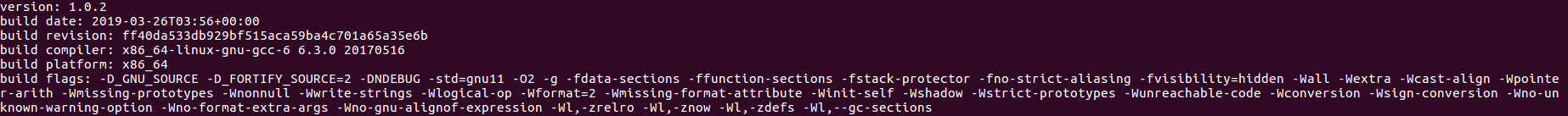

NVIDIA container library version from `nvidia-container-cli -V`:

```
version: 1.0.0
build date: 2018-09-20T20:18+00:00
build revision: 881c88e2e5bb682c9bb14e68bd165cfb64563bb1
build compiler: gcc-5 5.4.0 20160609
build platform: x86_64
build flags: -D_GNU_SOURCE -D_FORTIFY_SOURCE=2 -DNDEBUG -std=gnu11 -O2 -g -fdata-sections -ffunction-sections -fstack-protector -fno-strict-aliasing -fvisibility=hidden -Wall -Wextra -Wcast-align -Wpointer-arith -Wmissing-prototypes -Wnonnull -Wwrite-strings -Wlogical-op -Wformat=2 -Wmissing-format-attribute -Winit-self -Wshadow -Wstrict-prototypes -Wunreachable-code -Wconversion -Wsign-conversion -Wno-unknown-warning-option -Wno-format-extra-args -Wno-gnu-alignof-expression -Wl,-zrelro -Wl,-znow -Wl,-zdefs -Wl,--gc-sections
```

nvidia-docker version from `nvidia-docker --version`:

```
Docker version 18.06.1-ce, build e68fc7a
```

The debugging logs from nvidia-container-runtime do not contain relevant information.