petergu684 / hololens2-researchmode-unity

Unity Plugin for using research mode functionality in HoloLens 2. Modified based on HoloLens2ForCV.

License: MIT License

Hello everybody!

I tested the recently added TCP client/server code.

The problem is that I cannot see any depth images on the PC through the TCP server.

There is just an empty 'data' folder with no files in it while the HoloLens app is running. (Sensor streaming itself works fine.)

Did I miss any steps? Or should I edit some parts of the scripts?

If anybody knows the solution, please let me know. Thank you!

First big thanks to the developer for the project! I noticed that a few things do not work and I hope to get help here.

So I tried this project today and right now it's a bit frustrating.

I got it running on the HoloLens 2 and the python server is also running.

So what is my problem?

if header == 'f': # save spatial camera images and saves it under /data

if header == 's': # save depth sensor images

instead of getting 'f' again. So I am going to check the code in Unity to find the reason for that.

So I tried to debug the Unity part by adding the exception text to the UI text that already exists. I did not fix the problem, but this is the current information:

SaveAHATSensorDataEvent(): this method has the line var depthMap = researchMode.GetDepthMapBuffer(); which returns null, and then passes depthMap (which is null) to the method SendUINT16Async() in the next step.

SendUINT16Async() then throws a NullReferenceException:

System.NullReferenceException: Object reference not set to an instance of an object.

at TCPClient+<SendUINT16Async>d_16.MoveNext()[0x00000] in <000000000000000000000000000000000>:0

at System.Runtime.CompilerService.AsyncVoidMethodBuilder.Start[TStateMachine](TStateMachine&stateMachine)[0x00000] in <00000000000000000000000000000000000>:0

at ResyearchModeVideosStream.SaveAHAATSennsorDataEvent()[0x00000] in <000000000000000000000000000000000000000>:0

at System.Threading.threadStart.Invoke()[0x00000] in<000000000000000000000000000000000000000>:0

at UnityEngine.Events.UnitEvent.Invoke()[0x00000] in <000000000000000000000000000000000000000>:0

at Microsoft.MixedReality.Toolkit.UI.Interactable.SendOnClick

Exception thrown at 0x00007FFB770F5E5C (KernelBase.dll) in HL2ResearchModeUnitySample.exe: 0x800706BA: The RPC server is unavailable.

onecore\com\combase\dcomrem\preventrundownbias.cpp(1310)\combase.dll!00007FFB769C61B0: (caller: 00007FFB769C606C) LogHr(1) tid(444) 800706BA The RPC server is unavailable.

Exception thrown at 0x00007FFB770F5E5C in HL2ResearchModeUnitySample.exe: Microsoft C++ exception: Il2CppExceptionWrapper at memory location 0x00000041099FE2A0.

After fixing the wrong depth mode issue, I thought I could save the point cloud data to a .ply file on the Python server, but the server can't handle the point cloud data! I get the following error:

--------------------------

Header: p

524293

Length of point cloud:262144

Oops! <class 'ValueError'> occurred.

Traceback (most recent call last):

File "C:\Users\XXXX\source\repos\HoloLens2-ResearchMode-Unity-master\python\TCPServer.py", line 86, in tcp_server

pointcloud_np = np.frombuffer(data[5:5 + n_pointcloud * 3 * 4], np.float32).reshape((-1, 3))

ValueError: cannot reshape array of size 131072 into shape (3)

Closing socket ...

Press any key ...

So this code can't reshape the array because its length cannot be divided by 3. Why is this happening and what is wrong?
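
One possible reading of the traceback above: the slice data[5:5 + n_pointcloud * 3 * 4] asks for 262144 * 3 * 4 = 3,145,728 bytes, but 131072 float32 values are only 524,288 bytes (which matches the printed 524293 minus the 5 header bytes), so the buffer returned by a single conn.recv() call appears to hold only part of the message. TCP is a byte stream, and recv() may return fewer bytes than requested. The sketch below is an illustration under assumptions, not the repository's code: recv_exact and recv_point_cloud are hypothetical helpers, and the packet layout (1-byte tag, 4-byte little-endian point count, then count * 3 float32 values) is inferred from the slice in the traceback.

```python
import socket
import struct


def recv_exact(conn: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, looping because recv() may return fewer."""
    chunks = []
    remaining = n
    while remaining > 0:
        chunk = conn.recv(min(remaining, 65536))
        if not chunk:
            # An empty result means the peer closed the connection.
            raise ConnectionError(f"socket closed with {remaining} bytes still expected")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def recv_point_cloud(conn: socket.socket):
    """Receive one point cloud message (hypothetical helper).

    Assumed layout: 1-byte ASCII tag, 4-byte little-endian point count,
    then count * 3 float32 values (x, y, z per point).
    """
    tag = recv_exact(conn, 1).decode("ascii")
    (n_points,) = struct.unpack("<I", recv_exact(conn, 4))
    payload = recv_exact(conn, n_points * 3 * 4)
    floats = struct.unpack(f"<{n_points * 3}f", payload)
    # Group the flat float sequence into (x, y, z) tuples.
    points = [floats[i:i + 3] for i in range(0, len(floats), 3)]
    return tag, points
```

Calling recv_point_cloud(conn) in place of a single conn.recv(...) would block until the whole payload has arrived, or raise ConnectionError if the peer closes early.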

Exception thrown at 0x00007FF9430A5E5C (KernelBase.dll) in ResearchMode.exe: 0x40080202: WinRT transform error (parameters: 0x000000008000000B, 0x0000000080070490, 0x0000000000000014, 0x000000DAC73FD390).

Exception thrown at 0x00007FF9430A5E5C in ResearchMode.exe: Microsoft C++ exception: Microsoft::CoreUI::Messaging::MessagingValidationException::Holder$ at memory location 0x000000DAC75FE2A0.

analog\uxplat\holoshellruntime\dll\messagedialogasyncoperation.cpp(243)\HoloShellRuntime.dll!00007FF9379E5648: (caller: 00007FF9379BCD60) LogHr(1) tid(178c) 87B20808 Exception thrown at 0x00007FF9430A5E5C in ResearchMode.exe: Microsoft C++ exception: Microsoft::CoreUI::Messaging::MessagingValidationException::Holder$ at memory location 0x000000DAC75FF8C0.

PointCloud "Origin offset" when used with FrozenWorld 1.5.7 (Unity 2019.4.22f1) World Anchor WSA.

I have disabled this line #researchMode.SetReferenceCoordinateSystem(unityWorldOrigin); (ResearchModeVideoStream.cs) - hoping that the PointCloud PCL rendering will not be based on a different (origin 0,0,0) position+orientation.

However, with FrozenWorld 1.5.7, the Spongy origin (0,0,0) follows wherever you restart the HL2 app, while the F1 origin is fixed to where you first launched the Unity app. So, most of the time, the position and orientation of the Spongy origin (0,0,0) and the F1 origin (0,0,0) are not the same.

Following this, the PCL will be rendered with similar offset values.

If the Spongy origin (0,0,0) and the F1 origin (0,0,0) are offset by x,y,z and by an rx,ry,rz rotation, then the PCL is rendered with a similar position and orientation offset.

Is there a way to make sure that the PCL rendering switches off its own origin and uses the FrozenWorld origin instead?

Thanks, Zul

Can I get the VLC frame? I want to convert it to an OpenCV Mat.

Hi, I have a problem with streaming the Long throw data to my PC. I keep getting the error ValueError: buffer size must be a multiple of element size whenever I run the python code for receiving both point cloud and depth sensor (long throw mode) on my PC.

This is the send function for the depth map:

public void SaveLongThrowSensorDataEvent()
{
#if ENABLE_WINMD_SUPPORT
    var LongdepthMap = researchMode.GetLongDepthMapBuffer();
#if WINDOWS_UWP
    if (tcpClient != null)
    {
        tcpClient.SendUINT16Async(LongdepthMap);
    }
#endif
#endif
}

I tried changing data = conn.recv(512*512*4+100) to data = conn.recv(288*320*4+100) because of the different frame size in the LT setting. I do get data (but cannot reshape it to any proper size) when using depth_img_np = np.frombuffer(data[5:5+N], np.uint8) instead of depth_img_np = np.frombuffer(data[5:5+N], np.uint16), but this is still unusable. Does anyone know what I'm doing wrong? I am getting bytes from the socket but I can't turn them into usable data...
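
NumPy raises "buffer size must be a multiple of element size" when np.frombuffer is given a byte count that is not a multiple of the dtype size, for example an odd number of bytes for np.uint16. A minimal sketch of a length check follows, assuming a 320x288 long throw frame of 16-bit pixels (2 bytes per pixel, so 184,320 bytes per frame). decode_depth_frame is a hypothetical helper, and it uses only the standard library so that it runs without NumPy.

```python
import struct


def decode_depth_frame(payload: bytes, width: int, height: int):
    """Decode a raw little-endian uint16 depth payload into a list of rows.

    Raises ValueError when the payload is not exactly one full frame,
    which is the situation that makes np.frombuffer(..., np.uint16) fail
    or produce an array that cannot be reshaped.
    """
    expected = width * height * 2  # 2 bytes per uint16 pixel
    if len(payload) != expected:
        raise ValueError(
            f"expected {expected} bytes for {width}x{height} uint16, got {len(payload)}"
        )
    pixels = struct.unpack(f"<{width * height}H", payload)
    # Split the flat pixel tuple into rows of `width` values each.
    return [list(pixels[r * width:(r + 1) * width]) for r in range(height)]
```

If the check fails, one likely cause is that a single recv() call returned only part of the frame, in which case the remaining bytes still need to be read before decoding.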

Thanks very much for your repository. I have successfully deployed ResearchMode-Unity, but the debugging process is really inconvenient. Do you have any good suggestions for this?

Any reply will be appreciated.

Thanks to the author for this project. When I followed the steps to put the .dll and .winmd into my Unity project, it didn't show the depth camera view on the HoloLens.

My HoloLens just shows the view that I built before, but there is no error message. What is wrong in this situation? Do I need to do anything to activate the .dll?

Hello, thank you for sharing this project.

I am new to HoloLens 2, so this question might be weird. When I tried to deploy this project to HoloLens 2, the following error occurred:

RemoteCommandException: error 0xC00CE011: App content group map validation error: Line 4, Column 6, Reason: Element '{http://schemas.microsoft.com/appx/2016/contentgroupmap}ContentGroup' cannot be empty according to the DTD/Schema.

0xc00ce011 HL2ResearchModeUnitySample

I tried to find it online, but I didn't see any related issue (so I think it's my own fault...). Could you give me a hint? Thanks.

@petergu684 I want to build a C++ DLL, but I think there may be something wrong with my DLL. I just built a simple function for ARM64 to deploy to HoloLens 2.

I deleted all your HL2RmStreamUnityPlugin .h and .cpp code and wrote a function that just returns a value.

The .h file is like this:

#pragma once

#ifdef FUNCTIONS_EXPORTS
#define FUNCTIONS_EXPORTS_API extern "C" __declspec(dllexport)
#else
#define FUNCTIONS_EXPORTS_API extern "C" __declspec(dllimport)
#endif

namespace HL2Stream
{
    FUNCTIONS_EXPORTS_API int __stdcall DOUT();
}

And the .cpp file is below:

#include "pch.h"
#include "HL2RmStreamUnityPlugin.h"

#define DBG_ENABLE_VERBOSE_LOGGING 1
#define DBG_ENABLE_INFO_LOGGING 1

int __stdcall HL2Stream::DOUT()
{
    return 123;
}

Then I deployed it into Unity/Plugin, and the script is:

[DllImport("HL2RmStreamUnityPlugin.dll", EntryPoint = "DOUT", CallingConvention = CallingConvention.Cdecl)] public static extern int DOUT();

But I find there is something wrong with it.

First of all, I guess it is a version problem.

I found this version in your project's .vcxproj:

<WindowsTargetPlatformVersion>10.0.18362.0</WindowsTargetPlatformVersion> <WindowsTargetPlatformMinVersion>10.0.17763.0</WindowsTargetPlatformMinVersion>

and my unity build setting is

Next, maybe there are more detailed settings needed when building the DLL. I would appreciate it if someone could help!

Thank you for this amazing project!

I have a quick question: When I use the unity sample, the point cloud of objects within 1m distance is often transformed with what seems to be a constant positional and rotational offset that sometimes changes after stopping the stream using the provided UI and closing/restarting the app. After several subsequent iterations of stopping the stream and closing/restarting the app while not restarting the device, the problem goes away but seems to come back randomly. Otherwise, it works perfectly fine.. ?

Could someone advise where I'm going wrong? The transform from the depth sensor to the rig node in HL2ResearchMode.cpp as shown in debug mode makes sense I think and no other transforms are introduced in ResearchModeVideoStream.cs or PointCloudRenderer.cs. I feel like I'm missing something obvious? I'll keep debugging and make a more formal report if the problem persists but I just wanted to reach out beforehand to see if someone has had the same issue.

Hello, when I click "save spatial", it works and saves the image.

But when I then click the "save ahat" button, it doesn't work.

Hi petergu684,

I am trying to obtain the extrinsic transform from the Left Front Camera (or rig origin) to the Unity world origin. Using your unity sample, I noticed that unity world coordinate system is defined as the reference coordinate system with the line: researchMode.SetReferenceCoordinateSystem(unityWorldOrigin);

I then tried to obtain the LFToWorld transform using the printLFExtrinsics() function, but it seemed to give me a transform close to identity. I assume this function is not the one I want or I am using it improperly. Would I need to modify your plugin to obtain the static rig-to-Unity-world transform, or is there a function I can query to obtain this? I am using Unity 2020.3.30f1.

Thanks in advance,

Daniel Allen

Hi,

I'd like to request a way to get the point cloud for the long throw sensor. I had a look at HL2ResearchMode::DepthSensorLoop, and I think I found where to do it, but I'm not a C++ dev and can barely understand what's going on. The code seems to be written around the short throw sensor, and thus modifying it to include the long throw sensor for point cloud is beyond what I'm able to do.

I understand that the key is to use MapImagePointToCameraUnitPlane, which also exists for the long depth sensor.

I'm trying to run the point cloud sample, and it runs, but I never get any of the feeds showing anything. It's almost like it isn't actually using / loading the plugin, but can do everything else. I'm trying this with Unity 2021.1.12f1. Thanks for any insight.

I'm using your implementation for testing, and without modifying anything in the C# sample script or the C++ files. My plane wasn't displaying depth information, and when I did some debugging to check, the check in SensorTest.cs in the main Update() loop:

if (startRealtimePreview && researchMode.DepthMapTextureUpdated())

The latter researchMode.DepthMapTextureUpdated() is always false. Are there any obvious reasons that may cause this, like the HL2 is configured wrong, or is the problem in the C++ likely in the code? I did test with default HL2ForCV samples to make sure my ResearchMode works correctly and is enabled.

Thanks for any help.

Unity v.2020.1.14f1

VS2019 v16.8.0

Is it possible to get the raw RGB/PV camera frames through this .dll?

In the original C++ app, it saves the RGB frames along with the AHAT results, and I'm looking to extract the raw RGB frame in Unity as well. However, I didn't find a suitable function in the built .dll. Any pointers?

Thanks in advance!

Hello, I wonder if you are still studying. I released Hololens2 according to the code in your example, but it can't work normally. Could you help me?

I have provided the background log of Hololens2, I hope you can help me look at it.

UnityPlayer (11).log

Hi Peter,

At the moment, this is for WSA/ARM64.

and, since the winmd is targeted for ARM64, this cannot be compiled in VS for ARM.

I have another dll that uses ARM(which does not work for ARM64), therefore it conflicts with this HL2UnityPlugin.winmd.

Can you put in a branch for ARM as well for this HL2 plugin?

Thanks, Zul

Hi! Love this work, it's been really helpful thus far. Excited to test out the VLC streaming soon too!

Just wondering, would it be easy to stream the corresponding extrinsics per frame, (for depth and spatial cameras)? I think that would be very useful for people to generate visualizations or perform computation with a moving device across time :)

I see that there's a "LfToWorld" in the HL2ResearchMode.cpp file, but I don't think it's accessible right now?

Thanks, and looking forward to future updates :)

Hello, thank you for your contribution. I want to know how to debug the C++ code with Unity, and how to run this project in the editor. I have tried Windows Mixed Reality remoting, but it did not help.

Hey guys!

First of all, thanks for the plugin. I am trying to get the data from the depth (AHAT) sensor of the Hololens 2, but so far I have had no success.

A few weeks ago, I was able to get the sample apps from the original Hololens2ForCV github to work (namely, SensorVisualization and StreamRecorder).

I couldn't get the sample app from this project to work, and I am now trying to incrementally write my own code using the HL2UnityPlugin.

I have tried building the solution in Release|ARM64 on both VS2022 and VS2019. I have tried using the v141, v142 and v143 toolsets.

Each time, I have then added the .dll and .winmd (as well as .pdb for debugging) in the right folder in my Unity project.

I am also using ChatGPT4 while coding, and it suggested this configuration for the .dll, but I have also tried with Any SDK and Any Scripting Backend

The app is pretty simple: A quad with a texture that will eventually be depth, and some debug code floating in the air. I run this in Unity 2019.4.40f1 at the moment. I deploy on Hololens 2 for the real tests, this is just to give an idea of the app.

The code of the DepthAHATCapture.cs script follows along the sample code (ResearchModeVideoStream.cs) as shown here

For now, most of it is commented because the issue occurs at the creation of the HL2ResearchMode object.

Here are the build settings in Unity:

Once the Unity build is done, I added the lines to the appxmanifest file, this is what it looks like:

I then open the solution in VisualStudio 2022 and build with these settings

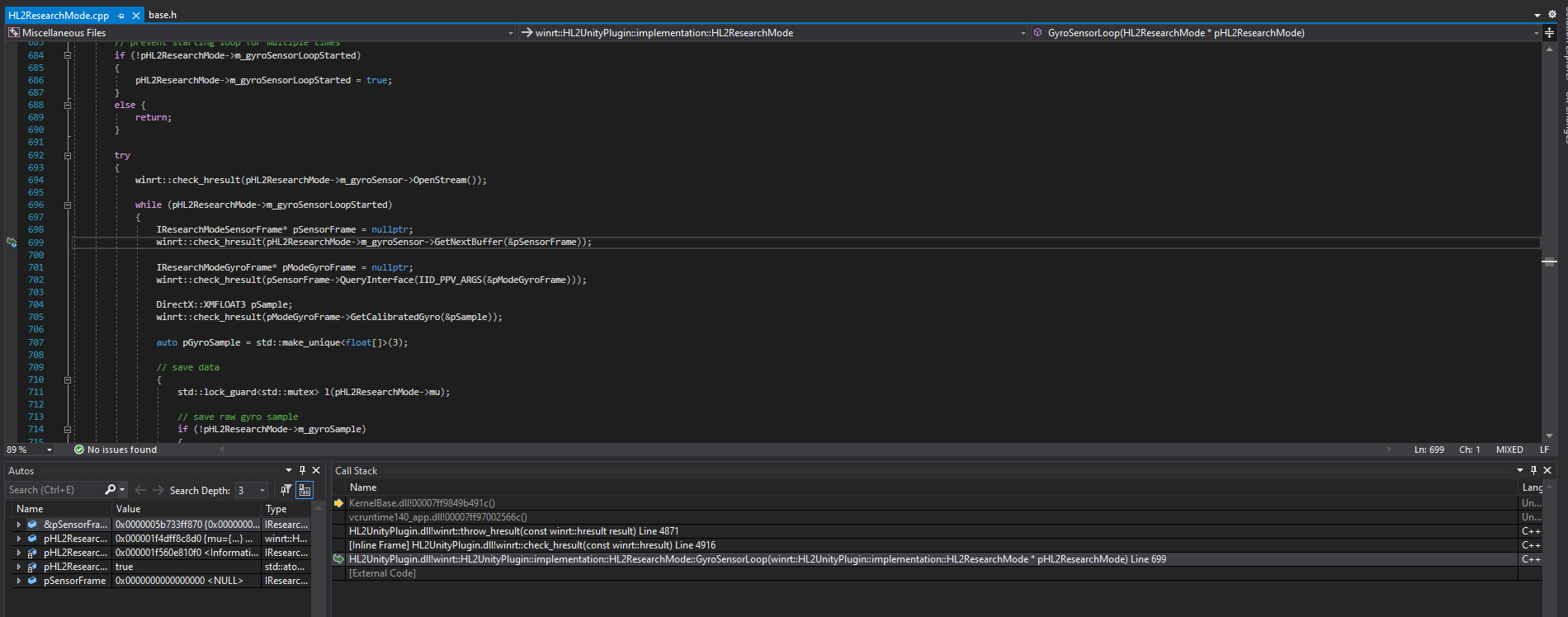

When debugging, I hit an exception here (click to enlarge and see the full call stack)

I have commented the original C++ HL2ResearchMode code before building the .dll so I could get traces.

Here is the commented code of the constructor:

Here is the debug output with the traces, and the moment when it hits the exception. On the Hololens, the debug text actually displays "Catastrophic Failure" as well.

From my understanding, the C# code executes well, the .dll is loaded in Unity as the HL2ResearchMode constructor is accessed at runtime. The first few lines of the constructor, including loading the ResearchModeAPi work. But it looks like the CreateResearchModeSensorDevice function is not found or not accessible?

I am on Unity 2019.4.40f1, but I have tried on 2019.4.22 and 2019.4.28. I was on 2020 before that and downgraded to 2019 to fit the project. I have tried different platform toolsets. I have uninstalled and reinstalled both VS2019 and VS2022. I unfortunately can't try this on another Hololens, as the other one we had in the lab broke. The headset is in developer mode, and the permissions are right in the manifest. I wanted to try and build on v140, but I can't seem to get the tools working on VS2022 (they're installed but the software tells me they are not).

I am a beginner in C++, Unity, and Mixed Reality in general, so I may have missed something obvious, but I'd appreciate any help, and I can provide any additional info you need.

Thanks a lot!

Repro:

start the sample app

Press stop

CRASH

This also happens in other app using the same dll.

When calculating the center point in the long depth sensor loop, the position of the depth center is still limited by depthCamRoi.depthFarClip, which is the far clip of the short sensor.

As a result, the center point is not calculated beyond .8m, even though the long throw sensor sees much further.

Probable fix (will try it later and create a PR if it works):

Line 500:

if (depth > pHL2ResearchMode->depthCamRoi.depthNearClip && depth < pHL2ResearchMode->depthCamRoi.depthFarClip)

should be

if (depth > 200)

Hi @petergu684

I am trying to extend the project with more sensors. Grayscale images work perfectly, but I cannot get IMU data or LONG_THROW depth data using the same code structure. The application crashes to the desktop on the HL2 device.

This is not about your original project; your project is great and efficient. But I still want to ask whether you have any advice or comments about this problem. Thanks.

How can I get the accelerometer frame in Unity (Transform / local rotation from camera)? It is not aligned with the camera frame, it must be rotated by a few degrees around each axis.

When I put the headset horizontally, the acceleration data is not null on any axis. I need acceleration without gravity in the headset (camera) frame but it is hard to compute if I can't do the transformation between sensor and camera frame.

Hi @petergu684 ,

First of all, Thanks for your work, I have a question for you. how do I set the pointcloudmaterial in PointCloudRenderer.cs? I don't find this material in your unity project, thanks!

Hi,

First of all, thank you very much for this.

I'm trying to take small steps, and first trying to get the center point depth displayed somehow. I managed to get the little sample from #1 compiled, and tweaked it a little so that I don't even use any visual display except for the "text".

The HL2ResearchMode gets loaded, and I can print the extrinsics just fine. The StartDepthSensorLoop is also called without exception. However, when I try to update the label with either

var cp = researchMode.GetCenterPoint(); text.text = $"{cp[0]}, {cp[1]}, {cp[2]}";

or just

text.text = researchMode.GetCenterDepth().ToString()

the output remains "0,0,0" or simply "0" in every frame.

Also, PrintResolution logs "0x0x0", which feels strange :)

Any help would be greatly appreciated.

Hi @petergu684 ,

First of all, thanks for your work; it has helped me a lot. Now I have a question for you. I used Python on the PC to receive the m_depthMap data with the code below, but during execution the PC does not display the depth image that was just received. What is the cause of this? Thank you very much for your answer!

while True:
    header = recv_nbytes(client_socket, 4)
    if header is None:
        break
    header = np.frombuffer(header, dtype=np.uint32)
    depthImageLength = header[0]
    print('depthImageLength is ' + str(depthImageLength))
    depthImageData = recv_nbytes(client_socket, depthImageLength)
    print('len(depthImageData) is ' + str(len(depthImageData)))
    depthImage = np.frombuffer(depthImageData, np.uint16).reshape(512, 512)
    print('len(depthImage) is ' + str(len(depthImage)))
    self.last_frame = depthImage

def main():
    print('start')
    receiver = VideoReceiver('', 50002)
    receiver.start()
    fig, ax = plt.subplots()

    def func_animate(_):
        if receiver.last_frame is not None:
            ax.cla()
            ax.imshow(receiver.last_frame)

    ani = FuncAnimation(fig,
                        func_animate,
                        frames=10,
                        interval=50)
    plt.tight_layout()
    plt.show()

Not sure, but shouldn't it be:

In void HL2ResearchMode::SpatialCamerasFrontLoop(HL2ResearchMode* pHL2ResearchMode)

Is:

ResearchModeSensorTimestamp timestamp_left, timestamp_right;
pLFCameraFrame->GetTimeStamp(&timestamp_left);
pLFCameraFrame->GetTimeStamp(&timestamp_right);

Should be:

ResearchModeSensorTimestamp timestamp_left, timestamp_right;
pLFCameraFrame->GetTimeStamp(&timestamp_left);
pRFCameraFrame->GetTimeStamp(&timestamp_right);

Hi! petergu

I want to send just one frame point cloud buffer to my pc through TCP for point cloud registration. Is it possible? I fail to get the point cloud buffer after an air-tap action. It returns nothing.

My code:

...
#if ENABLE_WINMD_SUPPORT && !UNITY_EDITOR
using HL2UnityPlugin;
#endif
...
#if ENABLE_WINMD_SUPPORT && !UNITY_EDITOR
HL2ResearchMode researchMode;
#endif
...
private float[] PointCloudFloatArray = null;
private byte[] PointCloudBuffer = null;
...
#if ENABLE_WINMD_SUPPORT && !UNITY_EDITOR
public byte[] GetDepthSensorData()
{
    while (researchMode.PointCloudUpdated())
    {
        PointCloudFloatArray = researchMode.GetPointCloudBuffer();
        if (PointCloudFloatArray != null && PointCloudFloatArray.Length > 0)
        {
            Debug.Log("Get access to point cloud buffer" + PointCloudFloatArray.Length);
            // float[] ->byte[] for TCP
            PointCloudBuffer = new byte[PointCloudFloatArray.Length * sizeof(float)];
            Buffer.BlockCopy(PointCloudFloatArray, 0, PointCloudBuffer, 0, PointCloudBuffer.Length);
            researchMode.StopAllSensorDevice();
            break;
        }
        else
        {
            Debug.Log("Cannot get point cloud buffer");
        }
    }
    return PointCloudBuffer;
}
#endif

The InitializeDepthSensor() and StartDepthSensorLoop() calls are done in the Start() function. The restricted capability is added to the manifest file.

Look forward to your reply.

Hi, I tried using your project (the compiled .dll and .winmd) in my existing project. I followed the steps you described exactly but the packages couldn't be imported into my code files (using command failed). Also multiple regenerations of the code assembly didn't change this. Is there a need to manually link the dlls in the assembly project? I tried linking the .dll but got an error that it could not be added since it is neither accessible nor a valid assembly nor a COM component. Since I am not too familiar with VS and you did not mention any of this in your guide I stopped checking on this. Any hint?

Hello,

Just a simple question, I tried the Unity Scene "ImuViewSample". I would like to acquire the IMU sensor to a certain frequency (for example 60 Hz). It seems that the sensor values are updated less quickly than this. Is it a limitation of the sensor speed or a limitation of the C++ function HL2ResearchMode::AccelSensorLoop in HL2UnityPlugin sln ?

Hello petergu684,

thank you very, very much for such a nice project!

Unfortunately I need to continue my development with the unity version 2021.2 and the problem is that the methods for getting the unityWorldOrigin are discontinued from that version.

Are there any replacements I can implement to keep the same functionality that the pointcloud is visualized directly where they got recorded or is it simply not possible from that version to get the alignment process of both done?

Thank you very, very much for your or anyone's help!

BR,

Birkenpapier

I use the plugin and a stripped version of the demo project to extract the 3d coordinates for an object. (Using Infrared markers etc). Might this be related to the plugin or do i need to look somewhere else?

hstring HL2ResearchMode::PrintDepthExtrinsics()
{
    std::stringstream ss;
    ss << "Extrinsics: \n" << MatrixToString(m_depthCameraPose);
    std::string msg = ss.str();
    std::wstring widemsg = std::wstring(msg.begin(), msg.end());
    OutputDebugString(widemsg.c_str());
    return winrt::to_hstring(msg);
}

Does this code compute the extrinsic parameter matrix of the camera?

Thanks for this project! It is great, and I got it working on HoloLens 2 (through Unity 2021.3.8f1c1) successfully!

Now I want to implement a function that receives a (u,v) point in the image coordinate system and transforms it to an (X,Y,Z) point in the Unity/HoloLens coordinate system using the long throw depth information. Put simply, it's a coordinate transformation. I'm new to C++, so I tried to imitate petergu's code. The code I added to HL2ResearchMode.cpp is:

// "OpenStream" and "GetNextBuffer" calls are blocking, each sensor frame loop should be run on its own thread,
// This allows sensors to be processed on their own frame rate
com_array<float> HL2ResearchMode::StartuvToWorld(array_view<float const> const& UV)
{
    float xyz[3] = { 0,0,0 };
    float uv[2] = { UV[0], UV[1] };
    if (m_refFrame == nullptr)
    {
        m_refFrame = m_locator.GetDefault().CreateStationaryFrameOfReferenceAtCurrentLocation().CoordinateSystem();
    }
    std::thread m_puvToWorldThread(HL2ResearchMode::uvToWorld, this, std::ref(uv), std::ref(xyz));
    m_puvToWorldThread.join();
    com_array<float> XYZ = { xyz[0], xyz[1], xyz[2] };
    return XYZ;
}

//input: pHL2ResearchMode uv[2] output: XYZ[3]
void HL2ResearchMode::uvToWorld(HL2ResearchMode* pHL2ResearchMode, float(&uv)[2], float(&XYZ)[3])
{
    //open
    pHL2ResearchMode->m_longDepthSensor->OpenStream();

    //access to the interface "IResearchModeSensorFrame"
    IResearchModeSensorFrame* pDepthSensorFrame = nullptr;
    pHL2ResearchMode->m_longDepthSensor->GetNextBuffer(&pDepthSensorFrame);

    //get resolution
    ResearchModeSensorResolution resolution;
    pDepthSensorFrame->GetResolution(&resolution);
    pHL2ResearchMode->m_longDepthResolution = resolution;

    //access to the depth camera interface "IResearchModeSensorDepthFrame"
    IResearchModeSensorDepthFrame* pDepthFrame = nullptr;
    winrt::check_hresult(pDepthSensorFrame->QueryInterface(IID_PPV_ARGS(&pDepthFrame)));

    //resolution trans , not sure 1920x1080
    float uv_d[2] = { 0, 0 };
    if (!(resolution.Width == 1920 && resolution.Height == 1080))
    {
        uv_d[0] = uv[0] * (resolution.Width / 1920);
        uv_d[1] = uv[1] * (resolution.Height / 1080);
    }
    else
    {
        std::copy(std::begin(uv), std::end(uv), std::begin(uv_d));
    }

    //get buffer
    size_t outBufferCount = 0;
    const UINT16* pDepth = nullptr;
    const BYTE* pSigma = nullptr;
    pDepthFrame->GetSigmaBuffer(&pSigma, &outBufferCount);
    pDepthFrame->GetBuffer(&pDepth, &outBufferCount);
    pHL2ResearchMode->m_longDepthBufferSize = outBufferCount;

    //timestamp
    UINT64 lastTs = 0;
    ResearchModeSensorTimestamp timestamp;
    pDepthSensorFrame->GetTimeStamp(&timestamp);
    lastTs = timestamp.HostTicks;

    //coordinate transformation
    Windows::Perception::Spatial::SpatialLocation transToWorld = nullptr;
    auto ts = PerceptionTimestampHelper::FromSystemRelativeTargetTime(HundredsOfNanoseconds(checkAndConvertUnsigned(timestamp.HostTicks)));
    transToWorld = pHL2ResearchMode->m_locator.TryLocateAtTimestamp(ts, pHL2ResearchMode->m_refFrame);
    XMMATRIX depthToWorld = XMMatrixIdentity();
    depthToWorld = pHL2ResearchMode->m_longDepthCameraPoseInvMatrix * SpatialLocationToDxMatrix(transToWorld);

    auto idx = (UINT16)uv_d[0] + (UINT16)uv_d[1] * resolution.Width; // the xth pixel
    UINT16 depth = pDepth[idx];
    depth = (pSigma[idx] & 0x80) ? 0 : depth - pHL2ResearchMode->m_depthOffset;

    float xy[2] = { 0, 0 };
    pHL2ResearchMode->m_pLongDepthCameraSensor->MapImagePointToCameraUnitPlane(uv_d, xy);
    auto pointOnUnitPlane = XMFLOAT3(xy[0], xy[1], 1);
    auto tempPoint = (float)depth / 1000 * XMVector3Normalize(XMLoadFloat3(&pointOnUnitPlane));

    //get the target point coordinate
    auto pointInWorld = XMVector3Transform(tempPoint, depthToWorld);
    XYZ[0] = XMVectorGetX(pointInWorld);
    XYZ[1] = XMVectorGetY(pointInWorld);
    XYZ[2] = -XMVectorGetZ(pointInWorld);

    //Release and Close
    if (pDepthFrame)
    {
        pDepthFrame->Release();
    }
    if (pDepthSensorFrame)
    {
        pDepthSensorFrame->Release();
    }
    pHL2ResearchMode->m_longDepthSensor->CloseStream();
    pHL2ResearchMode->m_longDepthSensor->Release();
    pHL2ResearchMode->m_longDepthSensor = nullptr;
}

The StartuvToWorld function above worked for the first call, but on the second call it failed at pHL2ResearchMode->m_longDepthSensor->OpenStream() and the HoloLens 2 app crashed. The Visual Studio output was:

Exception thrown at 0x00007FFE74686888 (HL2UnityPlugin.dll) in My_Project.exe: 0xC0000005: Access violation reading location 0x0000000000000000

Can anyone help to solve this? Thanks a lot!
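
The geometry in uvToWorld can be sketched outside the plugin. The following Python is an illustration under assumptions, not the plugin's API: backproject is a hypothetical helper, the unit-plane point xy stands in for the output of MapImagePointToCameraUnitPlane, and the 4x4 matrix is applied to row vectors (v * M), which is the convention XMVector3Transform uses. It normalises the ray through the unit-plane point, scales it by the depth in metres, transforms it, and negates Z as the posted code does.

```python
import math


def backproject(xy, depth_mm, depth_to_world):
    """Map a unit-plane point and a depth in millimetres to a world-space point.

    xy             -- (x, y) on the camera unit plane (z = 1); assumed input
    depth_mm       -- depth reading in millimetres
    depth_to_world -- 4x4 matrix as nested lists, applied to row vectors
    """
    x, y = xy
    norm = math.sqrt(x * x + y * y + 1.0)
    scale = (depth_mm / 1000.0) / norm  # metres along the normalised ray
    p = (x * scale, y * scale, scale, 1.0)  # homogeneous point, w = 1
    # Row vector times matrix: world[j] = sum over k of p[k] * M[k][j].
    world = [sum(p[k] * depth_to_world[k][j] for k in range(4)) for j in range(3)]
    # The posted code negates Z when writing the result; mirrored here.
    return (world[0], world[1], -world[2])


IDENTITY = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]

# A pixel on the optical axis at 1000 mm, with no extra transform.
centre = backproject((0.0, 0.0), 1000, IDENTITY)
```

With the identity matrix, a point on the optical axis at 1000 mm lands one metre along the camera's Z axis before the sign flip. Checking a few such known cases against the plugin's output is one way to find where a transform goes wrong.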

What steps would I take to connect a HoloLens 2 to the python server?

I currently am doing:

building and running the application:

starting the python script:

pressing the "Connect to Network button" on the app menu on the HoloLens 2:

Hello, thank you for sharing this project. I currently have a problem. After I compiled the library, I tried to get the point cloud data in Unity. At first, I tried to output the depth center; it always returned 0, but no error occurred. Then I used the same method to output the number of points in the point cloud. When running on the HoloLens 2, it reports: NullReferenceException: Object reference not set to an instance of an object.

Is there any way to solve my problem?

My code is as follows:

using System.Collections;
using System.Collections.Generic;
using UnityEngine;
using System;
using System.Runtime.InteropServices;
using TMPro;
using UnityEngine.UI;

#if ENABLE_WINMD_SUPPORT
using HL2UnityPlugin;
#endif

public class SensorTest : MonoBehaviour
{
#if ENABLE_WINMD_SUPPORT
    HL2ResearchMode researchMode = null;
#endif

    // [SerializeField]
    //GameObject previewPlane = null;
    //[SerializeField]
    public TextMeshPro text;

    private Material mediaMaterial = null;
    private Texture2D mediaTexture = null;
    private byte[] frameData = null;
    private bool isDepthLoop = false;

    // Start is called before the first frame update
    void Start()
    {
#if UNITY_WSA && !UNITY_EDITOR
        researchMode = new HL2UnityPlugin.HL2ResearchMode(); // System.Exception: Exception of type 'System.Exception' was thrown.
        researchMode.InitializeDepthSensor();
        text.text = "init DepthSensor ok ";
#endif
    }

    // Update is called once per frame
    void Update()
    {
        if (!isDepthLoop) StartDepthSensingLoopEvent();
#if UNITY_WSA && !UNITY_EDITOR
        var pointCloud = researchMode.GetPointCloudBuffer();
        text.text = " points count "+ (pointCloud.Length / 3).ToString();
        text.text += "myTest " + researchMode.GetCenterDepth().ToString();
#endif
    }

    public void StartDepthSensingLoopEvent()
    {
#if ENABLE_WINMD_SUPPORT
        IntPtr WorldOriginPtr = UnityEngine.XR.WSA.WorldManager.GetNativeISpatialCoordinateSystemPtr();
        var unityWorldOrigin = System.Runtime.InteropServices.Marshal.GetObjectForIUnknown(WorldOriginPtr) as Windows.Perception.Spatial.SpatialCoordinateSystem;
        researchMode.SetReferenceCoordinateSystem(unityWorldOrigin);
        researchMode.StartDepthSensorLoop();
        text.text = "start Loop ";
#endif
        isDepthLoop = true;
    }
}

Any help would be greatly appreciated.

Hello everybody!

Has anybody implemented code to save the data obtained from the sensors to a file? I would appreciate some tips regarding this matter.

Thanks a lot!
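
As one possible starting point for saving point cloud data on the PC side, here is a minimal sketch of an ASCII PLY writer that uses only the Python standard library. write_ply is a hypothetical helper, not part of this repository, and it assumes the points are already available as (x, y, z) tuples.

```python
def write_ply(path, points):
    """Write an iterable of (x, y, z) tuples as an ASCII PLY point cloud."""
    points = list(points)
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "end_header",
    ]
    with open(path, "w", encoding="ascii", newline="\n") as f:
        f.write("\n".join(header) + "\n")
        # One vertex per line, coordinates separated by spaces.
        for x, y, z in points:
            f.write(f"{x} {y} {z}\n")
```

ASCII PLY is larger on disk than the binary variant, but it is easy to inspect in a text editor while debugging a stream.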

Hi!

I am trying to build the point cloud sample in Unity. My Editor version is 2020.3.34f1.

I followed the README on the GitHub page to deploy the build to the HoloLens 2.

I have also enabled research mode on my HoloLens.

However, when I run the app, I get a NullReferenceException.

After some debugging, I found that researchMode seems to be null in LateUpdate() of ResearchModeVideoStream.

I also checked that the Camera, Microphone and Eye tracker permissions are granted, and reopened the app on the HoloLens.

I also tried restarting the HoloLens. I still get the NullReferenceException when I call researchMode.LongDepthMapTextureUpdated().

Is there any step that I missed? I can provide more information if needed.

Thank you!

Thank you very much for your repo. It's amazingly easy to follow and to extend.

I am using it to generate point clouds for the LT sensor. I have managed to make it all work, but the final point clouds are flipped left/right as shown below. I haven't used SetReferenceCoordinateSystem yet. The z values seem about right.

In the image, I have my left hand raised, but it looks like a right hand.

Thanks again for your amazing work.
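A mirrored cloud like this is often a handedness mismatch: Unity uses a left-handed coordinate system, while the Windows perception APIs that research mode builds on are right-handed. The usual remedy is to negate one axis of every point. Here is a minimal Python sketch, assuming the points arrive as a flat [x0, y0, z0, x1, ...] list (the layout implied by dividing the buffer length by 3 in the snippets in this thread). The helper name flip_axis is hypothetical, and which axis to negate depends on the conventions at both ends.

```python
def flip_axis(flat_points, axis=2):
    """Return a copy of a flat [x0, y0, z0, x1, ...] list with one axis negated."""
    if len(flat_points) % 3 != 0:
        raise ValueError("expected a multiple of 3 values")
    if axis not in (0, 1, 2):
        raise ValueError("axis must be 0 (x), 1 (y) or 2 (z)")
    # Every third value starting at index `axis` belongs to that axis.
    return [-v if i % 3 == axis else v for i, v in enumerate(flat_points)]

# Usage: two points, z negated (an assumed right-handed to left-handed conversion).
cloud = [0.1, 0.2, 1.5, -0.3, 0.0, 2.0]
flipped = flip_axis(cloud, axis=2)
```

Negating exactly one axis changes handedness; negating two is a rotation and would leave the mirroring in place.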

Hey,

First of all, thank you very much for this project!

I do not need the depth camera, only the IMU values. I am not sure how to achieve this, so I tried accessing a test function first. I did the following:

I added void TestResearchmodeFunction(); to HL2ResearchMode.idl, HL2ResearchMode.h and HL2ResearchMode.cpp (just calculating 2+2 or whatever, no return value of course).

Now the app on the HoloLens 2 shows a console message telling me "invalid cast exception: specified cast is not valid".

Is this the correct way to add a function in your project or did I miss a step?

Btw: Before adding my test function everything worked well and I was able to initialize the depth sensor and read its center value etc. I just have problems after adding a function to the .idl, .h and .cpp, which I thought was everything I would need to do.

Thank you already in advance!

I tried this code.

using System;
using UnityEngine;
using UnityEngine.UI;

#if UNITY_WSA && !UNITY_EDITOR
using static HL2UnityPlugin.HL2ResearchMode;
#endif

public class SensorTest : MonoBehaviour
{
    public Text text;

#if UNITY_WSA && !UNITY_EDITOR
    HL2UnityPlugin.HL2ResearchMode researchMode;
#endif

    void Start()
    {
#if UNITY_WSA && !UNITY_EDITOR
        researchMode = new HL2UnityPlugin.HL2ResearchMode(); // System.Exception: Exception of type 'System.Exception' was thrown.
        researchMode.InitializeDepthSensor();
        researchMode.StartDepthSensorOnce();
#endif
    }

    void Update()
    {
#if UNITY_WSA && !UNITY_EDITOR
        text.text = researchMode.GetCenterDepth().ToString();
#endif
    }
}

Can you give me some examples?

Versions used:

HoloLens 2 : 10.0.19041.1375

Windows SDK : 10.0.18362.0

Unity : 2019.04.09f

Hello, I am currently working on a university class project involving the HoloLens 2 and have run into an issue. I have tried to use your DLL and WINMD files with some success, but unfortunately I am unable to get any data from the sensors I specify. I use your Unity example code as the base and have the following settings for my project:

Unity Version - 2019.4.22f1

MRTK Version - 2.7.2

Minimum Platform Version - 10.0.18362.0

Editor - VS 2019 (Latest installed in Build Settings)

Api Compatibility Level - .Net Standard 2.0

Allow 'unsafe' code - True

Plugin Providers - Windows Mixed Reality checked

Depth Buffer - 16 Bit

I know research mode is working because I can run the HoloLens2ForCV sample projects, and I've followed the instructions you provided, so I am unsure what to try next. I am able to build and load the app, and the other functionality of my app seems to be fine. I was finally able to attach a debugger, and it pointed me to the accelerometer, gyro, and magnetometer sensor loops. I have included some images below.

Line of code that threw above error

I will be working on this for the next month or so, but at this point I will also try to modify the HoloLens2ForCV sample code for my needs, as I also need to start the paper.

Any help or suggestions would be appreciated, and I can provide extra output if needed. If you would be willing to post the exact settings with which you can run the IMU sensor sample app, I would be grateful. 😄

Thank you very much for your hard work.

I want to get RGB-D data using HoloLens 2.

In the official repo, the streams are stored separately and then processed with Python to produce the RGB-D data.

But I want to get RGB-D data on the device and store it frame by frame.

The most similar projects I found are:

https://github.com/cyberj0g/HoloLensForCV

https://github.com/doughtmw/HoloLensForCV-Unity

I used TCPClient.py and got the AHAT depth and Ab images. But I noticed that the saved image was different from what I saw in Unity.

This seems to be because of the Alpha8 data format...?

Is there a way to save the image so that it looks the same as in Unity?

Thanks!
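One possible cause is that the on-device preview shows an 8-bit version of the depth map, while the raw buffer sent over TCP holds 16-bit values. If so, the saved image only matches the preview after the same kind of scaling is applied. The Python sketch below shows that mapping under stated assumptions: the raw data is little-endian uint16, and full brightness corresponds to a chosen max_depth (1000 is a guess here, not a value confirmed by this thread). The helpers depth_to_gray8 and to_pgm are hypothetical names; the output is a binary PGM so that no image library is needed.

```python
import struct

def depth_to_gray8(raw, max_depth=1000):
    """Map little-endian uint16 depth samples to 8-bit gray values (0..255)."""
    count = len(raw) // 2
    samples = struct.unpack("<%dH" % count, raw[:count * 2])
    # Clamp to max_depth, then scale linearly; integer math keeps results in 0..255.
    return bytes(min(d, max_depth) * 255 // max_depth for d in samples)

def to_pgm(gray, width, height):
    """Wrap 8-bit gray values in a binary PGM (P5) container."""
    if len(gray) != width * height:
        raise ValueError("pixel count does not match width * height")
    return b"P5\n%d %d\n255\n" % (width, height) + gray

# Usage: a 2x2 frame with near, mid, far and out-of-range samples.
raw = struct.pack("<4H", 0, 500, 1000, 4095)
gray = depth_to_gray8(raw)
pgm = to_pgm(gray, 2, 2)
```

Out-of-range samples are clamped to white in this sketch; if the preview treats them as invalid and draws them black, that step would need to change accordingly.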

Hi,

I tried to use the mag values to calculate the compass heading with atan(y/x), but the values did not look like common magnetometer data, so the result was wrong.

How can I get the compass heading?

Thanks.
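Two general points may help here, independent of this plugin. First, atan(y/x) cannot tell opposite quadrants apart and fails when x is 0; atan2(y, x) handles the full circle. Second, raw magnetometer readings usually carry a constant (hard-iron) offset that has to be subtracted before the angle is meaningful. The Python sketch below illustrates both. It assumes that the two components passed in are the horizontal ones, which depends on how the sensor is mounted and is not confirmed by this thread. The function names are hypothetical.

```python
import math

def hard_iron_offset(samples):
    """Estimate a per-axis offset as the midpoint of the min and max readings.

    `samples` is a list of (x, y) pairs collected while rotating the device
    through a full horizontal turn.
    """
    xs = [s[0] for s in samples]
    ys = [s[1] for s in samples]
    return ((max(xs) + min(xs)) / 2.0, (max(ys) + min(ys)) / 2.0)

def heading_degrees(mx, my, offset=(0.0, 0.0)):
    """Angle in [0, 360) of the offset-corrected horizontal field vector."""
    x = mx - offset[0]
    y = my - offset[1]
    return math.degrees(math.atan2(y, x)) % 360.0

# Usage: readings centred on (20, 2) instead of (0, 0).
samples = [(30.0, 2.0), (20.0, 12.0), (10.0, 2.0), (20.0, -8.0)]
offset = hard_iron_offset(samples)
angle = heading_degrees(20.0, 12.0, offset)
```

Whether this angle maps directly to a compass bearing (clockwise from north) depends on the axis directions, so a sign flip or a constant shift may still be needed.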
