🤪 TensorFlowTTS provides real-time state-of-the-art speech synthesis architectures such as Tacotron-2, Melgan, Multiband-Melgan, FastSpeech, FastSpeech2 based-on TensorFlow 2. With Tensorflow 2, we can speed-up training/inference progress, optimizer further by using fake-quantize aware and pruning, make TTS models can be run faster than real-time and be able to deploy on mobile devices or embedded systems.

- 2021/08/18 (NEW!) Integrated to Huggingface Spaces with Gradio. See Gradio Web Demo.

- 2021/08/12 (NEW!) Support French TTS (Tacotron2, Multiband MelGAN). Pls see the colab. Many Thanks Samuel Delalez

- 2021/06/01 Integrated with Huggingface Hub. See the PR. Thanks patrickvonplaten and osanseviero

- 2021/03/18 Support IOS for FastSpeech2 and MB MelGAN. Thanks kewlbear. See here

- 2021/01/18 Support TFLite C++ inference. Thanks luan78zaoha. See here

- 2020/12/02 Support German TTS with Thorsten dataset. See the Colab. Thanks thorstenMueller and monatis

- 2020/11/24 Add HiFi-GAN vocoder. See here

- 2020/11/19 Add Multi-GPU gradient accumulator. See here

- 2020/08/23 Add Parallel WaveGAN tensorflow implementation. See here

- 2020/08/20 Add C++ inference code. Thank @ZDisket. See here

- 2020/08/18 Update new base processor. Add AutoProcessor and pretrained processor json file

- 2020/08/14 Support Chinese TTS. Pls see the colab. Thank @azraelkuan

- 2020/08/05 Support Korean TTS. Pls see the colab. Thank @crux153

- 2020/07/17 Support MultiGPU for all Trainer

- 2020/07/05 Support Convert Tacotron-2, FastSpeech to Tflite. Pls see the colab. Thank @jaeyoo from the TFlite team for his support

- 2020/06/20 FastSpeech2 implementation with Tensorflow is supported.

- 2020/06/07 Multi-band MelGAN (MB MelGAN) implementation with Tensorflow is supported

- High performance on Speech Synthesis.

- Be able to fine-tune on other languages.

- Fast, Scalable, and Reliable.

- Suitable for deployment.

- Easy to implement a new model, based-on abstract class.

- Mixed precision to speed-up training if possible.

- Support Single/Multi GPU gradient Accumulate.

- Support both Single/Multi GPU in base trainer class.

- TFlite conversion for all supported models.

- Android example.

- Support many languages (currently, we support Chinese, Korean, English, French and German)

- Support C++ inference.

- Support Convert weight for some models from PyTorch to TensorFlow to accelerate speed.

This repository is tested on Ubuntu 18.04 with:

- Python 3.7+

- Cuda 10.1

- CuDNN 7.6.5

- Tensorflow 2.2/2.3/2.4/2.5/2.6

- Tensorflow Addons >= 0.10.0

Different Tensorflow version should be working but not tested yet. This repo will try to work with the latest stable TensorFlow version. We recommend you install TensorFlow 2.6.0 to training in case you want to use MultiGPU.

$ pip install TensorFlowTTSExamples are included in the repository but are not shipped with the framework. Therefore, to run the latest version of examples, you need to install the source below.

$ git clone https://github.com/TensorSpeech/TensorFlowTTS.git

$ cd TensorFlowTTS

$ pip install .If you want to upgrade the repository and its dependencies:

$ git pull

$ pip install --upgrade .TensorFlowTTS currently provides the following architectures:

- MelGAN released with the paper MelGAN: Generative Adversarial Networks for Conditional Waveform Synthesis by Kundan Kumar, Rithesh Kumar, Thibault de Boissiere, Lucas Gestin, Wei Zhen Teoh, Jose Sotelo, Alexandre de Brebisson, Yoshua Bengio, Aaron Courville.

- Tacotron-2 released with the paper Natural TTS Synthesis by Conditioning WaveNet on Mel Spectrogram Predictions by Jonathan Shen, Ruoming Pang, Ron J. Weiss, Mike Schuster, Navdeep Jaitly, Zongheng Yang, Zhifeng Chen, Yu Zhang, Yuxuan Wang, RJ Skerry-Ryan, Rif A. Saurous, Yannis Agiomyrgiannakis, Yonghui Wu.

- FastSpeech released with the paper FastSpeech: Fast, Robust, and Controllable Text to Speech by Yi Ren, Yangjun Ruan, Xu Tan, Tao Qin, Sheng Zhao, Zhou Zhao, Tie-Yan Liu.

- Multi-band MelGAN released with the paper Multi-band MelGAN: Faster Waveform Generation for High-Quality Text-to-Speech by Geng Yang, Shan Yang, Kai Liu, Peng Fang, Wei Chen, Lei Xie.

- FastSpeech2 released with the paper FastSpeech 2: Fast and High-Quality End-to-End Text to Speech by Yi Ren, Chenxu Hu, Xu Tan, Tao Qin, Sheng Zhao, Zhou Zhao, Tie-Yan Liu.

- Parallel WaveGAN released with the paper Parallel WaveGAN: A fast waveform generation model based on generative adversarial networks with multi-resolution spectrogram by Ryuichi Yamamoto, Eunwoo Song, Jae-Min Kim.

- HiFi-GAN released with the paper HiFi-GAN: Generative Adversarial Networks for Efficient and High Fidelity Speech Synthesis by Jungil Kong, Jaehyeon Kim, Jaekyoung Bae.

We are also implementing some techniques to improve quality and convergence speed from the following papers:

- Guided Attention Loss released with the paper Efficiently Trainable Text-to-Speech System Based on Deep Convolutional Networks with Guided Attention by Hideyuki Tachibana, Katsuya Uenoyama, Shunsuke Aihara.

Here in an audio samples on valid set. tacotron-2, fastspeech, melgan, melgan.stft, fastspeech2, multiband_melgan

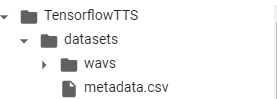

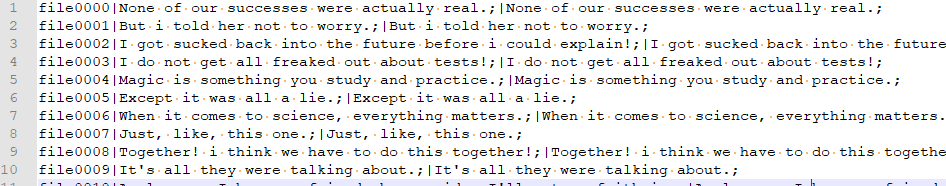

Prepare a dataset in the following format:

|- [NAME_DATASET]/

| |- metadata.csv

| |- wavs/

| |- file1.wav

| |- ...

Where metadata.csv has the following format: id|transcription. This is a ljspeech-like format; you can ignore preprocessing steps if you have other format datasets.

Note that NAME_DATASET should be [ljspeech/kss/baker/libritts/synpaflex] for example.

The preprocessing has two steps:

- Preprocess audio features

- Convert characters to IDs

- Compute mel spectrograms

- Normalize mel spectrograms to [-1, 1] range

- Split the dataset into train and validation

- Compute the mean and standard deviation of multiple features from the training split

- Standardize mel spectrogram based on computed statistics

To reproduce the steps above:

tensorflow-tts-preprocess --rootdir ./[ljspeech/kss/baker/libritts/thorsten/synpaflex] --outdir ./dump_[ljspeech/kss/baker/libritts/thorsten/synpaflex] --config preprocess/[ljspeech/kss/baker/thorsten/synpaflex]_preprocess.yaml --dataset [ljspeech/kss/baker/libritts/thorsten/synpaflex]

tensorflow-tts-normalize --rootdir ./dump_[ljspeech/kss/baker/libritts/thorsten/synpaflex] --outdir ./dump_[ljspeech/kss/baker/libritts/thorsten/synpaflex] --config preprocess/[ljspeech/kss/baker/libritts/thorsten/synpaflex]_preprocess.yaml --dataset [ljspeech/kss/baker/libritts/thorsten/synpaflex]

Right now we only support ljspeech, kss, baker, libritts, thorsten and

synpaflex for dataset argument. In the future, we intend to support more datasets.

Note: To run libritts preprocessing, please first read the instruction in examples/fastspeech2_libritts. We need to reformat it first before run preprocessing.

Note: To run synpaflex preprocessing, please first run the notebook notebooks/prepare_synpaflex.ipynb. We need to reformat it first before run preprocessing.

After preprocessing, the structure of the project folder should be:

|- [NAME_DATASET]/

| |- metadata.csv

| |- wav/

| |- file1.wav

| |- ...

|- dump_[ljspeech/kss/baker/libritts/thorsten]/

| |- train/

| |- ids/

| |- LJ001-0001-ids.npy

| |- ...

| |- raw-feats/

| |- LJ001-0001-raw-feats.npy

| |- ...

| |- raw-f0/

| |- LJ001-0001-raw-f0.npy

| |- ...

| |- raw-energies/

| |- LJ001-0001-raw-energy.npy

| |- ...

| |- norm-feats/

| |- LJ001-0001-norm-feats.npy

| |- ...

| |- wavs/

| |- LJ001-0001-wave.npy

| |- ...

| |- valid/

| |- ids/

| |- LJ001-0009-ids.npy

| |- ...

| |- raw-feats/

| |- LJ001-0009-raw-feats.npy

| |- ...

| |- raw-f0/

| |- LJ001-0001-raw-f0.npy

| |- ...

| |- raw-energies/

| |- LJ001-0001-raw-energy.npy

| |- ...

| |- norm-feats/

| |- LJ001-0009-norm-feats.npy

| |- ...

| |- wavs/

| |- LJ001-0009-wave.npy

| |- ...

| |- stats.npy

| |- stats_f0.npy

| |- stats_energy.npy

| |- train_utt_ids.npy

| |- valid_utt_ids.npy

|- examples/

| |- melgan/

| |- fastspeech/

| |- tacotron2/

| ...

stats.npycontains the mean and std from the training split mel spectrogramsstats_energy.npycontains the mean and std of energy values from the training splitstats_f0.npycontains the mean and std of F0 values in the training splittrain_utt_ids.npy/valid_utt_ids.npycontains training and validation utterances IDs respectively

We use suffix (ids, raw-feats, raw-energy, raw-f0, norm-feats, and wave) for each input type.

IMPORTANT NOTES:

- This preprocessing step is based on ESPnet so you can combine all models here with other models from ESPnet repository.

- Regardless of how your dataset is formatted, the final structure of the

dumpfolder SHOULD follow the above structure to be able to use the training script, or you can modify it by yourself 😄.

To know how to train model from scratch or fine-tune with other datasets/languages, please see detail at example directory.

- For Tacotron-2 tutorial, pls see examples/tacotron2

- For FastSpeech tutorial, pls see examples/fastspeech

- For FastSpeech2 tutorial, pls see examples/fastspeech2

- For FastSpeech2 + MFA tutorial, pls see examples/fastspeech2_libritts

- For MelGAN tutorial, pls see examples/melgan

- For MelGAN + STFT Loss tutorial, pls see examples/melgan.stft

- For Multiband-MelGAN tutorial, pls see examples/multiband_melgan

- For Parallel WaveGAN tutorial, pls see examples/parallel_wavegan

- For Multiband-MelGAN Generator + HiFi-GAN tutorial, pls see examples/multiband_melgan_hf

- For HiFi-GAN tutorial, pls see examples/hifigan

A detail implementation of abstract dataset class from tensorflow_tts/dataset/abstract_dataset. There are some functions you need overide and understand:

- get_args: This function return argumentation for generator class, normally is utt_ids.

- generator: This function have an inputs from get_args function and return a inputs for models. Note that we return a dictionary for all generator functions with the keys that exactly match with the model's parameters because base_trainer will use model(**batch) to do forward step.

- get_output_dtypes: This function need return dtypes for each element from generator function.

- get_len_dataset: Return len of datasets, normaly is len(utt_ids).

IMPORTANT NOTES:

- A pipeline of creating dataset should be: cache -> shuffle -> map_fn -> get_batch -> prefetch.

- If you do shuffle before cache, the dataset won't shuffle when it re-iterate over datasets.

- You should apply map_fn to make each element return from generator function have the same length before getting batch and feed it into a model.

Some examples to use this abstract_dataset are tacotron_dataset.py, fastspeech_dataset.py, melgan_dataset.py, fastspeech2_dataset.py

A detail implementation of base_trainer from tensorflow_tts/trainer/base_trainer.py. It include Seq2SeqBasedTrainer and GanBasedTrainer inherit from BasedTrainer. All trainer support both single/multi GPU. There a some functions you MUST overide when implement new_trainer:

- compile: This function aim to define a models, and losses.

- generate_and_save_intermediate_result: This function will save intermediate result such as: plot alignment, save audio generated, plot mel-spectrogram ...

- compute_per_example_losses: This function will compute per_example_loss for model, note that all element of the loss MUST has shape [batch_size].

All models on this repo are trained based-on GanBasedTrainer (see train_melgan.py, train_melgan_stft.py, train_multiband_melgan.py) and Seq2SeqBasedTrainer (see train_tacotron2.py, train_fastspeech.py).

You can know how to inference each model at notebooks or see a colab (for English), colab (for Korean), colab (for Chinese), colab (for French), colab (for German). Here is an example code for end2end inference with fastspeech2 and multi-band melgan. We uploaded all our pretrained in HuggingFace Hub.

import numpy as np

import soundfile as sf

import yaml

import tensorflow as tf

from tensorflow_tts.inference import TFAutoModel

from tensorflow_tts.inference import AutoProcessor

# initialize fastspeech2 model.

fastspeech2 = TFAutoModel.from_pretrained("tensorspeech/tts-fastspeech2-ljspeech-en")

# initialize mb_melgan model

mb_melgan = TFAutoModel.from_pretrained("tensorspeech/tts-mb_melgan-ljspeech-en")

# inference

processor = AutoProcessor.from_pretrained("tensorspeech/tts-fastspeech2-ljspeech-en")

input_ids = processor.text_to_sequence("Recent research at Harvard has shown meditating for as little as 8 weeks, can actually increase the grey matter in the parts of the brain responsible for emotional regulation, and learning.")

# fastspeech inference

mel_before, mel_after, duration_outputs, _, _ = fastspeech2.inference(

input_ids=tf.expand_dims(tf.convert_to_tensor(input_ids, dtype=tf.int32), 0),

speaker_ids=tf.convert_to_tensor([0], dtype=tf.int32),

speed_ratios=tf.convert_to_tensor([1.0], dtype=tf.float32),

f0_ratios =tf.convert_to_tensor([1.0], dtype=tf.float32),

energy_ratios =tf.convert_to_tensor([1.0], dtype=tf.float32),

)

# melgan inference

audio_before = mb_melgan.inference(mel_before)[0, :, 0]

audio_after = mb_melgan.inference(mel_after)[0, :, 0]

# save to file

sf.write('./audio_before.wav', audio_before, 22050, "PCM_16")

sf.write('./audio_after.wav', audio_after, 22050, "PCM_16")- Minh Nguyen Quan Anh: [email protected]

- erogol: [email protected]

- Kuan Chen: [email protected]

- Dawid Kobus: [email protected]

- Takuya Ebata: [email protected]

- Trinh Le Quang: [email protected]

- Yunchao He: [email protected]

- Alejandro Miguel Velasquez: [email protected]

All models here are licensed under the Apache 2.0

We want to thank Tomoki Hayashi, who discussed with us much about Melgan, Multi-band melgan, Fastspeech, and Tacotron. This framework based-on his great open-source ParallelWaveGan project.