First, great project. Thanks a ton for maintaining it. I'm learning a lot going through the code.

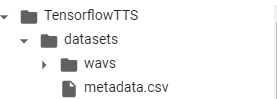

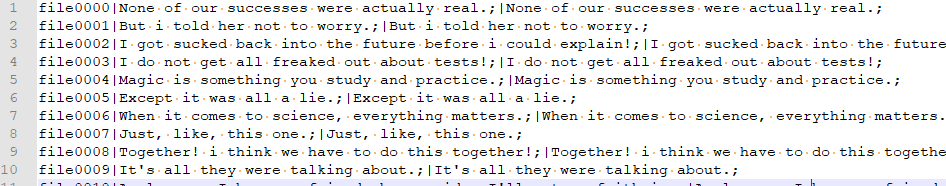

I followed the Tacotron2 tutorial: I downloaded the dataset, ran the preprocessing steps, and started training. Training failed before the first step completed, with this error:

tensorflow.python.framework.errors_impl.InvalidArgumentError: [_Derived_] Trying to access element 156 in a list with 156 elements.

[[{{node while_21/body/_1/TensorArrayV2Read_1/TensorListGetItem}}]]

[[tacotron2/StatefulPartitionedCall/encoder/bilstm/forward_lstm/StatefulPartitionedCall]] [Op:__inference__one_step_tacotron2_406287]

Function call stack:

_one_step_tacotron2 -> _one_step_tacotron2 -> _one_step_tacotron2

Is this an error you've seen before? Since I'm just following the tutorial, I'd expect anyone to hit it unless I've made a mistake somewhere. Any pointers on how to debug this? I don't mind trying to solve it myself, but I'm fairly new to TensorFlow 2.
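The message reads like an off-by-one to me: a list with 156 elements has valid indices 0 to 155, so something in the encoder's loop seems to step one past the end. One thing I could check myself is whether any declared character length in a batch is larger than the padded width of the character IDs. Below is a minimal sketch of that check using plain Python lists. The function name `find_length_mismatches` and the toy batch are my own illustration, not part of TensorFlowTTS, and I'm only assuming that a length mismatch could produce this error.

```python
from typing import List, Sequence, Tuple


def find_length_mismatches(
    padded_ids: Sequence[Sequence[int]], lengths: Sequence[int]
) -> List[Tuple[int, int, int]]:
    """Return (row, declared_length, padded_width) for every row whose
    declared length exceeds the number of padded time steps."""
    mismatches = []
    for row, (ids, length) in enumerate(zip(padded_ids, lengths)):
        width = len(ids)
        # A loop that runs `length` steps reads indices 0..length-1,
        # so length > width means reading past the end of the row.
        if length > width:
            mismatches.append((row, length, width))
    return mismatches


# Toy batch (hypothetical data): both rows are padded to 156 steps.
batch = [[1] * 156, [1] * 150 + [0] * 6]
declared_lengths = [157, 150]  # row 0 claims one more step than exists

print(find_length_mismatches(batch, declared_lengths))  # [(0, 157, 156)]
```

If a check like this came back empty on real batches, the lengths would be consistent with the padded inputs and I'd look elsewhere.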

$ CUDA_VISIBLE_DEVICES=0 python examples/tacotron2/train_tacotron2.py \
  --train-dir /media/usb0/tts/ljspeech_dump/train/ \
  --dev-dir /media/usb0/tts/ljspeech_dump/valid/ \
  --outdir ./examples/tacotron2/exp/train.tacotron2.v1/ \
  --config ./examples/tacotron2/conf/tacotron2.v1.yaml \
  --use-norm 1 \
  --mixed_precision 0 \
  --resume ""

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: hop_size = 256

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: format = npy

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: tacotron2_params = {'embedding_hidden_size': 512, 'initializer_range': 0.02, 'embedding_dropout_prob': 0.1, 'n_speakers': 1, 'n_conv_encoder': 5, 'encoder_conv_filters': 512, 'encoder_conv_kernel_sizes': 5, 'encoder_conv_activation': 'relu', 'encoder_conv_dropout_rate': 0.5, 'encoder_lstm_units': 256, 'n_prenet_layers': 2, 'prenet_units': 256, 'prenet_activation': 'relu', 'prenet_dropout_rate': 0.5, 'n_lstm_decoder': 1, 'reduction_factor': 1, 'decoder_lstm_units': 1024, 'attention_dim': 128, 'attention_filters': 32, 'attention_kernel': 31, 'n_mels': 80, 'n_conv_postnet': 5, 'postnet_conv_filters': 512, 'postnet_conv_kernel_sizes': 5, 'postnet_dropout_rate': 0.1, 'attention_type': 'lsa'}

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: batch_size = 32

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: remove_short_samples = True

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: allow_cache = True

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: mel_length_threshold = 32

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: is_shuffle = True

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: use_fixed_shapes = True

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: optimizer_params = {'initial_learning_rate': 0.001, 'end_learning_rate': 1e-05, 'decay_steps': 150000, 'warmup_proportion': 0.02, 'weight_decay': 0.001}

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: train_max_steps = 200000

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: save_interval_steps = 5000

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: eval_interval_steps = 500

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: log_interval_steps = 100

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: start_schedule_teacher_forcing = 200001

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: start_ratio_value = 0.5

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: schedule_decay_steps = 50000

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: end_ratio_value = 0.0

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: num_save_intermediate_results = 1

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: train_dir = /media/usb0/tts/ljspeech_dump/train/

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: dev_dir = /media/usb0/tts/ljspeech_dump/valid/

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: use_norm = True

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: outdir = ./examples/tacotron2/exp/train.tacotron2.v1/

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: config = ./examples/tacotron2/conf/tacotron2.v1.yaml

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: resume =

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: verbose = 1

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: mixed_precision = False

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: version = 0.6.1

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: max_mel_length = 871

2020-06-27 17:02:49,796 (train_tacotron2:440) INFO: max_char_length = 188

2020-06-27 17:02:49.799192: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcuda.so.1

2020-06-27 17:02:49.827610: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:981] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2020-06-27 17:02:49.827904: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1561] Found device 0 with properties:

pciBusID: 0000:01:00.0 name: GeForce RTX 2080 computeCapability: 7.5

coreClock: 1.815GHz coreCount: 46 deviceMemorySize: 7.79GiB deviceMemoryBandwidth: 417.23GiB/s

2020-06-27 17:02:49.828043: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcudart.so.10.1

2020-06-27 17:02:49.828913: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcublas.so.10

2020-06-27 17:02:49.829804: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcufft.so.10

2020-06-27 17:02:49.829957: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcurand.so.10

2020-06-27 17:02:49.830830: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcusolver.so.10

2020-06-27 17:02:49.831252: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcusparse.so.10

2020-06-27 17:02:49.831346: W tensorflow/stream_executor/platform/default/dso_loader.cc:55] Could not load dynamic library 'libcudnn.so.7'; dlerror: libcudnn.so.7: cannot open shared object file: No such file or directory

2020-06-27 17:02:49.831352: W tensorflow/core/common_runtime/gpu/gpu_device.cc:1598] Cannot dlopen some GPU libraries. Please make sure the missing libraries mentioned above are installed properly if you would like to use GPU. Follow the guide at https://www.tensorflow.org/install/gpu for how to download and setup the required libraries for your platform.

Skipping registering GPU devices...

2020-06-27 17:02:49.831534: I tensorflow/core/platform/cpu_feature_guard.cc:143] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA

2020-06-27 17:02:49.835216: I tensorflow/core/platform/profile_utils/cpu_utils.cc:102] CPU Frequency: 4200000000 Hz

2020-06-27 17:02:49.835388: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x7f5318000b60 initialized for platform Host (this does not guarantee that XLA will be used). Devices:

2020-06-27 17:02:49.835400: I tensorflow/compiler/xla/service/service.cc:176] StreamExecutor device (0): Host, Default Version

2020-06-27 17:02:49.836162: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1102] Device interconnect StreamExecutor with strength 1 edge matrix:

2020-06-27 17:02:49.836171: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1108]

Model: "tacotron2"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

encoder (TFTacotronEncoder) multiple 8218624

_________________________________________________________________

decoder_cell (TFTacotronDeco multiple 18246402

_________________________________________________________________

post_net (TFTacotronPostnet) multiple 5460480

_________________________________________________________________

residual_projection (Dense) multiple 41040

=================================================================

Total params: 31,966,546

Trainable params: 31,956,306

Non-trainable params: 10,240

_________________________________________________________________

[train]: 0%| | 0/200000 [00:00<?, ?it/s]2020-06-27 17:03:08.327047: I tensorflow/core/kernels/data/shuffle_dataset_op.cc:184] Filling up shuffle buffer (this may take a while): 4125 of 12445

2020-06-27 17:03:18.326677: I tensorflow/core/kernels/data/shuffle_dataset_op.cc:184] Filling up shuffle buffer (this may take a while): 8227 of 12445

2020-06-27 17:03:28.325737: I tensorflow/core/kernels/data/shuffle_dataset_op.cc:184] Filling up shuffle buffer (this may take a while): 12285 of 12445

2020-06-27 17:03:28.724872: I tensorflow/core/kernels/data/shuffle_dataset_op.cc:233] Shuffle buffer filled.

Traceback (most recent call last):

File "examples/tacotron2/train_tacotron2.py", line 513, in <module>

main()

File "examples/tacotron2/train_tacotron2.py", line 503, in main

trainer.fit(train_dataset,

File "examples/tacotron2/train_tacotron2.py", line 343, in fit

self.run()

File "/home/caleb/repos/TensorflowTTS/tensorflow_tts/trainers/base_trainer.py", line 72, in run

self._train_epoch()

File "/home/caleb/repos/TensorflowTTS/tensorflow_tts/trainers/base_trainer.py", line 94, in _train_epoch

self._train_step(batch)

File "examples/tacotron2/train_tacotron2.py", line 116, in _train_step

self._one_step_tacotron2(charactor, char_length, mel, mel_length, guided_attention)

File "/home/caleb/repos/TensorflowTTS/venv/lib/python3.8/site-packages/tensorflow/python/eager/def_function.py", line 580, in __call__

result = self._call(*args, **kwds)

File "/home/caleb/repos/TensorflowTTS/venv/lib/python3.8/site-packages/tensorflow/python/eager/def_function.py", line 644, in _call

return self._stateless_fn(*args, **kwds)

File "/home/caleb/repos/TensorflowTTS/venv/lib/python3.8/site-packages/tensorflow/python/eager/function.py", line 2420, in __call__

return graph_function._filtered_call(args, kwargs) # pylint: disable=protected-access

File "/home/caleb/repos/TensorflowTTS/venv/lib/python3.8/site-packages/tensorflow/python/eager/function.py", line 1661, in _filtered_call

return self._call_flat(

File "/home/caleb/repos/TensorflowTTS/venv/lib/python3.8/site-packages/tensorflow/python/eager/function.py", line 1745, in _call_flat

return self._build_call_outputs(self._inference_function.call(

File "/home/caleb/repos/TensorflowTTS/venv/lib/python3.8/site-packages/tensorflow/python/eager/function.py", line 593, in call

outputs = execute.execute(

File "/home/caleb/repos/TensorflowTTS/venv/lib/python3.8/site-packages/tensorflow/python/eager/execute.py", line 59, in quick_execute

tensors = pywrap_tfe.TFE_Py_Execute(ctx._handle, device_name, op_name,

tensorflow.python.framework.errors_impl.InvalidArgumentError: [_Derived_] Trying to access element 156 in a list with 156 elements.

[[{{node while_21/body/_1/TensorArrayV2Read_1/TensorListGetItem}}]]

[[tacotron2/StatefulPartitionedCall/encoder/bilstm/forward_lstm/StatefulPartitionedCall]] [Op:__inference__one_step_tacotron2_406287]

Function call stack:

_one_step_tacotron2 -> _one_step_tacotron2 -> _one_step_tacotron2

[train]: 0%| | 0/200000 [00:36<?, ?it/s]
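
Rereading the log above, I notice that `libcudnn.so.7` failed to load and TensorFlow printed "Skipping registering GPU devices...", so this run was presumably on the CPU. I don't know whether that is related to the error. To avoid missing warnings like that in a long log, here is a minimal sketch that pulls the non-INFO TensorFlow lines and any missing library names out of a saved log. The function name `scan_tf_log` and the regular expressions are my own and are only based on the line format visible in this log.

```python
import re
from typing import Iterable, List, Tuple

# TensorFlow's C++ log lines in this report look like:
#   2020-06-27 17:02:49.831346: W tensorflow/.../dso_loader.cc:55] message
TF_LOG_LINE = re.compile(
    r"^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d+: (?P<level>[IWEF]) "
    r"(?P<source>\S+)\] (?P<message>.*)$"
)
MISSING_LIB = re.compile(r"Could not load dynamic library '([^']+)'")


def scan_tf_log(lines: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Return (non-INFO messages, names of libraries that failed to load)."""
    problems: List[str] = []
    missing: List[str] = []
    for line in lines:
        match = TF_LOG_LINE.match(line.strip())
        # Skip lines in other formats and plain INFO lines.
        if not match or match.group("level") == "I":
            continue
        message = match.group("message")
        problems.append(message)
        missing.extend(MISSING_LIB.findall(message))
    return problems, missing


# Two lines copied from the log in this report.
sample = [
    "2020-06-27 17:02:49.799192: I tensorflow/stream_executor/platform/default/"
    "dso_loader.cc:44] Successfully opened dynamic library libcuda.so.1",
    "2020-06-27 17:02:49.831346: W tensorflow/stream_executor/platform/default/"
    "dso_loader.cc:55] Could not load dynamic library 'libcudnn.so.7'; dlerror: "
    "libcudnn.so.7: cannot open shared object file: No such file or directory",
]
problems, missing = scan_tf_log(sample)
print(missing)  # ['libcudnn.so.7']
```

On a full log file this could be called as `scan_tf_log(open(path))`, where `path` is wherever the training output was saved.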