A C++ & Java library for Image/Camera/Video filters. PRs are welcome.

See the image deform demo.

    allprojects {
        repositories {
            maven {
                // Use github hosted maven repo for now.
                // Will be uploaded to maven central later.
                url 'https://maven.wysaid.org/'
            }
        }
    }

    //Choose only one of them
    dependencies {
        //All arch: armeabi-v7a, arm64-v8a, x86, x86_64 with video module (ffmpeg bundled)
        implementation 'org.wysaid:gpuimage-plus:3.0.0'

        //All arch: armeabi-v7a, arm64-v8a, x86, x86_64 without video module (no ffmpeg)
        implementation 'org.wysaid:gpuimage-plus:3.0.0-min'
    }

The jcenter release is out of date; please try the source for now. Latest prebuilt versions will be provided soon.

To compile other versions of ffmpeg, see: https://github.com/wysaid/FFmpeg-Android.git

- Options to know in `local.properties`:
  - `usingCMakeCompile=true`: Compile the native library with CMake if set to true. (Defaults to false, so you can use the prebuilt libs.)
  - `usingCMakeCompileDebug=true`: Compile the native library in Debug mode if set to true. (Defaults to false.)
  - `disableVideoModule=true`: Disable the video recording feature (useful for image-only scenarios). The whole jni module will be very small. (Defaults to false.)

- Build with `Android Studio` and CMake: (Recommended)
  - Put `usingCMakeCompile=true` in your `local.properties`.
  - Open the repo with the latest version of `Android Studio`.
  - Wait for the initialization to finish. (NDK/cmake install)
  - Done.

- Using `Visual Studio Code`: (Requires WSL (recommended), MinGW, or Cygwin on Windows.)
  - Set the environment variable `ANDROID_HOME` to your Android SDK installation directory.
  - Open the repo with `Visual Studio Code`.
  - Press `⌘ + shift + B` (Mac) or `ctrl + shift + B` (Win/Linux), and choose the option `Enable CMake And Build Project With CMake`.
  - Done.

- Build with preset tasks: (Requires WSL (recommended), MinGW, or Cygwin on Windows.)

      # define the environment variable "ANDROID_HOME"
      # If using Windows, define ANDROID_HOME in Windows Environment Settings by yourself.
      export ANDROID_HOME=/path/to/android/sdk

      # Setup Project
      bash tasks.sh --setup-project

      # Compile with CMake Debug
      bash tasks.sh --debug --enable-cmake --build

      # Compile with CMake Release
      bash tasks.sh --release --enable-cmake --build

      # Start Demo By Command
      bash tasks.sh --run

- Build the `JNI` part with ndk-build: (Not recommended)

      export NDK=path/of/your/ndk
      cd folder/of/jni  # android-gpuimage-plus/library/src/main/jni

      #This will make all arch: armeabi, armeabi-v7a arm64-v8a, x86, mips
      ./buildJNI
      #Or use "sh buildJNI"

      #Try this if you failed to run the shell above
      export CGE_USE_VIDEO_MODULE=1
      $NDK/ndk-build

      #If you don't want anything except the image filter,
      #Do as below to build with only cge module
      #No ffmpeg, opencv or faceTracker.
      #And remove the loading part of ffmpeg&facetracker
      $NDK/ndk-build

      #For Windows users, you should include the `.cmd` extension to `ndk-build` like this:
      cd <your\path\to\this\repo>\library\src\main\jni
      <your\path\to\ndk>\ndk-build.cmd

      #Also remember to comment out these lines in NativeLibraryLoader
      //System.loadLibrary("ffmpeg");
      //CGEFFmpegNativeLibrary.avRegisterAll();

You can find precompiled libs here: android-gpuimage-plus-libs (The precompiled '.so' files are generated with NDK-r23b)

Note that the generated file "libFaceTracker.so" is not required. Just remove it if you don't need any of its features.
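The three `local.properties` options listed above are plain `key=value` flags that default to false. As a rough illustration of that defaulting behaviour, here is a minimal Java sketch that parses such a file with `java.util.Properties`. The class `LocalBuildOptions` and its methods are hypothetical names invented for this example; the project's build scripts may read the file differently.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical helper for illustration only; not part of the library or its build scripts.
final class LocalBuildOptions {
    final boolean usingCMakeCompile;
    final boolean usingCMakeCompileDebug;
    final boolean disableVideoModule;

    private LocalBuildOptions(Properties props) {
        // Every flag falls back to false when its key is missing.
        usingCMakeCompile = flag(props, "usingCMakeCompile");
        usingCMakeCompileDebug = flag(props, "usingCMakeCompileDebug");
        disableVideoModule = flag(props, "disableVideoModule");
    }

    private static boolean flag(Properties props, String key) {
        // Properties.load keeps trailing whitespace in values, so trim before parsing.
        return Boolean.parseBoolean(props.getProperty(key, "false").trim());
    }

    // Parses the text of a local.properties file.
    static LocalBuildOptions parse(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new LocalBuildOptions(props);
    }

    public static void main(String[] args) {
        LocalBuildOptions options = parse("usingCMakeCompile=true\ndisableVideoModule=true\n");
        System.out.println("CMake build:   " + options.usingCMakeCompile);
        System.out.println("CMake debug:   " + options.usingCMakeCompileDebug);
        System.out.println("Video disabled: " + options.disableVideoModule);
    }
}
```

Any value other than `true` (case-insensitive) is treated as false in this sketch, which is an assumption about how strictly the flags are interpreted.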

- iOS version: https://github.com/wysaid/ios-gpuimage-plus

Sample Code for doing a filter with Bitmap

    //Simply apply a filter to a Bitmap.
    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);

        Bitmap srcImage = ...;

        //HSL Adjust (hue: 0.02, saturation: -0.31, luminance: -0.17)
        //Please see the manual for more details.
        String ruleString = "@adjust hsl 0.02 -0.31 -0.17";

        Bitmap dstImage = CGENativeLibrary.filterImage_MultipleEffects(srcImage, ruleString, 1.0f);

        //Then the dstImage is applied with the filter.

        //Save the result image to /sdcard/libCGE/rec_???.jpg.
        ImageUtil.saveBitmap(dstImage);
    }

Your filter must inherit from CGEImageFilterInterfaceAbstract or one of its child classes. Most filters inherit from CGEImageFilterInterface because it has many useful functions.

    // A simple customized filter to do a color reversal.
    class MyCustomFilter : public CGE::CGEImageFilterInterface
    {
    public:

        bool init()
        {
            CGEConstString fragmentShaderString = CGE_SHADER_STRING_PRECISION_H
            (
            varying vec2 textureCoordinate;  //defined in 'g_vshDefaultWithoutTexCoord'
            uniform sampler2D inputImageTexture; // the same to above.

            void main()
            {
                vec4 src = texture2D(inputImageTexture, textureCoordinate);
                src.rgb = 1.0 - src.rgb;  //Simply reverse all channels.
                gl_FragColor = src;
            }
            );

            //m_program is defined in 'CGEImageFilterInterface'
            return m_program.initWithShaderStrings(g_vshDefaultWithoutTexCoord, fragmentShaderString);
        }

        //void render2Texture(CGE::CGEImageHandlerInterface* handler, GLuint srcTexture, GLuint vertexBufferID)
        //{
        //  //Your own render functions here.
        //  //Do not override this function to use the CGEImageFilterInterface's.
        //}
    };

Note: To add your own shader filter with C++, please see the demo for further details.
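When checking a custom filter like the color reversal above, it can help to have a CPU-side reference to compare output pixels against. The sketch below is one possible reference in plain Java, assuming pixels are packed as 8-bit ARGB integers (the layout `Bitmap.getPixels` uses for `ARGB_8888`). The class `InvertReference` is a hypothetical name for this example and is not part of the library. The shader's `1.0 - src.rgb` on normalized floats corresponds to `255 - c` per 8-bit channel, and alpha is left untouched, as in the shader.

```java
// Hypothetical CPU reference for the color-reversal shader; not part of the library.
final class InvertReference {
    private InvertReference() {}

    // Inverts the R, G and B channels of one packed ARGB pixel and keeps alpha as-is.
    static int invertArgb(int argb) {
        int alpha = argb & 0xFF000000;
        // For an 8-bit channel c, 255 - c equals the bitwise complement of c.
        int invertedRgb = ~argb & 0x00FFFFFF;
        return alpha | invertedRgb;
    }

    // Returns a new array with every pixel inverted; the input is not modified.
    static int[] invertAll(int[] pixels) {
        int[] result = new int[pixels.length];
        for (int i = 0; i < pixels.length; i++) {
            result[i] = invertArgb(pixels[i]);
        }
        return result;
    }

    public static void main(String[] args) {
        int pixel = 0xFF102030;
        System.out.printf("%08X -> %08X%n", pixel, invertArgb(pixel));
    }
}
```

GPU results may differ from this reference by a small rounding error per channel, so a comparison with a tolerance of one or two levels is a reasonable assumption rather than exact equality.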

In C++, you can use a CGEImageHandler to do that:

    //Assume the gl context already exists:
    //JNIEnv* env = ...;
    //jobject bitmap = ...;
    CGEImageHandlerAndroid handler;
    CustomFilterType* customFilter = new CustomFilterType();

    //You should handle the return value (false is returned when failed.)
    customFilter->init();
    handler.initWithBitmap(env, bitmap);

    //The customFilter will be released when the handler's destructor is called.
    //So you don't have to call 'delete customFilter' if you add it into the handler.
    handler.addImageFilter(customFilter);

    handler.processingFilters(); //Run the filters.

    jobject resultBitmap = handler.getResultBitmap(env);

If no gl context exists, the class CGESharedGLContext may be helpful.

In Java, you can simply follow the sample:

See: CGENativeLibrary.cgeFilterImageWithCustomFilter

Or do it with a CGEImageHandler.

Doc: https://github.com/wysaid/android-gpuimage-plus/wiki

En: https://github.com/wysaid/android-gpuimage-plus/wiki/Parsing-String-Rule-(EN)

Ch: https://github.com/wysaid/android-gpuimage-plus/wiki/Parsing-String-Rule-(ZH)
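Rule strings such as the `@adjust hsl 0.02 -0.31 -0.17` string used in the Bitmap sample are plain text, so they can be assembled from numeric values at runtime. The helper below is a minimal sketch of that idea. `RuleStrings` and `adjustHsl` are hypothetical names for this example, the two-decimal precision is an assumption, and the helper does not validate value ranges; see the rule documents above for the accepted parameters.

```java
import java.util.Locale;

// Hypothetical helper for building a rule string; not part of the library.
final class RuleStrings {
    private RuleStrings() {}

    // Builds an "@adjust hsl" rule in the same shape as the README's Bitmap sample.
    static String adjustHsl(double hue, double saturation, double luminance) {
        // Locale.US keeps '.' as the decimal separator regardless of the device locale.
        return String.format(Locale.US, "@adjust hsl %.2f %.2f %.2f",
                hue, saturation, luminance);
    }

    public static void main(String[] args) {
        System.out.println(adjustHsl(0.02, -0.31, -0.17));
    }
}
```

The resulting string can then be passed wherever the sample passes `ruleString`, for example to `CGENativeLibrary.filterImage_MultipleEffects`.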

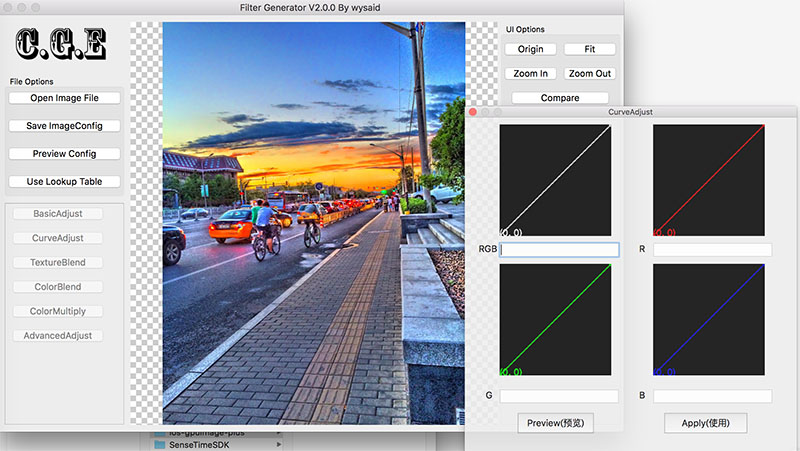

Some utils are available for creating filters: https://github.com/wysaid/cge-tools
